What is VMware vMotion? It is THE feature that “hooked” thousands (if not millions) of IT guys on the planet on using VMware for their IT infrastructure. It is kind of a holy grail of virtualization: a running VM moves to another host without interruption of service (or with just a few pings lost).

I still remember when I did my first vMotion setup, then opened a DOS window and ran a ping command to see how many packets would be lost during the vMotion operation.

In this post, we will learn not only what VMware vMotion is, but also look at the different flavors of vMotion (such as long-distance vMotion, that is, vMotion across country or across datacenters) and set up multi-NIC vMotion, which maximizes throughput and lowers the migration time. This is especially useful for VMs configured with a lot of vRAM.

The usual admin does not need to know in detail what's happening under the covers. For most situations, it is enough to know that a VM moves from host to host without losing its connection.

From a high-level perspective, vMotion first copies all of the VM's memory pages to the target VM over the vMotion network. The target VM is also called the shadow VM. This first copy operation is called PreCopy. Since the source VM continues to change its memory, the process starts again and copies any delta changes that occurred since the last PreCopy.

Once the copies are done and there are no more changes to copy, the source VM is suspended, the remaining memory delta is copied to the target VM, and the target VM is resumed. This last part happens within milliseconds. The main reason we have to wait for vMotion to finish is the network, and that's why multi-NIC vMotion is interesting.
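To make the PreCopy loop described above more concrete, here is a small toy model in Python. It is only a sketch under simplifying assumptions (a constant dirty rate, a constant link speed, and a made-up `stop_threshold_mb` at which the switchover happens); it is not how ESXi implements vMotion, and all names and numbers are hypothetical.

```python
def simulate_precopy(memory_mb, dirty_mb_per_s, link_mb_per_s,
                     stop_threshold_mb=16, max_rounds=30):
    """Toy model of iterative pre-copy (not the real ESXi algorithm).

    Each round copies the outstanding memory over the link. While that copy
    runs, the guest dirties more memory, which becomes the next round's delta.
    Returns (rounds, precopy_seconds, switchover_seconds).
    """
    if dirty_mb_per_s >= link_mb_per_s:
        raise ValueError("the delta never shrinks if memory is dirtied "
                         "faster than it can be copied")

    to_send = float(memory_mb)   # the first round sends all memory pages
    precopy_seconds = 0.0
    rounds = 0

    while to_send > stop_threshold_mb and rounds < max_rounds:
        seconds = to_send / link_mb_per_s
        precopy_seconds += seconds
        # Memory changed during this round is the delta for the next round.
        to_send = min(float(memory_mb), dirty_mb_per_s * seconds)
        rounds += 1

    # The small remaining delta is copied while the source VM is paused.
    switchover_seconds = to_send / link_mb_per_s
    return rounds, precopy_seconds, switchover_seconds


if __name__ == "__main__":
    # Hypothetical VM: 16 GB of memory, dirtying 50 MB/s.
    # Assumption: each vMotion NIC delivers roughly 1000 MB/s.
    for nics in (1, 2):
        rounds, precopy, switchover = simulate_precopy(16384, 50, 1000 * nics)
        print(f"{nics} NIC(s): {rounds} rounds, "
              f"{precopy:.2f} s pre-copy, {switchover * 1000:.2f} ms switchover")
```

In this model, doubling the link speed roughly halves the pre-copy time, which is the intuition behind multi-NIC vMotion.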

vMotion across country (Long-distance vMotion)

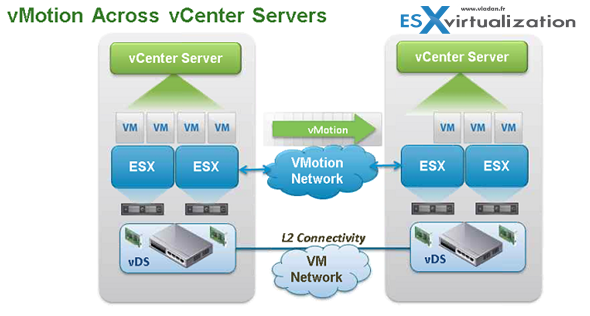

Long-distance vMotion has been available since vSphere 6.0. It requires a round-trip time (RTT) latency of 150 milliseconds or less between hosts, and your license must cover vMotion across long distances: the cross-vCenter and long-distance vMotion features require an Enterprise Plus license.

Long-distance vMotion lets you vMotion VMs from one data center to another (to a remote site or a cloud data center). The requirement is a link with an RTT of at most 150 ms (previously, at most 10 ms was required).

The vMotion process is able to keep the VM's historical data (events, alarms, performance counters, etc.) and also properties like DRS groups and HA settings, which are tied to vCenter. It means that the VM can change not only compute (a host) but also network, management, and storage, all at the same time. (Until now we could only change host and storage during the vMotion process; correct me if I’m wrong.)

Requirements:

- vCenter 6 (both ends)

- Single SSO domain (the same SSO domain is needed to use the UI). Through the API it’s possible to use different SSO domains.

- L2 connectivity for VM network

- vMotion Network

- 250 Mbps network bandwidth per vMotion operation
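The two numeric requirements from this post (an RTT of 150 ms or less, and 250 Mbps per vMotion operation) are easy to turn into a quick sanity check. The helper below is a hypothetical sketch: the function name and the idea of multiplying the bandwidth by the number of concurrent migrations are my assumptions, and you would have to feed it values you measured yourself.

```python
MAX_RTT_MS = 150            # RTT limit between hosts quoted in this post
MIN_MBPS_PER_VMOTION = 250  # bandwidth per vMotion operation quoted in this post


def long_distance_vmotion_issues(rtt_ms, bandwidth_mbps, concurrent_migrations=1):
    """Return a list of human-readable problems (empty if the link looks OK).

    rtt_ms and bandwidth_mbps are values you measured on the link between
    the two sites; this helper does not measure anything itself.
    """
    issues = []
    if rtt_ms > MAX_RTT_MS:
        issues.append(f"RTT is {rtt_ms} ms, but at most {MAX_RTT_MS} ms is supported")

    required_mbps = MIN_MBPS_PER_VMOTION * concurrent_migrations
    if bandwidth_mbps < required_mbps:
        issues.append(f"link has {bandwidth_mbps} Mbps, but {required_mbps} Mbps "
                      f"is needed for {concurrent_migrations} migration(s)")
    return issues


if __name__ == "__main__":
    # Hypothetical link: 90 ms RTT and 400 Mbps, with two migrations at once.
    for problem in long_distance_vmotion_issues(90, 400, concurrent_migrations=2):
        print("Problem:", problem)
```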

vMotion across vCenters:

- VM UUID is maintained across vCenter server instances

- Alarms, events, tasks, and history are retained

- HA/DRS settings including Affinity/anti-affinity rules, isolation responses, automation level, start-up priority

- VM resources (shares, reservations, limits)

- Maintain MAC address of Virtual NIC

- VM which leaves for another vCenter will keep its MAC address and this MAC address will not be reused in the source vCenter.

It’s possible to move VMs:

- from VSS to VSS

- from VSS to VDS

- from VDS to VDS

vMotion Across vCenters

It allows you to change compute, storage, network, and management. In a single operation you’re able to move a VM from vCenter 1, where it runs on a certain host, resides on some datastore, and belongs to some resource pool, to vCenter 2, where it resides on a different datastore, runs on a different host, and is part of a different resource pool.

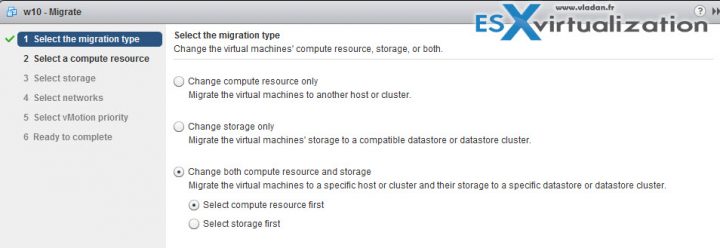

vMotion without shared storage (Shared Nothing vMotion)

This has been part of vSphere since version 5.1. You no longer need shared storage to move a VM from one host to another. The process is the same as a traditional vMotion, but it also includes a Storage vMotion. You'll need to choose the destination host, the destination datastore, and a priority level.

It is useful for cross-cluster migrations, when the target cluster's hosts might not have access to the source cluster's storage. Processes running in the virtual machine continue to run during the migration with vMotion.

But if you want to speed up the migration, it is better to shut down the VM first: the amount of data to transfer will be smaller, and the transfer will put less pressure on your infrastructure.

Requirements:

- The destination host must have access to the destination storage.

- The hosts must be licensed for vMotion.

- Virtual machine disks must be in persistent mode or be raw device mappings (RDMs). When you move a virtual machine with RDMs and do not convert those RDMs to VMDKs, the destination host must have access to the RDM LUNs.

- ESXi 5.1 or later.

- On each host, configure a VMkernel port group for vMotion. (You can configure multiple NICs for vMotion by adding two or more NICs to the required standard or distributed switch.)

- Make sure that VMs have access to the same subnets or VLANs on the source and destination hosts. This also applies to standard (traditional) vMotion.

Limitations:

- This type of vMotion counts against the limits for both vMotion and Storage vMotion, so it consumes both a network resource and 16 datastore resources.

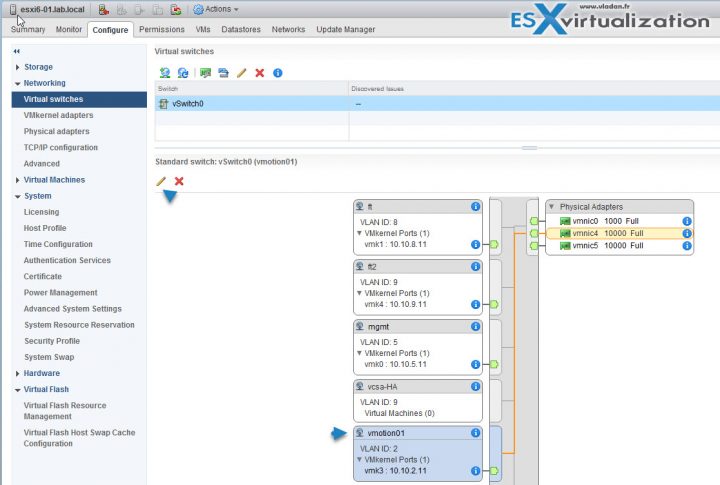

How to configure Multiple-NIC vMotion with Standard Switches

Requirements:

- You'll need at least two dedicated network adapters for vMotion per host.

- All VMkernel ports configured are in the same IP subnet/VLAN on all hosts
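The same-subnet requirement is easy to get wrong when you type addresses by hand on many hosts. As a minimal sketch, Python's standard `ipaddress` module can confirm that a list of VMkernel addresses all land in one network. The /24 prefix and the second address (10.10.2.12) are assumptions for illustration; only 10.10.2.11 appears in this post.

```python
import ipaddress


def vmkernel_ports_share_subnet(interfaces):
    """Return True if every 'address/prefix' string is in the same IP network.

    Example input: ["10.10.2.11/24", "10.10.2.12/24"]
    """
    networks = {ipaddress.ip_interface(item).network for item in interfaces}
    return len(networks) == 1


if __name__ == "__main__":
    # Hypothetical vMotion VMkernel addresses collected from the hosts.
    vmotion_ports = ["10.10.2.11/24", "10.10.2.12/24"]
    print(vmkernel_ports_share_subnet(vmotion_ports))
```

Note that this only compares the IP configuration; it says nothing about whether the VLAN is actually trunked to every host.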

We might do a follow-up post for vDS switches, where you can use Network I/O Control (NIOC) for load balancing in case you don't use dedicated NICs for vMotion or you're using 10GbE NICs that also carry other types of traffic.

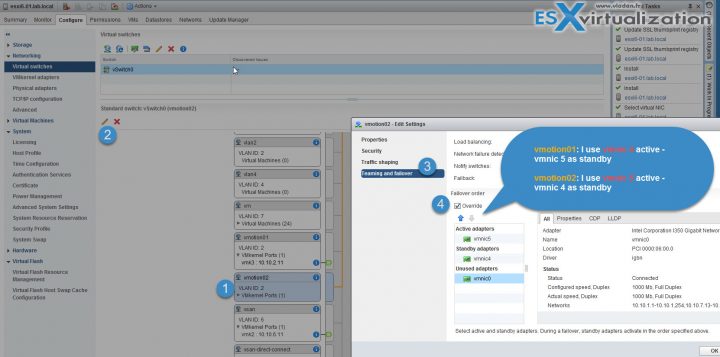

There are several ways to do it. One way is to create a separate vSwitch for vMotion; another is to attach both VMkernel ports to the same vSwitch. We'll stick to the second option. As we already have one VMkernel port configured for vMotion, we'll just add the second one. The first VMkernel vMotion port group is called vmotion01 and it uses vmnic4 as its active adapter.

This is how it looks in the vSphere Web Client.

We're on 10.10.2.11 network with VLAN2.

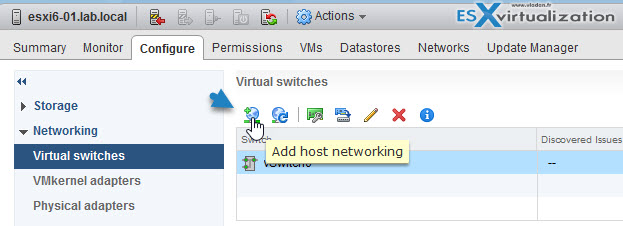

Let's add a second VMkernel port and enable vMotion traffic on it.

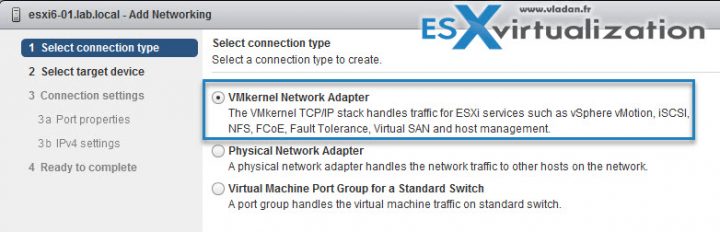

Click the Add host networking icon and follow the wizard.

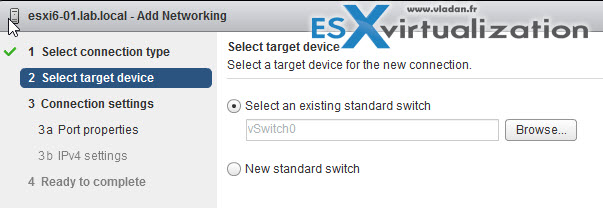

I went with the option to use an existing standard switch (you can create a new vSwitch too); as mentioned, other options exist.

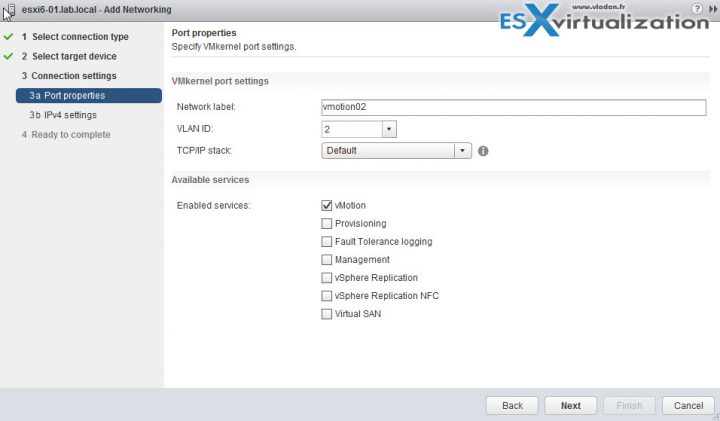

Then enter a meaningful name for the network label (simply vmotion02 in my case), pick a VLAN ID, and check the vMotion service checkbox.

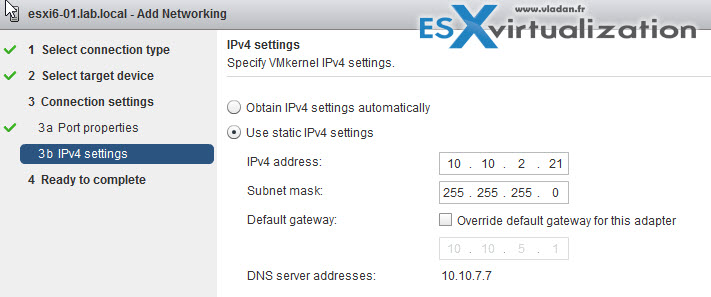

Then choose an IP address within the same range/VLAN as the first VMkernel port for vMotion.

Then select the vSwitch, select the VMkernel port, click the pencil icon, and configure teaming and failover this way:

- vmotion01: I use vmnic4 as active – vmnic5 as standby

- vmotion02: I use vmnic5 as active – vmnic4 as standby
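Since this active/standby layout has to be repeated on every host, a small check can catch copy-and-paste mistakes. The sketch below is hypothetical: it works on a plain dictionary that you would fill in yourself (it does not talk to vCenter or ESXi), and it only encodes the mirrored pattern shown above.

```python
def check_multinic_teaming(portgroups):
    """Return a list of problems found in a multi-NIC vMotion teaming layout.

    `portgroups` maps a port group name to a dict with two lists of uplink
    names, "active" and "standby". An empty result means every port group
    has its own single active uplink and keeps the others as standby.
    """
    problems = []
    active_owner = {}  # uplink name -> port group that uses it as active

    for name, teaming in portgroups.items():
        active = teaming["active"]
        if len(active) != 1:
            problems.append(f"{name}: expected exactly one active uplink, "
                            f"found {len(active)}")
            continue
        uplink = active[0]
        if uplink in teaming["standby"]:
            problems.append(f"{name}: {uplink} is both active and standby")
        if uplink in active_owner:
            problems.append(f"{name}: {uplink} is already active "
                            f"for {active_owner[uplink]}")
        else:
            active_owner[uplink] = name

    # Each port group should list the other port groups' active uplinks as standby.
    for name, teaming in portgroups.items():
        others = {u for u, owner in active_owner.items() if owner != name}
        missing = others - set(teaming["standby"])
        if missing:
            problems.append(f"{name}: missing standby uplink(s) {sorted(missing)}")

    return problems


if __name__ == "__main__":
    # The layout used in this post.
    layout = {
        "vmotion01": {"active": ["vmnic4"], "standby": ["vmnic5"]},
        "vmotion02": {"active": ["vmnic5"], "standby": ["vmnic4"]},
    }
    print(check_multinic_teaming(layout) or "teaming layout looks consistent")
```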

Then rinse and repeat for each host in your cluster. That's also where you'd want a vSphere Distributed Switch (vDS), where you configure things only once, at the vDS level.

Multi-NIC vMotion has been documented in many places, and it has been available since vSphere 5. The source I used was the VMware KB article "Multiple-NIC vMotion in vSphere", which also has 2 videos you can watch.


Another answer to “What is vmotion?”: It’s the first sign that your application is probably better suited for containers. Dragging the entire operating system between datacenters and booting it is a huge waste of time and resources/bandwidth.

David, the OS does not boot at any point during a vMotion operation. You are perhaps right about the use case for containerized apps, but those are just not as widely used as they should be.