VMware vSphere 6.5 introduced a new VMware Virtual NVMe Device. NVMe stands for Non-Volatile Memory Express. In this post, we will discuss what it is and what it is good for. Check out our VMware vSphere 6.5 detailed page with How-to, news, videos, and tutorials.

Virtual NVMe Device is a new virtual storage host bus adapter (HBA) designed for lower IO overhead and scalable IO on all-flash SAN/vSAN storage.

As you know, in real life hardware NVMe SSDs have a significant advantage over older SATA/SAS-based flash devices, so mass adoption is under way and the storage revolution is NOW.

The main benefit of the NVMe interface over SCSI is that it reduces the amount of overhead and so consumes fewer CPU cycles. It also reduces IO latency for your VMs.

Which Guest OS are supported?

Not all operating systems are supported. Before you start adding (or replacing) the controllers on your VMs, make sure that your OS is supported. You'll also need to make sure that the Guest OS has a driver installed to use the NVMe controller.

According to this VMware KB article, the following Guest OSes are supported:

- Windows 7 and 2008 R2 (hot fix required: https://support.microsoft.com/en-us/kb/2990941)

- Windows 8.1, 2012 R2, 10, 2016

- RHEL, CentOS, NeoKylin 6.5 and later

- Oracle Linux 6.5 and later

- Ubuntu 13.10 and later

- SLE 11 SP4 and later

- Solaris 11.3 and later

- FreeBSD 10.1 and later

- Mac OS X 10.10.3 and later

- Debian 8.0 and later
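
If you have many VMs to review, the list above can be turned into a small lookup. The following is only a sketch in Python: the table keys and the function names are made up for illustration (they are not VMware identifiers), and the Windows and SLE entries are left out because they do not reduce to a simple dotted version number.

```python
# Minimum guest OS versions copied from the list above.
# Keys and helper names are illustrative assumptions, not VMware identifiers.
MIN_SUPPORTED = {
    "rhel": (6, 5),
    "centos": (6, 5),
    "neokylin": (6, 5),
    "oracle linux": (6, 5),
    "ubuntu": (13, 10),
    "solaris": (11, 3),
    "freebsd": (10, 1),
    "mac os x": (10, 10, 3),
    "debian": (8, 0),
}


def parse_version(text):
    """Turn '6.5' into (6, 5) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(version, minimum):
    """Compare two version tuples after padding the shorter one with zeros."""
    width = max(len(version), len(minimum))
    padded_version = version + (0,) * (width - len(version))
    padded_minimum = minimum + (0,) * (width - len(minimum))
    return padded_version >= padded_minimum


def guest_supports_nvme(os_name, version):
    """Return True/False for listed OSes, or None if the OS is not in the table."""
    minimum = MIN_SUPPORTED.get(os_name.lower())
    if minimum is None:
        return None  # not in the table: check the KB article manually
    return meets_minimum(parse_version(version), minimum)
```

For example, `guest_supports_nvme("CentOS", "6.4")` is rejected, while `guest_supports_nvme("Debian", "8")` passes because `8` is padded to `8.0` before the comparison.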

What is the Supported Configuration of Virtual NVMe?

- Supports NVMe Specification v1.0e mandatory admin and I/O commands

- Maximum 15 namespaces per controller (each namespace is mapped to a virtual disk enumerated as nvme0:0, …, nvme0:14)

- Maximum 4 controllers per VM (enumerated as nvme0, nvme1, …, nvme3)

- Maximum 16 queues (1 admin + 15 I/O queues) and 16 interrupts.

- Maximum 256 queue depth (4K in-flight commands per controller)

- Interoperability with all existing vSphere features, except SMP-FT.
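
To see what these limits add up to per VM, here is a small arithmetic sketch in Python. The constants are taken from the list above; the constant names and the `check_layout` helper are hypothetical and exist only for this illustration.

```python
# Limits taken from the list above; names are illustrative assumptions.
MAX_CONTROLLERS_PER_VM = 4
MAX_NAMESPACES_PER_CONTROLLER = 15
MAX_QUEUES_PER_CONTROLLER = 16   # 1 admin + 15 I/O queues
MAX_QUEUE_DEPTH = 256

# 4 controllers x 15 namespaces = 60 NVMe-attached virtual disks per VM.
MAX_DISKS_PER_VM = MAX_CONTROLLERS_PER_VM * MAX_NAMESPACES_PER_CONTROLLER

# 16 queues x 256 entries = 4096, which matches the "4K in-flight commands" figure.
MAX_IN_FLIGHT_PER_CONTROLLER = MAX_QUEUES_PER_CONTROLLER * MAX_QUEUE_DEPTH


def check_layout(disks_per_controller):
    """Return a list of problems for a planned layout.

    disks_per_controller holds one integer per NVMe controller:
    the number of virtual disks you plan to attach to it.
    """
    problems = []
    if len(disks_per_controller) > MAX_CONTROLLERS_PER_VM:
        problems.append(
            f"{len(disks_per_controller)} controllers requested, "
            f"maximum is {MAX_CONTROLLERS_PER_VM}"
        )
    for index, count in enumerate(disks_per_controller):
        if count > MAX_NAMESPACES_PER_CONTROLLER:
            problems.append(
                f"nvme{index}: {count} disks requested, "
                f"maximum is {MAX_NAMESPACES_PER_CONTROLLER}"
            )
    return problems
```

A fully populated VM, `check_layout([15, 15, 15, 15])`, returns an empty list, while a fifth controller or a sixteenth disk on one controller is reported as a problem.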

VMware vSphere Compatibility?

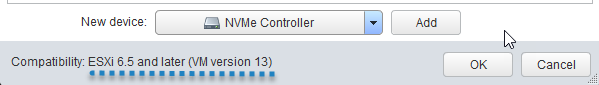

Also, don't forget that the virtual machine compatibility must be ESXi 6.5 or later. The NVMe controller is offered in the Add New device wizard when you add new virtual hardware to a VM that has Virtual Hardware 13 (vmx-13) configured.
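
If you keep an inventory export with hardware version strings, the vmx-13 requirement is easy to check in bulk. This is a minimal Python sketch that assumes versions are written in the `vmx-NN` form used above; the function names are hypothetical.

```python
import re

# Virtual Hardware 13 (vmx-13) is the ESXi 6.5 compatibility level.
MIN_HW_VERSION_FOR_NVME = 13


def hw_version_number(version_string):
    """Extract the number from a string such as 'vmx-13'."""
    match = re.fullmatch(r"vmx-(\d+)", version_string.strip().lower())
    if match is None:
        raise ValueError(f"unexpected hardware version format: {version_string!r}")
    return int(match.group(1))


def can_add_nvme_controller(version_string):
    """True if the VM hardware version is at least vmx-13."""
    return hw_version_number(version_string) >= MIN_HW_VERSION_FOR_NVME
```

A VM still at `vmx-11` would need its compatibility upgraded before the NVMe controller can be added.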

How to Add a VMware Virtual NVMe Device?

Step 1: Right-click the virtual machine in the inventory and select Edit Settings.

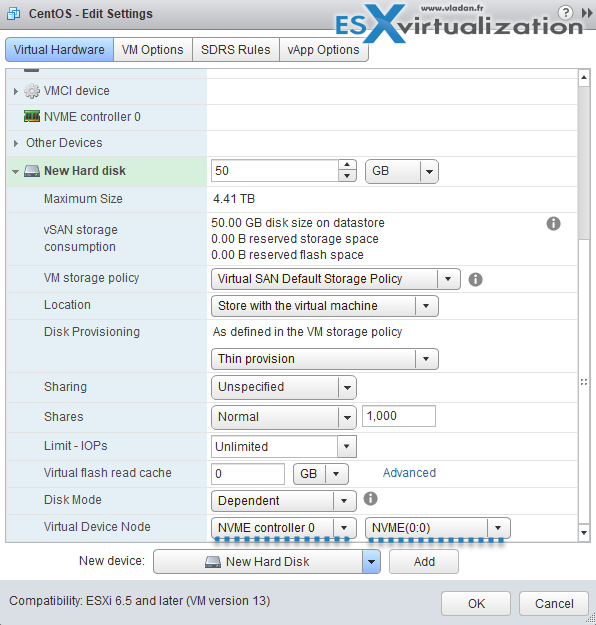

Step 2: Click the Virtual Hardware tab and select NVMe Controller from the New device drop-down menu, then click Add. The controller appears in the Virtual Hardware devices list. Click OK to validate. This is part one.

Part two is when you attach a new disk (or an existing one) to the controller. You can see it on the screenshot below…

Wrap Up:

This is certainly a change in how VMs will be managed in the future. When running on all-flash hardware, having the NVMe adapter configured will certainly increase the IOPS. Some tests show up to a 5x boost.

The increased performance of VMs configured with NVMe might be a good way to speed up your application(s); as we all know, it's all about application performance. We did not run any tests in the lab, but this topic seems fairly interesting.

Non-Volatile Memory Express (NVMe) is a specification for connecting flash directly to the PCIe bus of a server or workstation. As such, you're basically circumventing the latencies generated by connecting the flash drive to an old SATA HDD controller.

As for queue depth (the queuing up of I/Os waiting to be served by a storage device), it is much lower (or even nonexistent, thanks to parallel processing), which means that storage does not add another source of latency that slows down VM performance.

Slowly, the SATA/SAS formats are starting to look old when compared side by side with the emerging (and much faster!) PCIe format.

Thanks for sharing this. Should we use the ‘Virtual NVMe Device’ on a datastore that sits on an all-flash array?

I am a little confused. The VM actually writes data to the vmdk file. How does NVMe work on the file? Thanks a lot!

Well, if you have all-flash storage AND your VM’s OS is supported (driver), then yes. That’s the main goal of the virtual NVMe: to reduce storage latency. It’s a storage controller, the same as SATA, SAS, or PVSCSI. It depends on which storage controller you attach your virtual disk to in the VM.

Hello,

Does this feature have any relation to vSphere Flash Read Cache?

If not, can we use it for both reads and writes?

To use this option to accelerate IO, what exactly do we need? Should we use cache software like Intel CAS, or is it completely standalone?

What about a clustered environment? Is vMotion supported?

Thanks

No, nothing to do with vFlash Read Cache. NVMe is meant for use on all-flash arrays, including VMware vSAN AF.

Do you have any more information about the queue depths for the NVMe Controller, e.g. default values for the controller and disks? (And if so, then where the heck do you find such information?!?)

Best regards, Daniel…

No. Not myself. Perhaps some VMware folks…

Given a compatible guest OS and HW v13: would the virtual NVMe adapter be the new standard option over PVSCSI from now on, even if the storage is “hidden” in a NetApp SAN (either SATA or SCSI disks or SSDs, depending on the requirements of the VM)?

Does NVMe have any drawback that would prevent using it as the new standard storage controller for VMs, even if the storage cannot provide that many IOPS right now?

Wondering the same thing…

Any thoughts on this? e.g. any ‘drawback’ to using it as our default controller for all new VMs?

A customer just mentioned this limitation to me; I never saw it documented anywhere else (which is… unfortunate in operations): https://kb.vmware.com/s/article/2147574