VMware VSAN is a nice piece of software that lets you use local DAS storage, together with local SSDs, in each host to build a pooled datastore. The product is due for release in 2014, and you can find all the necessary information and guides on the VMware VSAN community page, where the public beta is currently available for download.

My interest in VSAN is not only as an IT professional but also as a home labber. That's why I'm writing this post (or series), where I'll think through possible scenarios and share the troubles and experience I've gained from building my homebrew VSAN. I have already talked about the principal difficulty and enemy of homebrew VSAN installs, the cost, in my article Homelab Thoughts – VSAN.

I always go the consumer-grade way when building my ESXi boxes. The latest build is my low-power Haswell box with 32 GB of RAM and an energy-efficient Intel CPU, the i7-4770S (only 65 W TDP). Power consumption is important, as is the amount of RAM. You could certainly go with a less powerful build based on an i5 CPU, or with a smaller case, but then you would probably face expandability problems: such motherboards usually have a single PCIe slot, which is not a lot considering that VSAN needs a VMkernel adapter for VSAN traffic and at least one dedicated pNIC.

I'll be going the InfiniBand way for my VSAN cluster, but it's not in place yet: I'm currently short on physical space at home, and the old IB switch I bought is very noisy. So I must solve those two problems before I really have a 20Gb back end for my VSAN. In a two-host scenario without VSAN, though, the solution works very well.

For the moment, I'm trying to reuse as much hardware as I can to build my VSAN cluster. That's why I'm still using the 2 older Nehalem boxes I built back in 2011, which have 24 GB of RAM each. But as you know, the i7 Nehalem CPUs have a 130 W TDP, so the plan is to use them for something else in the future and complete the VSAN cluster with more energy-efficient whiteboxes.

Homebrew VSAN Node

The Haswell box is a good candidate for a VSAN node, with a price tag of around €650 when I built it. So if you're thinking of doing the same with VSAN in the back of your head, multiply this by 3 and add some SSDs. For VSAN I needed to fit at least one SSD and at least one HDD into each node. That's currently done.

Update: The node will receive an IO controller card that is on the VMware HCL. Check out My VSAN Journey Part 3 for details on which controllers are supported and how to search the VMware HCL for IO controller cards and for full nodes.

The picture shows how it started (6 months ago). I'm getting a Dell PERC H310 8-port 6Gb/s SAS/SATA w/RAID 0,1 from eBay as the storage controller card.

The motherboard does not have any fancy stuff like IPMI. But hey, it's not a Supermicro board, only a €90 one, and keep in mind that you need 3 of them if you want to play with a physical VSAN cluster instead of going the single-box way with nested ESXi hypervisors.

But let's move on to my thoughts and the installation. As I said, I had some SSDs and HDDs lying around in different capacities, some Vertex 4 and some Crucial ones. VSAN allows mixing disks of different capacities when creating disk groups. In my case I've only put in one of each, just enough to “pass” the VSAN assistant.

The Networking

As for the networking part, it's fairly simple. The best would be to dedicate a physical gigabit NIC (better yet, two of them) to VSAN traffic, as VSAN needs a big “pipe”. It's not a hard requirement, though, but it is highly recommended.

That's where the first limitation might occur if you are using mini or micro boxes that are limited in PCIe (or PCI) slots. The second limitation will be the RAM.

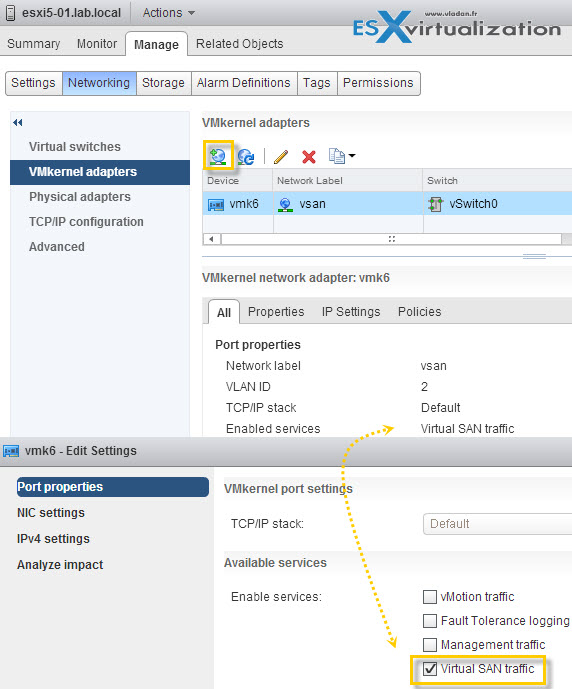

Where do you do the network config? Through the vSphere Web Client. Select your host > Manage > Networking > click the Add VMkernel adapter icon.

All you need to do is add an additional VMkernel port and check the box for VSAN traffic.

Repeat those steps for each ESXi host participating in the VSAN cluster. Notice that when you select your next ESXi host, you'll find yourself directly where you need to be: in the network configuration, where you add the VMkernel adapter. Pretty cool, eh?
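If you'd rather script the repetition than click through each host, here is a minimal sketch. It only builds the command strings and runs nothing. It assumes the `esxcli vsan network ipv4 add` command that ships with ESXi 5.5, and that the VMkernel port already exists on every host. The host names and the `vmk1` interface name are made up for illustration.

```python
# Dry-run sketch: builds the per-host command that tags a VMkernel
# interface for VSAN traffic. Nothing is executed here.

def vsan_tag_commands(hosts, vmk="vmk1"):
    """Return (host, command) pairs, one per ESXi host.

    'vmk' is the VMkernel interface to tag; "vmk1" is only an assumed default.
    """
    return [(host, f"esxcli vsan network ipv4 add -i {vmk}") for host in hosts]


if __name__ == "__main__":
    # Hypothetical host names, replace with the hosts in your own cluster.
    lab_hosts = ["esxi1.lab.local", "esxi2.lab.local", "esxi3.lab.local"]
    for host, command in vsan_tag_commands(lab_hosts):
        print(f"{host}: {command}")
```

You would still run each printed command in the shell of the matching host, so this saves typing rather than replacing the check in the Web Client.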

The Storage

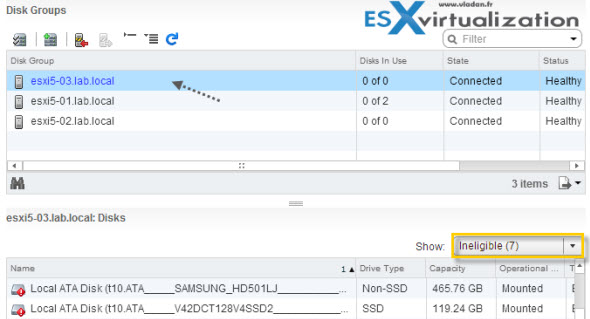

The “storage part” of my homebrew node was a bit trickier, because the disks I'm using were not blank: existing partitions were present on both the SSDs and the HDDs. You might say that this does not matter, as VSAN will probably format them anyway. It does matter. The disks were visible in disk management, but only as ineligible disks!

That's pretty odd, I thought to myself, and fired up Chrome to look for an answer.

The answer came back quickly. Because the disks weren't blank, VSAN basically says “I don't want those”. You have two options:

1. The easy way: format the disk and create a VMFS datastore on it, then delete that datastore. Repeat for each local disk you want to use in VSAN.

2. The more difficult way: through the Ruby console, first check the state of each locally attached disk. The output may show that multiple partitions are detected; you must delete those one by one with the partedUtil command. Make sure to double-check the device ID so that you don't delete the wrong partition on the wrong disk! There is a good KB on this: Using the partedUtil command line utility on ESXi and ESX

Once you have deleted all partitions on the disks, VSAN can claim them. But before checking that, rescan your adapters.
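Since deleting the wrong partition is the real risk here, a dry run helps. The sketch below only prints the partedUtil commands for one disk so you can read them before pasting them into the ESXi shell. It uses the `getptbl` and `delete` sub-commands described in the KB; the device ID and the partition numbers are placeholders I made up, not values from my lab.

```python
# Dry-run helper: builds partedUtil command strings, executes nothing.
DISK_ROOT = "/vmfs/devices/disks"


def show_table_command(device_id):
    """Command that prints the partition table of one local disk."""
    return f"partedUtil getptbl {DISK_ROOT}/{device_id}"


def delete_commands(device_id, partition_numbers):
    """One 'partedUtil delete' command per partition, highest number first."""
    for number in partition_numbers:
        if not isinstance(number, int) or number < 1:
            raise ValueError(f"invalid partition number: {number!r}")
    ordered = sorted(set(partition_numbers), reverse=True)
    return [f"partedUtil delete {DISK_ROOT}/{device_id} {n}" for n in ordered]


if __name__ == "__main__":
    device = "mpx.vmhba1:C0:T1:L0"  # hypothetical device ID, use your own
    print(show_table_command(device))
    # Partition numbers would come from the getptbl output; 1 and 2 are examples.
    for command in delete_commands(device, [1, 2]):
        print(command)
```

Printing instead of executing is deliberate: you get one last chance to compare the device ID in every line with the disk you really mean to wipe.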

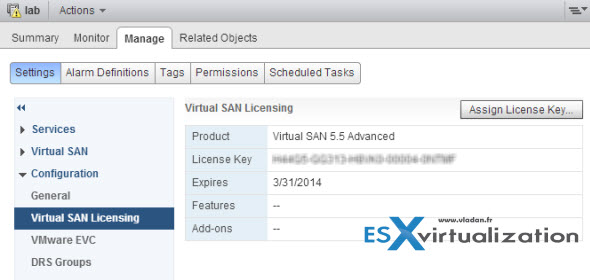

Don't forget about Licensing

When registering for the VSAN public beta you'll be given a license. Don't forget to enter the license number, otherwise you won't be able to create disk groups.

Where to configure VSAN licensing? Go and select your cluster > Manage > Settings

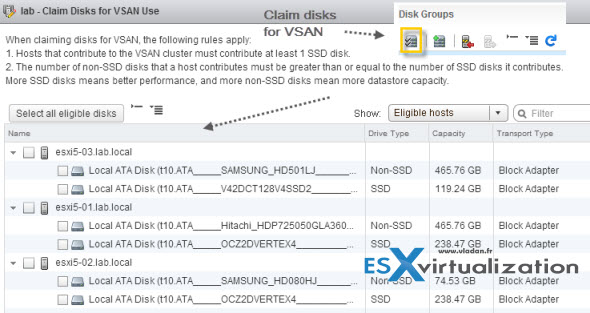

What else is there to add? After creating the VMkernel ports for VSAN, you can activate the VSAN cluster and specify that you want to add your local disks manually. Manually adding disks to disk groups is dead easy, just like activating the VSAN cluster.

Select your Cluster > Manage > Settings > Disk Management > Click the Claim Disks icon.

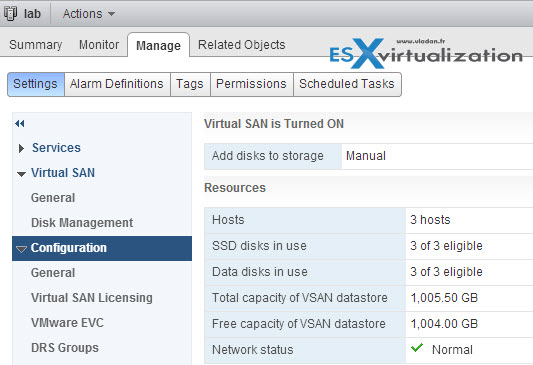

You should end up with a view of your VSAN capacity and number of hosts – like this:

In my home lab setup (which will evolve soon, I hope) I've ended up with 3 hosts participating in the VSAN cluster, which gives me a rough capacity of 1 TB, where more than half of the capacity is provided by SSDs, not HDDs. You can easily add storage to a disk group by claiming additional disks. The limit, if I remember right, is a 1:7 ratio: a single SSD can be paired with up to 7 HDDs in a disk group. I could easily add 4 more SATA drives if I wanted to, as there is space in the case and 4 SATA ports are free. But it really depends on your scenario and what you have “lying around”. I've built my homebrew VSAN cluster from what I currently had. Price is one of the primary criteria.
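To sanity-check a planned disk group before buying or moving drives, a tiny check like the one below is enough. It is only a sketch: it assumes a disk group is built around exactly one SSD with at least one HDD, and it takes the limit of 7 HDDs per SSD (the figure from the comment at the end of this post) as a parameter, so adjust it if your VSAN version documents a different maximum.

```python
# Sketch of a disk group sanity check. The limit of 7 HDDs per SSD is an
# assumption passed in as a parameter, not something read from VSAN itself.

def check_disk_group(ssd_count, hdd_count, max_hdds=7):
    """Return a list of problems; an empty list means the layout looks fine."""
    problems = []
    if ssd_count != 1:
        problems.append(f"expected exactly 1 SSD, got {ssd_count}")
    if hdd_count < 1:
        problems.append("at least 1 HDD is required")
    elif hdd_count > max_hdds:
        problems.append(f"{hdd_count} HDDs exceeds the limit of {max_hdds}")
    return problems


if __name__ == "__main__":
    # My node today: 1 SSD + 1 HDD. With the 4 free SATA ports used: 1 SSD + 5 HDDs.
    for ssds, hdds in [(1, 1), (1, 5), (1, 8)]:
        issues = check_disk_group(ssds, hdds)
        print(f"{ssds} SSD / {hdds} HDD:", "OK" if not issues else "; ".join(issues))
```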

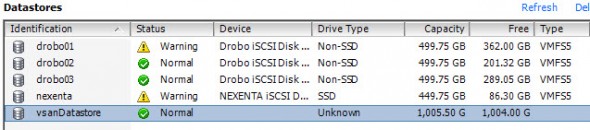

The Storage view through the Windows (now called “legacy”) client:

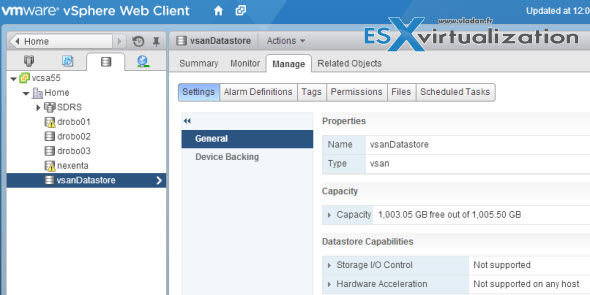

And here through the vSphere Web Client:

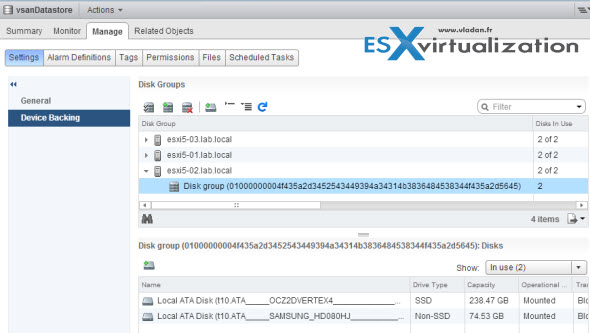

When you click Device Backing you can see each host and the disk groups created on it, with details of which disks are attached to each disk group.

The setup was not very difficult, as most of my lab infrastructure was already in place (vSphere cluster, physical network with VLANs, other ESXi whiteboxes). There was just a small gotcha when claiming disks that weren't “clean”, but otherwise it went fine. Another problem you might encounter is having to tag a drive as an SSD when ESXi does not recognize it natively. You can follow the VMware online documentation for that.
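For the SSD tagging, the documented approach is a SATP claim rule with the `enable_ssd` option, followed by a reclaim of the device. Here is a minimal sketch that only builds those two commands. The device ID is a placeholder, and `VMW_SATP_LOCAL` is an assumption that fits typical local disks; check which SATP actually claims your device with `esxcli storage nmp device list` before using it.

```python
# Dry-run sketch: builds the two esxcli commands used to tag a device as SSD.
# Nothing is executed; the SATP name is an assumed default for local disks.

def tag_as_ssd_commands(device_id, satp="VMW_SATP_LOCAL"):
    """Return the claim-rule command and the reclaim command, in order."""
    return [
        f"esxcli storage nmp satp rule add --satp {satp} "
        f"--device {device_id} --option enable_ssd",
        f"esxcli storage core claiming reclaim --device {device_id}",
    ]


if __name__ == "__main__":
    device = "mpx.vmhba1:C0:T2:L0"  # hypothetical device ID, use your own
    for command in tag_as_ssd_commands(device):
        print(command)
```

After the reclaim, `esxcli storage core device list` should report the device with `Is SSD: true`; if it doesn't, a reboot of the host is the usual next step.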

One thought here: with SSD prices falling, one might even want to use only SSDs for the whole cluster and tag some of them so that they look like HDDs. That would be enough to pass the VSAN verification.

VSAN in the Homelab – the whole series:

- My VSAN Journey – Part 1 – The homebrew “node” – this post

- My VSAN journey – Part 2 – How-to delete partitions to prepare disks for VSAN if the disks aren’t clean

- Memory Channel per Bank getting non active when using PERC H310 Contoler card – Fixed!

- Infiniband in the homelab – the missing piece for VMware VSAN

- Cisco Topspin 120 – Homelab Infiniband silence

- My VSAN Journey Part 3 – VSAN IO cards – search the VMware HCL

- My VSAN journey – all done!

- How-to Flash Dell Perc H310 with IT Firmware To Change Queue Depth from 25 to 600

Nice write up about VSAN. I think the SSD to HDD ratio is 1:7. You can tie together a single SSD and up to 7 HDD for a disk group.