Another VSAN homelab is born! After waiting some time for more SSDs, Infiniband cables and Perc H310 storage controller cards from eBay, all the pieces have now arrived, so I'm able to put everything together and run my VMware VSAN cluster. I expanded my older hosts with internal storage (previously they used shared storage only), controller cards and some server-side flash devices.

Update: Check out How-to Flash Dell Perc H310 with IT Firmware To Change Queue Depth from 25 to 600, because the H310 performs poorly with its default firmware…

Update 2: There is now an all-flash VSAN running in my lab. Check it out.

Storage network

The storage network uses a 24-port Cisco Topspin 120 switch (I believe it's also referred to as the Cisco 7000).

- Infiniband switch (Topspin 120 from eBay; you can find one for roughly $200 + shipping), providing 10Gb for VSAN traffic and 10Gb for vMotion traffic.

- The Mellanox HCA cards provide two 10Gb ports each and also come from eBay (HP 452372-001 Infiniband PCI-E 4X DDR Dual Port Storage Host Channel Adapter HCA, €26 each).

- Infiniband Cables – MOLEX 74526-1003 0.5 mm Pitch LaneLink 10Gbase-CX4 Cable Assembly – roughly €29 a piece.
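A quick sanity check on the "10Gb" figures: assuming the Topspin 120 ports run at 4X SDR (which matches the 10Gb quoted above), and assuming the standard per-lane rates of 2.5 Gb/s for SDR and 5 Gb/s for DDR, both with 8b/10b line encoding, the usable data rate per port works out roughly as follows. A link runs at the speed of its slower end, so the DDR HCAs should end up at SDR speed on this switch.

```python
# Sketch: signaling rate vs. usable data rate of an Infiniband 4X port.
# Assumptions: 2.5 Gb/s per lane for SDR, 5 Gb/s per lane for DDR,
# and 8b/10b encoding (8 data bits carried per 10 bits on the wire).

LANE_SIGNALING_GBPS = {"SDR": 2.5, "DDR": 5.0}

def link_rates(generation: str, lanes: int = 4) -> tuple[float, float]:
    """Return (signaling rate, usable data rate) in Gb/s for one port."""
    signaling = LANE_SIGNALING_GBPS[generation] * lanes
    data = signaling * 8 / 10  # strip the 8b/10b encoding overhead
    return signaling, data

for gen in ("SDR", "DDR"):
    signaling, data = link_rates(gen)
    print(f"{gen} 4X: {signaling:g} Gb/s signaling, {data:g} Gb/s of data")
```

So a "10Gb" SDR port carries about 8 Gb/s of actual payload before any IPoIB overhead.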

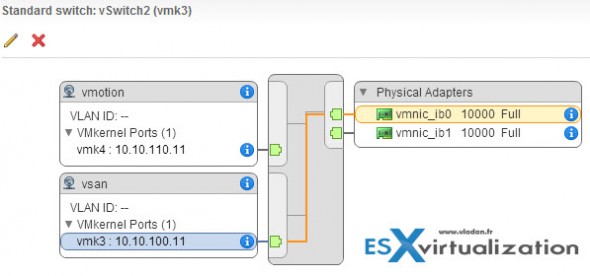

Config of the storage network:

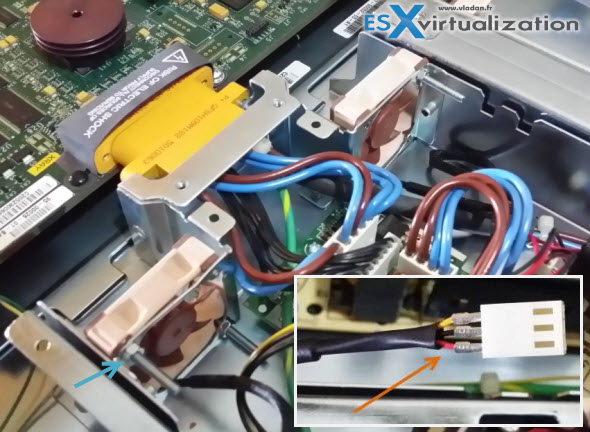

The switch has finally received new Noctua fans (Noctua NF-A4x10), which have 3 wires. In my particular situation I had to change the order of those wires, but the results are excellent. Before that, the switch was just too noisy to keep in the house!!

Here is the “wiring”. You can also see the very narrow Noctua fans. They needed to be bolted, and the bolts are pretty long as I did not know the fans would be that “slim”… -:). But silence matters, and I'm quite proud of the result…

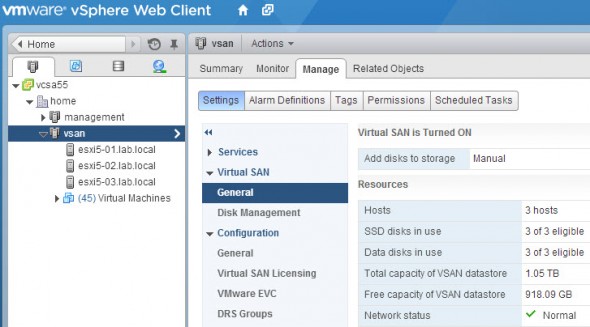

What did I like about the VSAN config?

It allows me to go through the process almost as if it were a production environment and see the gotchas or problems you can encounter during the setup.

During the configuration, if you forget, for example, to tick the VSAN traffic checkbox on the VMkernel port group, the VSAN wizard won't let you activate the VSAN cluster and you'll get a nice “Misconfiguration detected” warning. You can see it in my earlier post on testing VSAN on nested ESXi hypervisors.
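The rule behind that warning is simple: every host in the VSAN cluster needs at least one VMkernel adapter with VSAN traffic enabled. The sketch below models that check in plain Python; the `Host` and `VmkAdapter` classes and the host names are hypothetical illustrations, not a VMware API. On a real host, `esxcli vsan network list` shows which VMkernel interfaces are tagged for VSAN.

```python
# Hypothetical pre-check mirroring the wizard's "Misconfiguration detected" warning:
# each host needs at least one VMkernel adapter with VSAN traffic enabled.
from dataclasses import dataclass, field

@dataclass
class VmkAdapter:
    name: str           # e.g. "vmk2" (illustrative name)
    vsan_traffic: bool  # the VSAN traffic checkbox on the VMkernel port group

@dataclass
class Host:
    name: str
    vmknics: list[VmkAdapter] = field(default_factory=list)

def hosts_missing_vsan_vmknic(hosts: list[Host]) -> list[str]:
    """Return the names of hosts that have no VSAN-enabled VMkernel adapter."""
    return [h.name for h in hosts if not any(v.vsan_traffic for v in h.vmknics)]

cluster = [
    Host("esxi01", [VmkAdapter("vmk0", False), VmkAdapter("vmk2", True)]),
    Host("esxi02", [VmkAdapter("vmk0", False), VmkAdapter("vmk2", False)]),  # box left unticked
    Host("esxi03", [VmkAdapter("vmk0", False), VmkAdapter("vmk2", True)]),
]

missing = hosts_missing_vsan_vmknic(cluster)
if missing:
    print("Misconfiguration detected:", ", ".join(missing))
```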

If a disk already has partitions, VSAN won't let you add it to a disk group. You must delete those partitions first, for example by creating and then deleting a VMFS datastore on that disk. If you have trouble creating a VMFS5 datastore, try the older VMFS3 format instead; I ran into exactly that situation.
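Another way to clean a disk is to remove its partitions from the ESXi shell with `partedUtil`. The sketch below turns `partedUtil getptbl` output into the matching `partedUtil delete` commands without running anything. It assumes the output layout is a label line (`gpt` or `msdos`), a geometry line, then one line per partition starting with its number; the sample output and the `naa.XXXX` device path are illustrative placeholders, not captured from my lab.

```python
# Sketch: build "partedUtil delete" commands from "partedUtil getptbl" output.
# Assumed layout: line 1 = label, line 2 = geometry, then one line per partition
# whose first field is the partition number. Commands are printed, not executed.

def delete_commands(device: str, getptbl_output: str) -> list[str]:
    rows = [line.split() for line in getptbl_output.strip().splitlines()]
    partitions = [fields[0] for fields in rows[2:] if fields and fields[0].isdigit()]
    return [f'partedUtil delete "{device}" {num}' for num in partitions]

sample = """gpt
121601 255 63 1953525168
1 2048 1953525134 AA31E02A400F11DB9590000C2911D1B8 vmfs 0
"""
device = "/vmfs/devices/disks/naa.XXXX"  # hypothetical device path

for cmd in delete_commands(device, sample):
    print(cmd)
```

Double-check the device name before running anything it prints: deleting a partition destroys the data on it.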

I also changed the core switch in my lab so I have some spare ports too. I got the “bigger brother” of the SG300-10, which was the first L3 switch I used in my lab. The bigger SG300-28 is fanless and also provides inter-VLAN routing.

The config is pretty much the same, but I'd say it's more convenient, as everything can be done through the GUI. The first thing to do is switch from L2 to L3 mode, which reboots the switch and resets the whole config. So don't start configuring the switch while it's in L2 mode, or you will lose everything.

Here is what the lab looks like physically. Note that the room also houses a washing machine and a dishwasher, so it's probably the noisiest room in the house anyway. The lab itself isn't too noisy, but you can still hear the spinning disks and some fans. Overall it's not a noisy system.

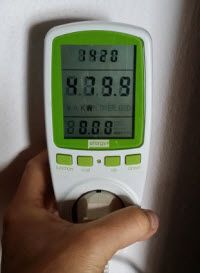

Power consumption

The lab draws less than 500W according to my power meter. This is quite good considering that I'm running four physical hosts, two switches and one iSCSI SAN device. The two Nehalem CPUs are the most power-hungry, as the low-power Haswell CPU has a TDP of only 65W.
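To put the 500W figure in perspective, here is a small worked example of the worst-case yearly energy use. It assumes the lab draws the full 500W around the clock (it actually draws less) and uses a hypothetical electricity price of 0.15 EUR per kWh; plug in your own tariff.

```python
# Worked example: upper bound on yearly energy use and cost of the lab.
# Assumptions: constant 500 W draw (the real draw is lower) and a
# hypothetical price of 0.15 EUR per kWh.
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365

def yearly_energy_kwh(watts: float) -> float:
    """Energy in kWh for a constant load running all year."""
    return watts * HOURS_PER_DAY * DAYS_PER_YEAR / 1000

def yearly_cost(watts: float, price_per_kwh: float) -> float:
    return yearly_energy_kwh(watts) * price_per_kwh

energy = yearly_energy_kwh(500)  # 4380.0 kWh
cost = yearly_cost(500, 0.15)
print(f"At most {energy:.0f} kWh/year, about {cost:.0f} EUR/year at 0.15 EUR/kWh")
```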


I now have enough resources to run the VMs I want and to test solutions I find interesting or emerging. As my two Nehalem boxes max out at 24GB of RAM, memory is probably the first resource I'll run out of. No problem: with VSAN I can just add another host to the cluster…

Wrap up:

Being able to experiment with a physical VSAN cluster is exactly what I expected. The IB network gave me some new knowledge, and with this setup I can test other solutions too. It's a good learning system.

Storage can also be expanded in each node, as I'm only using a single magnetic disk in each node's disk group.

You can read the previous parts of this series here:
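How much room is left to grow? The sketch below assumes the VSAN 5.5 disk group limits: up to 5 disk groups per host, each with exactly one SSD (used as cache, so it adds no capacity) and 1 to 7 magnetic disks. The 1 TB disk size is a hypothetical example, not my actual drives.

```python
# Sketch: raw magnetic capacity of one VSAN node under the VSAN 5.5 limits.
# Assumed limits: max 5 disk groups per host; each group = 1 SSD (cache only,
# not counted) + 1 to 7 magnetic disks. Disk sizes below are hypothetical.
MAX_DISK_GROUPS = 5
MAX_HDDS_PER_GROUP = 7

def raw_capacity_tb(disk_groups: list[list[float]]) -> float:
    """Sum the magnetic disk sizes (TB) after validating the disk group layout."""
    if len(disk_groups) > MAX_DISK_GROUPS:
        raise ValueError(f"at most {MAX_DISK_GROUPS} disk groups per host")
    for hdds in disk_groups:
        if not 1 <= len(hdds) <= MAX_HDDS_PER_GROUP:
            raise ValueError(f"each disk group needs 1 to {MAX_HDDS_PER_GROUP} magnetic disks")
    return sum(sum(hdds) for hdds in disk_groups)

today = [[1.0]]        # one disk group with a single 1 TB magnetic disk
grown = [[1.0] * 7]    # the same disk group filled up to 7 disks
print(raw_capacity_tb(today), raw_capacity_tb(grown))
```

This is raw capacity; with the default policy of one failure to tolerate, VSAN mirrors each object, so usable space is roughly half.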

- My VSAN Journey – Part 1 – The homebrew “node”

- My VSAN journey – Part 2 – How-to delete partitions to prepare disks for VSAN if the disks aren’t clean

- Memory Channel per Bank getting non active when using PERC H310 Controller card – Fixed!

- Infiniband in the homelab – the missing piece for VMware VSAN

- Cisco Topspin 120 – Homelab Infiniband silence

- My VSAN Journey Part 3 – VSAN IO cards – search the VMware HCL

- My VSAN journey – all done! – This post

- How-to Flash Dell Perc H310 with IT Firmware To Change Queue Depth from 25 to 600

Feel free to subscribe to the RSS feed for updates or follow me on Twitter (@vladan).

Great end to your VSAN journey. Hope you can now start playing with VSAN Observer and watch the storage rebuild across your 3 storage nodes.

Thanks and congratulations!

Yeah, cool. It's already on my to-do list… Thanks, Eric, for sharing and for your help on Twitter too -:). Hopefully this post will help others get some ideas for a relatively cheap VSAN lab. -:)

Hey Vladan, I put up a post about your article at my blog at:

http://TinkerTry.com/virtualization-lab-for-sale-with-10gb-vsan-ships-from-a-tiny-island-near-madagascar

I remember reading your article about 10Gb VSAN back in May. Great stuff, glad to see some of your items have already sold!

Hi Paul,

Thanks for the post. It's actually quite crowded here, as 800,000 people live on Reunion Island. And the distance doesn't really matter. If we were in 1960, it would probably have been different… -:). Besides, there are tourists from all over the world. One of the American tourists interviewed on local TV yesterday told the journalist that it's like Hawaii…

Hi Vladan,

A regular reader of your website/blog here. Thank you for the time and effort you put into sharing your knowledge and experience with novices/enthusiasts like me :-)

HOMELAB: I have 3 x Optiplex 9010 (SFF) hosts. In each host I have installed a 1TB drive + a 240GB SSD, plus 2 x 1Gb NICs. All 3 hosts have 32GB of DDR3 RAM and Core i5/Core i7 processors.

I am using the onboard SATA ports, as I don't have much budget left for 3 x supported HBAs. 🙂

I am planning to install VSAN (after getting a VMUG membership). Can I also use 2 x 256GB SSDs per host to make it an all-flash VSAN?

Thanks and regards

rihatum

Hi rihatum,

Note that the post is over one year old. I don't think it'll work with onboard SATA, and if it does, the performance will be “terrible”. Last year I did it with Dell HBAs bought quite cheaply off eBay…. I still have 2 of those…

The second thing is the all-flash (now “Advanced”) edition of VSAN. I'm not sure VMUG has a license for that. Better check with someone who has the membership.

Good luck.

Hi, very instructive. I decided to try it with my Mellanox IS5030 QDR 36-port switch. I ran into two problems. First, I needed to replace 6 fans @ $17 each. Then, when I pulled the old fans, I discovered they were spec'd to move 500% as much air volume. That is why they were squealing like a turbine.

Did you compare the airflow capacity of the new and old fans? How big was the reduction, and how has the switch fared?

I no longer run this hardware. Check http://www.vladan.fr/lab