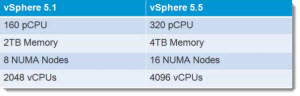

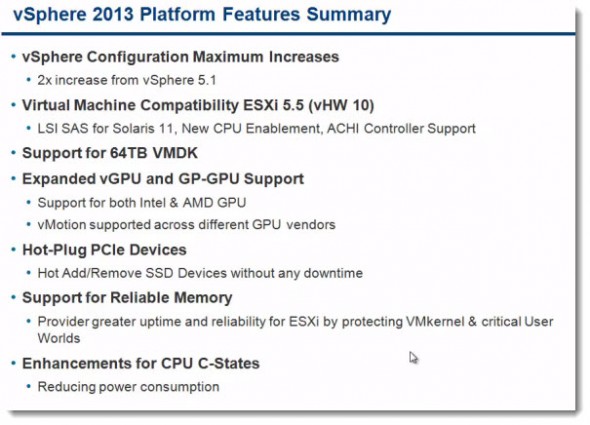

VMware vSphere 5.5 has been released and brings many enhancements, a number of them tied to the new virtual hardware version 10 (vmx-10). The release raises the configuration maximums for ESXi hosts, clusters and VMs, and vmx-10 allows larger VMDKs, more vCPUs and more memory per VM.

Virtual Machine Hardware Version 10 (vmx-10) – vSphere 5.5 adds support for vmx-10 virtual hardware, which makes new components available to the guest OS:

- LSI SAS for Solaris 11

- New CPU architecture enablements

- AHCI (Advanced Host Controller Interface) for SATA support

- SATA is supported as a controller for CD-ROM drives or virtual disks

- On ESXi, IDE is supported only as a CD-ROM controller

- Mac OS X does not support IDE, so its CD-ROM needs AHCI

- Many guest operating systems support AHCI anyway

- Up to 30 devices per controller with up to 4 controllers per VM (120 devices)
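The device arithmetic above (30 devices on each of 4 controllers, 120 in total) can be illustrated with a small Python sketch. The helper name `sata_slot` and the `sataC:U` label format are assumptions made for illustration, not a VMware API.

```python
# Illustrative sketch: map a flat device index onto (controller, unit),
# using the limits quoted in the list above.
DEVICES_PER_CONTROLLER = 30
MAX_CONTROLLERS = 4
MAX_DEVICES = DEVICES_PER_CONTROLLER * MAX_CONTROLLERS  # 120

def sata_slot(device_index):
    """Return a hypothetical 'sataC:U' label for the Nth SATA device (0-based)."""
    if not 0 <= device_index < MAX_DEVICES:
        raise ValueError(f"device index must be in 0..{MAX_DEVICES - 1}")
    controller, unit = divmod(device_index, DEVICES_PER_CONTROLLER)
    return f"sata{controller}:{unit}"

if __name__ == "__main__":
    for index in (0, 29, 30, 119):
        print(index, "->", sata_slot(index))
```

The first 30 devices land on controller 0, the next 30 on controller 1, and so on until the fourth controller is full.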

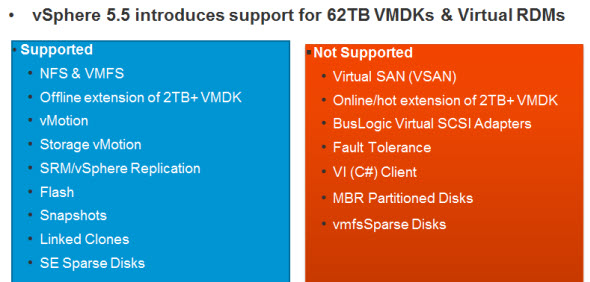

62 TB VMDK Support – the old ceiling was a real limit, because you could not create virtual disks larger than 2 TB. As a workaround, users usually turned to RDMs (raw device mappings, which link to a physical hard drive or a physical LUN). The larger disks are supported on VMFS 5 and on NFS, where NFS support depends on the underlying storage system. There are no specific hardware requirements, just vSphere 5.5 (ESXi 5.5). Note that 62 TB VMDKs can only be created through the vSphere Web Client!
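To make the size limits concrete, here is a minimal Python sketch. The function names and the `(major, minor)` version tuple are illustrative assumptions, not a VMware API, and the limits are simply the round figures quoted above.

```python
# Illustrative only: limits are the round figures from the text.
TB = 1024 ** 4  # bytes per terabyte (binary)

LEGACY_MAX_VMDK = 2 * TB   # ceiling before vSphere 5.5, as stated above
V55_MAX_VMDK = 62 * TB     # ceiling with vSphere 5.5

def max_vmdk_bytes(esxi_version):
    """Largest VMDK size in bytes for an ESXi version given as (major, minor)."""
    return V55_MAX_VMDK if esxi_version >= (5, 5) else LEGACY_MAX_VMDK

def vmdk_size_supported(size_bytes, esxi_version):
    """True if a VMDK of size_bytes fits within the limit for this version."""
    return 0 < size_bytes <= max_vmdk_bytes(esxi_version)

if __name__ == "__main__":
    for version in [(5, 1), (5, 5)]:
        ok = vmdk_size_supported(10 * TB, version)
        print(f"ESXi {version[0]}.{version[1]}: 10 TB VMDK supported = {ok}")
```

A check like this is handy in provisioning scripts to decide early whether a requested disk still needs the RDM workaround.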

Expanded vGPU and GP-GPU Support – after the NVIDIA support introduced in vSphere 5, support for AMD and Intel GPUs has been added. Three rendering modes are available (Automatic, Hardware or Software), and it is also possible to vMotion VMs between hosts with GPUs from different vendors.

- Automatic mode switches to software rendering if a hardware GPU isn't available.

- If Hardware mode is enabled and the destination host does not have a hardware GPU installed, the vMotion check fails and vMotion does not start at all.
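The behaviour of the rendering modes during vMotion can be summarised as a small decision function. This is only a sketch of the rules described above: the names `RenderMode` and `vmotion_gpu_check` are hypothetical, and the Software branch (always render in software, regardless of the destination) is an assumption, since the text only spells out the Automatic and Hardware cases.

```python
from enum import Enum

class RenderMode(Enum):
    AUTOMATIC = "automatic"
    HARDWARE = "hardware"
    SOFTWARE = "software"

def vmotion_gpu_check(mode, dest_has_gpu):
    """Return (allowed, renderer) for a vMotion to a destination host.

    allowed  -- whether the pre-migration check would pass
    renderer -- "hardware", "software", or None when the check fails
    """
    if mode is RenderMode.SOFTWARE:
        # Assumption: software rendering never depends on a physical GPU.
        return True, "software"
    if mode is RenderMode.AUTOMATIC:
        # Falls back to software rendering when no GPU is present.
        return True, ("hardware" if dest_has_gpu else "software")
    # Hardware mode: a GPU on the destination is mandatory.
    if dest_has_gpu:
        return True, "hardware"
    return False, None

if __name__ == "__main__":
    for mode in RenderMode:
        for has_gpu in (True, False):
            print(mode.value, has_gpu, vmotion_gpu_check(mode, has_gpu))
```

Only the Hardware mode without a destination GPU blocks the migration; every other combination passes the check.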

Hardware support list:

AMD:

- FirePro S7000 / S9000 / S10000

- FirePro V7800P / V9000P

NVIDIA:

- Grid K1 / K2

- Quadro 4000 / 5000 / 6000

- Tesla M2070Q

GP-GPU support – aimed at high-performance computing, using DirectIO through PVSP (Partner Verified and Supported Products).

VMs Support – virtual hardware version 8 or later is required, and Windows 8 needs vmx-09. vGPU and GP-GPU are supported for Windows and Linux VMs, with the following limitations:

Windows 7 or Windows 8 VMs are supported with View 5 and later.

Linux VMs (vmwgfx driver bundled) – Fedora 17+, Ubuntu 12+ or RHEL.
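The hardware version rule above (version 8 as the general minimum, version 9 for Windows 8) can be expressed as a simple lookup. This is a sketch only: the guest keys such as `"windows8"` and the function name are made up for illustration.

```python
# Illustrative sketch of the minimum virtual hardware version rule.
DEFAULT_MIN_HW_VERSION = 8

# Hypothetical guest identifiers mapped to a stricter minimum.
GUEST_MIN_HW_VERSION = {
    "windows8": 9,
}

def hw_version_ok(guest_os, hw_version):
    """True if hw_version meets the minimum for GPU support on this guest."""
    required = GUEST_MIN_HW_VERSION.get(guest_os, DEFAULT_MIN_HW_VERSION)
    return hw_version >= required

if __name__ == "__main__":
    print(hw_version_ok("windows7", 8))   # general minimum is 8
    print(hw_version_ok("windows8", 8))   # Windows 8 needs 9
    print(hw_version_ok("windows8", 10))
```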

Graphics acceleration for Linux guests – Linux VMs are now supported in vSphere 5.5, so a GPU installed in the ESXi host can accelerate graphics rendering inside those guests. VMware is the only virtualization player that does this today, and the whole Linux driver stack is provided as free software. Most modern Linux distributions package the VMware drivers by default, so no extra tools or packages are necessary to make it work. As a requirement, the Linux kernel has to be 3.2 or higher. This works on Ubuntu (12.04 and later), Fedora (17 and later) and RHEL 7.
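A quick way to verify the kernel requirement from inside a Linux guest is to compare the running kernel release against 3.2. The sketch below uses only the Python standard library; the helper names are illustrative.

```python
import platform
import re

MIN_KERNEL = (3, 2)  # minimum kernel version quoted above

def parse_kernel_release(release):
    """Extract (major, minor) from a release string such as '3.13.0-24-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))

def kernel_meets_requirement(release, minimum=MIN_KERNEL):
    """True if the kernel release is at least the required version."""
    return parse_kernel_release(release) >= minimum

if __name__ == "__main__":
    release = platform.release()  # on Linux this is the output of `uname -r`
    try:
        print(release, "meets requirement:", kernel_meets_requirement(release))
    except ValueError as err:
        # Non-Linux systems may report a release string in another format.
        print(err)
```

Comparing tuples rather than strings avoids the classic mistake of ranking "3.10" below "3.2".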

OpenGL support is included, with the following specifications:

- OpenGL 2.1

- DRM kernel modesetting

- Xrandr

- Xrender

- XV

Hot-Plug for PCI devices – new in vSphere 5.5 is the ability to hot-add PCIe SSD drives on running ESXi hosts. The host can also use a PCIe expansion chassis to provide hot-plug capability for PCIe devices. Both orderly and surprise hot-plug operations are supported.

There is one hardware requirement: the server BIOS must support PCIe hot-plug.

16 Gb End-to-End support for FC

vSphere 5.5 now supports 16Gb E2E (end-to-end) Fibre Channel.

Reliable Memory Support – memory corruption often leads to PSODs, so the benefit is obvious: greater uptime. The feature must be supported by the hardware, which reports the state of the RAM to ESXi. Some regions of RAM are more reliable than others, so the ESXi host can place its most critical components, such as the VMkernel, the init thread, hostd and the watchdog, in the most reliable memory.

CPU C-States Enhancements – C-states are now used in the default Balanced power policy (previously, 5.1 used the P-states). Just a quick note: C-states are idle states and P-states are operational states. This difference, though obvious once you know it, can be confusing at first. The new behaviour saves much more power and can even increase performance: when some cores aren't utilized, turbo mode can be used to boost a single core.

USB autosuspend – this function puts idle USB hubs into a lower power state.

vSphere 5.5 Release:

- VMware vSphere 5.5 – Storage enhancements and new configuration maximums – this post

- vCD 5.5 – VMware vCloud Director 5.5 New and enhanced features

- VMware VDP 5.5 and VDP Advanced – With a DR for VDP!

- VMware vSphere 5.5 vFlash Read Cache with VFFS

- VMware vSphere Replication 5.5 – what's new?

- VMware VSAN introduced in vSphere 5.5 – How it works and what's the requirements?

- VMware vSphere 5.5 Storage New Features

- VMware vSphere 5.5 Application High Availability – AppHA

- VMware vSphere 5.5 Networking New Features

- VMware vSphere 5.5 Low Latency Applications Enhancements

- ESXi 5.5 free Version has no more hard limitations of 32GB of RAM

- vCenter Server Appliance 5.5 (vCSA) – Installation and Configuration Video

hello

I have an HP DL580 G7 server and I installed ESXi 5.5 and vCenter 5.5 on it.

I have an AMD FirePro S10000 GPU and I want to install it in this server.

Can I install the GPU on the Server ?

Do I need any Driver for this GPU?

Does a Server need any requirements for AMD Firepro S10000 ?

please help me because I have little time

thanks a lot

Have you checked the VMware HCL for your server (is it supported for the 5.5 build?) and the GPU? Do you have active VMware SnS support? Have you contacted your VMware representative yet? Just askin…. -:)

Usually drivers for supported HCL parts can be downloaded from the VMware site or directly from the hardware manufacturer’s site. But if you’re trying to use unsupported hardware you will basically be struggling a bit. Not saying that it won’t work. Unfortunately I cannot help as I don’t have this kind of hardware in my lab.