VMware vSAN pools locally attached storage (SSDs and HDDs) into a clustered datastore. It is a software feature built into the hypervisor. To use VMware vSAN, each server participating in the vSAN cluster needs at least one HDD and one SSD. The SSDs don't contribute to the storage capacity; they only provide read caching and write buffering. The aggregation of HDDs from each server in the vSAN cluster forms the vSAN datastore.
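To make the capacity rule concrete, here is a minimal Python sketch that sums only the HDDs of each host and ignores the SSDs. The `Host` class, the host names, and the disk sizes are made up for illustration. The result is raw capacity only, before any replica copies are taken into account.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Host:
    """Hypothetical description of one server in the vSAN cluster."""
    name: str
    hdd_gb: List[int]  # capacity tier: counts toward the datastore
    ssd_gb: List[int]  # cache tier: read cache / write buffer, not counted


def raw_datastore_capacity_gb(hosts: List[Host]) -> int:
    """Sum the HDD capacity of all hosts; SSDs add no capacity."""
    for host in hosts:
        # Each participating host needs at least one HDD and one SSD.
        if not host.hdd_gb or not host.ssd_gb:
            raise ValueError(f"{host.name} needs at least one HDD and one SSD")
    return sum(sum(host.hdd_gb) for host in hosts)


hosts = [
    Host("esx01", hdd_gb=[1000, 1000], ssd_gb=[200]),
    Host("esx02", hdd_gb=[1000, 1000], ssd_gb=[200]),
    Host("esx03", hdd_gb=[2000], ssd_gb=[400]),
]
print(raw_datastore_capacity_gb(hosts))  # 6000
```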

Update: You should check the latest announcement – VMware vSAN 6.6 Announced

vSAN provides persistent storage. The locally attached SSDs in each server are used as a read cache and write buffer in front of the HDDs, while the HDDs provide the persistent storage. There is no single point of failure, as the distributed object-based RAIN architecture is the core of the system. This first version of VMware vSAN uses the distributed RAID 10 (RAIN10) algorithm.

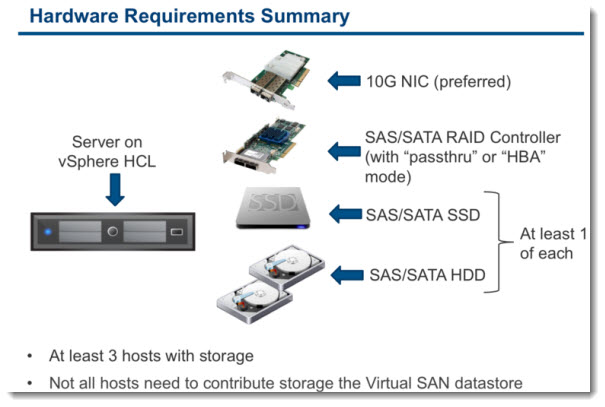

Here is a screenshot from the Hardware requirements for vSAN:

Note the requirement on the SAS controller: it must support pass-through (HBA mode), which means that the hypervisor can interact directly with the disks.

VMware vSAN Features:

- Integrated with vCenter, HA, DRS, vMotion

- Hypervisor-based storage – software based

- vSphere management stays the same for thin provisioning, snapshots, cloning, backups, and replication

- VM storage management is policy based to enforce SLAs (you can set requirements for individual VMDKs)

- Local SSDs are used as read cache and write buffer, while locally attached HDDs are used for persistent storage

- Local HDDs are pooled together for clustered datastore

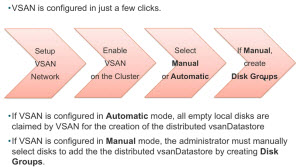

- Simple activation

You can set requirements for VMs or individual VMDKs in the form of policies or storage service levels, which are applied to individual VMDKs. Once a policy is applied, vSAN takes these requirements and makes sure that the VMs (and the underlying VMDKs) that sit on the vSAN datastore are always in compliance with them.

Very simple management through vSphere Web client and vCenter. Tightly integrated with vSphere replication, backup, HA, DRS, vMotion….

The hardware requirement is that the server is on the VMware HCL and, if there is a RAID controller, that the controller supports pass-through or “HBA mode”. This is because vSAN interacts directly with the hardware (the HDDs and SSDs).

Software Requirements

On the software side, you'll need to create a VMkernel port for the vSAN traffic. Just as you create a VMkernel port for vMotion or FT, an additional VMkernel port is necessary for vSAN. Depending on your networking design, if you're using VLANs, you can probably just create an additional VMkernel port without adding an additional physical NIC.

Key Features

- Policy-driven per-VM SLA

- vSphere and vCenter Integration

- Scale-out storage

- Built-in resiliency

- SSD caching

- Converged compute and storage

The performance of vSAN datastore?

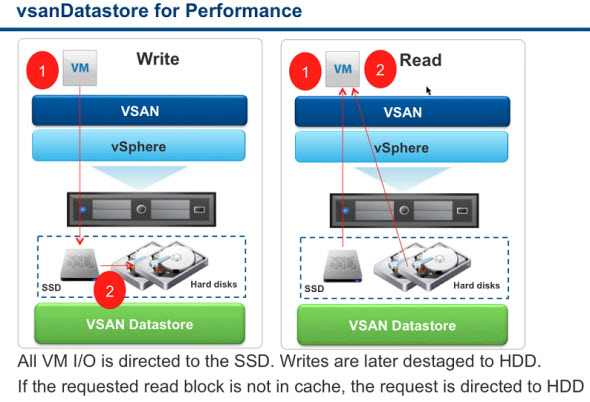

All VM I/O is directed to the SSD first, and writes are destaged to the HDDs later. If a requested block is not in the read cache, the request is directed to the HDD.

So, as the schema shows:

- First, write to SSD cache and then to HDD.

- First, read from SSD cache and then, if the block isn't there, read from HDD.
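The two steps above can be sketched as a toy Python model of one SSD in front of one HDD. This is only an illustration of the read and write order, not how vSAN is implemented. The class name, the method names, and the choice to also serve reads from the write buffer are assumptions made for this example.

```python
class HybridDiskGroup:
    """Toy model (hypothetical): one SSD acting as cache in front of one HDD."""

    def __init__(self):
        self.write_buffer = {}  # SSD: writes land here first
        self.read_cache = {}    # SSD: recently read blocks
        self.hdd = {}           # HDD: persistent storage

    def write(self, block, data):
        """Writes go to the SSD write buffer first."""
        self.write_buffer[block] = data
        self.read_cache.pop(block, None)  # drop any stale cached copy

    def destage(self):
        """Later, buffered writes are flushed from SSD to HDD."""
        self.hdd.update(self.write_buffer)
        self.write_buffer.clear()

    def read(self, block):
        """Return (data, tier); try the SSD first, then fall back to HDD."""
        if block in self.write_buffer:  # assumption: buffer can serve reads
            return self.write_buffer[block], "ssd"
        if block in self.read_cache:
            return self.read_cache[block], "ssd"
        data = self.hdd[block]          # cache miss: go to the HDD
        self.read_cache[block] = data   # keep it on SSD for next time
        return data, "hdd"


group = HybridDiskGroup()
group.write(7, b"hello")
print(group.read(7))  # (b'hello', 'ssd') - still in the write buffer
group.destage()
print(group.read(7))  # (b'hello', 'hdd') - cache miss after destaging
print(group.read(7))  # (b'hello', 'ssd') - now served from the read cache
```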

vSAN Availability

Here is what a VM looks like on a vSAN datastore. Depending on the policy of the VM (stripes and replicas), different parts of the VM can be stored on different hosts. The virtual machine storage objects (VM Home, VMDK, delta, swap) can be distributed across the hosts and disks in the vSAN cluster. VMs may have a replica copy for availability or stripes for HDD performance.

vSAN leverages VASA (vSphere APIs for Storage Awareness), so every host in vSphere 5.5 has a VASA capability. If one of the hosts fails, another can take over.

VM Storage Policies

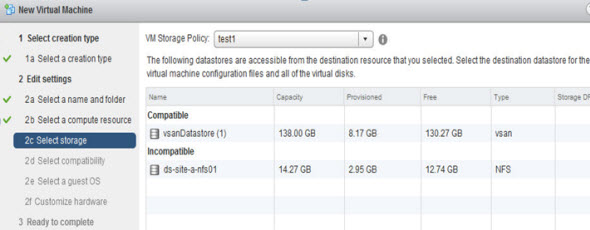

VM storage policies are built before you start deploying VMs. You can decide whether you need availability or performance (striping), and define striping, read cache reservation, and thin or thick provisioning. By default, vSAN uses thin provisioning, but this can be overridden by a storage policy.

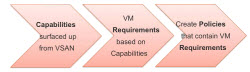

VM storage policy is based on vSAN capabilities. The policy is selected for a particular VM at deployment time and it's based on VM requirements.

Capabilities – the underlying storage surfaces its capabilities up to vCenter, where they are shown in the UI.

Requirements – what we want for our VMs or applications. They can only be expressed against available capabilities.

VM Storage Policies – where the VM requirements are stored, expressed as capabilities.

vSAN 1.0 Capabilities

- Number of disk stripes per object – is the number of HDDs across which each replica of a storage object is distributed.

- Number of Failures to tolerate – how many host, network and/or disk failures a storage object can tolerate. For N failures to be tolerated in the cluster, “N+1” copies of the storage object (VM files) are created and at least “2N+1” hosts are required in the cluster.

- Object space reservation – the percentage of the logical size of a storage object (including snapshots) that should be reserved (thick). The rest of the object is thin provisioned.

- Flash Read Cache Reservation – the percentage of the logical size of the storage object that is reserved on flash as read cache.

- Force Provisioning – allows an object (with non-zero size) to be provisioned even if the policy requirements are not met by the vSAN datastore.
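The arithmetic behind two of these capabilities is easy to check in a few lines of Python. The sketch below only encodes the “N+1” copies and “2N+1” hosts rule and the object space reservation percentage as described above. The function names are made up for this example and are not part of any VMware API.

```python
def replicas_needed(failures_to_tolerate: int) -> int:
    """N failures to tolerate -> N+1 copies of the storage object."""
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must be >= 0")
    return failures_to_tolerate + 1


def min_hosts_needed(failures_to_tolerate: int) -> int:
    """N failures to tolerate -> at least 2N+1 hosts in the cluster."""
    replicas_needed(failures_to_tolerate)  # reuse the validation
    return 2 * failures_to_tolerate + 1


def reserved_gb(logical_size_gb: float, reservation_percent: float) -> float:
    """Part of the object reserved up front (thick); the rest stays thin."""
    if not 0 <= reservation_percent <= 100:
        raise ValueError("reservation_percent must be between 0 and 100")
    return logical_size_gb * reservation_percent / 100.0


for n in (0, 1, 2):
    print(f"FTT={n}: {replicas_needed(n)} copies, "
          f"{min_hosts_needed(n)} hosts minimum")

# A 100 GB VMDK with a 25% object space reservation.
print(reserved_gb(100, 25))  # 25.0
```

With the common setting of one failure to tolerate, this gives two copies of each object and a three-host minimum.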

Best Practices:

- Number of disk stripes per object – leave at 1 unless you need more performance.

- Flash Read Cache Reservation – should be left at 0 unless a specific VM has performance requirements (so that we can reserve flash read capacity for that VM).

- Proportional Capacity – should be left at 0 unless you need a thick provisioned VM. By default, all VMs are deployed as thin.

- Force Provisioned VMs will not be compliant, but will be brought to compliance when the resources become available.

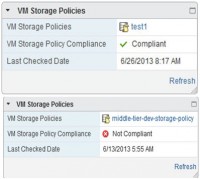

An example of compliance on the vSAN datastore – if the vSAN datastore can satisfy the capabilities that were placed in the VM storage policy, the VM will be shown as compliant. Otherwise, if there is a failure, or if you use the Force Provisioning capability, the VM will show as non-compliant.
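The compliance logic can be sketched in Python as follows. This is a simplified illustration that looks only at the number of failures to tolerate and the number of healthy hosts, using the “2N+1” hosts rule. The `Policy` class, the function names, and the "rejected" outcome for a policy that cannot be met without Force Provisioning are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """Hypothetical, heavily reduced VM storage policy."""
    failures_to_tolerate: int = 1
    force_provisioning: bool = False


def can_satisfy(policy: Policy, healthy_hosts: int) -> bool:
    """Tolerating N failures needs at least 2N+1 hosts."""
    return healthy_hosts >= 2 * policy.failures_to_tolerate + 1


def provision(policy: Policy, healthy_hosts: int) -> str:
    """Outcome of deploying a VM with this policy."""
    if can_satisfy(policy, healthy_hosts):
        return "compliant"
    if policy.force_provisioning:
        # Deployed anyway; stays non-compliant until resources are available.
        return "non-compliant"
    return "rejected"  # assumption: without force, provisioning fails


print(provision(Policy(failures_to_tolerate=1), healthy_hosts=3))
# compliant
print(provision(Policy(failures_to_tolerate=2), healthy_hosts=3))
# rejected
print(provision(Policy(2, force_provisioning=True), healthy_hosts=3))
# non-compliant
```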

vSAN Maintenance Mode

When a host enters maintenance mode, the data that resides on that host needs to be evacuated. Because the host has local storage, the data has to be moved elsewhere in order to stay available while the host is in maintenance mode. There are a few options to choose from, depending on your scenario:

- Ensure Accessibility – Enough data gets evacuated so the VMs can keep running.

- Full Data Migration – all the data is migrated to the other hosts in the vSAN cluster.

- No Data Migration – do nothing. For short maintenance only.

vSAN Limitations

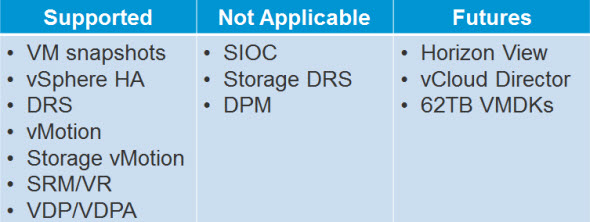

vSAN technology and vSAN datastores are compatible with most VMware technologies, such as HA, DRS or vMotion. There are, however, some limitations in version 1.0, which are shown in the image.

Note: VMware View will work but won't see the vSAN datastore…

VMware vSAN is one of the new features that has been introduced in VMware vSphere 5.5.

Update: You can get your hands on the vSAN beta! Just register for a free download at https://www.vsanbeta.com

vSphere 5.5 Release:

- VMware vSphere 5.5 – Storage enhancements and new configuration maximums

- vCD 5.5 – VMware vCloud Director 5.5 New and enhanced features

- VMware VDP 5.5 and VDP Advanced – With a DR for VDP!

- VMware vSphere 5.5 vFlash Read Cache with VFFS

- VMware vSphere Replication 5.5 – what's new?

- VMware VSAN introduced in vSphere 5.5 – How it works and what's the requirements? – this post

- VMware vSphere 5.5 Storage New Features

- VMware vSphere 5.5 Application High Availability – AppHA

- VMware vSphere 5.5 Networking New Features

- VMware vSphere 5.5 Low Latency Applications Enhancements

- ESXi 5.5 free Version has no more hard limitations of 32GB of RAM

- vCenter Server Appliance 5.5 (vCSA) – Installation and Configuration Video

vSAN looks nice, but what about synchronised IO? And the SSDs or SSAs (PCI) for write cache, do they distribute all blocks across all nodes?

A real-life situation would be:

3 hosts / 20 or 30 vms all running 10GB nics 800MB/s on three hosts would you then 800MB/s across ALL vmdk disks if you have a total power outage?

In other words, all the VMs on the vSAN would be instantly corrupted!!

The same thing would happen on a regular SAN… if power drops you’ll possibly have some sort of corruption to deal with. I think VMware would tackle the multiple hosts corrupt thing by electing hosts to manage particular VMs based on the placement. So if a VM lives on Host-A, Host-A would have leadership of that VM, or something like this. A write local will be quicker than the replication off to additional hosts so it makes sense to elect local.

vSAN is supposed to be VSAN, all caps. vSAN refers to another term.