VMware vSphere 5.5 introduces VMware vFlash Read Cache, which lets you pool local SSD devices together to form a flash storage tier. vFlash is integrated with vMotion, HA and DRS. The vFlash caching software is tightly integrated into the hypervisor (it sits in the data path) and is exposed as an API that third-party caching modules can also use. vFlash is a service within vSphere.

The vFlash Read Cache provides:

- Per-VMDK caching

- Hypervisor-based caching

What are the benefits of vFlash?

Customers can accelerate their VMs and applications while continuing to use shared storage as the primary storage for their existing VMs. It's important to note that the pooled flash resource is not fully featured storage: you don't get a new flash-based datastore. It is an accelerator for existing shared storage, so shared, persistent storage is still needed.

vFlash Infrastructure

The vFlash infrastructure is managed as a single pool: all the locally attached server SSDs appear in the vSphere Web Client GUI, which is where you manage the vFlash infrastructure.

Flash Pooling as a resource pool:

- vFlash appears as a new type of resource pool

- No flash is consumed when a VM is powered off

- vMotion and DRS can be used

- Resources are allocated per virtual object (VM, host…)

Flash resource management:

- Uses reservations and limits

- Allocates per VMDK or per VM (the configuration is done at the VM level)

- Enforces admission control

- Acts as a broker and manager for the entities that consume the resources
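To illustrate how reservations and admission control interact, here is a minimal Python sketch of a single host's flash pool. It is a conceptual model under simplifying assumptions, not VMware's implementation: each VMDK carries a reservation (limits are left out), a VM is allowed to power on only if its reservations still fit in the remaining capacity, and powered-off VMs consume nothing. All class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VmdkCacheRequest:
    """Hypothetical per-VMDK cache reservation (configured at the VM level)."""
    vmdk: str
    reservation_gb: float


@dataclass
class VirtualMachine:
    name: str
    disks: list[VmdkCacheRequest]
    powered_on: bool = False

    def reserved_gb(self) -> float:
        # Total flash this VM needs when it is running.
        return sum(d.reservation_gb for d in self.disks)


@dataclass
class FlashResourcePool:
    capacity_gb: float
    vms: dict[str, VirtualMachine] = field(default_factory=dict)

    def used_gb(self) -> float:
        # Powered-off VMs consume no flash.
        return sum(vm.reserved_gb() for vm in self.vms.values() if vm.powered_on)

    def power_on(self, vm: VirtualMachine) -> bool:
        """Admission control: admit the VM only if its reservations fit."""
        if vm.powered_on:
            return True
        if self.used_gb() + vm.reserved_gb() > self.capacity_gb:
            return False  # rejected: not enough unreserved flash left
        vm.powered_on = True
        self.vms[vm.name] = vm
        return True

    def power_off(self, vm: VirtualMachine) -> None:
        # Powering off releases the VM's flash back to the pool.
        vm.powered_on = False


pool = FlashResourcePool(capacity_gb=100)
db = VirtualMachine("db01", [VmdkCacheRequest("db01.vmdk", 60)])
web = VirtualMachine("web01", [VmdkCacheRequest("web01.vmdk", 50)])
print(pool.power_on(db))   # True
print(pool.power_on(web))  # False: 60 + 50 GB exceeds 100 GB
pool.power_off(db)
print(pool.power_on(web))  # True
```

The reservation is checked at power-on time, which mirrors the "no consumption when VM is powered off" rule listed above.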

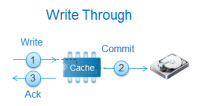

vFlash Release 1.0 supports Write-Through Cache (Read Only)

The first release supports write-through mode only: reads are accelerated, while writes go straight through to the backing storage. Write-back mode will be available in future releases. It's important to understand that the publicly available APIs give other storage companies the opportunity to integrate their own flash caching solutions.

This makes me think of PernixData, which does offer write-back mode in its flash-based storage hypervisor solution. I participated in the beta testing of their Flash Virtualization Platform (FVP), and you can read my detailed article about PernixData here.
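To make the write-through behavior described above concrete, the following Python sketch models a read cache in front of a backing store. It is a simplified illustration, not the vFlash data path: reads are served from the cache on a hit, while every write goes to the backing store (standing in for shared storage) before any cached copy is refreshed, so losing the cache never loses data. The class name is hypothetical and eviction is not modeled.

```python
class WriteThroughReadCache:
    """Hypothetical write-through read cache in front of a backing store."""

    def __init__(self, backing: dict[int, bytes]):
        self.backing = backing               # stands in for the shared datastore
        self.cache: dict[int, bytes] = {}    # stands in for the local flash
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.backing[block]           # fetch from shared storage
        self.cache[block] = data             # populate the cache for later reads
        return data

    def write(self, block: int, data: bytes) -> None:
        self.backing[block] = data           # write-through: persist first
        if block in self.cache:
            self.cache[block] = data         # keep the cached copy consistent


store = {0: b"old"}
cache = WriteThroughReadCache(store)
cache.read(0)           # miss: fetched from the backing store
cache.read(0)           # hit: served from the cache
cache.write(0, b"new")  # lands in the backing store immediately
print(store[0], cache.read(0), cache.hits, cache.misses)  # b'new' b'new' 2 1
```

Because the backing store always holds the latest data, the cache can be dropped at any time (for example when it is discarded during vMotion) without affecting correctness, only performance.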

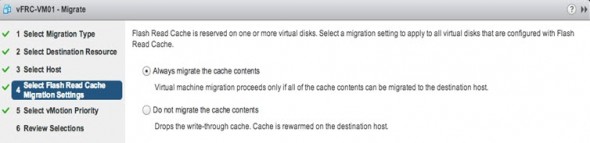

vFlash works with vMotion: the cache content can either be migrated along with the VM or discarded, as needed.

The main use case of vFlash – Acceleration of applications

As mentioned at the beginning of this post, the vFlash framework accelerates applications but does not provide storage features (such as the ability to create datastores). So the main benefit is application acceleration, for many kinds of applications: messaging, databases, collaboration applications, etc.

Another use case is VDI, where vFlash can significantly accelerate the deployment and execution of persistent and non-persistent desktops during phases such as cloning, recomposition, suspend/resume, boot storms and roaming profile storms.

New FileSystem – VFFS

In previous versions of VMware vSphere, SSDs could at most be used for swap caching (swap to SSD). You can still use that feature, and now, at the same time, you can also use the same flash device for vFlash Read Cache acceleration.

Once the flash resource has been created, two main features are available:

- Virtual Flash Host Swap Cache – a new name for an old function… -:). It lets you use the virtual flash resource for memory swapping. You can allocate just part of an SSD to swap (e.g. 4 or 5 GB).

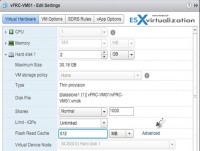

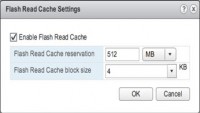

- Virtual Machine Virtual Flash Read Cache – transparent flash access that accelerates reads, with fine-grained control through per-VMDK caching and a variable block size (4 KB – 1 MB). The right block size depends on the I/O size of the application running in your VM: measure it (for example with vscsiStats), then set the vFlash block size to match for the best possible performance. vFlash and its configuration are visible only in the vSphere Web Client, so management and configuration have to be done there, not in the legacy Windows client.
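The block-size reasoning above can be sketched in Python. The helper below is hypothetical: it assumes you have turned vscsiStats output into a simple histogram mapping I/O size (in KB) to I/O count, and it assumes the selectable vFlash block sizes are the powers of two from 4 KB to 1 MB. It picks the smallest candidate that fits the most frequent I/O size; a real sizing decision may also weigh the rest of the distribution.

```python
# Assumed candidate block sizes: powers of two from 4 KB to 1 MB.
CANDIDATE_SIZES_KB = [4 * 2**i for i in range(9)]  # 4, 8, ..., 1024


def pick_block_size_kb(io_histogram: dict[int, int]) -> int:
    """Return the smallest candidate block size that fits the dominant I/O size.

    io_histogram maps an I/O size in KB to the number of I/Os observed
    (hypothetical input, e.g. transcribed from vscsiStats output).
    """
    if not io_histogram:
        raise ValueError("empty I/O size histogram")
    dominant_kb = max(io_histogram, key=io_histogram.get)
    for size in CANDIDATE_SIZES_KB:
        if size >= dominant_kb:
            return size
    # Larger than every candidate: cap at the biggest supported size.
    return CANDIDATE_SIZES_KB[-1]


histogram = {4: 1200, 8: 5300, 64: 800}  # hypothetical numbers
print(pick_block_size_kb(histogram))     # 8
print(pick_block_size_kb({6: 10}))       # 8
```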

vMotion migration possibilities

When you vMotion a VM whose disks are configured to use vFlash Read Cache, you have two options: migrate the cache content or not. If you choose not to migrate it, the cache has to be rewarmed at the destination, so the VM will perform more slowly until the cache is repopulated.
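The cost of not migrating the cache can be illustrated with a small Python simulation. This is a toy model under stated assumptions, not how vMotion actually transfers cache content: the cache is a hypothetical fixed-size LRU cache, "migrating" copies its contents to the destination, and "discarding" starts the destination cold. Replaying the same working set twice shows the cold cache missing on the whole first pass while it rewarms.

```python
from collections import OrderedDict


class LruReadCache:
    """Hypothetical fixed-size LRU read cache, counted in blocks."""

    def __init__(self, capacity_blocks: int):
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block id -> None, oldest first

    def access(self, block: int) -> bool:
        """Return True on a hit; on a miss, load the block (evicting the LRU one)."""
        if block in self.blocks:
            self.blocks.move_to_end(block)
            return True
        self.blocks[block] = None
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)
        return False


def vmotion(source: LruReadCache, migrate_cache: bool) -> LruReadCache:
    """Model the two vMotion choices: copy the cache content or start cold."""
    dest = LruReadCache(source.capacity)
    if migrate_cache:
        dest.blocks = OrderedDict(source.blocks)
    return dest


def hit_ratio(cache: LruReadCache, reads: list[int]) -> float:
    hits = sum(cache.access(b) for b in reads)
    return hits / len(reads)


src = LruReadCache(100)
for b in range(100):  # warm the source cache with a 100-block working set
    src.access(b)
workload = list(range(100)) * 2
print(hit_ratio(vmotion(src, migrate_cache=True), workload))   # 1.0
print(hit_ratio(vmotion(src, migrate_cache=False), workload))  # 0.5
```

The trade-off is the one described above: migrating moves more data during vMotion, while discarding makes the VM pay the rewarm misses afterwards.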

Hardware Requirements? Yes, the SSDs have to be on the HCL.

This might be a brake for some and a green light for others, but that's the way it is. Not every host in the vFlash cluster needs to have an SSD installed; however, a host without one won't be able to provide any vFlash resources.

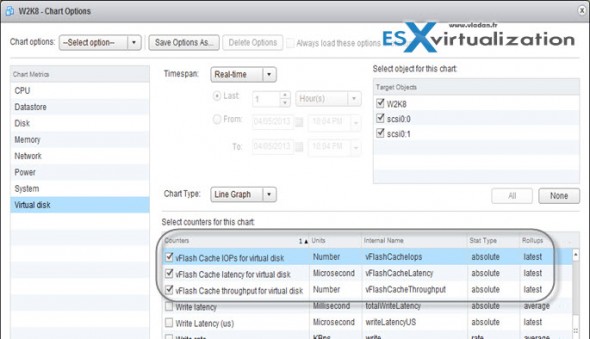

New Performance Statistics counters are available in vCenter.

These statistics are available through the vCenter Server performance manager:

- FlashCacheIOPs

- FlashCacheLatency

- FlashCacheThroughput

Statistics with greater detail are also available.

vSphere 5.5 Release:

- VMware vSphere 5.5 – Storage enhancements and new configuration maximums

- vCD 5.5 – VMware vCloud Director 5.5 New and enhanced features

- VMware VDP 5.5 and VDP Advanced – With a DR for VDP!

- VMware vSphere 5.5 vFlash Read Cache with VFFS – this post

- VMware vSphere Replication 5.5 – what's new?

- VMware VSAN introduced in vSphere 5.5 – How it works and what's the requirements?

- VMware vSphere 5.5 Storage New Features

- VMware vSphere 5.5 Application High Availability – AppHA

- VMware vSphere 5.5 Networking New Features

- VMware vSphere 5.5 Low Latency Applications Enhancements

- ESXi 5.5 free Version has no more hard limitations of 32GB of RAM

- vCenter Server Appliance 5.5 (vCSA) – Installation and Configuration Video

I’m thinking about what’s the worst that could happen if using non-approved SSDs, which would save a lot in a home or lab environment. Thanks for the great info!

For home labs there is usually no VMware support required, right? -:) So using non-approved hardware (hardware that is not on the HCL – http://www.vladan.fr/vmware-hcl-for-esxi-5-0/) is not really something to worry about in a home lab. Have your important data backed up, of course!!

So, SSDs only for shared storage? What happens if I have only one host? Can I still use this? Can I use this also if my shared storage is a DAS device connected through SAS cable?

It’s very unlikely (except in cases like ROBO or home builds) that you run VMs out of local storage. But a single-host scenario still lets you use SSDs for vFRC and HBRC (on the same SSD), simply by creating a cluster with a single host in it.

Concerning the second part of your question:

Your DAS device attached through a SAS cable is still DAS storage (physically separate from the server, but direct-attached, not shared).

In the article I linked to here (clicking on my name above), you’ll see a video of me corrupting some running VMs (because I was using an untested, unsupported SSD for caching reads and writes on my LSI 9265-8i RAID controller). Yeah, I saved a lot of $ over buying enterprise SSDs, and it hasn’t happened again with my Samsung 830 SSD (also unsupported) and newer firmware on the RAID adapter.

So yes, I would agree: be sure you’re backed up. The read-only caching you’re talking about would likely be far less risky anyway, and a lot easier for most folks to actually try out.

Thanks Vladan!

Hi, can I use vFlash with the free hypervisor or do I need vSphere Essentials?

Thanks!