Today's topic is again from the VCP6.5-DCV study guide. Our study guide page continues to fill up: VCP6.5-DCV Study guide. Today's chapter is VCP6.5-DCV Objective 7.2 – Troubleshoot vSphere Storage and Networking. Troubleshooting vSphere issues is not easy, but you need to know how to do it, both for the exam and in real life.

Concerning the VCP exam, you still have the choice between the latest certification exam, VCP6.5-DCV, and the older one, VCP6-DCV, which seems less demanding: it has four fewer chapters.

The exam has 70 questions (single and multiple choice), the passing score is 300, and you have 105 minutes to complete the test. We wish everyone good luck with the exam.

Check our VCP6.5-DCV Study Guide Page. Note that this guide is not meant to be perfect and complete, but you can use it as an additional resource alongside traditional study PDFs, books, Pluralsight trainings, etc.

You can download your free copy via this link – Download Free VCP6.5-DCV Study Guide at Nakivo.

VCP6.5-DCV Objective 7.2 – Troubleshoot vSphere Storage and Networking

- Identify and isolate network and storage resource contention and latency issues

- Verify network and storage configuration

- Verify that a given virtual machine is configured with the correct network resources

- Monitor/Troubleshoot Storage Distributed Resource Scheduler (SDRS) issues

- Recognize the impact of network and storage I/O control configurations

- Recognize a connectivity issue caused by a VLAN/PVLAN

- Troubleshoot common issues with:

    - Storage and network

    - Virtual switch and port group configuration

    - Physical network adapter configuration

    - VMFS metadata consistency

Identify and isolate network and storage resource contention and latency issues

We'll rely heavily on the VMware PDF called “vSphere Troubleshooting”, which is easy to find online. Several factors can slow down your storage:

SAN Performance Problems – A number of factors can negatively affect storage performance in the ESXi SAN environment. Among these factors are excessive SCSI reservations, path thrashing, and inadequate LUN queue depth.

To monitor storage performance in real time, use the resxtop and esxtop command-line utilities. For more information, see the vSphere Monitoring and Performance documentation.

Excessive SCSI Reservations Cause Slow Host Performance – When storage devices do not support hardware acceleration (VAAI), ESXi hosts use the SCSI reservation mechanism when performing operations that require a file lock or a metadata lock in VMFS. SCSI reservations lock the entire LUN. Excessive SCSI reservations by a host can cause performance degradation on other servers accessing the same VMFS.

Excessive SCSI reservations cause performance degradation and SCSI reservation conflicts – Several operations require VMFS to use SCSI reservations:

- Creating, resignaturing, or expanding a VMFS datastore.

- Powering on a virtual machine.

- Creating or deleting a file.

- Creating a template.

- Deploying a virtual machine from a template.

- Creating a new virtual machine.

- Migrating a virtual machine with vMotion.

- Growing a file, such as a thin provisioned virtual disk.

To eliminate potential sources of SCSI reservation conflicts, follow these guidelines:

- Serialize operations on the shared LUNs; if possible, limit the number of operations on different hosts that require SCSI reservations at the same time.

- Increase the number of LUNs and limit the number of hosts accessing the same LUN.

- Reduce the number of snapshots. Snapshots cause numerous SCSI reservations.

- Reduce the number of virtual machines per LUN. Follow recommendations in Configuration Maximums.

- Make sure that you have the latest HBA firmware across all hosts.

- Make sure that the host has the latest BIOS.

- Ensure a correct Host Mode setting on the SAN array.

Path Thrashing Causes Slow LUN Access – If your ESXi host is unable to access a LUN, or access is very slow, you might have a problem with path thrashing, also called LUN thrashing.

Path thrashing might occur when two hosts access the same LUN through different storage processors (SPs) and, as a result, the LUN is never available.

Path thrashing typically occurs on active-passive arrays. Path thrashing can also occur on a directly connected array with HBA failover on one or more nodes. Active-active arrays or arrays that provide transparent failover do not cause path thrashing. To resolve path thrashing:

- Ensure that all hosts that share the same set of LUNs on the active-passive arrays use the same storage processor.

- Correct any cabling or masking inconsistencies between different hosts and SAN targets so that all HBAs see the same targets.

- Ensure that the claim rules defined on all hosts that share the LUNs are exactly the same.

- Configure the path to use the Most Recently Used PSP, which is the default for active-passive arrays.
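The fixes above are essentially consistency checks across hosts. As a minimal sketch (the host names, SP labels and claim-rule identifiers are hypothetical, and gathering them from each host, for example with esxcli storage core claimrule list, is left out), the following Python flags the two inconsistencies most likely to cause thrashing on an active-passive array:

```python
from typing import Dict, FrozenSet, List


def sp_mismatch(array_type: str, sp_by_host: Dict[str, str]) -> bool:
    """True if hosts sharing a LUN on an active-passive array use different SPs."""
    if array_type != "active-passive":
        # Active-active arrays (or arrays with transparent failover)
        # do not cause path thrashing, so there is nothing to flag.
        return False
    return len(set(sp_by_host.values())) > 1


def hosts_with_divergent_claim_rules(rules_by_host: Dict[str, FrozenSet[str]]) -> List[str]:
    """Return the hosts whose claim rules differ from the most common rule set."""
    if not rules_by_host:
        return []
    counts: Dict[FrozenSet[str], int] = {}
    for rules in rules_by_host.values():
        counts[rules] = counts.get(rules, 0) + 1
    reference = max(counts, key=counts.get)  # the majority rule set
    return sorted(host for host, rules in rules_by_host.items() if rules != reference)


# Hypothetical example: esx03 uses SP-B while the other hosts use SP-A.
sp_by_host = {"esx01": "SP-A", "esx02": "SP-A", "esx03": "SP-B"}
print(sp_mismatch("active-passive", sp_by_host))  # True

rules = {
    "esx01": frozenset({"rule-101", "rule-102"}),
    "esx02": frozenset({"rule-101", "rule-102"}),
    "esx03": frozenset({"rule-101"}),
}
print(hosts_with_divergent_claim_rules(rules))  # ['esx03']
```

Using the most common rule set as the reference is a design choice: it points at the odd host out instead of reporting every host as inconsistent.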

Increased Latency for I/O Requests Slows Virtual Machine Performance – If the ESXi host generates more commands to a LUN than the LUN queue depth permits, the excess commands are queued in the VMkernel. This increases the latency, or the time taken to complete I/O requests.

The host takes longer to complete I/O requests and virtual machines display unsatisfactory performance.

The problem might be caused by an inadequate LUN queue depth. SCSI device drivers have a configurable parameter called the LUN queue depth that determines how many commands to a given LUN can be active at one time.

If the sum of active commands from all virtual machines consistently exceeds the LUN queue depth, increase the queue depth.

The procedure that you use to increase the queue depth depends on the type of storage adapter the host uses.

When multiple virtual machines are active on a LUN, change the “Disk.SchedNumReqOutstanding” (DSNRO) parameter so that it matches the queue depth value.
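To make the queue-depth arithmetic concrete, here is a simplified Python model. It assumes the behavior described above: with one active VM the LUN queue depth limits outstanding commands, with several active VMs the lower of DSNRO and the queue depth applies, and anything beyond that limit waits in the VMkernel. The values 64 and 32 are illustrative, not the defaults of any particular adapter, and ESXi features such as adaptive queue depth throttling are not modeled.

```python
def effective_limit(queue_depth: int, dsnro: int, active_vms: int) -> int:
    """Commands allowed in flight to one LUN at a time (simplified model).

    With a single active VM the device queue depth applies; with several
    VMs active on the same LUN, the lower of DSNRO and the queue depth applies.
    """
    if active_vms <= 1:
        return queue_depth
    return min(queue_depth, dsnro)


def queued_in_vmkernel(active_commands: int, limit: int) -> int:
    """Commands above the limit wait in the VMkernel, which adds latency."""
    return max(0, active_commands - limit)


# Hypothetical workload: 4 VMs with 20 outstanding I/Os each on one LUN.
vms, per_vm = 4, 20
active = vms * per_vm  # 80 commands

limit = effective_limit(queue_depth=64, dsnro=32, active_vms=vms)
print(limit, queued_in_vmkernel(active, limit))  # 32 48

# Raising DSNRO to match the queue depth lets more commands through.
limit = effective_limit(queue_depth=64, dsnro=64, active_vms=vms)
print(limit, queued_in_vmkernel(active, limit))  # 64 16
```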

Resolving Network Latency Issues – Networking latency issues could potentially be caused by the following infrastructure elements:

- External Switch

- NIC configuration and bandwidth (1G/10G/40G)

- CPU contention

Verify network and storage configuration

Today's topic, VCP6.5-DCV Objective 7.2 – Troubleshoot vSphere Storage and Networking, is a really hard one. You need some hands-on experience with a VMware vSphere environment, whether from work or from labs. I highly encourage readers who want to pass this exam to build a home lab or do as many online labs as they can in order to get to know the vSphere environment.

When troubleshooting, start from one end: either from the host level (physical switch > uplinks > virtual switches > port groups > VMs), or from the other side, starting at the VM.
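The “start from one end” approach amounts to an ordered walk over layers that stops at the first failure. This Python sketch is purely illustrative: the layer names follow the order above, and the check functions are hypothetical placeholders for whatever verification you perform at each layer.

```python
from typing import Callable, List, Optional, Tuple

# Each layer is (name, check); a check returns True when the layer looks healthy.
Layer = Tuple[str, Callable[[], bool]]


def first_failing_layer(layers: List[Layer], from_vm_side: bool = False) -> Optional[str]:
    """Walk the layers from one end and return the first one whose check fails."""
    ordered = list(reversed(layers)) if from_vm_side else layers
    for name, check in ordered:
        if not check():
            return name
    return None  # every layer passed its check


# Hypothetical results: everything is fine except the port group (e.g. wrong VLAN ID).
layers: List[Layer] = [
    ("physical switch", lambda: True),
    ("host uplinks", lambda: True),
    ("virtual switch", lambda: True),
    ("port group", lambda: False),
    ("vNIC / guest OS", lambda: True),
]
print(first_failing_layer(layers))                     # port group
print(first_failing_layer(layers, from_vm_side=True))  # port group
```

When more than one layer is broken, the direction you start from decides which problem you find first; fix it, then walk the layers again.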

- Check the vNIC status – connected/disconnected

- Check the networking config inside the Guest OS – yes, a bad network configuration inside the VM might also be the issue.

- Verify physical switch config.

- Check the vSwitch or vDS config.

- ESXi host network (uplinks).

- Check Guest OS config.

In a Windows VM, do the following:

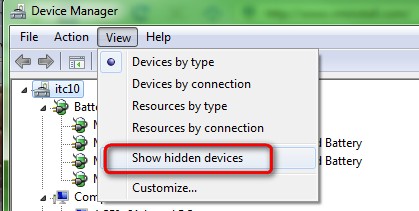

Click Start > Run, type “devmgmt.msc”, expand Network adapters, and check that the adapter is present and not disabled.

You can also check the network configuration, such as the IP address, netmask, default gateway and DNS servers. Make sure that this information is correct.

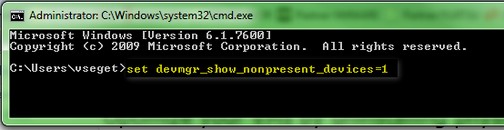

- If the VM was created by a P2V conversion, check that there are no “ghosted” (hidden, non-present) network adapters. To check that:

On your VM, go to Start > Run, type “cmd” and press Enter. In the command prompt, type:

set devmgr_show_nonpresent_devices=1

While still in the command prompt window, type:

devmgmt.msc

Device Manager opens. From the menu, go to View > Show Hidden Devices (as shown in the picture).

You should now see the ghosted devices: they are grayed out. You can safely remove those devices from Device Manager.

- Check the IP stack – Several times I have seen a VM whose IP stack was corrupted: the VM had intermittent network connectivity, and everything seemed to be OK but wasn't. On Windows, you can release and renew the IP configuration by entering:

ipconfig /release

ipconfig /renew

For Linux:

dhclient -r

dhclient eth0

Verify storage configuration

Verify the Connection Status of a Storage Device

Use the esxcli command to verify the connection status of a particular storage device. To run esxcli remotely, install vCLI or deploy the vSphere Management Assistant (vMA) virtual machine (see Getting Started with vSphere Command-Line Interfaces). For troubleshooting, you can also run esxcli commands directly in the ESXi Shell, in which case you omit the --server option.

Run the command:

esxcli --server=server_name storage core device list -d=device_ID

Review the connection status in the Status: area.

- on – Device is connected.

- dead – Device has entered the APD (All Paths Down) state. The APD timer starts.

- dead timeout – The APD timeout has expired.

- not connected – Device is in the PDL (Permanent Device Loss) state.
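The Status: field can also be checked programmatically across many devices. A minimal Python sketch, assuming you have saved the plain-text output of esxcli storage core device list to a string: device IDs start at column 0 and their properties follow as indented “Key: Value” lines. The sample below is abbreviated, the device IDs are made up, and the exact field layout may differ between ESXi versions.

```python
from typing import Dict, Optional

# Meanings taken from the status list above.
STATUS_MEANING = {
    "on": "connected",
    "dead": "APD (All Paths Down); the APD timer has started",
    "dead timeout": "APD timeout has expired",
    "not connected": "PDL (Permanent Device Loss)",
}

# Abbreviated, hypothetical sample output.
SAMPLE = """\
naa.60000000000000000000000000000001
   Display Name: Example FC Disk (naa.60000000000000000000000000000001)
   Size: 102400
   Status: on
naa.60000000000000000000000000000002
   Display Name: Example FC Disk (naa.60000000000000000000000000000002)
   Size: 102400
   Status: dead timeout
"""


def parse_device_list(text: str) -> Dict[str, Dict[str, str]]:
    """Group indented 'Key: Value' lines under the unindented device ID above them."""
    devices: Dict[str, Dict[str, str]] = {}
    current: Optional[str] = None
    for line in text.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace():
            current = line.strip()  # a new device block starts
            devices[current] = {}
        elif current is not None and ":" in line:
            key, _, value = line.strip().partition(":")
            devices[current][key.strip()] = value.strip()
    return devices


def explain_status(fields: Dict[str, str]) -> str:
    status = fields.get("Status", "")
    return STATUS_MEANING.get(status, f"unknown status '{status}'")


for device_id, fields in parse_device_list(SAMPLE).items():
    print(device_id, "->", explain_status(fields))
```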

Verify that a given virtual machine is configured with the correct network resources

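Same as the section above (for Windows and/or Linux VMs). Also check in the virtual machine's settings that each network adapter is connected and attached to the correct port group.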

Monitor/Troubleshoot Storage Distributed Resource Scheduler (SDRS) issues

Storage Issues – Check that the virtual machine has no underlying storage issues and is not experiencing resource contention, as this might result in networking issues with the virtual machine. You can check this by connecting to ESX/ESXi or Virtual Center/vCenter Server with the VI/vSphere Client and opening the virtual machine console.

Storage DRS is Disabled on a Virtual Disk – Even when Storage DRS is enabled for a datastore cluster, it might be disabled on one or more virtual machine disks in the datastore cluster.

Several scenarios can cause Storage DRS to be disabled on a virtual disk.

(Sections from VMware documentation).

- A virtual machine’s swap file is host-local (the swap file is stored in a specified datastore that is on the host). The swap file cannot be relocated and Storage DRS is disabled for the swap file disk.

- A certain location is specified for a virtual machine’s .vmx swap file. The swap file cannot be relocated and Storage DRS is disabled on the .vmx swap file disk.

- The relocate or Storage vMotion operation is currently disabled for the virtual machine in vCenter Server (for example, because other vCenter Server operations are in progress on the virtual machine). Storage DRS is disabled until the relocate or Storage vMotion operation is re-enabled in vCenter Server.

- The home disk of a virtual machine is protected by vSphere HA and relocating it will cause loss of vSphere HA protection.

- The disk is a CD-ROM/ISO file.

- If the disk is an independent disk, Storage DRS is disabled, except in the case of relocation or clone placement.

- If the virtual machine has system files on a separate datastore from the home datastore (legacy), Storage DRS is disabled on the home disk. If you use Storage vMotion to manually migrate the home disk, the system files on different datastores will all be located on the target datastore and Storage DRS will be enabled on the home disk.

- If the virtual machine has a disk whose base/redo files are spread across separate datastores (legacy), Storage DRS for the disk is disabled. If you use Storage vMotion to manually migrate the disk, the files on different datastores will all be located on the target datastore and Storage DRS will be enabled on the disk.

- The virtual machine has hidden disks (such as disks in previous snapshots, not in the current snapshot). This situation causes Storage DRS to be disabled on the virtual machine.

- The virtual machine is a template.

- The virtual machine is vSphere Fault Tolerance-enabled.

- The virtual machine is sharing files between its disks.

- The virtual machine is being Storage DRS-placed with manually specified datastores.
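When you have many VMs to review, the conditions above work as a checklist. The following Python sketch encodes a subset of them; the VmInfo class and its field names are hypothetical, and populating them (for example from vCenter) is outside its scope.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VmInfo:
    """Hypothetical summary of a VM; the field names are illustrative only."""
    is_template: bool = False
    ft_enabled: bool = False
    has_hidden_snapshot_disks: bool = False
    host_local_swap: bool = False
    shares_files_between_disks: bool = False


def sdrs_blockers(vm: VmInfo) -> List[str]:
    """Return the reasons (from the list above) why Storage DRS is disabled."""
    checks = [
        (vm.is_template, "the virtual machine is a template"),
        (vm.ft_enabled, "the virtual machine is Fault Tolerance-enabled"),
        (vm.has_hidden_snapshot_disks, "the virtual machine has hidden (snapshot) disks"),
        (vm.host_local_swap, "the swap file is host-local, so SDRS is disabled for the swap file disk"),
        (vm.shares_files_between_disks, "the virtual machine shares files between its disks"),
    ]
    return [reason for flag, reason in checks if flag]


for reason in sdrs_blockers(VmInfo(ft_enabled=True, host_local_swap=True)):
    print(reason)
```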

Storage DRS Cannot Operate on a Datastore – Storage DRS generates an event and an alarm to indicate that it cannot operate on the datastore.

vCenter Server can disable Storage DRS for a datastore in the following cases:

- The datastore is shared across multiple data centers.

Storage DRS is not supported on datastores that are shared across multiple data centers. This configuration can occur when a host in one data center mounts a datastore in another data center, or when a host using the datastore is moved to a different data center. When a datastore is shared across multiple data centers, Storage DRS I/O load balancing is disabled for the entire datastore cluster. However, Storage DRS space balancing remains active for all datastores in the datastore cluster that are not shared across data centers.

- The datastore is connected to an unsupported host.

Storage DRS is not supported on ESX/ESXi 4.1 and earlier hosts.

- The datastore is connected to a host that is not running Storage I/O Control.

To resolve the problem:

- The datastore must be visible in only one data center. Move the hosts to the same data center or unmount the datastore from hosts that reside in other data centers.

- Ensure that all hosts associated with the datastore cluster are ESXi 5.0 or later.

- Ensure that all hosts associated with the datastore cluster have Storage I/O Control enabled.
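The three fixes above can be checked together before enabling Storage DRS. A minimal Python sketch, assuming you have already gathered each connected host's version, Storage I/O Control state and data center into the hypothetical HostInfo records below:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class HostInfo:
    """Hypothetical per-host facts for hosts that mount the datastore."""
    name: str
    version: Tuple[int, int]  # (major, minor), e.g. (6, 5)
    sioc_enabled: bool
    datacenter: str


def sdrs_prereq_problems(hosts: List[HostInfo]) -> List[str]:
    """List the conditions from the text above that would block Storage DRS."""
    problems: List[str] = []
    datacenters = {h.datacenter for h in hosts}
    if len(datacenters) > 1:
        problems.append("datastore is shared across data centers: " + ", ".join(sorted(datacenters)))
    for h in hosts:
        if h.version < (5, 0):
            problems.append(f"{h.name}: version {h.version[0]}.{h.version[1]} is not supported (needs ESXi 5.0 or later)")
        if not h.sioc_enabled:
            problems.append(f"{h.name}: Storage I/O Control is not enabled")
    return problems


hosts = [
    HostInfo("esx01", (6, 5), True, "DC1"),
    HostInfo("esx02", (4, 1), False, "DC2"),
]
for problem in sdrs_prereq_problems(hosts):
    print(problem)
```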

Datastore Cannot Enter Maintenance Mode – You place a datastore in maintenance mode when you must take it out of use to service it. A datastore enters or leaves maintenance mode only as a result of a user request. Sometimes a datastore in a datastore cluster cannot enter maintenance mode, and the Entering Maintenance Mode status remains at 1%.

The cause is that one or more disks on the datastore cannot be migrated with Storage vMotion. This condition can occur in the following instances:

- Storage DRS is disabled on the disk.

- Storage DRS rules prevent Storage DRS from making migration recommendations for the disk.

To resolve the problem:

- If Storage DRS is disabled, enable it or determine why it is disabled.

- If Storage DRS rules are preventing Storage DRS from making migration recommendations, you can remove or disable particular rules.

How?

In the vSphere Web Client object navigator, browse to the datastore cluster, click the Manage tab > Settings, under Configuration select Rules, select the rule, and click Remove.

- Alternatively, if Storage DRS rules are preventing Storage DRS from making migration recommendations, you can set the Storage DRS advanced option IgnoreAffinityRulesForMaintenance to 1.

    - Browse to the datastore cluster in the vSphere Web Client object navigator.

    - Click the Manage tab and click Settings.

    - Select SDRS and click Edit.

    - In Advanced Options > Configuration Parameters, click Add.

    - In the Option column, enter IgnoreAffinityRulesForMaintenance.

    - In the Value column, enter 1 to enable the option.

    - Click OK.

Recognize the impact of network and storage I/O control configurations

What is vSphere Storage I/O Control? – vSphere Storage I/O Control allows cluster-wide storage I/O prioritization, which allows better workload consolidation and helps reduce the extra costs associated with over-provisioning.

Storage I/O Control extends the constructs of shares and limits to handle storage I/O resources. You can control the amount of storage I/O that is allocated to virtual machines during periods of I/O congestion, which ensures that more important virtual machines get preference over less important virtual machines for I/O resource allocation.

When you enable Storage I/O Control on a datastore, ESXi begins to monitor the device latency that hosts observe when communicating with that datastore. When device latency exceeds a threshold, the datastore is considered to be congested and each virtual machine that accesses that datastore is allocated I/O resources in proportion to its shares. You set shares per virtual machine and can adjust the number for each one as needed.

The I/O filter framework (VAIO) allows VMware and its partners to develop filters that intercept I/O for each VMDK and provide the desired functionality at VMDK granularity. VAIO works alongside Storage Policy-Based Management (SPBM), which allows you to set the filter preferences through a storage policy that is attached to VMDKs.

By default, all virtual machine shares are set to Normal (1000) with unlimited IOPS. Storage I/O Control is enabled by default on Storage DRS-enabled datastore clusters.
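To see what proportional shares mean in practice, this simplified Python sketch divides a congested datastore's available IOPS among VMs according to their shares. It assumes the Low/Normal/High presets of 500/1000/2000 and ignores IOPS limits; it also ignores that Storage I/O Control enforces the split through per-host device queue throttling rather than a central IOPS budget. The allocation only applies while latency exceeds the congestion threshold; below it, no throttling happens. The VM names and the 7000 IOPS figure are hypothetical.

```python
from typing import Dict

# Share presets (Normal = 1000 is the default, per the text above).
SHARES = {"Low": 500, "Normal": 1000, "High": 2000}


def split_under_congestion(available_iops: float, vm_shares: Dict[str, int]) -> Dict[str, float]:
    """Divide the datastore's available IOPS in proportion to each VM's shares.

    Simplified model: limits, reservations and per-host queue mechanics are ignored.
    """
    total = sum(vm_shares.values())
    return {vm: available_iops * s / total for vm, s in vm_shares.items()}


vm_shares = {"sql01": SHARES["High"], "web01": SHARES["Normal"], "test01": SHARES["Low"]}
print(split_under_congestion(7000, vm_shares))
# {'sql01': 4000.0, 'web01': 2000.0, 'test01': 1000.0}
```

The VM with High shares gets twice what a Normal VM gets and four times what a Low VM gets, regardless of how many IOPS are available.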

Network I/O – vSphere Network I/O Control version 3 introduces a mechanism to reserve bandwidth for system traffic based on the capacity of the physical adapters on a host. It enables fine-grained resource control at the VM network adapter level similar to the model that you use for allocating CPU and memory resources.

Version 3 of the Network I/O Control feature offers improved network resource reservation and allocation across the entire switch.

Models for Bandwidth Resource Reservation – Network I/O Control version 3 supports separate models for resource management of system traffic related to infrastructure services, such as vSphere Fault Tolerance, and of virtual machines.

The two traffic categories are different in nature. System traffic is strictly associated with an ESXi host, whereas the network traffic routes of a virtual machine change when you migrate it across the environment. To provide network resources to a virtual machine regardless of its host, Network I/O Control lets you configure resource allocation for virtual machines that is valid in the scope of the entire distributed switch.

Bandwidth Guarantee to Virtual Machines – Network I/O Control version 3 provisions bandwidth to the network adapters of virtual machines by using constructs of shares, reservation and limit. Based on these constructs, to receive sufficient bandwidth, virtualized workloads can rely on admission control in vSphere Distributed Switch, vSphere DRS and vSphere HA.

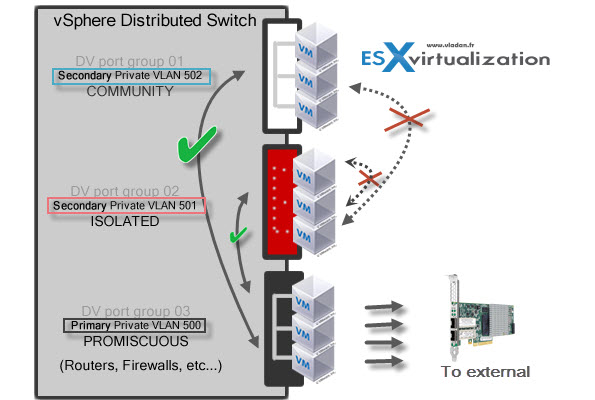
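Reservations are subject to admission control. A minimal Python sketch of that check on a single uplink, assuming the NIOC version 3 rule that no more than 75% of a physical adapter's bandwidth can be reserved (verify this figure against the documentation for your vSphere version); the uplink size and existing reservations are hypothetical:

```python
from typing import List

# Assumption: NIOC v3 lets you reserve at most 75% of a physical adapter's capacity.
MAX_RESERVABLE_FRACTION = 0.75


def fits_reservation(link_mbps: int, existing_mbps: List[int], requested_mbps: int) -> bool:
    """Admission check: does a new bandwidth reservation fit on this uplink?"""
    budget = link_mbps * MAX_RESERVABLE_FRACTION
    return sum(existing_mbps) + requested_mbps <= budget


# Hypothetical 10 GbE uplink that already has 1000 Mbps + 500 Mbps reserved.
existing = [1000, 500]
print(fits_reservation(10_000, existing, 5_000))  # True  (6500 <= 7500)
print(fits_reservation(10_000, existing, 7_000))  # False (8500 > 7500)
```

A request that fails this check is what you see as a VM that cannot power on or cannot be placed on a host, because the reservation cannot be guaranteed there.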

Recognize a connectivity issue caused by a VLAN/PVLAN

If you're not familiar with VLANs/PVLANs, I recommend reading Objective 2.1 – Configure policies/features and verify vSphere networking.

VLANs let you segment a network into multiple logical broadcast domains at Layer 2 of the network protocol stack. Virtual LANs (VLANs) enable a single physical LAN segment to be further isolated so that groups of ports are isolated from one another as if they were on physically different segments. Private VLANs are used to solve VLAN ID limitations by adding a further segmentation of the logical broadcast domain into multiple smaller broadcast subdomains.

The VLAN configuration in a vSphere environment allows you to:

- Integrate ESXi hosts into a pre-existing VLAN topology.

- Isolate and secure network traffic.

- Reduce congestion of network traffic.


A private VLAN (PVLAN) is identified by its primary VLAN ID. A primary VLAN ID can have multiple secondary VLAN IDs associated with it. Primary VLANs are Promiscuous, so that ports on a private VLAN can communicate with ports configured as the primary VLAN. Ports on a secondary VLAN can be either Isolated, communicating only with promiscuous ports, or Community, communicating with both promiscuous ports and other ports on the same secondary VLAN.

The graphics show it all…

To use private VLANs between a host and the rest of the physical network, the physical switch connected to the host needs to be private VLAN-capable and configured with the VLAN IDs that ESXi uses for the private VLAN functionality. For physical switches using dynamic MAC+VLAN ID based learning, all corresponding private VLAN IDs must first be entered into the switch’s VLAN database.
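The PVLAN rules above translate directly into a reachability check, which helps when a VM can reach its gateway but not a neighbor. This Python sketch models only the Layer 2 rules described in this section, not the physical switch configuration; the VLAN IDs in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PvlanPort:
    primary: int    # primary VLAN ID that identifies the private VLAN
    secondary: int  # equals the primary ID for promiscuous ports
    kind: str       # "promiscuous", "isolated" or "community"


def can_talk(a: PvlanPort, b: PvlanPort) -> bool:
    """Layer 2 reachability between two ports according to the PVLAN rules."""
    if a.primary != b.primary:
        return False  # different private VLANs are separate Layer 2 domains
    if "promiscuous" in (a.kind, b.kind):
        return True  # promiscuous ports reach every port in the PVLAN
    if a.kind == b.kind == "community":
        return a.secondary == b.secondary  # only within the same community
    return False  # isolated ports reach promiscuous ports only


gateway = PvlanPort(100, 100, "promiscuous")
iso1 = PvlanPort(100, 101, "isolated")
iso2 = PvlanPort(100, 101, "isolated")
web1 = PvlanPort(100, 102, "community")
web2 = PvlanPort(100, 102, "community")
db1 = PvlanPort(100, 103, "community")

print(can_talk(iso1, gateway), can_talk(iso1, iso2))  # True False
print(can_talk(web1, web2), can_talk(web1, db1))      # True False
```

A typical symptom: two VMs on isolated ports of the same private VLAN can both reach the promiscuous port (for example, the default gateway) but not each other. That is expected behavior, not a fault.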

Troubleshoot common issues with:

- Storage and network – please refer to earlier sections.

- Virtual switch and port group configuration – same.

- Physical network adapter configuration – same.

- VMFS metadata consistency – Use vSphere On-disk Metadata Analyzer (VOMA) to identify incidents of metadata corruption that affect file systems or underlying logical volumes. You can check metadata consistency when you experience problems with a VMFS datastore or a virtual flash resource (see the VMware KB article on using vSphere On-disk Metadata Analyzer).

A difficult chapter again, but necessary to know for the exam. Again, use the “vSphere Troubleshooting” PDF to work through it.

Check our VCP6.5-DCV Study Guide Page.

Usually the holiday season offers more time for study (for those who are motivated to pass an exam), but at the same time it's twice as hard, as you usually want to spend more time with your family and friends. But that's life: nothing is “free”, and only hard work gets you the results you dream of. A VCP certification is certainly a good starting point to put yourself on track to find a better job, get recognition and perhaps make more money. I wish you good luck with your study. Stay tuned for other lessons.
