We continue to fill our VCP6.5-DCV Study Guide page with one objective per day. Today's chapter is VCP6.5-DCV Objective 3.1 – Manage vSphere Integration with Physical Storage. The chapters are published in no particular order.

vSphere 6.5 now has its own certification exam. Not many guides are online so far, so we thought it was a good time to put one together.

I think the majority of new VCP candidates right now will go for the VCP6.5-DCV, because it's better to have the latest VMware certification exam on the CV, even if the VCP6-DCV seems less demanding. There are fewer topics to know.

Exam Price: $250 USD, there are 70 Questions (single and multiple answers), passing score 300, and you have 105 min to complete the test.

You might want to get the vSphere Storage PDF covering vSphere 6.5, ESXi 6.5 and vCenter Server 6.5 Storage Guide (PDF)

Check our VCP6.5-DCV Study Guide Page.

You can download your free copy via this link – Download Free VCP6.5-DCV Study Guide at Nakivo.

VCP6.5-DCV Objective 3.1 – Manage vSphere Integration with Physical Storage

- Perform NFS v3 and v4.1 configurations

- Discover new storage LUNs

- Configure FC/iSCSI/FCoE LUNs as ESXi boot devices

- Mount an NFS share for use with vSphere

- Enable/Configure/Disable vCenter Server storage filters

- Configure/Edit hardware/dependent hardware initiators

- Enable/Disable software iSCSI initiator

- Configure/Edit software iSCSI initiator settings

- Configure iSCSI port binding

- Enable/Configure/Disable iSCSI CHAP

- Determine use cases for Fiber Channel zoning

- Compare and contrast array thin provisioning and virtual disk thin provisioning

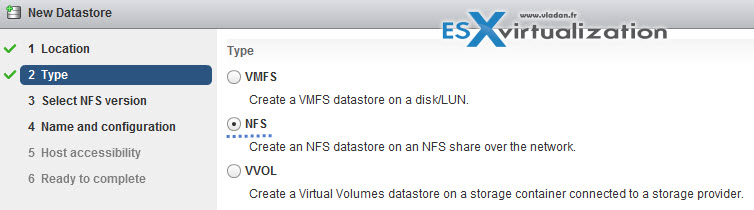

Perform NFS v3 and v4.1 configurations

NFS (Network File System) shares can be mounted by an ESXi host, which uses its built-in NFS client. NFS datastores support vMotion and Storage vMotion, HA, DRS, FT, and host profiles.

- First, on the NFS server, set up an NFS volume and export it so that it can be mounted on the ESXi hosts.

- You'll need the IP address or DNS name (FQDN) of the NFS server, and the full path, or folder name, of the NFS share.

- Each ESXi host must have a VMkernel network port configured for NFS traffic.

- For NFS 4.1, you can collect multiple IP addresses or DNS names to use the multipathing support that the NFS 4.1 datastore provides.

- To secure the communication between the NFS server and the ESXi hosts, you can use Kerberos authentication with the NFS 4.1 datastore. In that case, you'll have to configure the ESXi hosts for Kerberos authentication.
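The checklist above can be turned into a small pre-flight check. This is only a sketch in Python: the `NfsMountSpec` class and `validate` function are hypothetical helpers written for this guide, not part of any VMware tool, and the addresses are documentation examples.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NfsMountSpec:
    """Hypothetical container for the details gathered before mounting."""
    version: str              # "3" or "4.1"
    servers: List[str]        # IP addresses or FQDNs of the NFS server
    share_path: str           # full exported path, e.g. "/export/vmstore"
    datastore_name: str
    use_kerberos: bool = False


def validate(spec: NfsMountSpec) -> List[str]:
    """Return a list of problems; an empty list means the spec looks sane."""
    problems = []
    if spec.version not in ("3", "4.1"):
        problems.append("version must be 3 or 4.1")
    if not spec.servers:
        problems.append("at least one server address is required")
    if spec.version == "3" and len(spec.servers) > 1:
        problems.append("multiple addresses are only used for NFS 4.1 multipathing")
    if not spec.share_path.startswith("/"):
        problems.append("share path should be the full exported path")
    if spec.use_kerberos and spec.version != "4.1":
        problems.append("Kerberos applies to NFS 4.1 datastores")
    return problems


spec = NfsMountSpec("4.1", ["192.0.2.10", "192.0.2.11"], "/export/vmstore", "nfs41-ds", True)
print(validate(spec))
```

The check does not touch the network; it only catches a spec that mixes NFS 3 with 4.1-only options before you start the wizard.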

Discover new storage LUNs

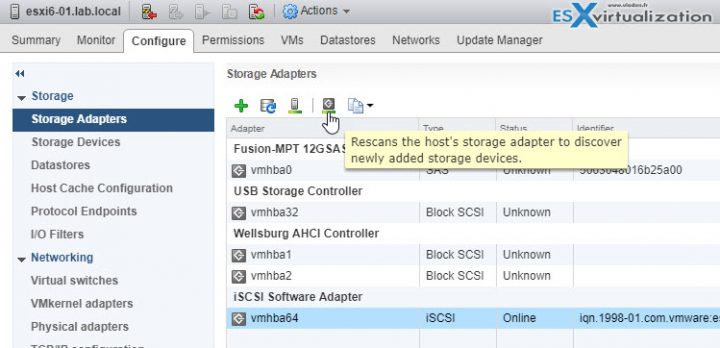

When you change the SAN configuration, you might need to rescan your storage to see the changes.

You can disable the automatic rescan feature by turning off the Host Rescan Filter. But by default, when you perform VMFS datastore management operations, such as creating a VMFS datastore or RDM, adding an extent, and increasing or deleting a VMFS datastore, your host or the vCenter Server automatically rescans and updates your storage.

Manual Adapter Rescan – If the changes you make are isolated to storage connected through a specific adapter, perform a rescan for this adapter. In certain cases, you need to perform a manual rescan. You can rescan all storage available to your host or to all hosts in a folder, cluster, and data center.

Perform a manual rescan each time you make one of the following changes:

- Zone a new disk array on a SAN.

- Create new LUNs on a SAN.

- Change the path masking on a host.

- Reconnect a cable.

- Change CHAP settings (iSCSI only).

- Add or remove discovery or static addresses (iSCSI only).

- Add a single host to the vCenter Server after you have edited or removed from the vCenter Server a datastore shared by the vCenter Server hosts and the single host.

Where?

vSphere Web Client > Host > Configure > Storage > Storage Adapters > select adapter to rescan.

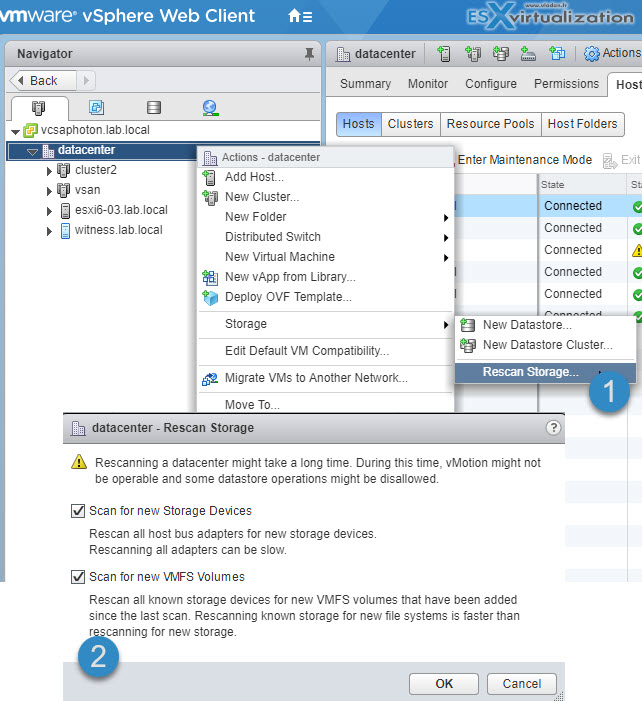

Storage Rescan – When you make changes in your SAN configuration you might need to rescan your storage. You can rescan all storage available to your host, cluster, or data center. If the changes you make are isolated to storage accessed through a specific host, perform the rescan for only this host.

Where?

vSphere Web Client > Browse to a host, a cluster, a data center, or a folder that contains hosts > Right-click > Storage > Rescan Storage.
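The choice between an adapter rescan and a wider storage rescan comes down to how far the change reaches. Here is a rough Python sketch of that decision; the function name and the host and adapter names are made up for illustration.

```python
def rescan_scope(affected):
    """Pick the narrowest rescan that covers a change.

    `affected` maps a host name to the list of adapters on that host
    whose storage changed (hypothetical input format).
    """
    hosts = [host for host, adapters in affected.items() if adapters]
    if not hosts:
        return "none"
    if len(hosts) == 1:
        host = hosts[0]
        adapters = affected[host]
        if len(adapters) == 1:
            # Change isolated to one adapter: rescan only that adapter.
            return f"adapter {adapters[0]} on {host}"
        # Several adapters on one host: rescan the whole host.
        return f"host {host}"
    # Several hosts: rescan at the container level.
    return "cluster, data center, or folder"


print(rescan_scope({"esx01": ["vmhba33"]}))
print(rescan_scope({"esx01": ["vmhba1"], "esx02": ["vmhba1"]}))
```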

Configure FC/iSCSI/FCoE LUNs as ESXi boot devices

Boot from SAN means the server does not use its local storage as a boot device. Instead, the host boots from a LUN on shared storage (one LUN per host). ESXi supports booting through FC HBAs, FCoE converged network adapters, and iSCSI adapters.

Benefits:

- Easier server replacement. You can “point” the new server to the old boot location.

- Less wasted space. Diskless servers take up less space by design.

- Better reliability. You avoid a SPOF (single point of failure) because the boot disk is accessible through multiple paths.

- Improved management. Creating and managing the operating system image is easier and more efficient.

- Easier backup processes. You can back up the system boot images in the SAN as part of the overall SAN backup procedures. Also, you can use advanced array features such as snapshots on the boot image.

- Cheaper servers. Servers can be denser and run cooler without internal storage.

(Check pages 50-57 of the vSphere 6.5 storage PDF.)

The process starts on the storage side, where you configure the SAN LUN, the SAN components, and the storage system.

Next comes the BIOS configuration: set up the host BIOS so that it shows the BIOS of the hardware adapter, then point the boot adapter to the target boot LUN.

The very first boot is done from the VMware installation media; only after that can you configure boot from SAN. Follow the steps on pages 50-52 of the vSphere 6.5 storage PDF.

Mount an NFS share for use with vSphere

Prerequisites:

- The IP address or the DNS name (FQDN) of the NFS server and the full path, or folder name, for the NFS share.

- For NFS 4.1, you can collect multiple IP addresses or DNS names to use the multipathing support that the NFS 4.1 datastore provides.

- On each ESXi host, configure a VMkernel Network port for NFS traffic.

- If you plan to use Kerberos authentication with the NFS 4.1 datastore, configure the ESXi hosts for Kerberos authentication.

To create the NFS mount, right-click the datacenter > Storage > Add Storage.

You can use NFS 3 or NFS 4.1.

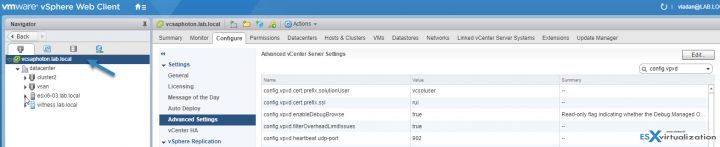

Enable/Configure/Disable vCenter Server storage filters

Storage Filtering – vCenter Server provides storage filters to help you avoid storage device corruption or performance degradation that might be caused by an unsupported use of storage devices. These filters are available by default.

Unsuitable devices are not displayed for selection. You can turn off the filters to view all devices, but contact VMware support before you do so.

Where?

vSphere Web Client > Select vCenter Server Object > Configure > Settings > Advanced Settings > Edit > in the value text box, enter “False” for the specified key.

Config.vpxd.filter.vmfsFilter (VMFS Filter) – Filters out storage devices, or LUNs, that are already used by a VMFS datastore on any host managed by vCenter Server. The LUNs do not show up as candidates to be formatted with another VMFS datastore or to be used as an RDM.

Config.vpxd.filter.rdmFilter (RDM Filter) – Filters out LUNs that are already referenced by an RDM on any host managed by vCenter Server. The LUNs do not show up as candidates to be formatted with VMFS or to be used by a different RDM. For your virtual machines to access the same LUN, the virtual machines must share the same RDM mapping files.

Config.vpxd.filter.SameHostsAndTransportsFilter (Same Hosts and Transports Filter) – Filters out LUNs ineligible for use as VMFS datastore extents because of host or storage type incompatibility. Prevents you from adding the following LUNs as extents:

- LUNs not exposed to all hosts that share the original VMFS datastore.

- LUNs that use a storage type different from the one the original VMFS datastore uses. For example, you cannot add a Fibre Channel extent to a VMFS datastore on a local storage device.

Config.vpxd.filter.hostRescanFilter (Host Rescan Filter) – Automatically rescans and updates VMFS datastores after you perform datastore management operations. The filter helps provide a consistent view of all VMFS datastores on all hosts managed by vCenter Server.

You do not need to restart the vCenter Server to apply changes. (Check page 172 of the Storage PDF).
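To make the effect of the VMFS and RDM filters concrete, here is a toy model in Python. It is a sketch only: the `Lun` class and `candidate_luns` function are invented for this example, and the dictionary keys are just the last part of the filter key names listed above. It does not talk to vCenter Server.

```python
from dataclasses import dataclass


@dataclass
class Lun:
    name: str
    has_vmfs: bool = False      # already backs a VMFS datastore
    used_by_rdm: bool = False   # already referenced by an RDM


# Filters are active by default; setting one to False switches it off.
DEFAULT_FILTERS = {"vmfsFilter": True, "rdmFilter": True}


def candidate_luns(luns, overrides=None):
    """Return the LUN names still offered for a new datastore or RDM."""
    active = {**DEFAULT_FILTERS, **(overrides or {})}
    result = []
    for lun in luns:
        if active["vmfsFilter"] and lun.has_vmfs:
            continue  # hidden by the VMFS filter
        if active["rdmFilter"] and lun.used_by_rdm:
            continue  # hidden by the RDM filter
        result.append(lun.name)
    return result


luns = [Lun("lun0", has_vmfs=True), Lun("lun1", used_by_rdm=True), Lun("lun2")]
print(candidate_luns(luns))
print(candidate_luns(luns, {"vmfsFilter": False}))
```

With the VMFS filter switched off, `lun0` shows up again as a candidate, which is exactly the situation where a datastore can be overwritten by mistake.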

Configure/Edit hardware/dependent hardware initiators

Hardware iSCSI initiators can be used to boot from SAN, to present remote storage as a local disk, or as dedicated iSCSI SAN adapters that offer lower CPU usage and better throughput.

- Hardware iSCSI – Host connects to storage through an HBA capable of offloading the iSCSI and network processing. Hardware adapters can be dependent or independent.

- Software iSCSI – Host uses a software-based iSCSI initiator in the VMkernel to connect to storage.

A dependent hardware iSCSI adapter is enabled by default. But to make it functional, you must first connect it, through a virtual VMkernel adapter (vmk), to a physical network adapter (vmnic) associated with it. You can then configure the iSCSI adapter.

After you configure the dependent hardware iSCSI adapter, the discovery and authentication data are passed through the network connection, while the iSCSI traffic goes through the iSCSI engine, bypassing the network.

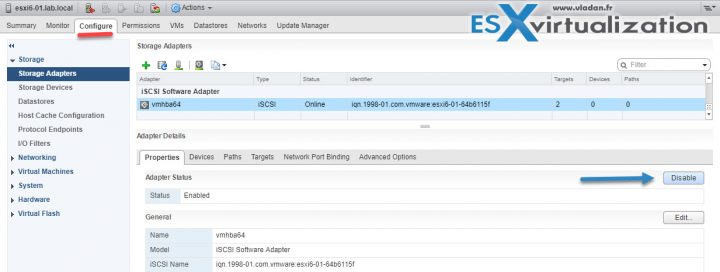

Enable/Disable software iSCSI initiator

Configure/Edit software iSCSI initiator settings

As shown in the image above, from the same place you can:

- View/Attach/Detach Devices from the Host

- Enable/Disable Paths

- Enable/Disable the Adapter

- Change iSCSI Name and Alias

- Configure CHAP

- Configure Dynamic Discovery and (or) Static Discovery

- Add Network Port Bindings to the adapter

- Configure iSCSI advanced options

Configure iSCSI port binding

Port binding lets you configure multipathing, provided the following requirements are met:

- iSCSI ports of the array target must reside in the same broadcast domain and IP subnet as the VMkernel adapters.

- All VMkernel adapters used for iSCSI port binding must reside in the same broadcast domain and IP subnet.

- All VMkernel adapters used for iSCSI connectivity must reside in the same virtual switch.

- Port binding does not support network routing.

Do not use port binding when any of the following conditions exist:

- Array target iSCSI ports are in a different broadcast domain and IP subnet.

- VMkernel adapters used for iSCSI connectivity exist in different broadcast domains, IP subnets, or use different virtual switches.

- Routing is required to reach the iSCSI array.

Note: Each VMkernel adapter must be configured with a single active uplink. All the other uplinks must be set to unused (not active or standby). Otherwise, the adapters are not listed as available for port binding.
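The rules above are easy to check on paper before touching the host. Below is a minimal Python sketch; the `VmkAdapter` class, the function name, and all adapter and switch names are hypothetical. It can only compare IP subnets and switch names. Whether two ports really share a broadcast domain (VLAN) cannot be derived from IP addresses alone.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List


@dataclass
class VmkAdapter:
    name: str
    ip: str                      # address with prefix, e.g. "10.0.0.11/24"
    vswitch: str
    active_uplinks: List[str]
    standby_uplinks: List[str] = field(default_factory=list)


def port_binding_problems(vmks, target_ips):
    """Return reasons why port binding should not be used (empty = OK)."""
    problems = []
    networks = {ipaddress.ip_interface(v.ip).network for v in vmks}
    if len(networks) > 1:
        problems.append("VMkernel adapters are in different IP subnets")
    if len({v.vswitch for v in vmks}) > 1:
        problems.append("VMkernel adapters are on different virtual switches")
    for v in vmks:
        if len(v.active_uplinks) != 1 or v.standby_uplinks:
            problems.append(f"{v.name} needs exactly one active uplink and no standby uplinks")
    for target in target_ips:
        addr = ipaddress.ip_address(target)
        if not any(addr in net for net in networks):
            problems.append(f"target {target} is outside the VMkernel subnet (routing needed)")
    return problems


vmks = [
    VmkAdapter("vmk1", "10.0.0.11/24", "vSwitch1", ["vmnic2"]),
    VmkAdapter("vmk2", "10.0.0.12/24", "vSwitch1", ["vmnic3"]),
]
print(port_binding_problems(vmks, ["10.0.0.50"]))
```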

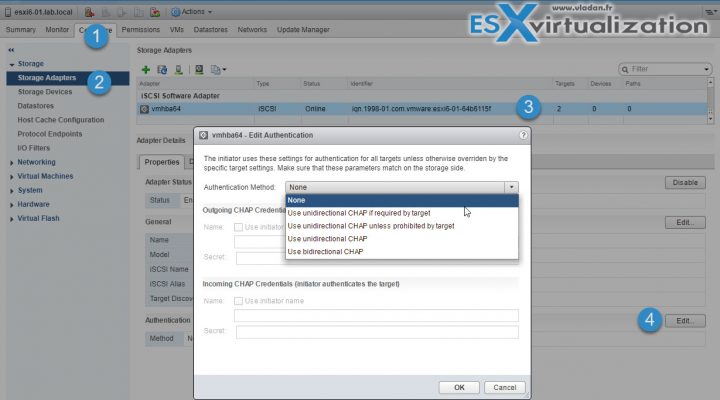

Enable/Configure/Disable iSCSI CHAP

Web Client > Host and Clusters > Host > Configure > Storage > Storage Adapters > Properties > Authentication section > Edit .

Page 95 of the vSphere 6.5 storage PDF guide.

iSCSI uses the Challenge Handshake Authentication Protocol (CHAP) to verify the legitimacy of initiators that access targets on the network.

Unidirectional CHAP – target authenticates the initiator, but the initiator does not authenticate the target.

Bidirectional CHAP – an additional level of security enables the initiator to authenticate the target. VMware supports this method for software and dependent hardware iSCSI adapters only.

CHAP methods:

- None – CHAP authentication is not used.

- Use unidirectional CHAP if required by target – Host prefers non-CHAP connection but can use CHAP if required by target.

- Use unidirectional CHAP unless prohibited by target – Host prefers CHAP, but can use non-CHAP if target does not support CHAP.

- Use unidirectional CHAP – Requires CHAP authentication.

- Use bidirectional CHAP – Host and target support bidirectional CHAP.

CHAP does not encrypt the traffic; it only authenticates the initiator and target.
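To see why CHAP authenticates without encrypting, it helps to look at the handshake itself. The sketch below follows the generic CHAP definition in RFC 1994 (an MD5 hash over the identifier, the shared secret, and the challenge). That detail comes from the RFC, not from the storage guide, and the function names and the secret are invented for this example.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Hash of identifier byte + shared secret + challenge, per RFC 1994."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def target_verifies(identifier: int, secret: bytes, challenge: bytes, response: bytes) -> bool:
    """The target recomputes the hash with its own copy of the secret."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Target side: send a random challenge and an identifier to the initiator.
challenge = os.urandom(16)
identifier = 1

# Initiator side: answer with the hash. The secret itself never crosses the wire.
response = chap_response(identifier, b"example-secret", challenge)

print(target_verifies(identifier, b"example-secret", challenge, response))
```

Only the challenge and the hash are exchanged, so the secret stays private, but nothing here protects the SCSI data that flows after login. For bidirectional CHAP, the same exchange is repeated in the other direction with a second secret.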

Determine use cases for Fiber Channel zoning

SAN Zoning – allows you to restrict server access to storage arrays which are not allocated to that server. Usually, you create zones for a group of hosts that access a shared group of storage devices and LUNs. Zones basically define which HBAs can connect to which Storage Processors (SPs). Devices living outside a zone are not visible to the devices inside the zone.

Zoning is similar to LUN masking, which is commonly used for permission management. LUN masking is a process that makes a LUN available to some hosts and unavailable to other hosts.

Zoning is used with FC SAN devices. It lets you control the SAN topology by defining which HBAs can connect to which targets. We say that we zone a LUN.

It allows you to:

- Protect LUNs from access by undesired devices, which could corrupt data.

- Separate different environments (clusters).

- Reduce the number of targets and LUNs presented to a host.

- Control and isolate paths in a fabric.

Best practice? Single-initiator-single target.
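A zone is essentially a set of ports that are allowed to see each other. The Python sketch below models single-initiator-single-target zoning with plain sets; the port aliases and function names are hypothetical, and real zoning is of course configured on the FC switches, not in Python.

```python
def build_zones(pairs):
    """Create one zone per (initiator, target) pair."""
    return {f"{initiator}__{target}": frozenset((initiator, target))
            for initiator, target in pairs}


def can_see(zones, port_a, port_b):
    """Two ports see each other only if at least one zone contains both."""
    return any(port_a in members and port_b in members
               for members in zones.values())


zones = build_zones([
    ("esx01_hba0", "spA_port0"),
    ("esx01_hba1", "spB_port0"),
    ("esx02_hba0", "spA_port0"),
])

print(can_see(zones, "esx01_hba0", "spA_port0"))   # zoned together
print(can_see(zones, "esx01_hba0", "esx02_hba0"))  # initiators never share a zone
```

Because every zone holds exactly one initiator and one target, two hosts never see each other's HBAs, even when they share the same storage processor port.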

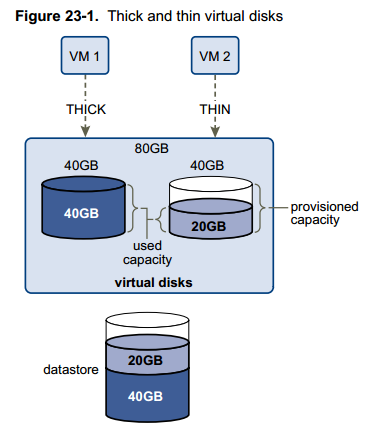

Compare and contrast array thin provisioning and virtual disk thin provisioning

Virtual disk thin provisioning allocates only a small amount of disk space at the storage level, while the guest OS sees the disk as if it had the whole space. The thin disk grows as you add data or install applications inside the VM. This makes it possible to over-allocate the datastore space, but that brings a risk: you have to monitor actual storage usage so that you do not run out of physical storage space.

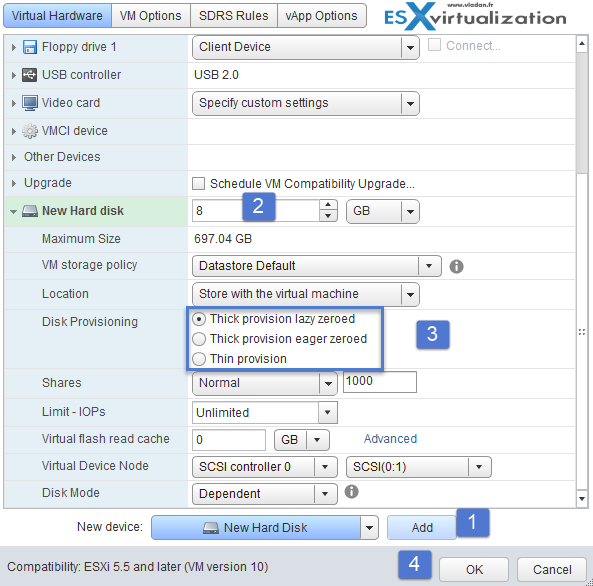

An image says thousands of words…

- Thick Lazy-Zeroed – default thick format. Space is allocated at creation, but the physical device is not erased during the creation process. It is zeroed on demand instead.

- Thick Eager-Zeroed – Used for FT-protected VMs. Space is allocated at creation, and the data remaining on the physical device is zeroed out immediately when the virtual disk is created. Eager-zeroed thick disks take longer to create.

- Thin provision – as on the image above. Starts small and at first, uses only as much datastore space as the disk needs for its initial operations. If the thin disk needs more space later, it can grow to its maximum capacity and occupy the entire datastore space provisioned to it. A thin disk can be inflated (thin > thick) via datastore browser (right click vmdk > inflate).
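The over-allocation risk is simple arithmetic. Here is a minimal Python sketch with made-up numbers; the `VirtualDisk` class and `datastore_report` function are hypothetical helpers, not a VMware API.

```python
from dataclasses import dataclass


@dataclass
class VirtualDisk:
    name: str
    provisioned_gb: float   # size the guest OS sees
    used_gb: float          # data actually written so far
    thin: bool


def datastore_report(capacity_gb, disks):
    """Compare what is allocated today with what has been promised."""
    # Thick disks (lazy or eager zeroed) take their full size at creation;
    # thin disks take only what has been written so far.
    allocated = sum(d.used_gb if d.thin else d.provisioned_gb for d in disks)
    provisioned = sum(d.provisioned_gb for d in disks)
    return {
        "allocated_gb": allocated,
        "free_gb": capacity_gb - allocated,
        "provisioned_gb": provisioned,
        "overcommit_ratio": provisioned / capacity_gb,
        "overcommitted": provisioned > capacity_gb,
    }


disks = [
    VirtualDisk("web01.vmdk", 100, 20, thin=True),
    VirtualDisk("db01.vmdk", 100, 30, thin=True),
    VirtualDisk("ft01.vmdk", 50, 10, thin=False),
]
print(datastore_report(200, disks))
```

In this example the datastore still has free space today, but the disks could grow to more than its capacity, which is why usage alarms matter with thin disks.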

Check the different VMDK disk provisioning options when creating new VM or adding an additional disk to existing VM

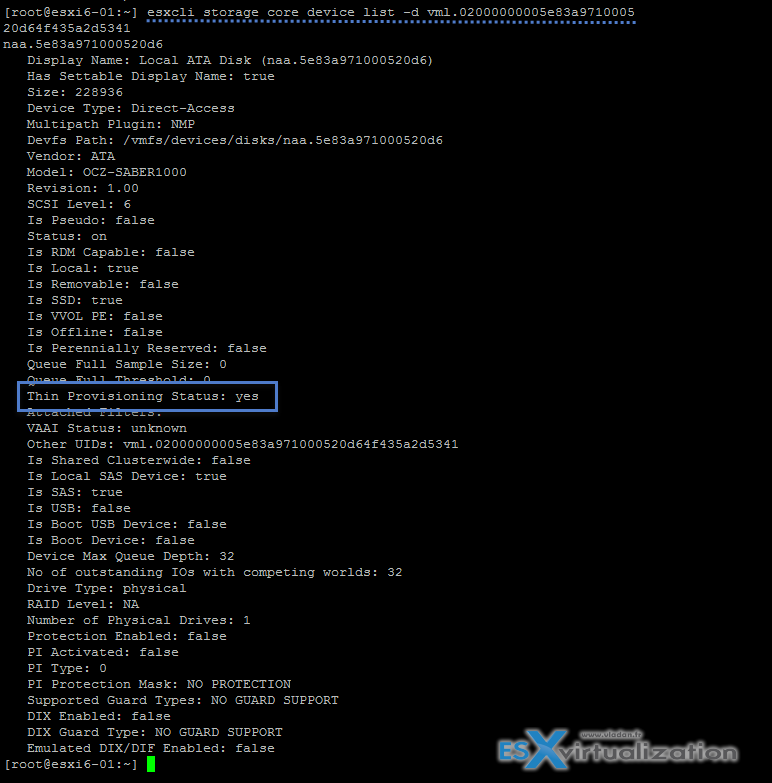

Thin-provisioned LUN

ESXi also supports thin-provisioned LUNs. When a LUN is thin-provisioned, the storage array reports the LUN’s logical size, which might be larger than the real physical capacity backing that LUN. A VMFS datastore that you deploy on the thin-provisioned LUN can detect only the logical size of the LUN.

For example, if the array reports 2TB of storage while in reality the array provides only 1TB, the datastore considers 2TB to be the LUN’s size. As the datastore grows, it cannot determine whether the actual amount of physical space is still sufficient for its needs.

With the Storage APIs – Array Integration (VAAI), the host can be aware of underlying thin-provisioned LUNs. VAAI lets the array know about datastore space that has been freed when files are deleted or removed, so that the array can reclaim the freed blocks.

Check thin provisioned devices via CLI:

esxcli storage core device list -d vml.xxxxxxxxxxxxxxxx
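If you save the output of that command to a text file, a few lines of Python can pull out the thin provisioning field for each device. This is a sketch under assumptions: the sample text is hand-written and heavily abbreviated, the device identifiers are invented, and the parser simply assumes that each device starts on an unindented line with its properties indented below it.

```python
def thin_status_by_device(output: str) -> dict:
    """Map each device identifier to its 'Thin Provisioning Status' value."""
    status = {}
    current = None
    for line in output.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace():
            # An unindented line starts a new device entry.
            current = line.strip()
        elif current and line.strip().startswith("Thin Provisioning Status:"):
            status[current] = line.split(":", 1)[1].strip()
    return status


# Hand-written, abbreviated sample (not real output from a host).
SAMPLE = """\
naa.600a0b80001111110000aaaa00000001
   Display Name: Example Fibre Channel Disk
   Thin Provisioning Status: yes
naa.600a0b80001111110000aaaa00000002
   Display Name: Another Example Disk
   Thin Provisioning Status: unknown
"""

print(thin_status_by_device(SAMPLE))
```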

VCP6.5-DCV Objective 3.1 – Manage vSphere Integration with Physical Storage is certainly a very large topic. Make sure that you download the vSphere 6.5 storage PDF (the direct link to the PDF might change over time, but a quick Google search will find it).
