Today's topic in the VCP6-DCV Study Guide is troubleshooting. When something goes wrong and you lose connectivity to your application, you will probably troubleshoot the underlying VM first and the network second, but don't forget storage. When storage is under pressure, your whole infrastructure slows down and you might experience disconnections at the VM/application level. Today's lesson covers VCP6-DCV Objective 7.2 – Troubleshoot vSphere Storage and Network Issues.

You can also check the vSphere 6 page, where you'll find how-tos, news and videos concerning vSphere 6.x, and, last but not least, my Free Tools page, which lists the most popular tools for VMware and Microsoft. Daily updates of the blog take time, but we do it with the goal of providing a guide that is helpful for the community and for folks studying for the VCP6-DCV certification exam. If you find one of these posts useful for your preparation, just share it.

vSphere Knowledge

- Verify network configuration

- Verify storage configuration

- Troubleshoot common storage issues

- Troubleshoot common network issues

- Verify a given virtual machine is configured with the correct network resources

- Troubleshoot virtual switch and port group configuration issues

- Troubleshoot physical network adapter configuration issues

- Troubleshoot VMFS metadata consistency

- Identify Storage I/O constraints

- Monitor/Troubleshoot Storage Distributed Resource Scheduler (SDRS) issues

—————————————————————————————————–

Verify network configuration

Start from one end and work through the chain, either from the host level (physical switch > uplinks > switches > port groups > VMs) or in the reverse direction, starting from the VM.

- Check the vNIC status – connected/disconnected

- Check the networking config inside the Guest OS – yes, a bad network configuration inside the VM might also be the cause.

- Verify physical switch config

- Check the vSwitch or vDS config

- ESXi host network (uplinks)

- Guest OS config

Check for disabled/inactive adapters or other unused hardware (if Guest OS has been P2V)

In a Windows VM, do this:

Click on Start > Run > devmgmt.msc > click + next to Network adapters > check that the adapter is present and not disabled.

You can also check the network config, such as the IP address, netmask, default gateway and DNS servers. Make sure that this information is correct.
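The sanity check on IP address, netmask, default gateway and DNS servers can be sketched in a few lines of Python. This is only an illustration using the standard `ipaddress` module; the function name `check_guest_ip_config` and the sample addresses are made up for the example, and the values would have to be copied by hand from the guest's settings.

```python
import ipaddress


def check_guest_ip_config(ip, netmask, gateway, dns_servers):
    """Return a list of problems found in a guest's static IP settings.

    Hypothetical helper: the values are typed in by hand from the
    guest OS network settings, nothing is read from the VM itself.
    """
    problems = []
    try:
        # Accepts dotted netmasks such as 255.255.255.0 as well as prefix lengths.
        iface = ipaddress.ip_interface(f"{ip}/{netmask}")
    except ValueError as exc:
        return [f"invalid IP address or netmask: {exc}"]

    network = iface.network
    try:
        gw = ipaddress.ip_address(gateway)
    except ValueError:
        problems.append(f"invalid default gateway: {gateway}")
    else:
        if gw not in network:
            problems.append(f"default gateway {gw} is outside {network}")
        if gw == iface.ip:
            problems.append("default gateway is the VM's own address")

    if not dns_servers:
        problems.append("no DNS servers configured")
    for dns in dns_servers:
        try:
            ipaddress.ip_address(dns)
        except ValueError:
            problems.append(f"invalid DNS server: {dns}")
    return problems


if __name__ == "__main__":
    # Sample values only; replace with what the guest actually reports.
    for line in check_guest_ip_config(
        "192.168.1.50", "255.255.255.0", "192.168.2.1", ["192.168.1.10"]
    ):
        print(line)
```

An empty list means the settings are at least self-consistent; it does not prove that the gateway or DNS servers are reachable.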

- If a VM was P2V – check that there are no “ghosted adapters”. To check that:

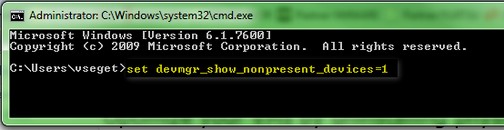

On your VM, go to Start > Run, type cmd, press Enter, and then type:

set devmgr_show_nonpresent_devices=1

While still in the command prompt window type:

devmgmt.msc

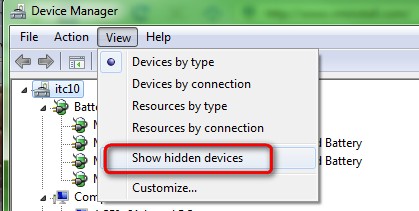

Then, in Device Manager, open the View menu and click Show Hidden Devices (as in the picture).

You should then see the ghosted devices, which are grayed out. You can safely remove those devices from Device Manager.

- Check IP stack – It has happened to me several times that the IP stack of a VM was corrupted. The VM had intermittent network connectivity: everything seemed to be OK but wasn't. You can force the VM to renew its DHCP-assigned IP configuration by entering this:

ipconfig /renew

For Linux:

dhclient -r

dhclient eth0

Verify storage configuration

Check the documentation of vSphere storage, the basic concepts, iSCSI etc.

I've done a few posts on configuring iSCSI with vSphere (not particularly related to vSphere 6, but they are step-by-step guides):

- How to configure FreeNAS 8 for iSCSI and connect to ESX(i)

- How to configure ESXi 5 for iSCSI connection to Drobo

- Configuring iSCSI port binding with multiple NICs in one vSwitch for VMware ESXi 5.x and 6.0.x

Also check the Teaming and Failover Policy section in the vSphere Networking guide.

Troubleshoot common storage issues

Storage Issues – Check that the virtual machine has no underlying storage issues and is not experiencing resource contention, as this might result in networking issues with the virtual machine. You can do this by logging into ESX/ESXi or Virtual Center/vCenter Server using the VI/vSphere Client and logging into the virtual machine console.

Good doc – Troubleshooting Storage guide (p.55 – p.70) which talks about:

- Resolving SAN Storage Display Problems on page 56

- Resolving SAN Performance Problems on page 57

- Virtual Machines with RDMs Need to Ignore SCSI INQUIRY Cache on page 62

- Software iSCSI Adapter Is Enabled When Not Needed on page 62

- Failure to Mount NFS Datastores on page 63

- VMkernel Log Files Contain SCSI Sense Codes on page 63

- Troubleshooting Storage Adapters on page 64

- Checking Metadata Consistency with VOMA on page 64

- Troubleshooting Flash Devices on page 66

- Troubleshooting Virtual SAN on page 69

- Troubleshooting Virtual Volumes on page 70

Troubleshoot common network issues

Again, networking can be tricky to troubleshoot, but choosing one end to start with should help. Another tip is to check the load balancing policies when more than one NIC is connected to a VM.

Verify that the virtual machine is configured with two vNICs to eliminate a NIC or a physical configuration issue. To isolate a possible issue:

- If the load balancing policy is set to Default Virtual Port ID at the vSwitch or vDS level:

  - Leave one vNIC connected with one uplink on the vSwitch or vDS, then try different vNIC and pNIC combinations until you determine which virtual machine is losing connectivity.

- If the load balancing policy is set to IP Hash:

  - Ensure the physical switch ports are configured as port-channel. For more information on verifying the configuration on the physical switch, see Sample configuration of EtherChannel / Link aggregation with ESX/ESXi and Cisco/HP switches (1004048).

  - Shut down all but one of the physical ports the NICs are connected to, and toggle this between all the ports by keeping only one port connected at a time. Take note of the port/NIC combination where the virtual machines lose network connectivity.

- Load balancing and failover policies – configure the VM with 2 vNICs to eliminate physical NIC problems. Check esxtop using the n option (for networking) to see which pNIC the virtual machine is using. Try shutting down the ports on the physical switch one at a time to determine where the virtual machine is losing network connectivity.

- Check the vNIC's connection – check the status of the vNIC, (connected/disconnected) at the VM level AND also the NIC inside of the Guest OS (activated/deactivated).
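The "keep only one port connected at a time" procedure is easy to lose track of when a team has several uplinks. Here is a small Python sketch that writes out the test plan and then picks the suspect uplinks from your notes. The function names and the `vmnic` names are hypothetical, and the script does not touch any switch or host; you still shut the ports and test connectivity by hand.

```python
def isolation_plan(uplinks):
    """Yield one test step per uplink: keep that uplink up, shut the others."""
    for keep in uplinks:
        yield {
            "keep_up": keep,
            "shut_down": [u for u in uplinks if u != keep],
        }


def suspect_uplinks(results):
    """Return the uplinks on which the VM lost connectivity.

    `results` maps the uplink that was left active to True (VM reachable)
    or False (VM unreachable), as noted down during the manual test.
    """
    return sorted(u for u, reachable in results.items() if not reachable)


if __name__ == "__main__":
    team = ["vmnic0", "vmnic1", "vmnic2"]  # example names only
    for step in isolation_plan(team):
        print(f"leave {step['keep_up']} up, shut {', '.join(step['shut_down'])}")

    # Fill this in from your own observations after each step.
    notes = {"vmnic0": True, "vmnic1": False, "vmnic2": True}
    print("suspect uplinks:", suspect_uplinks(notes))
```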

Check more in this KB: Troubleshooting virtual machine network connection issues (1003893)

Verify a given virtual machine is configured with the correct network resources

I've already covered a few of these areas above. All or most of the possible problems can be found in this KB – KB 1003893

Troubleshoot virtual switch and port group configuration issues

- Same name for port groups – Make sure that the port group name(s) associated with the virtual machine's network adapter(s) exist in your vSwitch or vSphere Distributed Switch and are spelled correctly. If a port group name does not match, you will usually have connectivity problems.

- VLANs – check the VLAN IDs configured on the port groups of each standard switch
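The port group name check can be sketched as a simple comparison in Python. It assumes that an exact string match is required, so it also points out names that differ only in case or surrounding spaces. The function name and the sample names are hypothetical, and the two lists have to be collected by hand from the VM settings and the host networking view.

```python
def find_port_group_mismatches(vm_networks, host_port_groups):
    """Map each VM network name missing on the host to a near match, if any.

    A "near match" is a host port group whose name differs only in case
    or leading/trailing whitespace; None means nothing similar exists.
    """
    existing = set(host_port_groups)
    by_folded = {}
    for name in host_port_groups:
        by_folded.setdefault(name.strip().casefold(), name)

    mismatches = {}
    for name in vm_networks:
        if name in existing:
            continue  # exact match, nothing to report
        mismatches[name] = by_folded.get(name.strip().casefold())
    return mismatches


if __name__ == "__main__":
    # Example names only.
    vm_side = ["VM Network", "prod-vlan10", "DMZ"]
    host_side = ["VM Network", "Prod-VLAN10"]
    for wanted, near in find_port_group_mismatches(vm_side, host_side).items():
        if near:
            print(f"'{wanted}' not found, did you mean '{near}'?")
        else:
            print(f"'{wanted}' not found on this host")
```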

Troubleshoot physical network adapter configuration issues

Physical switch config is usually simple if trunk ports are used. One possible issue is a physical NIC that is not set to auto-negotiate (the default) but to a fixed network speed which does not match the speed of the physical switch port, although I doubt you will see it often.

If beacon probing is used, make sure that you have at least three pNICs in the team.

VMware KBs:

- 1005577 – What is beacon probing?

- 1004048 – Sample configuration of EtherChannel / Link Aggregation Control Protocol (LACP) with ESXi/ESX and Cisco/HP switches

- 1001938 – Host requirements for link aggregation for ESXi and ESX

Troubleshoot VMFS metadata consistency

There is a VMware KB which explains what to do if:

- You have problems accessing certain files on a VMFS datastore.

- You cannot modify or erase files on a VMFS datastore.

- Attempting to read files on a VMFS datastore may fail with the error:

invalid argument

You can run a file system metadata check by using VOMA.

Check it out – Using vSphere On-disk Metadata Analyzer (VOMA) to check VMFS metadata consistency (2036767)

Quote:

To perform a VOMA check on a VMFS datastore and send the results to a specific log file, the command syntax is:

voma -m vmfs -d /vmfs/devices/disks/naa.00000000000000000000000000:1 -s /tmp/analysis.txt

where naa.00000000000000000000000000:1 is replaced with the LUN NAA ID and partition to be checked. Note the “:1” at the end. This is the partition number containing the datastore and must be specified. See note below. As an advisory, if you run voma more than once, add the NAA ID and a time stamp to the output log file name, e.g. -s /tmp/naa.00000000000000000000000000:1_analysis_<<hhmm>>.txt

Note: VOMA must be run against the partition and not the device.
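To make the advice about the partition number and the time-stamped log name concrete, here is a small Python sketch that only assembles the command line. It uses nothing but the options shown in the quote above (`-m`, `-d`, `-s`); the function name `build_voma_command` and the shortened device ID are made up for the example, and the script does not run voma itself.

```python
import time


def build_voma_command(device_id, partition, now=None):
    """Build a voma argument list with a time-stamped log file name.

    device_id: the LUN identifier WITHOUT the partition, e.g. "naa.6000...".
    partition: partition number holding the datastore (VOMA needs it).
    now:       optional time tuple, mainly to make the result reproducible.
    """
    if not device_id or ":" in device_id:
        raise ValueError("pass the device ID without ':<partition>'")
    if partition < 1:
        raise ValueError("partition number must be 1 or higher")

    target = f"{device_id}:{partition}"
    stamp = time.strftime("%H%M", now if now is not None else time.localtime())
    log_file = f"/tmp/{target}_analysis_{stamp}.txt"
    return [
        "voma", "-m", "vmfs",
        "-d", f"/vmfs/devices/disks/{target}",
        "-s", log_file,
    ]


if __name__ == "__main__":
    # Placeholder ID: use the real NAA ID of the LUN you want to check.
    print(" ".join(build_voma_command("naa.00000000000000000000000000", 1)))
```

Keeping the partition as a separate argument makes it harder to forget the “:1” suffix by accident.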

Identify Storage I/O constraints

Again, there is a good KB article to check – VMware KB 1008205.

Per LUN basis – To monitor storage performance on a per-LUN basis:

- Start esxtop > Press u to switch to disk view (LUN mode).

- Press f to modify the fields that are displayed.

- Press b, c, f, and h to toggle the fields and press Enter.

- Press s and then 2 to alter the update time to every 2 seconds and press Enter.

Per HBA – To monitor storage performance on a per-HBA basis:

- Start esxtop > Press d to switch to disk view (HBA mode).

- To view the entire Device name, press SHIFT + L and enter 36 in Change the name field size.

- Press f to modify the fields that are displayed.

- Press b, c, d, e, h, and j to toggle the fields and press Enter.

- Press s and then 2 to alter the update time to every 2 seconds and press Enter.

Then the metrics to check out:

GAVG, DAVG, KAVG – latency stats.

You should check this community thread, from which I quote the main part because I think it's very good work done by the community:

Latency values are reported for all IOs, read IOs and all write IOs. All values are averages over the measurement interval.

All IOs: KAVG/cmd, DAVG/cmd, GAVG/cmd, QAVG/cmd

Read IOs: KAVG/rd, DAVG/rd, GAVG/rd, QAVG/rd

Write IOs: KAVG/wr, DAVG/wr, GAVG/wr, QAVG/wr

GAVG – This is the round-trip latency that the guest sees for all IO requests sent to the virtual storage device. GAVG should be close to the R metric in the figure.

Q: What is the relationship between GAVG, KAVG and DAVG?

A: GAVG = KAVG + DAVG

KAVG – These counters track the latencies due to the ESX Kernel's command.

The KAVG value should be very small in comparison to the DAVG value and should be close to zero. When there is a lot of queuing in ESX, KAVG can be as high, or even higher than DAVG. If this happens, please check the queue statistics, which will be discussed next.

DAVG – This is the latency seen at the device driver level. It includes the roundtrip time between the HBA and the storage.

DAVG is a good indicator of performance of the backend storage. If IO latencies are suspected to be causing performance problems, DAVG should be examined. Compare IO latencies with corresponding data from the storage array. If they are close, check the array for misconfiguration or faults. If not, compare DAVG with corresponding data from points in between the array and the ESX Server, e.g., FC switches. If this intermediate data also matches DAVG values, it is likely that the storage is under-configured for the application. Adding disk spindles or changing the RAID level may help in such cases.

QAVG – The average queue latency. QAVG is part of KAVG.
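The relationship quoted above, GAVG = KAVG + DAVG, and the rule of thumb about KAVG can be turned into a tiny Python helper. This is only a sketch: the function name is made up, the numbers are typed in by hand from the esxtop screen, and it deliberately uses no fixed millisecond thresholds because the quoted text does not give any.

```python
def summarize_latency(kavg_ms, davg_ms):
    """Combine kernel and device latency as described in the quoted thread.

    Returns the derived guest latency (GAVG = KAVG + DAVG) and a hint
    about where to look next.
    """
    gavg_ms = kavg_ms + davg_ms
    if gavg_ms == 0:
        hint = "no latency recorded in this interval"
    elif kavg_ms >= davg_ms:
        # Per the quote: KAVG as high as or higher than DAVG points to queuing.
        hint = "KAVG is as high as or higher than DAVG: check the queue statistics (QAVG)"
    else:
        hint = "latency is mostly at the device level (DAVG): compare with the array's own figures"
    return {"GAVG": gavg_ms, "hint": hint}


if __name__ == "__main__":
    # Sample readings in milliseconds, not real measurements.
    for kavg, davg in [(0.5, 8.0), (6.0, 4.0)]:
        print(kavg, davg, summarize_latency(kavg, davg))
```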

Monitor/Troubleshoot Storage Distributed Resource Scheduler (SDRS) issues

Even when Storage DRS is enabled for a datastore cluster, it might be disabled on some virtual disks in the datastore cluster.

Check the vSphere, ESXi and vCenter server troubleshooting guide p.47 and p.52.

Scenarios like the one below are described there:

Storage DRS generates an alarm to indicate that it cannot operate on the datastore.

Problem – Storage DRS generates an event and an alarm and Storage DRS cannot operate.

Cause – The following scenarios can cause vCenter Server to disable Storage DRS for a datastore.

- The datastore is shared across multiple data centers – Storage DRS is not supported on datastores that are shared across multiple data centers. This configuration can occur when a host in one data center mounts a datastore in another data center, or when a host using the datastore is moved to a different data center. When a datastore is shared across multiple data centers, Storage DRS I/O load balancing is disabled for the entire datastore cluster. However, Storage DRS space balancing remains active for all datastores in the datastore cluster that are not shared across data centers.

- The datastore is connected to an unsupported host – Storage DRS is not supported on ESX/ESXi 4.1 and earlier hosts.

- The datastore is connected to a host that is not running Storage I/O Control.

Solution – Depending on the cause:

- The datastore must be visible in only one data center. Move the hosts to the same data center or unmount the datastore from hosts that reside in other data centers.

- Ensure that all hosts associated with the datastore cluster are ESXi 5.0 or later.

- Ensure that all hosts associated with the datastore cluster have Storage I/O Control enabled.
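The three causes listed above can be checked against an inventory you have written down. The Python sketch below assumes a hand-made dictionary describing one datastore; the keys (`datacenters`, `hosts`, `version`, `sioc_enabled`), the function name and the host names are all hypothetical, and nothing is queried from vCenter Server.

```python
def sdrs_blockers(datastore):
    """List reasons why Storage DRS could be disabled for a datastore.

    `datastore` is a hand-written dict:
      {"datacenters": [...names...],
       "hosts": [{"name": str, "version": "6.0.0", "sioc_enabled": bool}, ...]}
    """
    problems = []

    datacenters = sorted(set(datastore["datacenters"]))
    if len(datacenters) > 1:
        problems.append("shared across data centers: " + ", ".join(datacenters))

    for host in datastore["hosts"]:
        # Compare only major.minor, e.g. "4.1.0" -> (4, 1).
        major_minor = tuple(int(part) for part in host["version"].split(".")[:2])
        if major_minor < (5, 0):
            problems.append(f"{host['name']}: version {host['version']} is older than 5.0")
        if not host["sioc_enabled"]:
            problems.append(f"{host['name']}: Storage I/O Control is not enabled")
    return problems


if __name__ == "__main__":
    # Example inventory only.
    example = {
        "datacenters": ["DC-Paris", "DC-Lyon"],
        "hosts": [
            {"name": "esx01", "version": "4.1.0", "sioc_enabled": True},
            {"name": "esx02", "version": "6.0.0", "sioc_enabled": False},
        ],
    }
    for problem in sdrs_blockers(example):
        print(problem)
```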

Tools

- vSphere Networking Guide

- vSphere Storage Guide

- vSphere Troubleshooting Guide

- vSphere Server and Host Management Guide

- vSphere Client / vSphere Web Client