Today's topic is VCP6-DCV Objective 3.3 – Configure vSphere Storage Multi-pathing and Failover. The VCP exam is the gold standard of VMware certification exams, and VMware vSphere 6 brings a new version of it.

The VCP is the best-known VMware exam, even if it is not the highest technical level. It is the most widely recognized, both by future employers and by the industry as a whole. We will cover the VCP6-DCV certification exam based on VMware's latest VCP6-DCV blueprint. Check the VCP6-DCV page for all objectives.

vSphere knowledge

- Configure/Manage Storage Load Balancing

- Identify available Storage Load Balancing options

- Identify available Storage Multi-pathing Policies

- Identify features of Pluggable Storage Architecture (PSA)

- Configure Storage Policies

- Enable/Disable Virtual SAN Fault Domains

—————————————————————————————————–

Configure/Manage Storage Load Balancing

The goal of a load balancing policy is to give each storage processor and each host path an equal share of the work by distributing I/O requests evenly. Load balancing lets you optimize response time, IOPS, or throughput (MB/s) for VM performance.

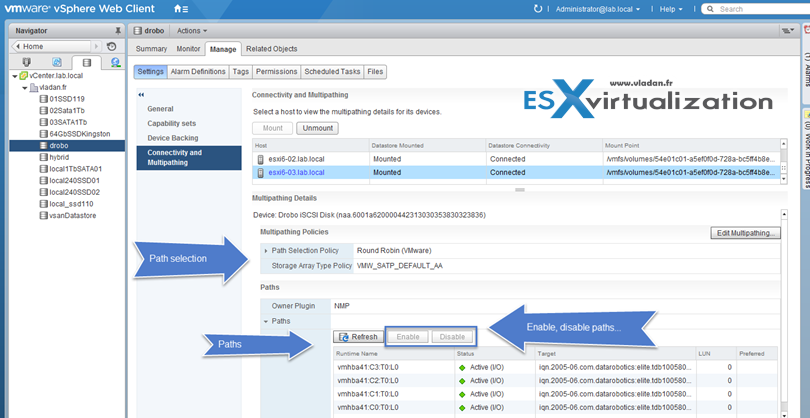

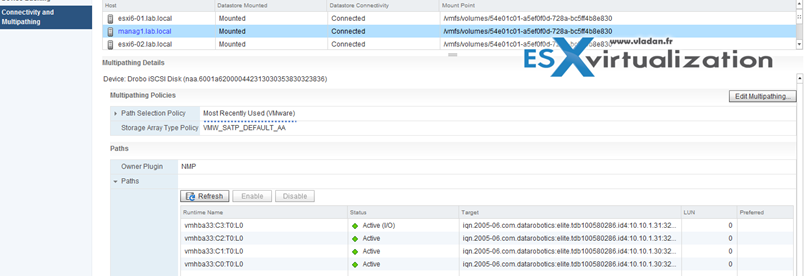

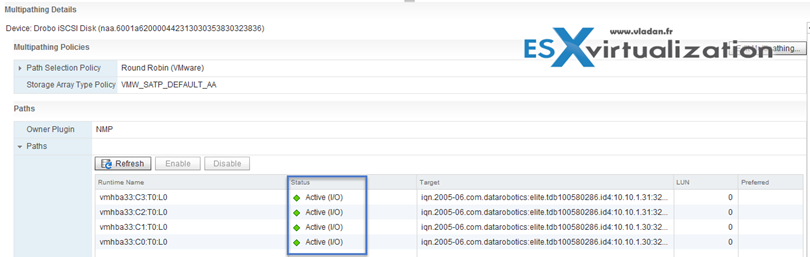

To get started, if you're using block storage, check Storage > Datastore > Manage > Settings > Connectivity and Multipathing.

Identify available Storage Load Balancing options

You can manage multipathing using the vSphere Client, the esxcli command, or the vSphere API. With the API, use the HostStorageSystem.multipathStateInfo property to access the HostMultipathStateInfo data object.

SAN storage systems require continual redesign and tuning to ensure that I/O is load balanced across all storage system paths. To meet this requirement, distribute the paths to the LUNs among all the storage processors (SPs) to provide optimal load balancing.

Multipathing allows you to have more than one physical path from the ESXi host to a LUN on a storage system. Generally, a single path from a host to a LUN consists of an iSCSI adapter or NIC, switch ports, connecting cables, and the storage controller port. If any component of the path fails, the host selects another available path for I/O. The process of detecting a failed path and switching to another is called path failover.

Path information:

- Active – Paths available for issuing I/O to a LUN. A single or multiple working paths currently used for transferring data are marked as Active (I/O).

- Standby – The path can quickly become operational and be used for I/O if the active paths fail.

- Disabled – The path is disabled and no data can be transferred.

- Dead – The host cannot connect to the disk through this path.
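To make the four path states and the idea of path failover a bit more concrete, here is a minimal Python sketch. It is a toy model, not how ESXi implements it: the `Path` class, the function names and the `vmhba…` runtime names are all made up for illustration.

```python
from dataclasses import dataclass

# Path states from the list above. The constants are illustrative only,
# they are not an ESXi or vSphere API.
ACTIVE, STANDBY, DISABLED, DEAD = "active", "standby", "disabled", "dead"


@dataclass
class Path:
    name: str   # hypothetical runtime name, e.g. "vmhba1:C0:T0:L0"
    state: str


def select_path(paths):
    """Return a path for I/O: an active one if any exists, else a standby one."""
    for wanted in (ACTIVE, STANDBY):
        for path in paths:
            if path.state == wanted:
                return path
    return None  # only disabled or dead paths are left


def fail_path(paths, name):
    """Mark the named path as dead and return the path I/O fails over to."""
    for path in paths:
        if path.name == name:
            path.state = DEAD
    return select_path(paths)


paths = [
    Path("vmhba1:C0:T0:L0", ACTIVE),
    Path("vmhba2:C0:T0:L0", STANDBY),
    Path("vmhba3:C0:T0:L0", DISABLED),
]
print(select_path(paths).name)                   # vmhba1:C0:T0:L0
print(fail_path(paths, "vmhba1:C0:T0:L0").name)  # vmhba2:C0:T0:L0
```

Note that the disabled path is never picked, even when it is the only one left. That matches the list above: a disabled path carries no I/O.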

Identify available Storage Multi-pathing Policies

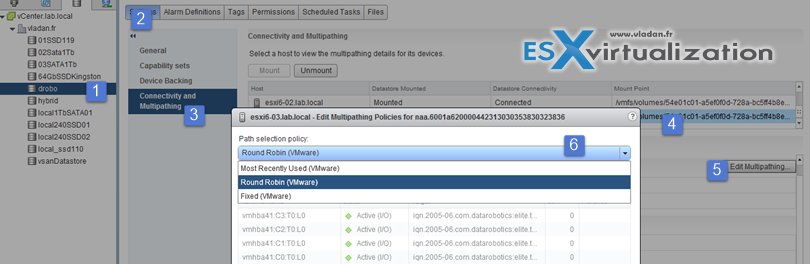

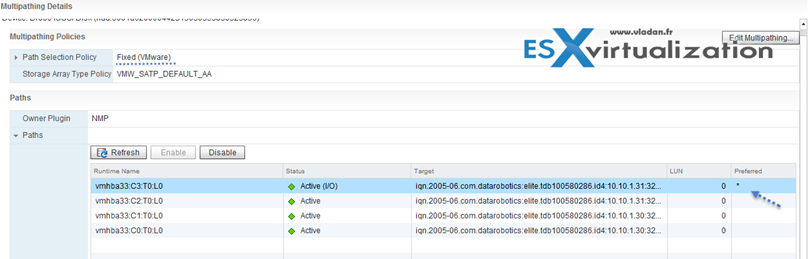

You can select one of the default path selection policies, or a policy added by a third-party product if you have installed one that brings its own PSP:

- Fixed – (VMW_PSP_FIXED) The host uses the designated preferred path if one is configured. If not, it uses the first working path discovered. The preferred path needs to be configured manually.

- Most Recently Used – (VMW_PSP_MRU) The host selects the path that it used most recently. When the path becomes unavailable, the host selects an alternative path. The host does not revert back to the original path when that path becomes available again. There is no preferred path setting with the MRU policy. MRU is the default policy for most active-passive arrays.

- Round Robin – (VMW_PSP_RR) The host uses an automatic path selection algorithm rotating through all active paths when connecting to active-passive arrays, or through all available paths when connecting to active-active arrays. RR is the default for a number of arrays and can be used with both active-active and active-passive arrays to implement load balancing across paths for different LUNs.
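The difference between the three policies is easiest to see when a path fails and then comes back. The Python sketch below is a simplified model and not VMware code. It assumes paths are a dictionary of name to "is working", and the Round Robin class rotates on every request, which is a simplification. All names are invented for the example.

```python
def working_paths(paths):
    """Names of the paths that are currently usable, in discovery order."""
    return [name for name, up in paths.items() if up]


def fixed(paths, preferred=None):
    """Fixed: use the preferred path when it works, else the first working path."""
    working = working_paths(paths)
    if preferred in working:
        return preferred
    return working[0] if working else None


class MostRecentlyUsed:
    """MRU: stay on the current path until it fails, with no automatic failback."""

    def __init__(self):
        self.current = None

    def select(self, paths):
        if self.current is None or not paths.get(self.current):
            working = working_paths(paths)
            self.current = working[0] if working else None
        return self.current


class RoundRobin:
    """Round Robin: rotate through all working paths."""

    def __init__(self):
        self._counter = 0

    def select(self, paths):
        working = working_paths(paths)
        if not working:
            return None
        choice = working[self._counter % len(working)]
        self._counter += 1
        return choice


paths = {"path-A": True, "path-B": True}
mru = MostRecentlyUsed()
mru.select(paths)          # starts on path-A
paths["path-A"] = False    # path-A fails
print(fixed(paths, "path-A"), mru.select(paths))  # both move to path-B
paths["path-A"] = True     # path-A recovers
print(fixed(paths, "path-A"), mru.select(paths))  # Fixed returns to path-A, MRU stays on path-B
```

After the recovery, Fixed goes back to its preferred path while MRU stays where it is, which is the behavior described in the list above.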

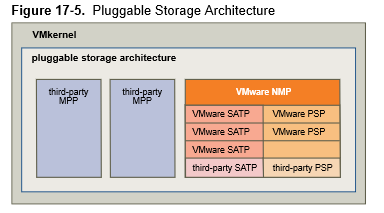

Identify features of Pluggable Storage Architecture (PSA)

- VMware NMP – the Native Multipathing Plugin, the default multipathing module. NMP associates a set of physical paths with a particular storage device or LUN, but delegates the array-specific details to a SATP. The choice of which path to use when an I/O request arrives is handled by a PSP (Path Selection Plugin).

- VMware SATPs – Storage Array Type Plugins run hand in hand with NMP and are responsible for array-specific operations. ESXi has a SATP for every supported type of array. It also provides default SATPs that support non-specific active-active and ALUA storage arrays, and the local SATP for direct-attached devices.

- VMware PSPs – Path Selection Plugins are sub-plugins of VMware NMP and choose a physical path for I/O requests.

The multipathing modules perform the following operations:

- Manage physical path claiming and unclaiming.

- Manage creation, registration, and deregistration of logical devices.

- Associate physical paths with logical devices.

- Support path failure detection and remediation.

- Process I/O requests to logical devices:

  - Select an optimal physical path for the request.

  - Depending on the storage device, perform specific actions necessary to handle path failures and I/O command retries.

- Support management tasks, such as reset of logical devices.
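The division of labor between NMP, SATP and PSP can be sketched in a few lines of Python. This is only a mental model with invented class and method names, nothing here is a real VMware interface. The NMP stand-in owns the device-to-paths mapping, asks the PSP stand-in which path to use, and lets the SATP stand-in record a path failure before retrying.

```python
class Satp:
    """Stand-in for a Storage Array Type Plugin: tracks the state of each path."""

    def __init__(self, paths):
        self.state = {path: "active" for path in paths}

    def usable_paths(self):
        return [path for path, state in self.state.items() if state == "active"]

    def report_failure(self, path):
        self.state[path] = "dead"


class FirstWorkingPsp:
    """Stand-in for a Path Selection Plugin: picks the first usable path."""

    def select(self, usable):
        return usable[0] if usable else None


class Nmp:
    """Stand-in for the NMP: associates paths with devices and delegates."""

    def __init__(self):
        self.devices = {}

    def claim(self, device, paths, psp):
        # Associate a set of physical paths with a logical device.
        self.devices[device] = (Satp(paths), psp)

    def issue_io(self, device, path_ok):
        # path_ok is a callable that simulates whether I/O on a path succeeds.
        satp, psp = self.devices[device]
        while True:
            path = psp.select(satp.usable_paths())
            if path is None:
                raise OSError(f"no working path to {device}")
            if path_ok(path):
                return path
            satp.report_failure(path)  # mark the path dead, retry on the next one


nmp = Nmp()
nmp.claim("lun0", ["path-1", "path-2"], FirstWorkingPsp())
print(nmp.issue_io("lun0", lambda path: path != "path-1"))  # path-2
```

Swapping `FirstWorkingPsp` for another selection class changes how paths are chosen without touching the failure handling, which is the point of the pluggable design.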

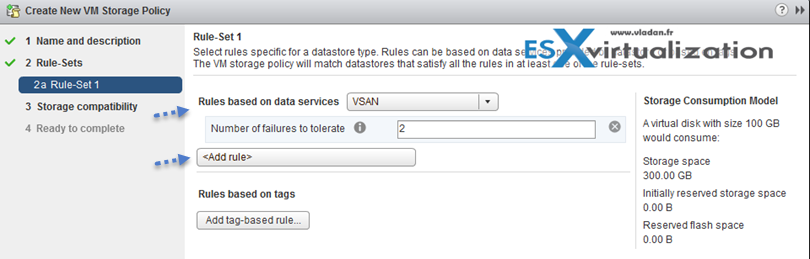

Configure Storage Policies

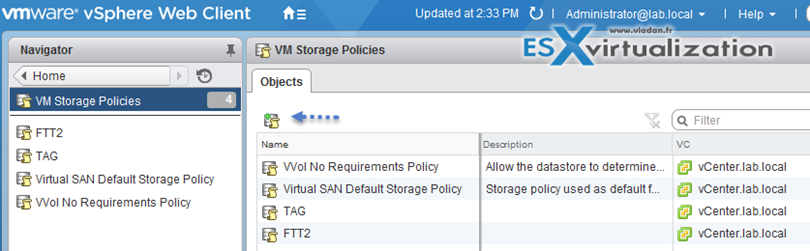

A storage policy can include multiple rule sets. Rules based on storage-specific data services and tag-based rules can be combined in the same storage policy. Where do you find VM Storage Policies?

Home > VM Storage Policies

Guide: vSphere Storage Guide on p. 225

Storage rules based on:

- Rules based on storage-specific data services – VSAN and VVOLs use VASA to surface storage capabilities in the VM Storage Policies interface. To supply information about underlying storage to vCenter Server, Virtual SAN and Virtual Volumes use storage providers, also called VASA providers. Storage information and datastore characteristics appear in the VM Storage Policies interface of the vSphere Web Client as data services offered by the specific datastore type.

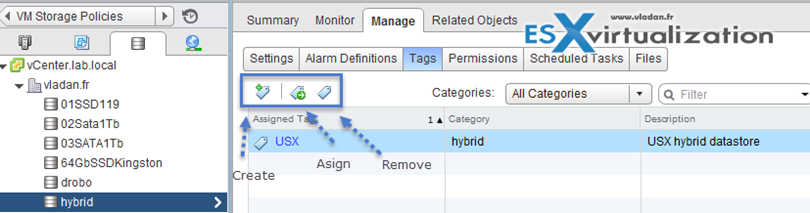

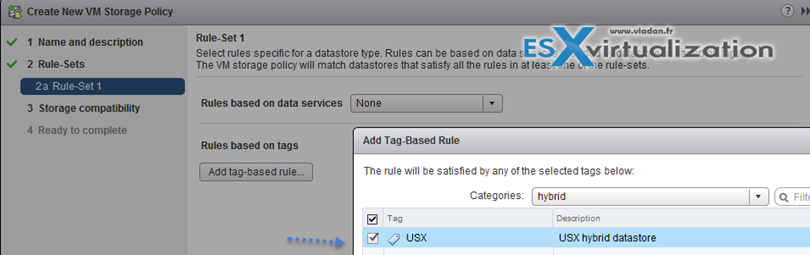

- Rules based on tags – created by tagging a specific datastore. More than one tag can be applied per datastore.

First, you must tag a datastore.

Then go back to VM Storage Policies > click the add new policy icon > enter a meaningful name > click Add tag-based rule > choose your rule from the category drop-down menu > click Next > choose a compatible datastore.
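The "compatible datastore" step at the end is basically a filter on tags. Here is a small Python sketch of that idea. It assumes a datastore counts as compatible when it carries at least one of the tags selected in the rule. The datastore names and tags are invented, and this is not the vSphere API.

```python
def compatible_datastores(datastores, rule_tags):
    """Return the names of datastores that carry at least one tag from the rule.

    datastores: mapping of datastore name -> set of tags applied to it
    rule_tags:  tags selected in the tag-based rule
    """
    wanted = set(rule_tags)
    return sorted(name for name, tags in datastores.items() if wanted & set(tags))


# Hypothetical inventory: more than one tag can be applied per datastore.
datastores = {
    "ds-gold-01": {"Gold"},
    "ds-silver-01": {"Silver"},
    "ds-mixed-01": {"Gold", "Replicated"},
}

print(compatible_datastores(datastores, {"Gold"}))  # ['ds-gold-01', 'ds-mixed-01']
```

If the list comes back empty, no datastore has been tagged yet with a tag the rule asks for, which is why tagging has to come first.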

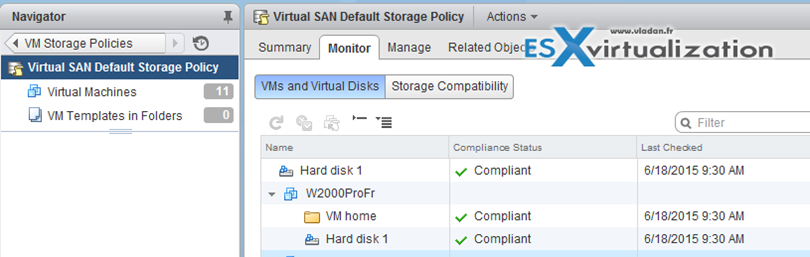

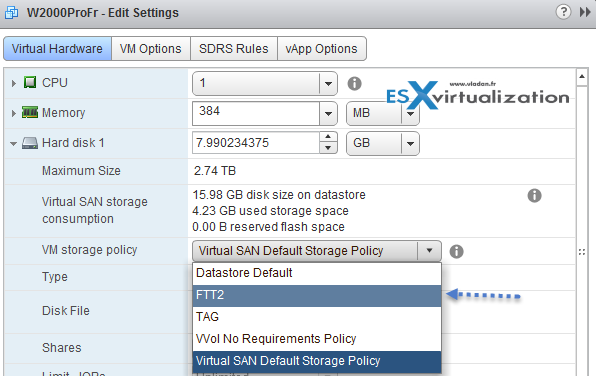

Check compliance via VM Storage Policies > Storage policy > Monitor.

To switch a VM from the default storage policy to the newly created one, first change the policy at the VM level, then check back at VM Storage Policies > Storage policy > Monitor.

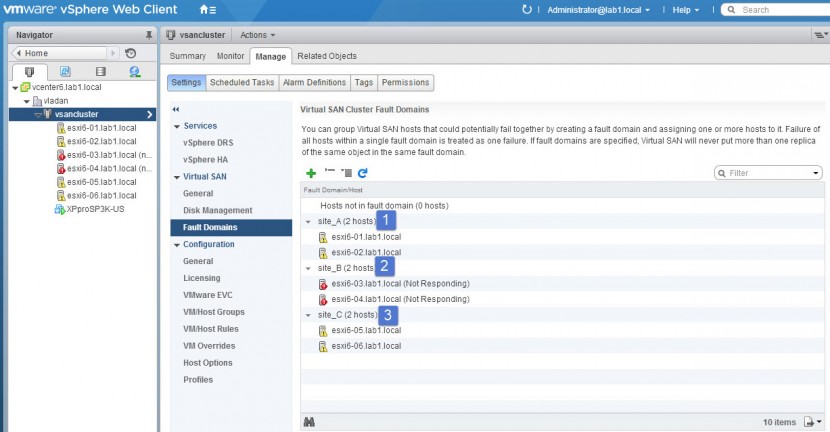

Enable/Disable Virtual SAN Fault Domains

Fault domains in a VSAN environment let you spread replicas across different locations (for example, different racks) so that you do not put all your eggs in one basket, quite literally. Let's say you have 4 hosts per rack and you want redundancy in case multiple components within a single rack fail. VSAN considers each fault domain as a single host.

Virtual SAN fault domains ensure that replicas of VM data are spread across the defined failure domains. Fault domains provide the ability to tolerate:

- Rack failures

- Storage controller failures

- Network failures

- Power failures

Image courtesy of VMware

Where to manage VSAN fault domains?

Hosts and Clusters > Cluster > Manage > Settings > Virtual SAN > Fault Domains

If a host is not a member of a fault domain, Virtual SAN interprets it as a separate domain.
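Here is a short Python sketch of the two rules above: a host without a fault domain counts as its own domain, and replicas land in different domains. It is a simplified illustration with invented host and rack names. Real VSAN placement also involves witness components and capacity checks, which are ignored here.

```python
def effective_fault_domains(hosts, assignments):
    """Group hosts by fault domain; an unassigned host forms its own domain."""
    domains = {}
    for host in hosts:
        domain = assignments.get(host, host)  # fall back to the host itself
        domains.setdefault(domain, []).append(host)
    return domains


def place_replicas(domains, replicas):
    """Pick one host from each of `replicas` distinct fault domains."""
    if replicas > len(domains):
        raise ValueError("not enough fault domains for the requested replicas")
    chosen = sorted(domains)[:replicas]
    return {domain: domains[domain][0] for domain in chosen}


hosts = ["esx1", "esx2", "esx3", "esx4", "esx5"]
assignments = {"esx1": "rack-A", "esx2": "rack-A", "esx3": "rack-B", "esx4": "rack-B"}

domains = effective_fault_domains(hosts, assignments)
print(len(domains))                # 3: rack-A, rack-B, and esx5 on its own
print(place_replicas(domains, 2))  # two hosts, each in a different fault domain
```

Because placement works per domain, two replicas never end up on esx1 and esx2 together: both hosts sit in rack-A, so VSAN sees them as one failure unit.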

VMware recommends configuring at least 3 fault domains in the VSAN cluster and assigning the same number of hosts to each fault domain. You do not have to assign every host to a fault domain, however.

Note: If a host is moved to another cluster, VSAN hosts retain their fault domain assignments.

Tools

- vSphere Installation and Setup Guide

- vSphere Storage Guide

- Multipathing Configuration for Software iSCSI Using Port Binding

- vSphere Client / vSphere Web Client
