The VMware VCP certification exam for vSphere 6 is now available, and you can register for it. We'll start covering the VCP6-DCV sections to help folks studying for the VCP6-DCV certification exam. Today’s topic is VCP6-DCV Objective 3.1 – Manage vSphere Storage Virtualization. It's quite a large chapter, but it's broken into several sections, always with screenshots. We will use the vSphere Web Client only (I know it's not everyone's favorite, but new features aren't exposed in the old C# client anymore…).

Due to the VMware re-certification policy, the VCP certification now has an expiration date. You can renew by passing a delta exam while still holding a current VCP, or by passing a VCAP exam. For full exam coverage I created a dedicated VCP6-DCV page. Or, if you’re not preparing for the VCP6-DCV, you might just want to look at some how-tos, news, and videos about vSphere 6 – check out my vSphere 6 page.

vSphere Knowledge

- Identify storage adapters and devices

- Identify storage naming conventions

- Identify hardware/dependent hardware/software iSCSI initiator requirements

- Compare and contrast array thin provisioning and virtual disk thin provisioning

- Describe zoning and LUN masking practices

- Scan/Rescan storage

- Configure FC/iSCSI LUNs as ESXi boot devices

- Create an NFS share for use with vSphere

- Enable/Configure/Disable vCenter Server storage filters

- Configure/Edit hardware/dependent hardware initiators

- Enable/Disable software iSCSI initiator

- Configure/Edit software iSCSI initiator settings

- Configure iSCSI port binding

- Enable/Configure/Disable iSCSI CHAP

- Determine use case for hardware/dependent hardware/software iSCSI initiator

- Determine use case for and configure array thin provisioning

—————————————————————————————————–

- The exam duration is 130 minutes

- The number of questions is 70

- The passing Score is 300

- Price = $250.00

Identify storage adapters and devices

We will be heavily using one document – vSphere 6 Storage Guide PDF.

VMware vSphere 6 supports different classes of adapters: SCSI, iSCSI, RAID, Fibre Channel, Fibre Channel over Ethernet (FCoE), and Ethernet. ESXi accesses adapters directly through device drivers in the VMkernel.

Note that you must enable certain adapters (like the software iSCSI adapter) before you can use them, but this isn't new, as it was already the case in previous releases.

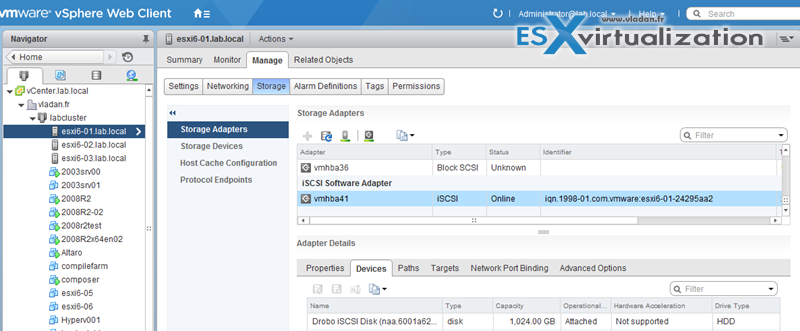

Where to check storage adapters?

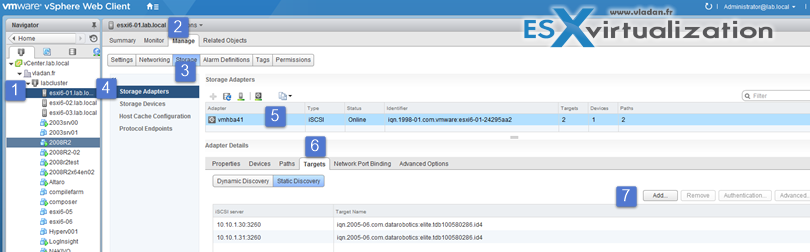

Web Client > Hosts and clusters > host > manage > storage > storage adapters

You can also check the storage devices there; that view basically shows all storage attached to the host…

Identify storage naming conventions

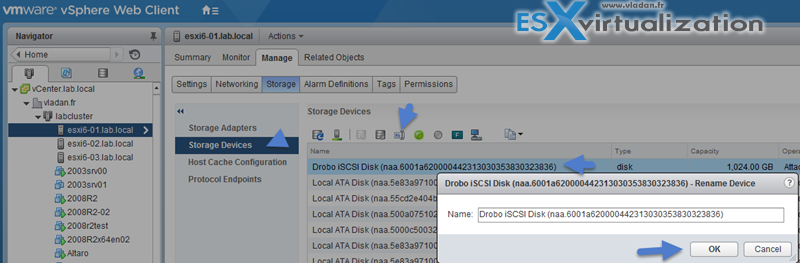

When you select the Devices tab (as in the image above), you'll see the storage devices that are accessible to the host. Depending on the type of storage, the ESXi host uses different algorithms and conventions to generate an identifier for each storage device. There are 3 types of identifiers:

- SCSI INQUIRY identifiers – the host queries a storage device via the SCSI INQUIRY command. The resulting data is used to generate a unique identifier in one of several formats (naa.number, t10.number, or eui.number), in accordance with the T10 standards.

- Path-based identifiers – e.g. mpx.vmhba1:C0:T1:L3, where vmhba1 is the name of the storage adapter, followed by the channel (C0), target (T1), and LUN (L3) numbers. An mpx. path is generated when the device does not provide a device identifier itself. Note that these generated identifiers are not persistent across reboots and can change.

- Legacy identifiers – In addition to the SCSI INQUIRY or mpx. identifiers, for each device, ESXi generates an alternative legacy name. The identifier has the following format:

vml.number

The legacy identifier includes a series of digits that are unique to the device.

Check via CLI to see all the details:

esxcli storage core device list

Note that the display name can be changed in the Web Client: select host > Manage > Storage > Storage Devices > select the device > click the rename icon.
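To make the three identifier families easier to remember, here is a minimal shell sketch that classifies an identifier by its prefix and splits a path-based name into adapter, channel, target, and LUN. The function names classify_device_id and parse_path_id are my own hypothetical helpers, not ESXi commands, and the code only looks at the string, it does not query a host.

```shell
# Hypothetical helpers (not part of ESXi): pure string handling only.

# Classify a device identifier by its prefix.
classify_device_id() {
    case "$1" in
        naa.*|t10.*|eui.*) echo "scsi-inquiry" ;;
        mpx.*)             echo "path-based" ;;
        vml.*)             echo "legacy" ;;
        *)                 echo "unknown" ;;
    esac
}

# Split a path-based name such as mpx.vmhba1:C0:T1:L3 into its parts.
parse_path_id() {
    p=${1#mpx.}                  # drop the mpx. prefix if present
    adapter=${p%%:*}             # vmhba1
    rest=${p#*:}                 # C0:T1:L3
    channel=${rest%%:*}          # C0
    rest=${rest#*:}              # T1:L3
    target=${rest%%:*}           # T1
    lun=${rest#*:}               # L3
    echo "adapter=$adapter channel=${channel#C} target=${target#T} lun=${lun#L}"
}

parse_path_id "mpx.vmhba1:C0:T1:L3"
# prints: adapter=vmhba1 channel=0 target=1 lun=3
```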

There are also Fibre Channel targets, which use World Wide Names (WWN):

- World Wide Port Names (WWPN)

- World Wide Node Names (WWNN)

Check vSphere Storage Guide p.64 for iSCSI naming conventions

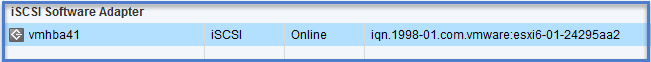

iSCSI names are basically similar to the World Wide Names (WWNs) used for FC devices. They are formatted in one of two ways; the most common is the IQN format.

iSCSI Qualified Name (IQN) Format

iqn.yyyy-mm.naming-authority:unique name,

where:

- yyyy-mm is the year and month when the naming authority was established.

- naming-authority is usually the reverse syntax of the Internet domain name of the naming authority. For example, the iscsi.vmware.com naming authority could have the iSCSI qualified name form of iqn.1998-01.com.vmware.iscsi. The name indicates that the vmware.com domain name was registered in January of 1998, and iscsi is a subdomain, maintained by vmware.com.

- unique name is any name you want to use, for example, the name of your host. The naming authority must make sure that any names assigned following the colon are unique, such as:

- iqn.1998-01.com.vmware.iscsi:name1

- iqn.1998-01.com.vmware.iscsi:name2

- iqn.1998-01.com.vmware.iscsi:name999

OR

Enterprise Unique Identifier (EUI) naming format

eui.16 hex digits.

Example: eui.0123456789ABCDEF
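If you want to sanity-check a name before typing it into an adapter, the two formats can be approximated with regular expressions. This is only a rough sketch: is_valid_iscsi_name is a hypothetical helper of mine, and the patterns are a loose reading of the formats described above, not a complete validation against the iSCSI standard.

```shell
# Hypothetical helper: loose format check for IQN and EUI names.
# Returns 0 (success) when the name looks valid, non-zero otherwise.
is_valid_iscsi_name() {
    printf '%s\n' "$1" | grep -Eq \
        -e '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9.-]+(:.+)?$' \
        -e '^eui\.[0-9A-Fa-f]{16}$'
}

# Usage:
#   is_valid_iscsi_name "iqn.1998-01.com.vmware.iscsi:name1" && echo ok
#   is_valid_iscsi_name "eui.0123456789ABCDEF" && echo ok
```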

Identify hardware/dependent hardware/software iSCSI initiator requirements

There are two types of iSCSI adapters:

- Hardware based – add-on iSCSI cards (can boot from SAN). These adapters offload the iSCSI and network processing, so host CPU activity is lower. Hardware adapters can be dependent or independent. Unlike dependent adapters, independent adapters do not use VMkernel adapters for connections to the storage.

- Software based – activated after installation (can boot from SAN only through a network adapter that supports iBFT). Brings a very light overhead. The software iSCSI adapter uses a VMkernel adapter to connect to iSCSI storage over a storage network.

Software and dependent hardware adapters can use bidirectional CHAP; independent hardware adapters support unidirectional CHAP only.

Compare and contrast array thin provisioning and virtual disk thin provisioning

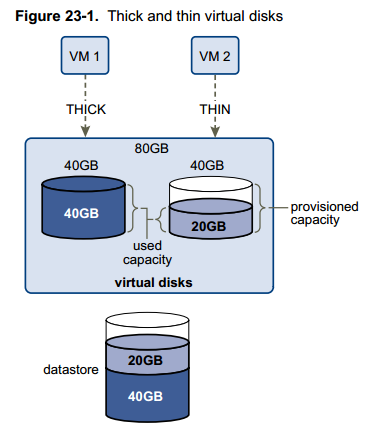

Virtual disk thin provisioning allocates only a small amount of disk space at the storage level, while the guest OS sees the full provisioned size. The thin disk grows as more data is added or applications are installed in the VM. It's therefore possible to over-allocate the datastore space, but this brings risks, so it's important to monitor actual storage usage to avoid running out of physical storage space.

A picture is worth a thousand words… see p. 254 of the vSphere Storage Guide.

- Thick Lazy Zeroed – the default thick format. Space is allocated at creation, but the physical device is not erased during the creation process; blocks are zeroed on demand instead.

- Thick Eager Zeroed – used for FT-protected VMs. Space is allocated at creation and zeroed immediately. Any data remaining on the physical device is zeroed out when the virtual disk is created, so Eager Zeroed Thick disks take longer to create.

- Thin provision – as in the image above. Starts small and at first uses only as much datastore space as the disk needs for its initial operations. If the thin disk needs more space later, it can grow to its maximum capacity and occupy the entire datastore space provisioned to it. A thin disk can be inflated (thin > thick) via the datastore browser (right click vmdk > inflate).

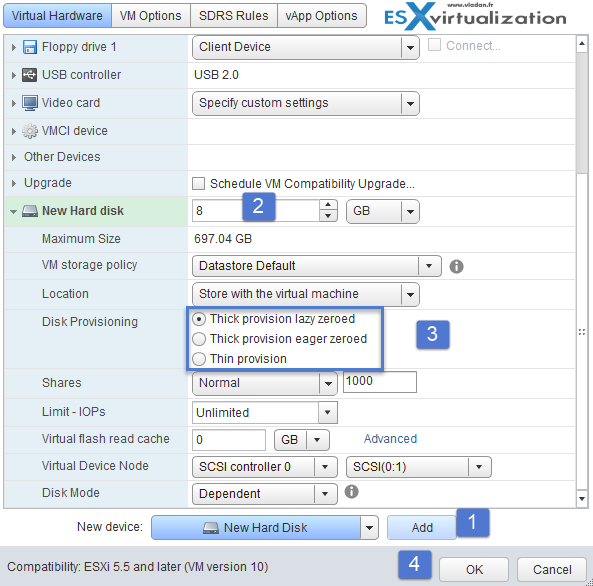

Check the different VMDK disk provisioning options when creating a new VM or adding an additional disk to an existing VM.
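The same three formats can also be chosen from the ESXi shell with vmkfstools, using -c for the size and -d for the disk format. Below is a small sketch; create_vmdk is a hypothetical wrapper of mine, and the datastore path in the example is made up.

```shell
# Hypothetical wrapper around vmkfstools (run in the ESXi shell).
# create_vmdk SIZE FORMAT PATH
#   FORMAT: zeroedthick (thick lazy zeroed), eagerzeroedthick, or thin
create_vmdk() {
    case "$2" in
        zeroedthick|eagerzeroedthick|thin) ;;
        *) echo "unsupported format: $2" >&2; return 1 ;;
    esac
    vmkfstools -c "$1" -d "$2" "$3"
}

# Example (hypothetical datastore and VM folder):
#   create_vmdk 10G thin /vmfs/volumes/datastore1/vm01/data.vmdk
```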

Thin-provisioned LUN

Array Thin Provisioning and VMFS Datastores on p. 257.

ESXi also supports thin-provisioned LUNs. When a LUN is thin-provisioned, the storage array reports the LUN's logical size, which might be larger than the real physical capacity backing that LUN. A VMFS datastore that you deploy on the thin-provisioned LUN can detect only the logical size of the LUN.

For example, if the array reports 2TB of storage while in reality the array provides only 1TB, the datastore considers 2TB to be the LUN's size. As the datastore grows, it cannot determine whether the actual amount of physical space is still sufficient for its needs.
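The 2TB versus 1TB example boils down to a simple ratio. As a quick illustration, this sketch computes the over-commitment percentage from the logical and physical sizes; overcommit_pct is a hypothetical helper, and the sizes are given in GB.

```shell
# Hypothetical helper: over-commitment as an integer percentage.
# overcommit_pct LOGICAL_GB PHYSICAL_GB
overcommit_pct() {
    echo $(( $1 * 100 / $2 ))
}

overcommit_pct 2048 1024   # 2TB reported by the array, 1TB physically present
# prints: 200
```

Anything above 100 means the datastore can believe it has space that the array cannot actually deliver.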

Via vSphere Storage APIs – Array Integration (VAAI), the host CAN be aware of underlying thin-provisioned LUNs. VAAI lets the array know about datastore space that has been freed when files are deleted or removed, which allows the array to reclaim the freed blocks.

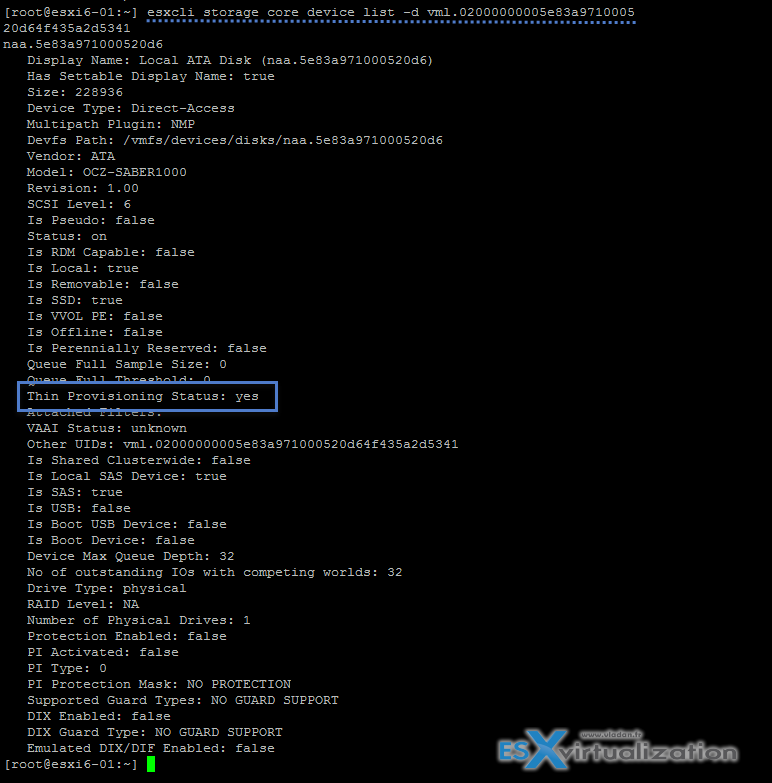

Check thin-provisioned devices via CLI:

esxcli storage core device list -d vml.xxxxxxxxxxxxxxxx
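When a host has many devices, it is handy to filter the full device list for the ones reported as thin provisioned. The sketch below assumes the output layout I see on my hosts: the device identifier on an unindented line, followed by indented fields including "Thin Provisioning Status". list_thin_devices is a hypothetical helper that reads that output on stdin.

```shell
# Hypothetical helper: print the identifiers of devices whose
# "Thin Provisioning Status" field is "yes".
# Assumes unindented lines are device identifiers and fields are indented.
list_thin_devices() {
    awk '
        /^[^ \t]/ { dev = $1 }
        /Thin Provisioning Status: yes/ { print dev }
    '
}

# On a host:
#   esxcli storage core device list | list_thin_devices
```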

Describe zoning and LUN masking practices

Zoning is used with FC SAN devices. It controls the SAN topology by defining which HBAs can connect to which targets. We say that we zone a LUN. Zoning:

- Protects the LUN from access by undesired devices, which could corrupt data

- Can be used to separate different environments (clusters)

- Reduces the number of targets and LUNs presented to a host

- Controls and isolates paths in a fabric.

Best practice? Single-initiator, single-target zoning.

LUN masking

esxcfg-scsidevs -m (the -m switch lists the VMFS datastores and the devices they map to)

esxcfg-mpath -L | grep naa.5000144fd4b74168

esxcli storage core claimrule add -r 500 -t location -A vmhba35 -C 0 -T 1 -L 0 -P MASK_PATH

esxcli storage core claimrule load

esxcli storage core claiming reclaim -d naa.5000144fd4b74168

Unmask a LUN

esxcli storage core claimrule remove -r 500

esxcli storage core claimrule load

esxcli storage core claiming unclaim -t location -A vmhba35 -C 0 -T 1 -L 0

esxcli storage core adapter rescan -A vmhba35

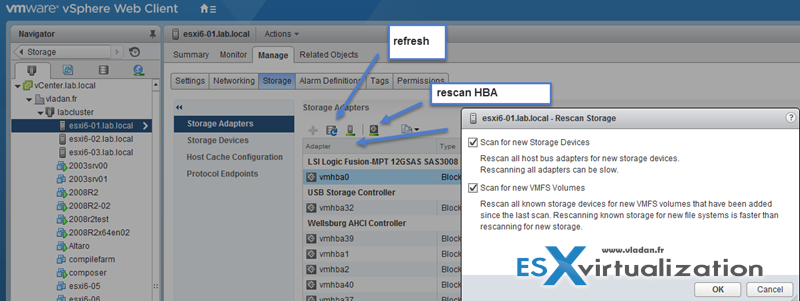

Scan/Rescan storage

Perform the manual rescan each time you make one of the following changes.

- Zone a new disk array on a SAN.

- Create new LUNs on a SAN.

- Change the path masking on a host.

- Reconnect a cable.

- Change CHAP settings (iSCSI only).

- Add or remove discovery or static addresses (iSCSI only).

- Add a single host to the vCenter Server after you have edited or removed from the vCenter Server a datastore shared by the vCenter Server hosts and the single host.

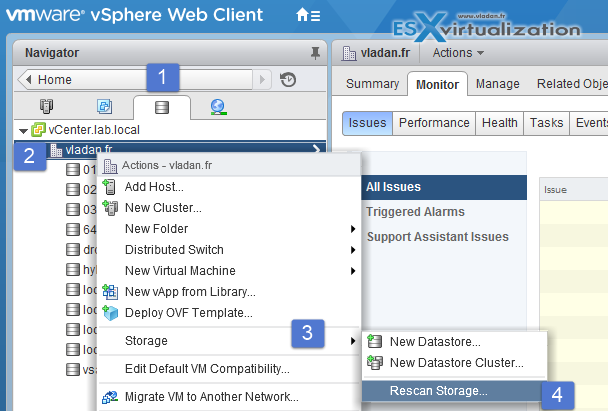

You can scan at the host level or at the datacenter level (storage > select datacenter > right click > Storage > Rescan storage).

Click host > manage > storage > storage adapters

- Scan for New Storage Device – Rescans HBAs for new storage devices

- Scan for New VMFS Volumes – Rescans known storage devices for VMFS volumes
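The two rescan options also have command-line equivalents in the ESXi shell. Here is a minimal sketch that runs both in the same order as the UI; rescan_all is just a hypothetical wrapper name.

```shell
# Hypothetical wrapper (run in the ESXi shell).
rescan_all() {
    # Scan all HBAs for new storage devices
    esxcli storage core adapter rescan --all
    # Scan known storage devices for new VMFS volumes
    esxcli storage filesystem rescan
}

# To rescan a single adapter instead (as in the masking example):
#   esxcli storage core adapter rescan -A vmhba35
```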

Configure FC/iSCSI LUNs as ESXi boot devices

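There are a few requirements. With iSCSI, an independent hardware adapter can boot from a LUN directly, while software and dependent hardware iSCSI need a network adapter that supports iBFT.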

Boot from SAN is supported on FC, iSCSI, and FCoE.

- 1:1 ratio – Each host must have access to its own boot LUN only, not the boot LUNs of other hosts.

- BIOS support – Enable the boot adapter in the host BIOS

- HBA config – Enable and correctly configure the HBA, so it can access the boot LUN.

Docs:

- Boot from FC SAN – vSphere Storage Guide on p. 49

- Boot from iSCSI SAN – p.107.

- Boot from Software FCoE – P.55

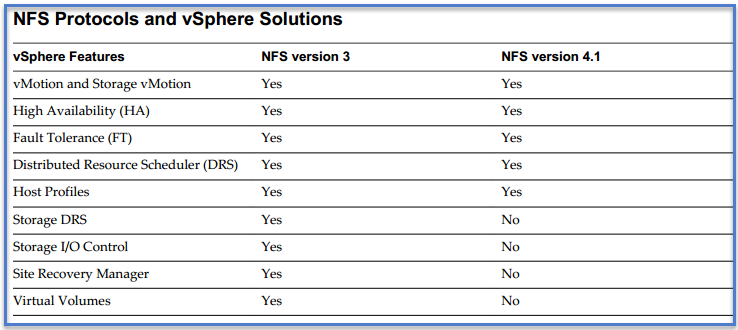

Create an NFS share for use with vSphere

An NFS client built into ESXi uses the Network File System (NFS) protocol over TCP/IP to access a designated NFS volume that is located on a NAS server. The ESXi host can mount the volume and use it for its storage needs. vSphere supports versions 3 and 4.1 of the NFS protocol.

How? By exporting the NFS volume as NFS v3 or v4.1 (latest release). The ESXi host needs root access to the volume. Different storage vendors have different methods of enabling this, but typically it is done on the NAS server with the no_root_squash option. If the NAS server does not grant root access, you might still be able to mount the NFS datastore – but read-only.

NFS uses a VMkernel port, so you need to configure one.
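Once the export exists and the VMkernel port is configured, the datastore can be mounted from the ESXi shell as well as from the Web Client. The sketch below wraps the two esxcli commands; the function names, the NAS host name, the export path, and the subnet in the comment are all hypothetical, and the exports line is only what a typical Linux NFS server would use.

```shell
# On a Linux NFS server, an /etc/exports entry might look like this
# (hypothetical path and subnet):
#   /export/vsphere 192.168.10.0/24(rw,sync,no_root_squash)

# Hypothetical wrappers (run in the ESXi shell).
# mount_nfs3 NAS_HOST EXPORT_PATH DATASTORE_NAME
mount_nfs3() {
    esxcli storage nfs add --host="$1" --share="$2" --volume-name="$3"
}

# mount_nfs41 NAS_HOSTS EXPORT_PATH DATASTORE_NAME
# NAS_HOSTS may be a comma-separated list of server addresses.
mount_nfs41() {
    esxcli storage nfs41 add --hosts="$1" --share="$2" --volume-name="$3"
}

# Example:
#   mount_nfs3 nas01 /export/vsphere nfs-ds01
```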

v3 and v4.1 compared:

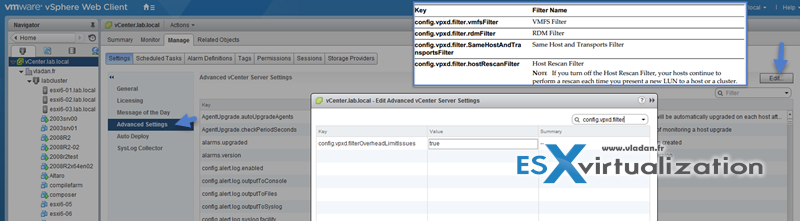

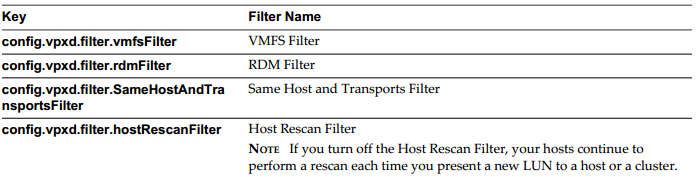

Enable/Configure/Disable vCenter Server storage filters

When you perform VMFS datastore management operations, vCenter Server uses default storage protection filters. The filters help you to avoid storage corruption by retrieving only the storage devices that can be used for a particular operation. Unsuitable devices are not displayed for selection. p. 167 of vSphere 6 storage guide.

Where? Hosts and clusters > vCenter server > manage > settings > advanced settings

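In the Value box, type False for the appropriate key.

From the vSphere Storage Guide, the filter keys are:

- config.vpxd.filter.vmfsFilter – VMFS Filter

- config.vpxd.filter.rdmFilter – RDM Filter

- config.vpxd.filter.SameHostAndTransportsFilter – Same Host and Transports Filter

- config.vpxd.filter.hostRescanFilter – Host Rescan Filter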

Configure/Edit hardware/dependent hardware initiators

Where?

Host and Clusters > Host > Manage > Storage > Storage Adapters.

It's possible to rename the adapters from the default given name. It's possible to configure the dynamic and static discovery for the initiators.

It's not so easy to find in the Web Client, whereas before we used to do it with our eyes closed in the vSphere Client…

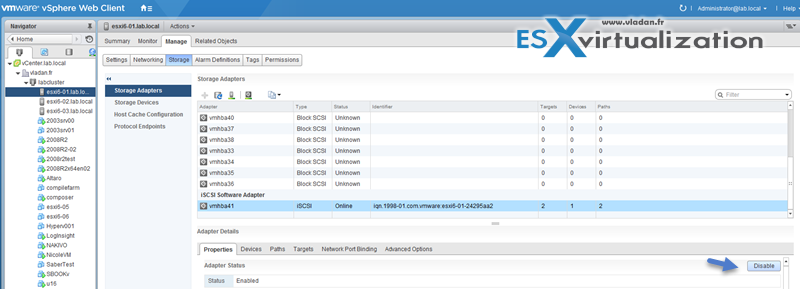

Enable/Disable software iSCSI initiator

Configure/Edit software iSCSI initiator settings

As said above, to configure and edit the software iSCSI initiator settings, you can use the Web Client or the C# client. Web Client > Host and Clusters > Host > Manage > Storage > Storage Adapters

And there you can:

- View/Attach/Detach Devices from the Host

- Enable/Disable Paths

- Enable/Disable the Adapter

- Change iSCSI Name and Alias

- Configure CHAP

- Configure Dynamic Discovery and (or) Static Discovery

- Add Network Port Bindings to the adapter

- Configure iSCSI advanced options

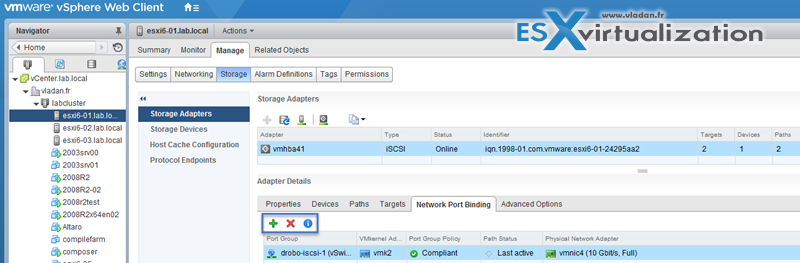
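For those who prefer the command line, enabling the software initiator and adding a dynamic discovery (Send Targets) address can be sketched as below. The function names are hypothetical wrappers of mine, and the adapter name and address in the example are placeholders; check the real adapter name with the list command first.

```shell
# Hypothetical wrappers (run in the ESXi shell).

# Enable the software iSCSI initiator and show the resulting adapters.
enable_sw_iscsi() {
    esxcli iscsi software set --enabled=true
    esxcli iscsi adapter list
}

# Disable the software iSCSI initiator.
disable_sw_iscsi() {
    esxcli iscsi software set --enabled=false
}

# add_send_target ADAPTER ADDRESS[:PORT]
add_send_target() {
    esxcli iscsi adapter discovery sendtarget add --adapter="$1" --address="$2"
}

# Example (placeholder adapter and address):
#   enable_sw_iscsi
#   add_send_target vmhba33 192.168.10.50:3260
```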

Configure iSCSI port binding

Port binding allows you to configure multipathing when:

- iSCSI ports of the array target must reside in the same broadcast domain and IP subnet as the VMkernel adapters.

- All VMkernel adapters used for iSCSI port binding must reside in the same broadcast domain and IP subnet.

- All VMkernel adapters used for iSCSI connectivity must reside in the same virtual switch.

- Port binding does not support network routing.

Do not use port binding when any of the following conditions exist:

- Array target iSCSI ports are in a different broadcast domain and IP subnet.

- VMkernel adapters used for iSCSI connectivity exist in different broadcast domains, IP subnets, or use different virtual switches.

- Routing is required to reach the iSCSI array.

Note: The VMkernel adapters must be configured with a single Active uplink, with all other uplinks set to Unused (not Active/Standby). Otherwise they are not listed…

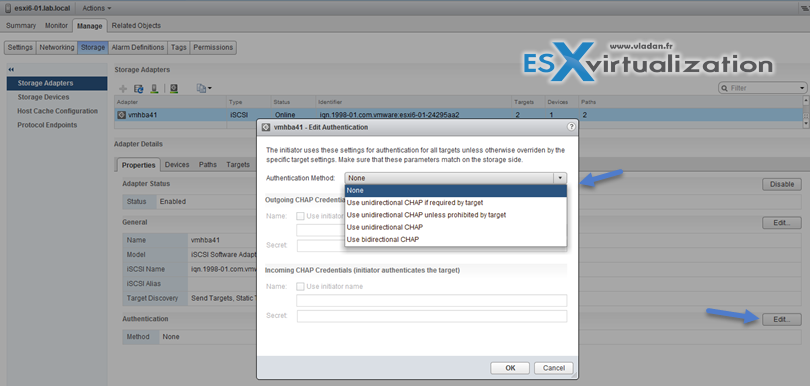
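Port binding can also be done from the ESXi shell by attaching VMkernel adapters to the iSCSI adapter. A minimal sketch follows; bind_vmk and list_bindings are hypothetical wrapper names, and vmhba33, vmk1, and vmk2 are placeholder names for your own adapter and VMkernel ports.

```shell
# Hypothetical wrappers (run in the ESXi shell).

# bind_vmk ADAPTER VMK_NIC
bind_vmk() {
    esxcli iscsi networkportal add --adapter="$1" --nic="$2"
}

# list_bindings ADAPTER
list_bindings() {
    esxcli iscsi networkportal list --adapter="$1"
}

# Example: bind two VMkernel ports for multipathing, then verify.
#   bind_vmk vmhba33 vmk1
#   bind_vmk vmhba33 vmk2
#   list_bindings vmhba33
```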

Enable/Configure/Disable iSCSI CHAP

Where?

Web Client > Host and Clusters > Host > Manage > Storage > Storage Adapters > Properties > Authentication (Edit button).

p. 98 of vSphere 6 Storage Guide.

Challenge Handshake Authentication Protocol (CHAP) verifies the legitimacy of initiators that access targets on the network.

Unidirectional CHAP – target authenticates the initiator, but the initiator does not authenticate the target.

Bidirectional CHAP – an additional level of security enables the initiator to authenticate the target. VMware supports this method for software and dependent hardware iSCSI adapters only.

CHAP methods:

- None – CHAP authentication is not used.

- Use unidirectional CHAP if required by target – Host prefers non-CHAP connection but can use CHAP if required by target.

- Use unidirectional CHAP unless prohibited by target – Host prefers CHAP, but can use non-CHAP if target does not support CHAP.

- Use unidirectional CHAP – Requires CHAP authentication.

- Use bidirectional CHAP – Host and target support bidirectional CHAP.

CHAP does not encrypt; it only authenticates the initiator and target.
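On the command line, the unidirectional methods listed above correspond to the esxcli CHAP levels prohibited, discouraged, preferred, and required. The sketch below maps short labels to those levels and builds the command for unidirectional CHAP. The short labels and both function names are my own hypothetical choices, and the adapter, name, and secret in the example are placeholders.

```shell
# Hypothetical helper: map a short label for each Web Client method
# to the esxcli --level value.
chap_level() {
    case "$1" in
        none)              echo prohibited ;;   # None
        if-required)       echo discouraged ;;  # ...if required by target
        unless-prohibited) echo preferred ;;    # ...unless prohibited by target
        required)          echo required ;;     # Use unidirectional CHAP
        *) return 1 ;;
    esac
}

# Hypothetical wrapper (run in the ESXi shell).
# set_uni_chap ADAPTER LABEL CHAP_NAME CHAP_SECRET
set_uni_chap() {
    esxcli iscsi adapter auth chap set --adapter="$1" --direction=uni \
        --level="$(chap_level "$2")" --authname="$3" --secret="$4"
}

# Example (placeholder values):
#   set_uni_chap vmhba33 required esx01 MyChapSecret
```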

Determine use case for hardware/dependent hardware/software iSCSI initiator

It's fairly simple, as we know that if we use the software iSCSI adapter we do not have to buy additional hardware and we're still able to “hook” into iSCSI SAN.

The case for the dependent hardware iSCSI adapter: it depends on VMkernel networking but offloads iSCSI processing to the adapter, which speeds up processing and reduces CPU overhead.

On the other hand, the Independent Hardware iSCSI Adapter has its own networking, iSCSI configuration, and management interfaces. So you must go through the BIOS and the device configuration in order to use it.

Determine use case for and configure array thin provisioning

Some arrays support thin-provisioned LUNs while others do not. The benefit is that more (visible) capacity is offered to the ESXi host while only what's needed is consumed at the datastore level. Watch out for over-subscription, however; proper monitoring is needed. So thin provisioning is possible at the datastore level with thin-provisioned virtual disks, or on the array with thin-provisioned LUNs.
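On the host side, there are two commands worth knowing for thin-provisioned LUNs: one to check the VAAI status of a device and one to ask the array to reclaim freed blocks on a VMFS datastore. Here is a small sketch; vaai_status and reclaim_space are hypothetical wrapper names, and the device ID and datastore label in the example are placeholders.

```shell
# Hypothetical wrappers (run in the ESXi shell).

# vaai_status DEVICE_ID: show VAAI primitive support for a device
vaai_status() {
    esxcli storage core device vaai status get -d "$1"
}

# reclaim_space DATASTORE_LABEL: reclaim freed blocks on a thin-provisioned LUN
reclaim_space() {
    esxcli storage vmfs unmap --volume-label="$1"
}

# Example (placeholder device and datastore):
#   vaai_status naa.5000144fd4b74168
#   reclaim_space datastore1
```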

Tools

- vSphere Installation and Setup Guide

- vSphere Storage Guide

- Best Practices for Running VMware vSphere® on iSCSI

- vSphere Client / vSphere Web Client


Hi All

When I try to reclaim the LUN using the command esxcli storage core claiming unclaim, I receive the error message “Unable to unclaim path. Some paths may be left in an unclaimed state. You will need to claim them manually using the appropriate commands or wait for periodic path claiming to reclaim them automatically”. All previous commands completed successfully. Could you help me?

Best Regards.

Vladan :

I too am getting the same error (Unable to unclaim path …). The only workaround I have found so far is to unmount the datastore from the host using vCenter before starting the masking process. Otherwise there are lots of processes attached to the device (https://communities.vmware.com/message/2426294#2426294).

The video here (https://www.youtube.com/watch?v=pyNZkZmTKQQ) makes masking and unmasking look easy, but this is for ESXi 5.0. This other video (https://youtu.be/j4_Gt1lf5HI?t=1170) claims that ESXi (version 6.5) will not allow the last path to be masked.

Is there something missing from the example?

Thanks.