Another vendor is jumping on the hyper-converged bandwagon: Microsoft. Storage Spaces Direct is in preview in Windows Server 2016, the upcoming server release from Microsoft. Storage Spaces Direct works on the same principle as VMware VSAN: it leverages the local disks of each host (DAS storage) to create a shared pool of clustered storage visible to all the hosts in the cluster. This shared storage can then be presented as a datastore for VMs.

Storage Spaces Direct is an evolution of Storage Spaces, based on ReFS. We looked at ReFS in an earlier article, The resilient file system (ReFS). ReFS-formatted volumes used in conjunction with Storage Spaces use striping and data mirroring. ReFS has a built-in scanner, called the scrubber, which is used for auto-healing: it runs periodically in the background, scans the volume for errors, and corrects them proactively.
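To make the scrub-and-heal idea more concrete, here is a minimal Python sketch. It is only an illustration and not how ReFS is actually implemented: it assumes a two-way mirror and a checksum stored alongside each block, and the names `MirroredVolume`, `write` and `scrub` are made up for this example.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Checksum used to detect silent corruption (SHA-256 is an assumption)."""
    return hashlib.sha256(data).hexdigest()


class MirroredVolume:
    """Toy two-way mirror: each block is stored twice, plus one checksum."""

    def __init__(self):
        self.copies = [{}, {}]   # two mirror copies: block_id -> data
        self.checksums = {}      # block_id -> expected checksum

    def write(self, block_id: int, data: bytes) -> None:
        for copy in self.copies:
            copy[block_id] = data
        self.checksums[block_id] = checksum(data)

    def scrub(self):
        """Scan every block and repair bad copies from a good mirror copy.

        Returns a list of (block_id, copy_index) pairs that were repaired.
        """
        repaired = []
        for block_id, expected in self.checksums.items():
            # Find any copy whose content still matches the checksum.
            good = next(
                (c[block_id] for c in self.copies
                 if checksum(c[block_id]) == expected),
                None,
            )
            if good is None:
                continue  # every copy is damaged: nothing to heal from
            for index, copy in enumerate(self.copies):
                if checksum(copy[block_id]) != expected:
                    copy[block_id] = good
                    repaired.append((block_id, index))
        return repaired


vol = MirroredVolume()
vol.write(0, b"hello")
vol.copies[1][0] = b"hellx"   # simulate silent corruption on one mirror
repaired = vol.scrub()        # the bad copy is rewritten from the good one
```

In a real system the scrubber would run on a schedule in the background; here `scrub()` is simply called once by hand.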

With the Windows Server Technical Preview comes the newly introduced Storage Spaces Direct, which lets you build HA storage systems using storage nodes with only local storage, that is, disk devices that are internal to each storage node (no shared enclosure).

To provide this function, the current Windows Server 2012 R2 Storage Spaces needs a shared JBOD, which is basically an external enclosure. This will no longer be needed with Server 2016 and Storage Spaces Direct. Storage Spaces Direct uses SMB3 for internal communication, including SMB Direct and SMB Multichannel, for low-latency, high-throughput storage.

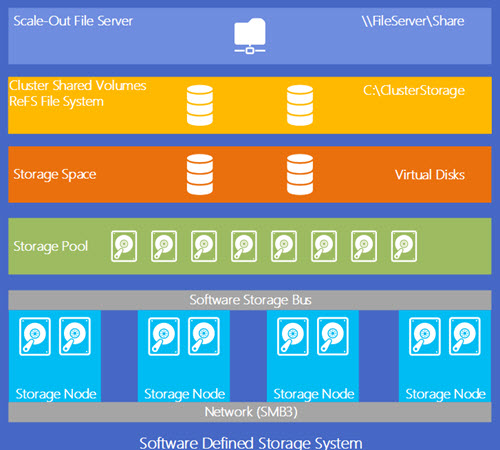

Let's look at the whole picture (courtesy of MSDN):

Quote from MSDN:

The Storage Spaces Direct stack includes the following, starting from the bottom:

Hardware: The storage system consisting of a minimum of four storage nodes with local storage. Each storage node can have internal disks, or disks in an external SAS connected JBOD enclosure. The disk devices can be SATA disks, NVMe disks or SAS disks.

Software Storage Bus: The Software Storage Bus spans all the storage nodes and brings together the local storage in each node, so all disks are visible to the Storage Spaces layer above.

Storage Pool: The storage pool spans all local storage across all the nodes.

Storage Spaces: Storage Spaces (aka virtual disks) provide resiliency to disk or node failures as data copies are stored on different storage nodes.

Resilient File System (ReFS): ReFS provides the file system in which the Hyper-V VM files are stored. ReFS is a premier file system for virtualized deployments and includes optimizations for Storage Spaces such as error detection and automatic correction. In addition, ReFS provides accelerations for VHD(X) operations such as fixed VHD(X) creation, dynamic VHD(X) growth, and VHD(X) merge.

Clustered Shared Volumes: CSVFS layers above ReFS to bring all the mounted volumes into a single namespace.

Scale-Out File Server: This is the top layer of the storage stack that provides remote access to the storage system using the SMB3 access protocol.
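The Storage Spaces layer in the list above keeps data copies on different storage nodes so that a node failure does not lose data. The Python sketch below illustrates that idea only. It assumes a simple round-robin placement of fixed-size chunks, which is not taken from MSDN, and the names `place_copies` and `survives` are hypothetical.

```python
def place_copies(chunk_count, nodes, copies=2):
    """Place each chunk's copies on distinct nodes (round-robin, assumed policy)."""
    if copies > len(nodes):
        raise ValueError("need at least as many nodes as copies")
    placement = {}
    for chunk in range(chunk_count):
        # Consecutive offsets modulo the node count never repeat a node
        # as long as copies <= len(nodes).
        placement[chunk] = [
            nodes[(chunk + k) % len(nodes)] for k in range(copies)
        ]
    return placement


def survives(placement, failed_nodes):
    """True if every chunk still has at least one copy on a healthy node."""
    return all(
        any(node not in failed_nodes for node in holders)
        for holders in placement.values()
    )


# Four nodes, matching the minimum quoted above.
nodes = ["node1", "node2", "node3", "node4"]
two_way = place_copies(8, nodes, copies=2)
three_way = place_copies(8, nodes, copies=3)

single_failure_ok = all(survives(two_way, {n}) for n in nodes)
double_failure_ok = survives(two_way, {"node1", "node2"})
three_way_double_ok = survives(three_way, {"node1", "node2"})
```

With two copies, any single node can fail, but losing two nodes that hold both copies of the same chunk loses data. A third copy covers that case at the cost of more raw capacity.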

The new storage system will be focused towards Hyper-V.

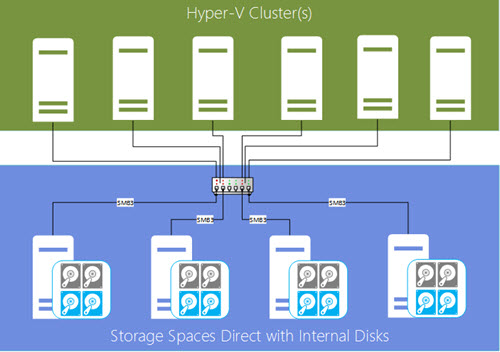

A full architectural overview?

From the storage perspective, you'd have Windows Server 2016 providing the Storage Spaces Direct function and, on the other hand, you'd have the Hyper-V cluster.

In the preview, the configuration is done through PowerShell, not through the Server Manager GUI (who knows why?). Wait and see…

You can test the setup by following the guide on MSDN.

If I'm getting it right, when Windows Server 2016 (with full GUI) plays the role of hypervisor at the same time as it provides the storage services, it will obviously not be as “slim” as it should be. Perhaps that's why the architecture picture above separates the Storage Spaces Direct cluster from the Hyper-V cluster?

What I would imagine is a bunch of Hyper-V hosts (at least 4, from what I could read) that participate in the storage layer at the same time (like VMware VSAN does). You manage it via Server Manager from a remote host and that's it.

If one had to construct the Storage Spaces Direct cluster first and only then “hook” the Hyper-V cluster to it, it would lose a bit of the beauty of simplicity… Don't you think?

Source: Storage Spaces Direct

My understanding, from reading the guide and holding a certification in current (Server 2012 R2 + System Center 2012 R2) Microsoft virtualization (70-409 exam), is that Microsoft's best practice is to keep Storage Spaces clustering and Hyper-V clustering separate. While nothing prevented you from building a Scale-Out File Server cluster, installing the Hyper-V role, and running VMs on your SMB3 shares, all of the guides that Microsoft worked on with vendors (Cisco, NetApp, and EMC) broke this out.

The only place where I would theorize that it could become a problem is if one host of your four-node cluster was running as the primary coordinator node for your Scale-Out File Server cluster, your main SMB3 CSV path was being served from that host, you also had VMs running on that box, and the box died. In internal tests with separate clusters, we could lose storage systems with no impact, and we could lose Hyper-V hosts with minimal impact (VMs would get stunned when moving to another Hyper-V box in the cluster). However, it may not work the same way if the services were hosted on the same system.

Even to get SMB3 Multichannel and SMB3 continuous availability working properly, you needed multiple VLANs on your systems and NICs tagged the right way in the cluster, and I cannot see how that would function if the box was local.

Admittedly, bootstrapping an ESXi cluster to run vCenter on it without having a VSAN datastore operational is not the most elegant solution either. 🙂

Windows Server 2016 does not install with a GUI by default. In fact, the different scenarios (Storage Spaces Direct cluster, Hyper-V compute cluster, or both combined in a single hyper-converged cluster) are available with the new and very slim Windows Server 2016 Nano Server deployment. The recommendation is to run your management tools remotely, from a separate administration computer.

Thanks for clearing things up a bit. Yes, that’s what I thought: deploy a bunch of Nano Servers with the Hyper-V and Storage Spaces Direct roles to create a hyper-converged system which is then managed remotely.

Ok, now that’s clear. The question now is how many consoles I am going to juggle and how long the setup guide for that will be. Those are the next improvements to make, IMHO.

Another question would perhaps concern licensing: if we suppose that Hyper-V will stay free, how about Storage Spaces Direct?

(Assuming S2D would be available on Hyper-V Core/Free) will dedup then be available?