Software-Defined Storage (SDS) solutions use cache to speed up read and write operations. Usually, part of the cache (L1) is RAM and part (L2) is SSD. Some users may wonder whether to set a write-through or a write-back cache policy, and what the advantages of each are. In today's article, we'll discuss the write-back and write-through cache policies in StarWind's Software-Defined Storage (SDS).

In our case, we'll look at the StarWind VSAN options. StarWind uses RAM as a write buffer and L1 cache to absorb writes, while SSD flash memory is used as an L2 cache. The L1 and L2 caches use the same algorithms (a shared library), so most of these notes apply to both types of cache. There are still some differences in how they work, which we'll cover separately.

When the cache is full, an algorithm must decide which old items to discard in order to make room for new ones. StarWind does this with the Least Recently Used (LRU) algorithm. When new data has to be written and there is no more space in the cache, the least recently accessed blocks are discarded.
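To make the LRU idea concrete, here is a minimal Python sketch. It is a toy model, not StarWind's implementation: the `LRUCache` class, its method names, and the block numbers are all made up for illustration.

```python
from collections import OrderedDict

class LRUCache:
    """Toy LRU cache keyed by disk block number (hypothetical, for illustration)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()  # least recently used first, most recent last

    def read(self, block_no):
        if block_no not in self.blocks:
            return None  # cache miss
        self.blocks.move_to_end(block_no)  # mark as most recently used
        return self.blocks[block_no]

    def write(self, block_no, data):
        if block_no in self.blocks:
            self.blocks.move_to_end(block_no)
        elif len(self.blocks) >= self.capacity:
            self.blocks.popitem(last=False)  # discard the least recently used block
        self.blocks[block_no] = data

cache = LRUCache(capacity=2)
cache.write(1, b"a")
cache.write(2, b"b")
cache.read(1)          # block 1 becomes the most recently used
cache.write(3, b"c")   # cache is full, so block 2 is discarded
remaining = list(cache.blocks)
```

After this sequence the cache holds blocks 1 and 3, because block 2 was the one accessed longest ago.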

Three States of Cache

- Empty – all cache blocks are “empty” at the beginning – the allocated memory holds no data, and cache blocks are not associated with disk blocks. They start being loaded with data during the working process.

- Dirty – the cache block is considered “dirty” if user data was written to it but has not been flushed to disk yet.

- Clean – the data has been flushed to disk, or the cache block was filled as a result of a disk read operation.
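The three states can be sketched as a small state machine. This is only an illustration, assuming write-back style behaviour where a host write lands in the cache first. The event names (`write`, `read_fill`, `flush`) are hypothetical and not StarWind terminology.

```python
from enum import Enum

class BlockState(Enum):
    EMPTY = "empty"
    DIRTY = "dirty"
    CLEAN = "clean"

# Transitions implied by the three definitions above (event names are made up).
TRANSITIONS = {
    (BlockState.EMPTY, "write"): BlockState.DIRTY,      # user data written to cache
    (BlockState.EMPTY, "read_fill"): BlockState.CLEAN,  # block filled by a disk read
    (BlockState.CLEAN, "write"): BlockState.DIRTY,      # cached copy now differs from disk
    (BlockState.DIRTY, "write"): BlockState.DIRTY,      # rewritten before being flushed
    (BlockState.DIRTY, "flush"): BlockState.CLEAN,      # data copied to disk
}

def next_state(state, event):
    """Return the new block state, or raise KeyError for an undefined transition."""
    return TRANSITIONS[(state, event)]

state = BlockState.EMPTY
for event in ["write", "write", "flush"]:
    state = next_state(state, event)
```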

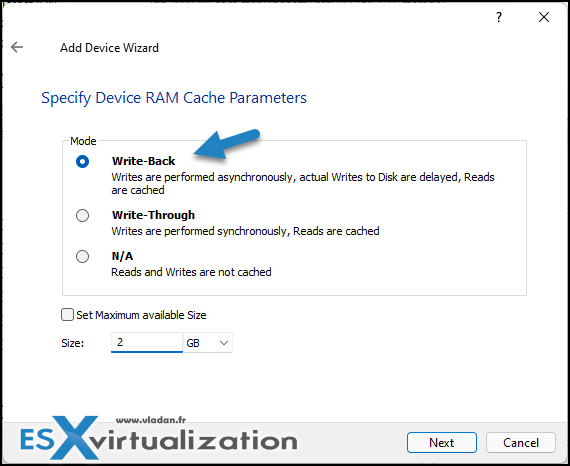

Write-back policy

When this policy is activated, data is written to the cache and the I/O is confirmed back to the host immediately. If the cache contains clean or empty blocks, the write speed is similar to the read/write speed of a RAM drive.

The cache is filled with data mainly during write operations. During read operations, data enters the cache only if the cache contains either empty memory blocks or lines that were allocated for these entries earlier and have not been fully used up yet.

Example from StarWind:

If no writes have been made to a “dirty” cache line for a certain period of time (the current default is 5 seconds), the data is flushed to disk. The block becomes “clean” but still contains a copy of the data.

If all cache blocks are “dirty” during a write operation, the data stored in the oldest blocks is forcibly copied to disk, and the new data is written to those blocks. Therefore, if data is continuously written to different blocks, then once the WB cache memory is exhausted, performance falls to values comparable to the speed of the uncached device: new data can be written only after the old data has been copied to disk.
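The behaviour described above can be modelled in a few lines of Python. This is a simplified sketch, not StarWind code: the `WriteBackCache` class, the `disk` dictionary standing in for the backing store, and the explicit `now` timestamps are all assumptions made to keep the example deterministic.

```python
from collections import OrderedDict

FLUSH_IDLE_SECONDS = 5  # the default idle period mentioned in the article

class WriteBackCache:
    """Toy write-back cache (hypothetical model, for illustration only)."""

    def __init__(self, capacity, disk):
        self.capacity = capacity
        self.disk = disk            # dict standing in for the backing store
        self.lines = OrderedDict()  # block_no -> [data, dirty, last_write_time]
        self.forced_flushes = 0

    def write(self, block_no, data, now):
        if block_no not in self.lines and len(self.lines) >= self.capacity:
            self._evict_oldest()
        self.lines[block_no] = [data, True, now]
        self.lines.move_to_end(block_no)
        # The write is acknowledged here, before the data reaches the disk.

    def _evict_oldest(self):
        old_no, (data, dirty, _) = self.lines.popitem(last=False)
        if dirty:
            self.disk[old_no] = data  # forced flush: the new write waits for this
            self.forced_flushes += 1

    def flush_idle(self, now):
        for block_no, line in self.lines.items():
            data, dirty, last_write = line
            if dirty and now - last_write >= FLUSH_IDLE_SECONDS:
                self.disk[block_no] = data
                line[1] = False  # block is clean, but the copy stays in the cache

disk = {}
cache = WriteBackCache(capacity=2, disk=disk)
cache.write(10, b"x", now=0)
cache.write(11, b"y", now=1)
cache.flush_idle(now=5)        # block 10 was idle for 5 s and is flushed; 11 is still dirty
cache.write(12, b"z", now=6)   # cache full: block 10 is clean, so it is dropped without disk I/O
cache.write(13, b"w", now=6)   # oldest block is now 11, still dirty, so it is force-flushed
```

The last write is the slow case from the paragraph above: it can only proceed after the old dirty data has been copied to disk.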

What happens after a power outage?

If you have a power outage, your small remote office isn't protected by a good UPS, and the server shuts down hard, the data that was stored in “dirty” blocks and had not yet been de-staged to the backing store will be lost. For ImageFile-based HA, this means the device needs a full synchronization once it is turned back on.

When you remove the device or stop the service, all dirty blocks of the WB cache must be flushed to disk. If the cache is large (gigabytes), this process may take some time.

StarWind gives an estimate:

cache flushing time = cache size / RAM write speed.
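As a quick worked example of this estimate, the snippet below simply divides one number by the other. The 8 GiB cache size and the 2 GiB/s write speed are made-up figures for illustration, not StarWind measurements, and `flush_time_seconds` is a hypothetical helper name.

```python
GiB = 1024 ** 3

def flush_time_seconds(cache_size_bytes, write_speed_bytes_per_s):
    """Apply the estimate: flushing time = cache size / write speed."""
    return cache_size_bytes / write_speed_bytes_per_s

# Hypothetical figures: an 8 GiB cache and a write speed of 2 GiB/s.
estimate = flush_time_seconds(8 * GiB, 2 * GiB)
print(f"Estimated flush time: {estimate:.1f} s")
```

With these assumed figures the estimate comes out at 4 seconds. A larger cache at the same write speed scales the time linearly.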

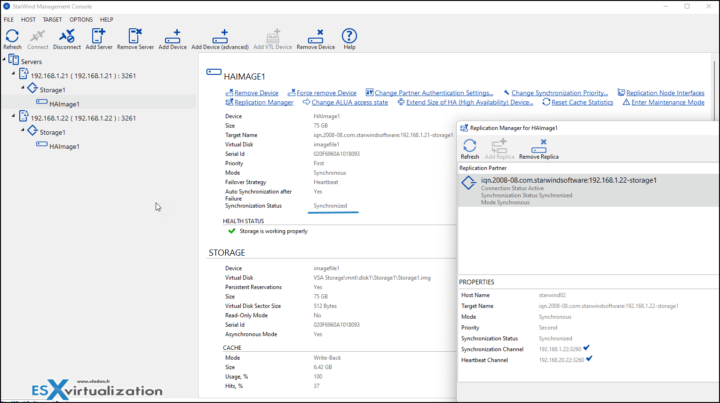

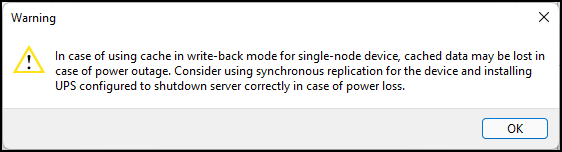

If you plan to use only a single-node device (without a replica), you'll get a warning if you choose the write-back cache option. StarWind makes sure you're aware that you might lose some data if you are not planning to have Highly Available (HA) storage with synchronous replication.

For that, you'll need a minimum of two nodes.

When is it good to use the Write-Back policy?

When you have load fluctuations, for example load spikes followed by normal write activity. In this case, data is gradually copied to the main storage when the workload goes down.

Another example is a continuous rewriting of the same blocks. In this case, the blocks are rewritten only in memory and several writes to the cache correspond to only one disk write.
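The effect of rewriting the same blocks can be shown by counting disk writes. This is a rough sketch that assumes all writes in the stream arrive before the cache is flushed. The function names and the sample stream are made up for illustration.

```python
def disk_writes_write_back(write_stream):
    """Count disk writes if every rewrite is absorbed in cache until one flush."""
    dirty = {}
    for block_no, data in write_stream:
        dirty[block_no] = data  # a later write replaces the earlier one in memory
    return len(dirty)           # one disk write per dirty block at flush time

def disk_writes_write_through(write_stream):
    """Count disk writes if every write goes straight to the backing store."""
    return len(write_stream)

# Block 7 is rewritten three times, block 8 once.
stream = [(7, b"v1"), (7, b"v2"), (7, b"v3"), (8, b"a")]
wb_writes = disk_writes_write_back(stream)
wt_writes = disk_writes_write_through(stream)
```

Here four host writes turn into two disk writes under the write-back model, compared with four when every write goes to disk.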

Remember, the data is copied from the WB cache to the disk only if there are no requests and the dirty blocks have no data written to them for more than 5 seconds (this value is changeable via header file).

StarWind recommended caching policy for RAM cache

The WB cache policy is the one you should use for the L1 cache and is currently blocked for L2 cache (L2 cache is always created in WT mode).

Write-through policy

This policy accelerates only read operations. Data is cached as it is read from disk, so the next time it is requested, it is served from cache memory instead of from the disks of the underlying storage.

The new data is written synchronously both to the cache and to the underlying storage.
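A minimal Python model of the write-through behaviour is shown below. It is a sketch under simplifying assumptions: the cache has no size limit, the `disk` dictionary stands in for the underlying storage, and the `WriteThroughCache` class is hypothetical rather than anything from StarWind.

```python
class WriteThroughCache:
    """Toy write-through cache (hypothetical model, no capacity limit)."""

    def __init__(self, disk):
        self.disk = disk      # dict standing in for the underlying storage
        self.cache = {}
        self.disk_reads = 0

    def read(self, block_no):
        if block_no in self.cache:
            return self.cache[block_no]  # served from cache, no disk access
        self.disk_reads += 1
        data = self.disk[block_no]
        self.cache[block_no] = data      # cached for the next read
        return data

    def write(self, block_no, data):
        # Both copies are updated before the write is acknowledged,
        # so a cached block never differs from the disk (always "clean").
        self.disk[block_no] = data
        self.cache[block_no] = data

disk = {1: b"old"}
wt = WriteThroughCache(disk)
wt.read(1)             # first read goes to disk and fills the cache
wt.read(1)             # second read is a cache hit
wt.write(1, b"new")    # disk and cache are updated together
latest = wt.read(1)    # still a cache hit, returns the new data
```

Only the first read touches the disk. Because there are never dirty blocks, nothing has to be flushed when the device is removed.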

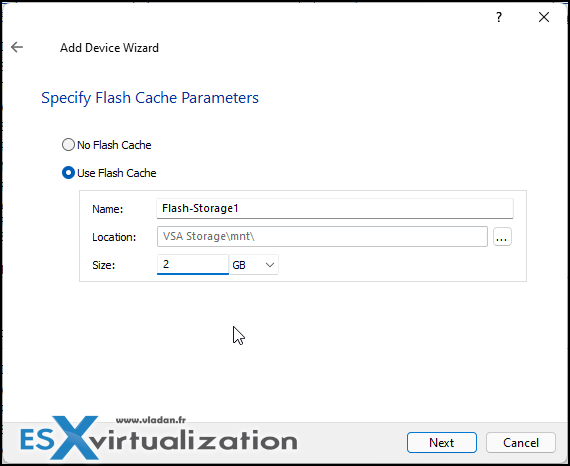

WT mode is the default (and only) mode for the L2 cache on SSD devices. Cached data does not need to be offloaded to the backing store when the device is removed or the service is stopped. That’s why shutdown is faster than with a WB cache of the same size.

In more detail: with the WT policy, the cache blocks are always “clean”, since new data is immediately written to disk.

As you can see, at the Flash Cache step of the new device creation wizard, there is no policy to choose from. The system uses the default (write-through) policy.

StarWind Recommendations

StarWind uses RAM for the L1 cache and flash memory for the L2 cache to speed up the processing of disk requests. StarWind recommends using the L1 RAM cache in write-back mode and the L2 cache in write-through mode in order to achieve high performance and additional protection.

L1 in WB mode can merge small random writes into one large sequential write, and it can also compensate for workload fluctuations. In-memory caching of the working data set is much faster when used with LSFS.

The L2 cache in Write-Through mode accelerates read operations and is very effective when the working data set fully fits within the L2 cache size.
