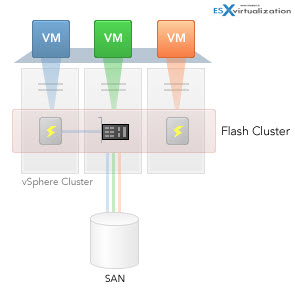

I've been testing PernixData FVP for some time now. FVP dramatically accelerates shared storage used by virtual infrastructures running VMware vSphere, as it offloads writes destined for the SAN to local SSD storage in each host. FVP creates a pooled flash layer that lets writes land on locally attached SSDs before they are sent to the backend storage.

Each host can have one or several flash devices, which do not have to be the same capacity or use the same connection interface. You can mix SATA, SAS or PCIe devices in the cluster.

FVP from PernixData is transparent to vMotion, sVMotion, DRS, HA and other VMware technologies. Nothing has to be changed to integrate PernixData FVP. The end user or the VMware admin doesn't have to reconfigure anything, or even touch the networking configuration, as FVP uses the existing vmkernel interface configured for vMotion traffic for replication purposes.

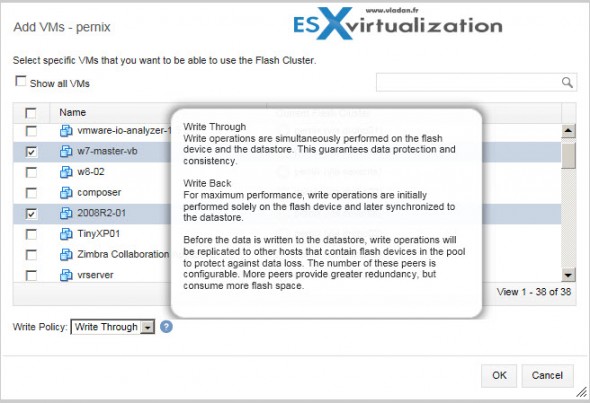

There are basically two working modes, and you can choose to accelerate individual VMs or whole datastore(s). The two possible working modes are:

Write through – write operations go to the SSD and to the back-end SAN device at the same time, which assures redundancy.

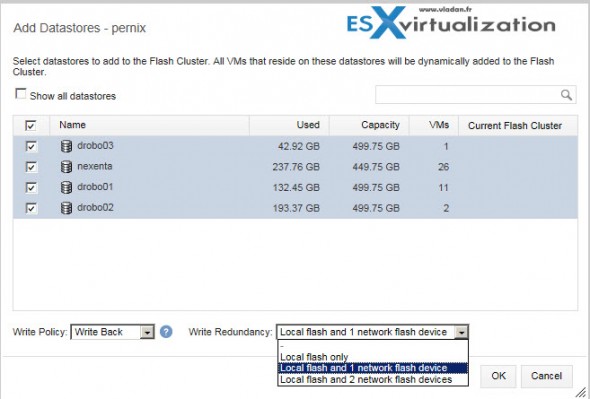

Write back – offers maximum performance, with writes executed directly on the SSD and synchronized to the datastore later. Replication to other peers (hosts) in the cluster assures redundancy in case an SSD fails.
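To make the difference between the two modes concrete, here is a minimal sketch in Python. It is a toy model and not how FVP is implemented: the class names (`Backend`, `FlashCache`), the `flush` method and the use of plain dictionaries for peer flash are all assumptions made for illustration.

```python
class Backend:
    """Stand-in for the shared datastore (SAN). Hypothetical, for illustration."""

    def __init__(self):
        self.blocks = {}

    def write(self, lba, data):
        self.blocks[lba] = data


class FlashCache:
    """Toy model of a host-local flash device sitting in front of a datastore."""

    def __init__(self, backend, policy="write-through", peers=()):
        if policy not in ("write-through", "write-back"):
            raise ValueError(f"unknown policy: {policy}")
        self.backend = backend
        self.policy = policy
        self.peers = list(peers)  # flash on peer hosts, modeled as dicts
        self.flash = {}           # blocks held on the local SSD
        self.dirty = set()        # blocks not yet written to the datastore

    def write(self, lba, data):
        self.flash[lba] = data
        if self.policy == "write-through":
            # The datastore gets the write as part of the same operation.
            self.backend.write(lba, data)
        else:
            # Write back: copy to peers for redundancy, defer the datastore.
            for peer in self.peers:
                peer[lba] = data
            self.dirty.add(lba)

    def flush(self):
        """Synchronize deferred (dirty) blocks to the datastore."""
        for lba in sorted(self.dirty):
            self.backend.write(lba, self.flash[lba])
        self.dirty.clear()


san = Backend()
peer_flash = {}
cache = FlashCache(san, policy="write-back", peers=[peer_flash])
cache.write(7, b"data")   # on local flash and on the peer, not yet on the SAN
cache.flush()             # now the SAN has block 7 as well
```

In write through the datastore is always up to date, so losing an SSD costs nothing but cached data. In write back the only copies of a fresh write are on flash, which is why the peer copy matters.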

The Hardware architecture.

Specially for this article, I set up FVP on 3 physical hosts, each with one SSD flash drive attached locally via SATA II or SATA III.

The flash disks are not the same capacity (2x 128 GB and 1x 256 GB), and all my hosts are built from consumer parts with consumer-grade motherboards. See more on my lab. I also recently upgraded my lab with a Haswell-based whitebox, so I now have 3 physical hosts. For shared storage I have two iSCSI devices: a Drobo Elite and a home-brewed whitebox running Nexenta. That box previously ran hybrid ESXi/Nexenta (details here), but it is now a dedicated storage box with SSD drives only. So here we'll be accelerating Flash on Flash… -:).

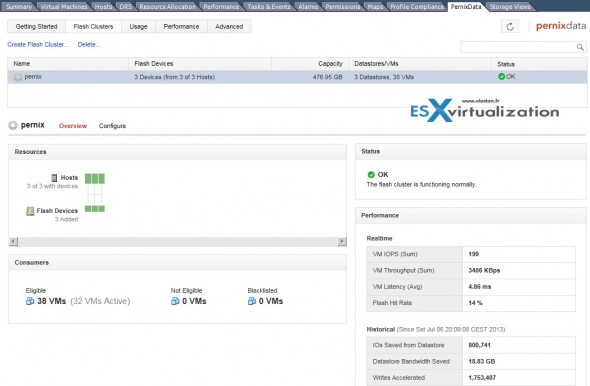

As you can see on this overview screenshot, there is a real-time section and a historical section. Each shows detailed information about how many IOPS have been saved locally, how much datastore bandwidth has been saved, and how many writes have been accelerated in total… You can click to enlarge.

The FVP Setup

I won't go into much detail; I'd rather talk about the individual components that make up FVP, as there are several ways to do the setup. The setup does not disrupt existing services such as HA, DRS or vMotion, and it's not difficult. Once you know that FVP must be configured at the VMware cluster level, not by selecting individual hosts, you're good to go…

The kernel module – it's the core module of FVP, which is installed on each ESXi host. I used SSH, but it's possible to use VUM for large-scale deployments.

The FVP Management Server module – this module needs Windows Server. In my lab I'm running an all-in-one W2008R2 server that runs all vCenter Server components in a single VM. I installed the FVP management server there; SQL Express also sits on that VM and is used by FVP.

FVP Plugin for vSphere Windows client – it's a plugin for the vSphere Windows client. FVP is added as a

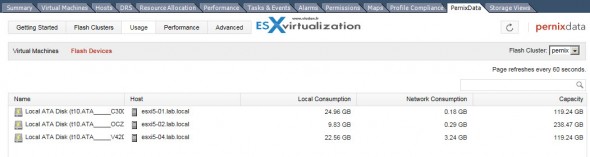

Now back to FVP. As you'll find out later in this article, there is no filesystem on the SSD drives used by FVP; instead FVP uses a proprietary technology optimized for flash. While the cluster is running you can see the capacity used on each flash device. I had the feeling that usage of those SSDs was quite low in the beginning, but over time flash consumption grows and more and more flash capacity is used.

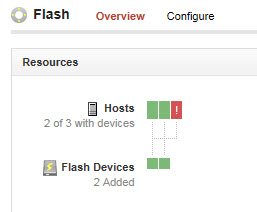

The Overview interface shows how many hosts participate in the Pernix cluster and how many SSD flash devices each one has. Hosts with flash are shown in green, and a host in the cluster without local SSD storage is shown in red. I took a screenshot during my setup to show you that. It's just to show a little bit of the technology behind this nice and clean graphical user interface. Note that I'm not using the final product: the build currently in public beta might differ slightly from what you will find in the final release of FVP.

On the right-hand side you can see real-time performance numbers, and below them historical data showing IOs saved from the datastore, how much bandwidth has been saved and how many writes have been accelerated. I've been running the cluster for only about a day and you can already see the savings.

Next, when you click the Performance tab, you can choose one of four sections, each showing different information:

- VM IOPS (Sum)

- VM Latency (Avg)

- VM Throughput (Sum)

- Hit Rate & Eviction Rate (%)

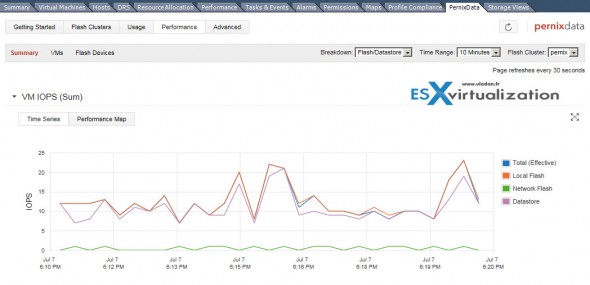
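For readers who have not worked with caches before, the last pair of counters can be illustrated with a small sketch. This is not FVP's algorithm, which is proprietary and not described here. The sketch assumes a plain least-recently-used (LRU) policy and defines the eviction rate as evictions per request; both are assumptions made for the example, and the class name `LRUReadCache` is hypothetical.

```python
from collections import OrderedDict


class LRUReadCache:
    """Tiny LRU cache that tracks hit rate and eviction rate (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity      # number of blocks the flash can hold
        self.blocks = OrderedDict()   # oldest entry first, newest last
        self.hits = 0
        self.misses = 0
        self.evictions = 0

    def read(self, lba):
        if lba in self.blocks:
            # Hit: served from flash; mark the block as most recently used.
            self.blocks.move_to_end(lba)
            self.hits += 1
            return
        # Miss: the block would be fetched from the datastore, then cached.
        self.misses += 1
        self.blocks[lba] = True
        if len(self.blocks) > self.capacity:
            # Flash is full: drop the least recently used block.
            self.blocks.popitem(last=False)
            self.evictions += 1

    def hit_rate(self):
        total = self.hits + self.misses
        return 100.0 * self.hits / total if total else 0.0

    def eviction_rate(self):
        total = self.hits + self.misses
        return 100.0 * self.evictions / total if total else 0.0


cache = LRUReadCache(capacity=2)
for lba in (1, 2, 1, 3, 2):
    cache.read(lba)
print(cache.hit_rate(), cache.eviction_rate())  # 20.0 40.0
```

A high hit rate means most reads never reach the SAN. A high eviction rate suggests the working set does not fit on the flash device.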

Let's take a look at the first one, VM IOPS, with the Summary menu selected.

You can see (each in a different color) the Total writes, local flash, network flash and datastore. The performance graphs can be set to 10 minutes, 1 hour, 1 day, 1 week, 1 month or a custom range.

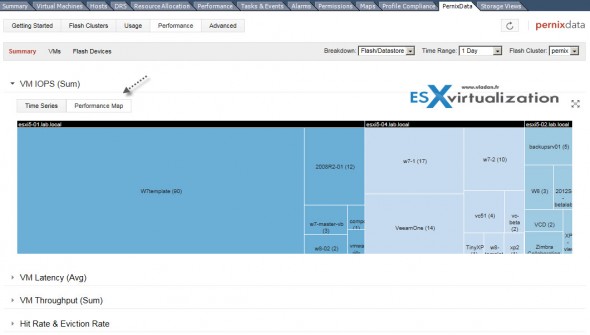

Now when you click the Performance Map, you end up with an interface similar to tools used in virtualization management. Tools like vCOPs from VMware or vOps Server from Dell present dashboards with similar views.

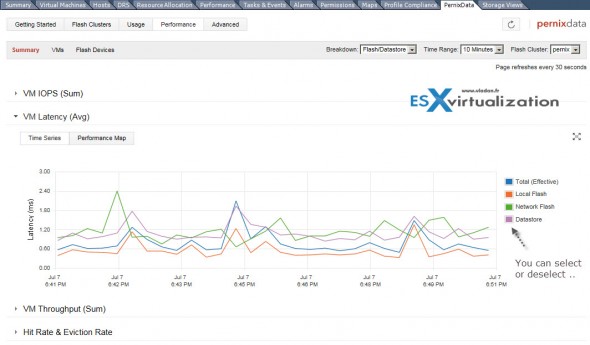

If you choose Latency, you get the same views with different numbers. But you get the picture on how it works….

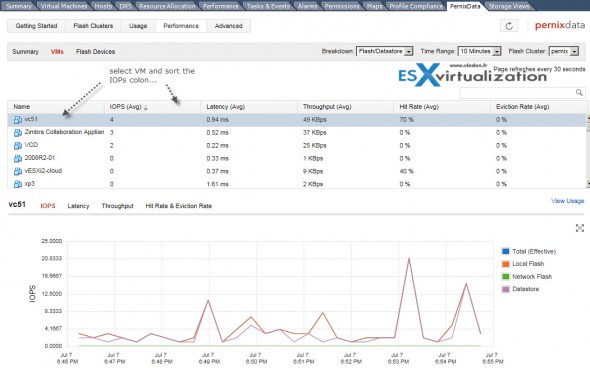

Now if you choose VMs instead of Summary, you can track IOPS, latency or throughput for individual VMs, from the VM perspective. If you choose Flash devices, you get a view of the performance of each SSD drive.

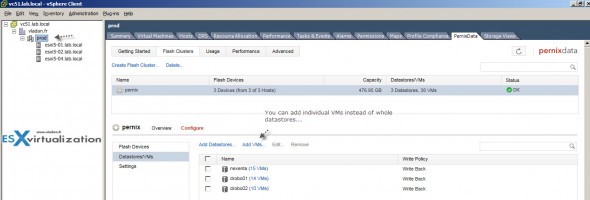

Now, to show you the way I configured my datastores, here is a picture from my lab:

At the cluster level you select the VMware cluster and then the PernixData tab. There you'll find Flash Clusters > Configure > Datastores/VMs… beautiful

Now if you tick the check box next to the datastore, the Edit menu is no longer grayed out, and you can change the write policy from write through to write back (or the other way around). If you choose Add VMs, you can add individual VMs, each of which is accelerated individually rather than at the datastore level.

By selecting an individual host, you can see different information, but you can't change any configuration settings… You can see the write back peers and the VMkernel interface used for replication.

Wrap up.

This article showed some screenshots from my lab, which is running on consumer hardware, so I did not do any performance tests. But you get the picture of how the solution works and what its capabilities are. The kernel-module-based solution offers the fastest path to the data, since the core service “sits” at the hypervisor level. Access to the flash device is always local, and not through another layer or chain of other devices (HBAs, switches, storage adapters…).

PernixData is a new company, but the people who work there have some serious experience in the matter. They were the ones who created the VMware VMFS file system. Performance matters everywhere, so accelerating workloads with this new technology by using local flash will be interesting for any VMware admin or customer with shared storage in a VMware environment.

Update: Another screenshot from my lab, showing the redundancy options for writes. A three-host lab allows two peers for replication.
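The arithmetic behind that limit is simple: a host cannot be its own peer, so a cluster of N hosts offers at most N - 1 peers per host. The sketch below shows it in Python. The function name, the round-robin choice and the host names are hypothetical; how FVP actually picks peers is not covered in this article.

```python
def replication_peers(hosts, replicas):
    """Assign `replicas` peers to every host, round-robin, never the host itself."""
    n = len(hosts)
    if not 0 <= replicas <= n - 1:
        raise ValueError(f"{n} hosts allow at most {max(n - 1, 0)} peers per host")
    return {
        host: [hosts[(i + k) % n] for k in range(1, replicas + 1)]
        for i, host in enumerate(hosts)
    }


# Hypothetical host names for a three-host lab.
lab = ["esxi1", "esxi2", "esxi3"]
print(replication_peers(lab, 2))
# {'esxi1': ['esxi2', 'esxi3'], 'esxi2': ['esxi3', 'esxi1'], 'esxi3': ['esxi1', 'esxi2']}
```

Asking for three peers in this lab raises a `ValueError`, since only two other hosts exist.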

Hi Vladan,

1st of all big thanks to ur ongoing blogging, like what ur doin’ here.

so while FVP is still in beta questions raised @ me :

is a GA in sight and/or is there information about pricing / cost model so far?

Will there be a vSphere Web Client integration due to VMwares outlined strategy on vSphere client dev?

Thanks so far

Matt

Matt,

Perhaps better ask them directly, as I do not work for PernixData. I have no idea. -:)