I first touched this amazing (and cheap) network technology called Infiniband a while ago, when I set up a back-end storage network (without an IB switch) between two hosts. You can read about that exploit, together with a short video, in my article Homelab Storage Network Speedup with …. Infiniband. The HCAs I'm using have two 10Gb ports each. Can you imagine 10 Gigs of traffic in a homelab? Quite exciting. Hence the title of this post: Infiniband in the homelab.
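For context on the "10Gb" figure: InfiniBand quotes the signalling rate of a 4X link (four lanes at 2.5 Gb/s for SDR), and SDR and DDR links use 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. The small shell helper below is a hypothetical sketch (the name `ib_rates` is made up for illustration) that does this arithmetic:

```shell
#!/bin/sh
# Hypothetical helper: usable data rate of an InfiniBand link that uses
# 8b/10b encoding (SDR, DDR, QDR). Rates are kept in Mb/s so that
# integer shell arithmetic stays exact.
ib_rates() {
  lanes=$1        # 4 for the usual "4X" ports
  lane_mbps=$2    # per-lane signalling rate: 2500 (SDR), 5000 (DDR)
  signal=$((lanes * lane_mbps))
  data=$((signal * 8 / 10))   # 8b/10b: 8 data bits per 10 line bits
  echo "signalling ${signal} Mb/s, data ${data} Mb/s"
}

ib_rates 4 2500   # 4X SDR
ib_rates 4 5000   # 4X DDR
```

For 4X SDR it prints 10000 Mb/s of signalling and 8000 Mb/s of data; for 4X DDR, 20000 and 16000 Mb/s.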

Great, but without a switch it doesn't really scale. A two-host cluster is fine to start with, but who wouldn't want three hosts today, to play with VSAN for example? That's how the idea of a cheap Infiniband switch was born. Fellow blogger Erik Bussink gave me some tips on which switch to look for, and I went with a Topspin 120 Infiniband switch. (Note that this switch is also referenced as the Cisco SFS 7000, since Cisco bought Topspin a while back.)

It's a 24-port switch, which looks like this.

Unfortunately, my attempt to replace the fans with quieter ones failed (read the article here). I think I need real 3-pin 40×40 mm quiet fans. The ones I got had 2 pins plus an adapter to 3 pins, which doesn't work: the switch closely monitors fan speed, and a 2-wire fan has no tachometer wire to report its speed back. You need 3 wires, that's it.

With Infiniband, what matters most is the Subnet Manager: the fabric needs one running somewhere before the ports become active. Some switches (depending on firmware level) have it built in, while others don't. My switch came with firmware 2.3, but Erik was kind enough to provide me with an ISO of the 2.9 firmware release, which includes the Subnet Manager functionality.

Update: (Link to download the 2.9 release here).

Update 2: The switch finally received new Noctua fans (Noctua NF-A4x10), which do have 3 wires. In my particular case, though, I had to change the order of those wires.

For the upgrade you need a console cable and a TFTP server installed on your management workstation (I used my laptop). Since my laptop has no serial port, I had to get one of those FTDI USB to serial (RS232) console rollover cables for Cisco routers, with an RJ45 connector. (Do any laptops still have one???)

The firmware upgrade brings the Subnet Manager, so on the vSphere side you don't need the OpenSM VIB provided by @hypervisor_fr. If your switch can't run the “hardware subnet manager”, the VIB is your only option. You can find details on how to install the VIB in my post Homelab Storage Network Speedup….

So what's the network setup for the vSphere Storage?

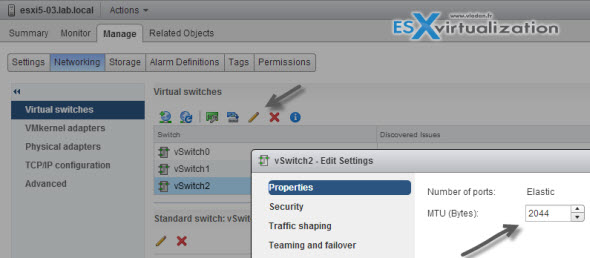

I created a standard switch and set the MTU to 2044, because that's the maximum supported with a 2048-byte InfiniBand MTU (2048 minus the 4-byte IPoIB header). If your switch supports a 4096-byte IB MTU, just go for 4092. But make sure that you set the MTU at the vSwitch level AND at the port group level. At first, I forgot to set the MTU at the vSwitch level and only changed it on the port group. vMotion did not work, obviously… But once everything was in place, it worked like a charm…
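The MTU has to be raised in both places. From the command line that could look like the sketch below, which assumes the IPoIB uplink sits on a standard vSwitch named `vSwitch1` with the VMkernel interface `vmk1` (both hypothetical names; check yours with `esxcli network vswitch standard list` and `esxcli network ip interface list`). By default it only prints the commands; run it with `DRY_RUN=0` on the host to apply them.

```shell
#!/bin/sh
# Sketch: set the IPoIB MTU on the vSwitch AND on the VMkernel interface.
# vSwitch1 / vmk1 are hypothetical names. DRY_RUN=1 (default) only prints.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

set_ib_mtu() {
  vswitch=$1      # standard vSwitch carrying the IB uplink
  vmk=$2          # VMkernel interface on that vSwitch (vMotion)
  mtu=${3:-2044}  # 2048-byte IB MTU minus the 4-byte IPoIB header
  # 1) vSwitch level
  run esxcli network vswitch standard set -v "$vswitch" -m "$mtu"
  # 2) VMkernel interface (the "port group" side in the vSphere Client)
  run esxcli network ip interface set -i "$vmk" -m "$mtu"
}

set_ib_mtu vSwitch1 vmk1 2044
```

To check end to end, `vmkping -d -s 2016 <peer-ip>` should get through with the don't-fragment bit set: 2016 is 2044 minus 28 bytes of IPv4 and ICMP headers.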

I followed Erik's post on installing the Mellanox drivers in vSphere 5.5, except that I didn't use the OpenSM VIB. (I tried the newer 1.9.9 version of the Mellanox drivers, but then the HCA shows up with only a single port in the vSphere UI.)

Note that you must first uninstall the original Mellanox drivers, the ones “baked into” the original vSphere 5.5 ISO. Just follow Erik's post on that. Here are the most important commands from Erik's post that I used:

- `unzip mlx4_en-mlnx-1.6.1.2-471530.zip`

- `esxcli software acceptance set --level=CommunitySupported`

- `esxcli software vib install -d /tmp/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip --no-sig-check`

- `esxcli software vib install -d /tmp/MLNX-OFED-ESX-1.8.2.0.zip --no-sig-check`
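Together with the removal of the inbox drivers mentioned above, the whole sequence could be scripted roughly like this. It's a sketch, not Erik's script: it assumes both zip files were uploaded to `/tmp`, and the inbox VIB names `net-mlx4-en` and `net-mlx4-core` should be double-checked with `esxcli software vib list` first. It only prints the commands unless run with `DRY_RUN=0`, and the host needs a reboot afterwards.

```shell
#!/bin/sh
# Sketch of the ESXi 5.5 driver swap: remove the inbox Mellanox drivers,
# lower the acceptance level, then install the 1.6.1.2 Ethernet bundle
# and the OFED 1.8.2.0 bundle. Dry run by default.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@" || exit 1   # stop at the first failing step
  fi
}

install_mlnx_ib() {
  dir=${1:-/tmp}   # where the zip files were uploaded (assumption)
  # 1) Inbox drivers from the original 5.5 ISO must go first
  run esxcli software vib remove -n net-mlx4-en -n net-mlx4-core
  # 2) Extract the offline bundle from the downloaded zip
  run unzip -o "$dir/mlx4_en-mlnx-1.6.1.2-471530.zip" -d "$dir"
  # 3) Lower the host acceptance level so these VIBs are accepted
  run esxcli software acceptance set --level=CommunitySupported
  # 4) Install both bundles (esxcli expects absolute paths for -d)
  run esxcli software vib install -d "$dir/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip" --no-sig-check
  run esxcli software vib install -d "$dir/MLNX-OFED-ESX-1.8.2.0.zip" --no-sig-check
  # Reboot the host afterwards so the new modules are loaded.
}

install_mlnx_ib /tmp
```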

For the moment each HCA has only a single IB cable plugged in, as I'm waiting for more IB cables. Meanwhile I can enjoy high-speed vMotion traffic over the 10Gb HCA ports. The plan is to use one port for vMotion traffic and the second port for VSAN traffic. As for my VSAN journey, I'm waiting for customs to finally release my package with more hardware parts, so I can beef up my existing hosts with SSDs and spinning disks.
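Splitting the traffic that way means one VMkernel interface per HCA port. A hedged sketch for ESXi 5.5, assuming `vmk1` sits on the port group for the first IB port and `vmk2` on the one for the second (hypothetical names): it enables vMotion on `vmk1` with `vim-cmd hostsvc/vmotion/vnic_set` and registers `vmk2` for VSAN with `esxcli vsan network ipv4 add`. Again, it only prints the commands unless run with `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: vMotion on one IB port, VSAN on the other (ESXi 5.5 syntax).
# vmk1/vmk2 are hypothetical; each should live on a port group whose
# active uplink is a different HCA port.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@" || exit 1
  fi
}

split_ib_traffic() {
  vmotion_vmk=$1
  vsan_vmk=$2
  run vim-cmd hostsvc/vmotion/vnic_set "$vmotion_vmk"   # enable vMotion
  run esxcli vsan network ipv4 add -i "$vsan_vmk"       # use for VSAN traffic
}

split_ib_traffic vmk1 vmk2
```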

The plan is to get VSAN running, and I have already received the disk controller cards: Dell PERC H310 (entry-level cards that are on the VMware HCL; you can get one for roughly $90 on eBay). They're truly not the most performant cards, and for VSAN especially you would probably choose one with a bigger queue depth, but they'll do the job for a lab that just got an upgrade.

When I replace the older boxes, I'll probably go for something beefier in terms of RAM and CPU, including an IO card that is on the VSAN HCL. Possibly Supermicro, like Erik Bussink, who knows… Stay tuned via RSS.

VSAN in the Homelab – the whole series:

- My VSAN Journey – Part 1 – The homebrew “node”

- My VSAN journey – Part 2 – How-to delete partitions to prepare disks for VSAN if the disks aren’t clean

- Memory Channel per Bank getting non active when using PERC H310 Controller card – Fixed!

- Infiniband in the homelab – the missing piece for VMware VSAN – (This post)

- Cisco Topspin 120 – Homelab Infiniband silence

- My VSAN Journey Part 3 – VSAN IO cards – search the VMware HCL

- My VSAN journey – all done!

- How-to Flash Dell Perc H310 with IT Firmware To Change Queue Depth from 25 to 600

Love the write-up. For now I have two hosts that connect to a storage server in a 3-way ring in my home lab. This is obviously not the best way, but since I just got an SFS-7000P off eBay for a good price, I am going to change the setup to bridged links to the switch.

My problem is that the switch came with old firmware (2.4). Could you send me the 2.9 firmware?

So far I really prefer Infiniband over 10Gbps Ethernet and Fibre Channel. Fast, easy, cheap, and the guys at Mellanox have been very helpful in supporting problems with HCA firmware (my HCAs had OEM firmware that did not like each other).

I hope the industry realizes how important this technology really is and how it can help the bottleneck issue that seems to be looming over all networks.

Thanks

Sure, no problem. Thanks for your comment Doug.

I got the Topspin 120. I think it's just the same model rebranded by Cisco when they bought the Topspin company, a while back. I'll e-mail you a link for the download.

Thanks to your blogposts I started creating my own Infiniband setup. At the moment I am waiting for the delivery of the Topspin 120 I bought.

Can you send me a link to the 2.9 firmware file?

Keep on writing these nice blogposts about Infiniband. They are very much appreciated!

Sure,

just send me an email to contact (at) vladan.fr and I’ll send you back the link to download the ISO.

Welcome to the club…-:)

Hi

Thanks a lot for the great article.

A few questions:

1) Can you please send me a link to the 2.9 firmware file?

2) What firmware version and hardware revision are your Infiniband cards?

I purchased the same HP cards and updated the firmware to the latest Mellanox version, 2.9.1000 (the latest HP-branded version is 2.8), but VMware crashes after the driver installation with a “lint1/nmi motherboard interrupt” error. I installed Windows 2008 R2 x64 on the same servers (IBM x3550 M2) with the latest Mellanox Windows driver and they work just great: a 20 (16) Gb/s link when connected directly without a switch, and 10 (8) Gb/s through the switch. Have you seen a problem like this?

3) Can you provide a screenshot of your subnet manager configuration?

Thanks a lot again 😎

E.

Hi, the post has been updated and I've sent you an e-mail with further details.

By the way, here is another link for the Topspin 120 image: http://h20565.www2.hp.com/portal/site/hpsc/template.PAGE/public/psi/swdDetails/?spf_p.tpst=swdMain&spf_p.prp_swdMain=wsrp-navigationalState%3Didx%253D1%257CswItem%253DMTX_f4db3782873e4066b7777d0be5%257CswEnvOID%253D%257CitemLocale%253D%257CswLang%253D%257Cmode%253D4%257Caction%253DdriverDocument&javax.portlet.begCacheTok=com.vignette.cachetoken&javax.portlet.endCacheTok=com.vignette.cachetoken

This would save you some dropbox bandwidth 🙂

By the way, any ideas where to find documentation for the Topspin 120? Mine boots into the recovery image and I can't find any way to make it work as it should.

Thanks in advance.

Thanks for sharing the other link.

The whole documentation is in the ISO I’m sharing through Dropbox (20 PDFs).

What is the recommended approach to have this running on ESXi 6?

I get:

esxcli software vib remove -n=net-mlx4-en -n=net-mlx4-core

[DependencyError]

VIB Mellanox_bootbank_scsi-ib-srp_1.8.2.4-1OEM.500.0.0.472560 requires com.mellanox.mlx4_core-9.2.0.0, but the requirement cannot be satisfied within the ImageProfile.

VIB Mellanox_bootbank_net-mlx4-ib_1.8.2.4-1OEM.500.0.0.472560 requires com.mellanox.mlx4_core-9.2.0.0, but the requirement cannot be satisfied within the ImageProfile.

VIB Mellanox_bootbank_net-ib-ipoib_1.8.2.4-1OEM.500.0.0.472560 requires com.mellanox.mlx4_core-9.2.0.0, but the requirement cannot be satisfied within the ImageProfile.

Please refer to the log file for more details
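The DependencyError above happens because the OFED VIBs (`scsi-ib-srp`, `net-mlx4-ib`, `net-ib-ipoib`) still require `net-mlx4-core`, so it can only be removed in the same transaction as its dependents. Below is a sketch that builds such a single remove command from `esxcli software vib list` output. The function name is made up, and the VIB name prefixes it matches are an assumption based on the names in the error, so review the printed command before running it.

```shell
#!/bin/sh
# Sketch: print ONE `esxcli software vib remove` covering net-mlx4-core and
# the Mellanox OFED VIBs that depend on it, to avoid the DependencyError.
# Input: the output of `esxcli software vib list` on stdin.
# The name prefixes below are an assumption; review the result before use.
build_mlnx_remove_cmd() {
  names=$(awk '$1 ~ /^(net-mlx4-|net-ib-|net-rdma-|scsi-ib-)/ { print $1 }')
  [ -n "$names" ] || return 1      # nothing Mellanox-related installed
  cmd="esxcli software vib remove"
  for n in $names; do              # one -n per VIB, same transaction
    cmd="$cmd -n $n"
  done
  echo "$cmd"
}

# On the host:
#   esxcli software vib list | build_mlnx_remove_cmd
```

Appending `--dry-run` to the printed command lets esxcli report what it would remove without changing anything.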

Info about ESXi 6 and vSphere 6:

https://community.mellanox.com/thread/2084

“In the meantime I took one of the servers out of production and installed a clean version of ESX 6.0.

If you would like to use the infiniband driver package then remove the inbox ethernet drivers with

# esxcli software vib remove -n=nmlx4-core -n=nmlx4-en -n=nmlx4-rdma

and install the last driver package for 5.*

# esxcli software vib install -d /MLNX-OFED-ESX-1.8.2.4-10EM-500.0.0.472560.zip --no-sig-check

“

Wouldn’t it be cheaper and easier to just daisy-chain some Mellanox dual-port SFP+ fiber cards?

It’s an old post. Possibly now…

What are people doing for storage on ESXi 6.5? My research seems to indicate the 1.8.x.x drivers are not working on it and therefore no SRP. Is this correct?

These cards will not work with ESXi 6.5 or above. I have them working with 6.0 U3, but every attempt to install them on 6.5 has been unsuccessful. Does anyone know of a good replacement for 6.5? Preferably inexpensive 🙂

Hello Vladan,

I’ve followed all the commands as you and Erik Bussink indicate, and all is OK until I copy the partitions.conf: in my scratch directory there is no opensm directory. So I ran ibstat mlx4_0, and the result is link down. I used the node GUID as a reference to create a directory in which to put ib-opensm. When I check my network adapters I no longer see my ConnectX-2 card, but when I look in the storage section I see a reference to my ConnectX-2.

What did I do wrong?

Thanks for your support in advance

Great article, thanks! But will the OpenSM / OFED VIB compiled by the talented people at http://www.hypervisor.fr/?p=4662 work when installed on an ESXi 6.5 host?