When I first built my homelab a few years ago, I never thought that one day I'd be using anything other than 1Gig NICs for the storage network. I always thought that FC or 10Gig Ethernet would stay out of reach for most individuals and many SMBs for years to come. But I was wrong. The storage network does not have to be the bottleneck…

Update – The whole series:

My VSAN Journey – Part 1 – The homebrew “node”

My VSAN journey – Part 2 – How-to delete partitions to prepare disks for VSAN if the disks aren’t clean

Memory Channel per Bank getting non active when using PERC H310 Controller card – Fixed!

Cisco Topspin 120 – Homelab Infiniband silence

My VSAN Journey Part 3 – VSAN IO cards – search the VMware HCL

My VSAN journey – all done!

How-to Flash Dell Perc H310 Storage controller card with IT Firmware to obtain queue depth 600

Putting a host into maintenance mode and evacuating its VMs elsewhere takes seconds, not minutes. Until a few weeks ago I had largely ignored InfiniBand, or at least I hadn't considered it a cost-viable alternative to 1Gig Ethernet. Then I stumbled on an article by Raphael Schitz, a fellow blogger at Hypervisor.fr, who pulled off exactly this feat with InfiniBand.

He (with the help of a few other folks) compiled a VIB package that can be installed on an ESXi host. It's unsupported by VMware, as is the switchless triangle topology (two ESXi hosts + one Nexenta storage box). But hey, it's a home lab, it's cheap (eBay) and it's fast. For a supported configuration you would have to add an InfiniBand switch, and those are still costly. There might be cheaper alternatives on eBay too, but for the moment I went with the simplest and cheapest option: the triangle topology.

As you'll see further down in the post, these Mellanox InfiniBand NICs show up in the vSphere client as 20Gb NICs. A driver from the Mellanox website must be installed on the ESXi host.

But InfiniBand isn't that simple. You can't just connect two cards together and expect them to pass traffic. A critical component called the Subnet Manager (SM) must run on each subnet. That's no problem if you want to connect Linux or Windows boxes, but what about ESXi? For the link between the two ESXi hosts, an SM must be running on each of them. That's where Raphael did a great job: he compiled a VIB package that can be installed on an ESXi host. On the VMware site there is a driver that must be installed so that ESXi recognizes the NICs, and there is another driver on the Mellanox website that must be installed as well. This setup certainly has its limits. But hey, do you want to vMotion at a speed that many SMBs can only dream of? Used parts have brought this technology down to a price level almost equivalent to a 1Gig network.

The (simple) Storage Architecture:

A simple triangle architecture: one port on each ESXi host connects the two hosts back-to-back, and the second port connects each host to the NexentaStor box. It is possible to have 3 hosts in the cluster connected to the storage, but for that you'll need more cards and 2 PCIe slots in each box. In my case I stuck with 2 hosts + storage, as my storage box uses a micro ATX board with a single PCIe slot.
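The triangle is a switchless full mesh, and the arithmetic behind the 3-host remark fits in a few lines of shell. This is a minimal sketch that assumes dual-port HCAs like the ones in the parts list; the function names are hypothetical. Each cable forms its own point-to-point InfiniBand subnet, which is why a subnet manager has to run on one of the two ends of every link.

```shell
#!/bin/sh
# Switchless full mesh: with n nodes (ESXi hosts + storage box),
# every node needs a direct cable to every other node.
# Assumes dual-port HCAs such as the HP 452372-001.

mesh_links() { echo $(( $1 * ($1 - 1) / 2 )); }  # cables needed in total
ports_per_node() { echo $(( $1 - 1 )); }         # IB ports needed per node

cards_per_node() {                               # dual-port cards per node
  p=$(ports_per_node "$1")
  echo $(( (p + 1) / 2 ))                        # round up to whole cards
}

for n in 3 4; do
  echo "$n nodes: $(mesh_links "$n") links, $(ports_per_node "$n") ports and $(cards_per_node "$n") dual-port card(s) per node"
done
```

For the triangle (3 nodes) this gives 3 links and one dual-port card per node. Adding a third host (4 nodes) gives 6 links and 3 ports per node, so each box needs 2 cards and therefore 2 PCIe slots.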

List of parts used:

- HP 452372-001 Infiniband PCI-E 4X DDR Dual Port Storage Host Channel Adapter HCA (3 pieces)

- MOLEX 74526-1003 0.5 mm Pitch LaneLink 10Gbase-CX4 Cable Assembly 2m (1m) (6 pieces)

Total (approximate) Cost: €187 ($243)

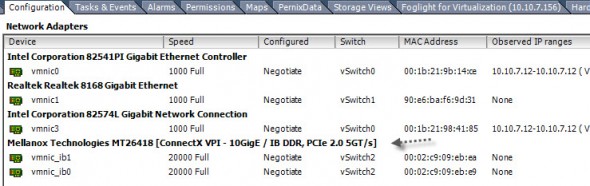

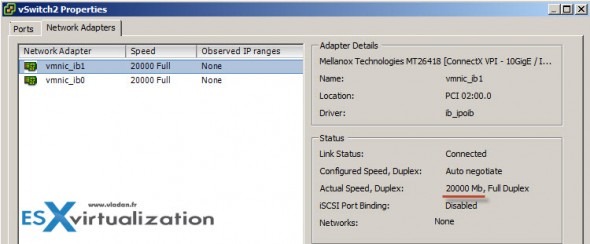

Each ESXi host has a dual-port InfiniBand card, and the third card is installed in my Nexenta box. The screenshot below shows the HP 452372-001 Infiniband PCI-E 4X DDR Dual Port Storage Host Channel Adapter HCA, which appears in the vSphere client as a 20Gb NIC.

The Installation Steps:


- Download the Mellanox driver (file mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip) from VMware: VMware ESXi 5.0 Driver for Mellanox ConnectX Ethernet Adapters

- Download the Mellanox OFED driver (MLNX-OFED ESXi 5.X Driver) from the Mellanox website here (file MLNX-OFED-ESX-1.8.1.0.zip).

- Download the OpenSM VIB from Raphael's blog post here (file ib-opensm-3.3.15.x86_64.vib).

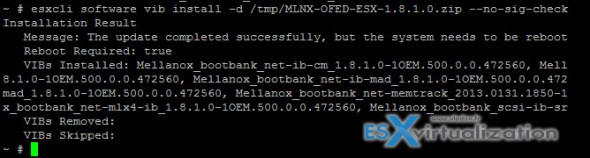

- Put your host into maintenance mode and upload the files to the /tmp folder on your ESXi host (via WinSCP, for example). Then connect via SSH (with PuTTY, for example) and install the first file with this command:

esxcli software vib install -d /tmp/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip --no-sig-check

- Do the same for the second file (MLNX-OFED-ESX-1.8.1.0.zip)…

- Then install the third file, ib-opensm-3.3.15.x86_64.vib, with this command. It uses -v instead of -d because it is a single VIB rather than a zip bundle:

esxcli software vib install -v /tmp/ib-opensm-3.3.15.x86_64.vib --no-sig-check
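The three installs above can be wrapped in a small script that you run over SSH once the files are in /tmp. This is a sketch, not a tested procedure. PKG_DIR, DRY_RUN and the function name are hypothetical additions, and the script assumes the OFED zip is an offline bundle installed with -d like the first file. Also note that `esxcli system maintenanceMode set` does not migrate running VMs by itself, so evacuate the host first (for example from vCenter).

```shell
#!/bin/sh
# Hypothetical wrapper for the install steps above.
# PKG_DIR: where the files were uploaded (default /tmp).
# DRY_RUN=1: print the commands instead of executing them.
PKG_DIR=${PKG_DIR:-/tmp}

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

install_ib_stack() {
  # Only sets the flag; running VMs must already be evacuated.
  run esxcli system maintenanceMode set --enable true || return 1
  # Offline bundles (.zip) go through -d (depot) with an absolute path.
  run esxcli software vib install -d "$PKG_DIR/mlx4_en-mlnx-1.6.1.2-offline_bundle-471530.zip" --no-sig-check || return 1
  # Assumption: the OFED zip is also an offline bundle ("do the same").
  run esxcli software vib install -d "$PKG_DIR/MLNX-OFED-ESX-1.8.1.0.zip" --no-sig-check || return 1
  # A single .vib file goes through -v (VIB URL/path).
  run esxcli software vib install -v "$PKG_DIR/ib-opensm-3.3.15.x86_64.vib" --no-sig-check || return 1
  echo "Reboot the host to load the new drivers."
}
```

Source the file and call `DRY_RUN=1 install_ib_stack` first to review the exact commands. Then run `install_ib_stack` for real and reboot.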

After you reboot your ESXi host, you should see the NICs as in the screenshot below. If one of the links is down, you probably haven't installed OpenSM yet.
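A quick post-reboot check along those lines might look like the sketch below. The helper names are hypothetical. Matching the substring "opensm" in the output of `esxcli software vib list` is an assumption, since the installed VIB name may differ from the file name.

```shell
#!/bin/sh
# has_opensm: reads "esxcli software vib list" output on stdin and
# succeeds if any line mentions opensm (name match is an assumption).
has_opensm() {
  grep -qi 'opensm'
}

check_host() {
  if esxcli software vib list | has_opensm; then
    echo "OpenSM VIB installed"
  else
    echo "OpenSM VIB missing: install ib-opensm-3.3.15.x86_64.vib" >&2
    return 1
  fi
  # Shows every uplink with its link state; the IB ports should be up.
  esxcli network nic list
}
```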

ESXi configuration:

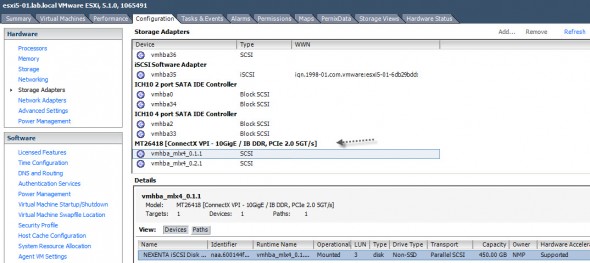

First check that you can see the new storage adapter. Go to Configuration > Storage Adapters. You should see the Mellanox storage adapter there.

See the NICs

I simply created a new vSwitch, attached both of the NIC's ports to it and created a port group for storage traffic. Then I picked the port to be used for storage traffic and set the other one as unused. Next I created another port group for vMotion and did the opposite (same for FT). The MTU has to be changed to 4092 at the port group level. The partitions.conf file (from Hypervisor.fr) defines the InfiniBand partition and its MTU, and it goes into /scratch/opensm/{LID}/. This is described in the Mellanox OFED driver manual, so check there for the details.
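The same vSwitch layout, MTU and partitions.conf can also be set from the ESXi shell. This sketch rests on several assumptions:

- The vSwitch, port group and vmk names and the IP address are hypothetical.
- The IPoIB uplinks are assumed to be called vmnic_ib0 and vmnic_ib1. Check `esxcli network nic list` for the real names.
- The partition line uses common OpenSM syntax and may differ from the file linked from Hypervisor.fr.

The 4092 value follows from the 4-byte IPoIB header carried inside a 4096-byte InfiniBand MTU. The same arithmetic gives 2044 for a 2048-byte IB MTU.

```shell
#!/bin/sh
# Hypothetical names; DRY_RUN=1 prints the esxcli calls instead of running them.
VSWITCH=${VSWITCH:-vSwitchIB}
UPLINK_STORAGE=${UPLINK_STORAGE:-vmnic_ib0}
UPLINK_VMOTION=${UPLINK_VMOTION:-vmnic_ib1}

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# IPoIB (datagram mode) puts a 4-byte header inside each IB packet,
# so the usable IP MTU is the IB link MTU minus 4.
ipoib_mtu() { echo $(( $1 - 4 )); }

# Writes <dir>/partitions.conf. In OpenSM, mtu=5 encodes a 4096-byte
# IB MTU (4 would be 2048). Pass the real /scratch/opensm/... directory.
write_partitions_conf() {
  mkdir -p "$1" || return 1
  echo 'Default=0x7fff,ipoib,mtu=5:ALL=full;' > "$1/partitions.conf"
}

setup_network() {
  mtu=$(ipoib_mtu 4096)
  run esxcli network vswitch standard add -v "$VSWITCH" || return 1
  run esxcli network vswitch standard set -v "$VSWITCH" -m "$mtu" || return 1
  for nic in "$UPLINK_STORAGE" "$UPLINK_VMOTION"; do
    run esxcli network vswitch standard uplink add -v "$VSWITCH" -u "$nic" || return 1
  done
  # One port group per traffic type. Only the listed uplink is active;
  # uplinks left off the active/standby lists should end up unused.
  run esxcli network vswitch standard portgroup add -v "$VSWITCH" -p IB-Storage || return 1
  run esxcli network vswitch standard portgroup policy failover set -p IB-Storage -a "$UPLINK_STORAGE" || return 1
  run esxcli network vswitch standard portgroup add -v "$VSWITCH" -p IB-vMotion || return 1
  run esxcli network vswitch standard portgroup policy failover set -p IB-vMotion -a "$UPLINK_VMOTION" || return 1
  # VMkernel port for storage with the larger MTU and a static IP.
  run esxcli network ip interface add -i vmk1 -p IB-Storage -m "$mtu" || return 1
  run esxcli network ip interface ipv4 set -i vmk1 -I 10.10.10.1 -N 255.255.255.0 -t static
}
```

You would call `write_partitions_conf` with the real /scratch/opensm/{LID} directory, then `DRY_RUN=1 setup_network` to review the calls. The vMotion (and FT) VMkernel ports would be created the same way on the IB-vMotion port group.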

Details about the technology from the Mellanox driver page:

- LAN and SAN functionality is available over unified server I/O using high bandwidth InfiniBand connectivity. This reduces cabling complexity and reduces I/O cost (50 – 60%) and power (25-30%) significantly

- LAN performance (from VMs) of close to 8X Gigabit Ethernet has been achieved

- LAN and SAN performance scales linearly across multiple VMs

- Ability to expose multiple network and storage adapters over a single physical InfiniBand port allowing the same ‘look and feel’ of traditional storage and network devices

- Completely transparent to VMs as applications in VMs continue to work over legacy NIC and HBA interfaces that have been already qualified on

- All VMware functionality including migration, high availability and others are available over the InfiniBand fabric

- All VMware ESXi Server supported guest operating systems are supported

- Management and configuration using VMware VirtualCenter maintains the look and feel of configuring NICs and HBAs, making it easier for IT managers to configure the allocation and management of LAN and SAN resources over InfiniBand

Configuration on the Nexenta storage box:

- Connect to your Nexenta box as root and switch to the root shell:

option expert_mode = 1

!bash

- Then invoke this command:

svcadm enable ibsrp/target
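On the Nexenta side, enabling the target and exporting a volume can be sketched as follows, run from the root shell reached above. The zvol name, DRY_RUN and the helper names are hypothetical. The stmfadm calls follow the standard illumos COMSTAR workflow rather than anything shown in this post. See the COMSTAR SRP quick-start guide in the links for the full procedure.

```shell
#!/bin/sh
# DRY_RUN=1 prints the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

enable_srp_target() {
  # COMSTAR framework service, which the SRP target exports LUs through.
  run svcadm enable stmf || return 1
  # The command from the post: the SRP target over InfiniBand.
  run svcadm enable ibsrp/target || return 1
  # Both services should report "online".
  run svcs stmf ibsrp/target
}

# Export an existing zvol as a LU. $1: zvol such as tank/esxi-srp (hypothetical).
export_zvol() {
  run stmfadm create-lu "/dev/zvol/rdsk/$1" || return 1
  echo "Next: stmfadm add-view <GUID printed by create-lu>"
}
```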

Documentation and links:

- To set the MTU to 4092, follow the User Manual from Mellanox and use partitions.conf (from Hypervisor.fr)

- To activate ibsrp/target on Nexenta 3, follow the Basic COMSTAR Quick-Start Guide for SRP (from Hypervisor.fr)

- Nexenta forum thread on InfiniBand

- Mellanox InfiniBand OFED Driver for VMware vSphere 5.x User Manual

- Mellanox forum thread – for the network cards

- Mellanox HCL here.

- Mellanox Firmware Tools page (versions 2.6 and 2.7 work, whereas 2.8 and 2.9 are buggy). See this thread on ServeTheHome.com

- Mellanox Firmware Download page.

- Interesting paper: RDMA on vSphere: Update and Future Directions presents VMware's testing of the large performance benefits for vMotion when using RoCEE (RDMA over Converged Enhanced Ethernet).

Performance:

Screenshots from performance tests made with VMware I/O Analyzer are available on the Hypervisor.fr blog here. What I made instead is a short video showing vMotion speed in action. I put a host into maintenance mode, and you'll see the concurrent vMotion operations finishing very quickly! It's best watched in HD and full screen.

Credits:

This article would not have been possible without the help of @hypervisor_fr and @rochegregory

Update: Please note that the original article reflects the version of vSphere that was current at the time, and the NexentaStor version wasn't the latest one either. So I cannot guarantee that the drivers used in this post still work, as I have since moved to fully VMware VSAN-based shared storage.


Good read, and thanks for the mention, Vladan.

Next step? Find a cheap switch, to scale up.. -:)

Very nice. I do have a question; I've been following your blog for a while. Is your Nexenta running on physical hardware, or is it still in a VM? If it's on hardware, can you share your specs?

Thanks

Alex

No, I’ve moved it to bare metal. I now have 3 hosts to play with + the Nexenta. It’s a test bed; the datastore on the Nexenta runs off a single Samsung 840 Pro 512GB SSD. Now with the InfiniBand storage network it’s super fast. No redundancy, but I’m backing it up daily with Veeam.. -:).

Thank you. What hardware are you running it on? I’m trying to decide whether to go homebrew or Synology.

Thanks

Alex

The hardware for the Nexenta: http://www.vladan.fr/homelab-nexenta-on-esxi/ It runs on bare metal now as a dedicated storage.

There are cheap IB switches on eBay, starting at €350 for a 12-port…

Unless you need some sort of IB ‘managed’ switch, do you?

Thanks for sharing Didier,

Not really; the only concerns are Silence | Budget | Compatibility…. Yes, that will probably be my next step. But I’m really surprised that this technology isn’t “popularized” a bit more, as many SMBs could benefit from it.

IB has (had?) its momentum in HPC, not in the classic SMB market, which runs traditional switched Ethernet topologies.

On a side note, will you be at VMworld Barcelona this year?

Hmm. But still, up to now I’d seen only Ethernet or FC; IB was something new. Well, you never get bored working in IT. -:)

For Barcelona, yes planning…

Hi Vladan,

Where did you get the Molex cables from if I may ask? eBay hasn’t got any reasonable lengths available – only ridiculously long ones like 4 or 6 metres long… Seems like a right nightmare to get hold of them to be honest.

Adrian

Adrian,

Here is the link for the 1m length: http://www.ebay.fr/itm/MOLEX-74526-1002-0-5-mm-Pitch-LaneLink-10Gbase-CX4-Cable-Assembly-1m-/310575941550?

Hope it helps.

Sadly only 3m and 10m lengths are left! Let me ask the seller if they will stock 1m again. Thanks for your reply…

Hmm. I would wait a week or so and look at the regional eBay sites as well (Fr, Ger, etc.), because those items reappear quite regularly. What would you do with 3m cables anyway? -:)

Love these posts on IB. I only wish I could read French. Time for Google Translate!

Like others, I feel an IB switch is the way to go: what you spend on the switch could be saved on the additional cards, plus it’s a much simpler setup than daisy-chaining everything together. The trouble is, what’s an appropriate switch type to go for on eBay?

The other worry I have is driver support. I’m a bit old skool and used to ESX just recognising HW out of the box with drivers on the media. The thought of having to bundle VIBs doesn’t daunt me, but the prospect that Mellanox might drop support in future versions of ESX does. Putting the upfront investment into IB infrastructure only to find it redundant in a year or two is something a homelabber wouldn’t face with conventional gigabit. Assuming the NICs on your whitebox/shuttlePC/IntelNUC/AppleMacMini don’t disappear at the next upgrade! 😀

Finally, this Nexenta stuff looks good. It sounds better than OpenFiler and scales better than, say, a Synology/IOMega. The only thing is VAAI support: does it support VAAI? I’m not sure. It seems a waste of effort to set up 10Gb IB only to have it chewed up by non-offloaded SCSI primitives…

Thoughts?

That’s the plan: to get an IB switch for the backend storage network, even though with 2 ESXi hosts you can start with this setup. At the moment, though, I’m not sure that the Mellanox driver will work with the ESXi 5.5 GA build.

Having a backend storage network would be great not only for Nexenta (VAAI apparently works, and will be certified for NFS, but not iSCSI), but also for VMware VSAN. VSAN will use the storage network for back-end data synchronization while keeping most of the hot blocks local. I’m a big fan of hyperconvergence. Systems like Nutanix or SimpliVity are the future, but they aren’t sold without the hardware… -:) which is not good for homelabs. I liked Chad’s video talking about how “The pendulum is swinging back from the networked attached storage systems (NAS, SAN) back to Server Attached”…. and he says that “you can’t fight against it”… It’s the 3rd video in this post: http://www.vladan.fr/vmworld-2013-highlights/

So clearly SDS (I know it’s overused these days) is the way to go, even (and especially) for homelabs, and a faster storage network is the logical next step. But how? The question remains…

Sadly, most homelabbers face another problem: licensing. Being part of the vExpert group, I don’t have that problem with vSphere, but many folks do, and it’s a big problem for them. Fortunately, VMware is going in the right direction by removing the 32GB RAM limit from the free ESXi 5.5. You can now build whiteboxes with more than 32GB of RAM and go all nested.

So the ultimate homelab quest continues, with updates not only on the software side (building and rebuilding), but also on the hardware side (less frequently)….-:)

100% ok with you Vladan

Hi Vladan, I have a question for you:

Is it possible to connect two ESXi 5.1 hosts directly through InfiniBand cards, without an external storage unit? … So is a configuration with only two hosts possible?

Thanks!

Michele,

Sure it is. That’s actually what I’m using, as you can see in the picture of my “triangle” setup. One of the links goes between the two hosts, so the two hosts are connected directly (without a switch).

Then you can use the local storage of each ESXi host for the VMs, or use a VSA.

Perfect … I start work then.

On Monday I’ll start shopping to upgrade my lab…

Thanks again for the useful information that you give us every day.

Bye

Great experience sharing, Vladan. Could you advise on the host HW requirements for the HCA? Can we use a whitebox motherboard for that? THX

-Eric

I don’t know what you mean. The HCA uses a PCIe slot, so make sure the motherboard you’re planning to get provides some. Then you should be OK…-:)

Hi all,

I don’t know where to find the HP 452372-001 Infiniband PCI-E 4X DDR Dual Port Storage Host Channel Adapter HCA adapter… on eBay there are only used ones… Are there alternatives to this product?

Michelle,

You did not really read through the post.. -:)…. “HP 452372-001 Infiniband PCI-E 4X DDR Dual Port Storage Host Channel Adapter HCA ( 3 pieces – £26 each on Ebay)”

Hi Vladan,

I found the item you listed on eBay, but Italy is not among the countries it ships to. I’m thinking of buying this other item instead. It costs a lot more, but I hope it will suit my case… it’s the 10Gbit NC552SFP network card. I would take two to directly connect two hosts; is it possible to do such a thing? In terms of performance for backup and migration of virtual machines, would I get close to the performance you show in your video? Thank you.

I got mine shipped from Taiwan, I believe… Not sure about the card you’re referring to. But the list of available parts/countries changes frequently. Just checked this one, it’s from the UK… http://www.ebay.fr/itm/HP-452372-001-Infiniband-PCI-E-4X-DDR-Dual-Port-Storage-Host-Channel-Adapter-HCA-/360657396651?pt=UK_Computing_ComputerComponents_InterfaceCards&hash=item53f8db23ab

It’s the same product I was looking at… shipping to Italy is not included… what a shame…

thank you anyway Vladan…

Great article Vladan. I plan to follow your steps and set up a similar InfiniBand network on my home lab.

Regards

Steve

Reading through your post, you did not specify whether you set up IP addressing on each host. Did you just install a subnet manager on all three nodes, and everything just worked?

Yes, you just need to make sure that each vmkernel adapter has an IP address to see each other… The same way as normally with 1GbE adapters.

Hello Vladan,

Have you installed “HP 452372-001 Infiniband PCI-E 4X DDR Dual Port Storage Host Channel Adapter HCA” in a Windows Server 2012 R2 server?

If yes, I would like to install and set up this card in Windows Server 2012 R2 environment.

Thanks.

No, I don’t have a physical W2012 system in my lab.. -:( Check Mellanox for drivers; that’s the only advice I can give.

Hi vladan,

I don’t understand whether there is one OpenSM on each host or not.

If yes, are there 2 different subnets, or do 2 OpenSMs coexist on the same subnet?

That would be nice for redundancy.

If not, when the host running OpenSM is powered off or broken, everything stops 🙁

AFAIK there is one OpenSM per host. When a host dies, its OpenSM dies too, unfortunately. But the IB network keeps running, because OpenSM is still present on the other hosts in the cluster.

Did you run into any limitations on MTU sizes? With a simple host-to-host connection, I get errors when I attempt to change or create a vSwitch with an MTU larger than 1500.

I think it depends on the vSphere version. Try to get in touch with Erik Bussink, as my lab is currently unavailable.

Hi,

thanks for the great tutorial! 🙂 I’ve just discovered how cost-effective and awesome InfiniBand can be. But I ran into an odd problem: I can’t seem to set the MTU higher than 2044 and still transmit at high speed. At MTU=2044 I get:

[ 4] local 192.168.13.36 port 5001 connected with 192.168.13.37 port 53336
[ ID] Interval Transfer Bandwidth
[ 4] 0.0-10.0 sec 5.03 GBytes 4.32 Gbits/sec

*measured with iperf by hypervisor.fr

When I set the MTU higher than 2044, all I get is poor performance, around 800 Mbit/s. And if I try to set MTU = 4092, vSphere complains and doesn’t let me, because the InfiniBand vmnic doesn’t allow it. That’s odd, since I’ve set the mtu_4k parameter to true.

Has anybody run into a similar problem, and what was the workaround?

Thanks in advance!

🙂

Alex

So it’s interesting: I have these cards, and they work in Ethernet mode in ESXi 6.7, but they won’t go into IB mode at 20Gb, only 10Gb.

Any chance you still have this hardware to give it a stab and see if you can “magically” make it work?

I know so little that following the commands you gave was difficult, but I got somewhat there. I just know it will take custom tweaks (if it’s possible at all) rather than working out of the box in ESXi 6.7.

Hi

Can you please provide additional information on how you ran these cards with 6.7? Which exact card models (just to be sure), which drivers, how you configured them, etc.?