It's difficult to ignore Intel's latest CPU release, codenamed Haswell. The K-series parts lack support for the transactional memory extensions (TSX) and VT-d device virtualization included with the standard Haswell CPUs, so I obviously turned to a non-K model. If you are into overclocking, the 4770K would probably be your choice.

For my home lab builds I always pick consumer parts. Price and WAF (wife acceptance factor) are usually the first criteria. The second criterion was energy consumption. That's why I picked an S-series CPU, the energy-efficient model. I also picked up a cheap Haswell-based board for only €90. Both the board and the CPU support VT-d.

There are many home lab options, covering different needs, acceptance levels and other factors to consider. Depending on your needs, you'll probably end up with a completely different build. Here I'm showing what I've done, how it works for me, the configuration, and the approximate power consumption.

Update: Check out my new ESXi home lab host: Building Power Efficient ESXi Server – Start with an Efficient Power Supply

The hardware components

I'm going “green” and picked the energy-efficient Intel i7-4770S, which has VT-d, VT-x and vPro while having a TDP of only 65 W. Its 4 cores, 8 MB of cache and Hyper-Threading give me enough power and flexibility to play with. The only real “limiting factor” is memory: I would prefer 64 GB (with prices being so low at the moment).

The board I picked is an mATX board from ASRock which, in addition to two PCIe slots, also has two standard PCI slots, which are disappearing from many boards. Otherwise there is nothing fancy there, and I'm not an overclocking fan… What I needed was VT-x to be able to install ESXi, and VT-d to play with passthrough. I found both with this cheap ASRock board.

I picked up a quad memory kit, the G.Skill F3-10666CL9Q-32GBXL (€220). As mentioned, 32 GB is the maximum the CPU (and the board) can support. But even with “only” 32 GB, this box will have more RAM than the two other i7 (Nehalem) boxes in my lab, which max out at 24 GB.

Update: As a storage “rig” I'm using Nexenta, on a box I built a few months ago. It's a Mini-ITX box that I revamped with an Intel i5-3470S and 16 GB of RAM. The box runs NexentaStor Community Edition (with some flash storage), so no more “hybrid” approach with Nexenta as a VM.

Today's build uses a micro-ATX motherboard, so I also took a smaller case from Zalman: an ordinary, budget-friendly box for approximately €30. I quite liked the internal storage layout (I'm not planning to fill it with spinning magnetic disks). There is room for two 3.5″ disks and one 2.5″ SSD (or HDD). The whole system is powered by an Antec NEO ECO 620C 620W, one of the PSUs certified for Haswell. Yes, you must pay attention to get a Haswell-compatible PSU, since the CPU draws almost no power at idle.

Here is a quote from a TechReport.com article in which the authors got detailed information about Haswell PSU compatibility. This is the reply from PSU manufacturer Corsair:

According to Intel's presentation at IDF, the new Haswell processors enter a sleep state called C7 that can drop processor power usage as low as 0.05A. Even if the sleeping CPU is the only load on the +12V rail, most power supplies can handle a load this low. The potential problem comes up when there is still a substantial load on the power supply's non-primary rails (the +3.3V and +5V). If the load on these non-primary rails are above a certain threshold (which varies by PSU), the +12V can go out of spec (voltages greater than +12.6V). If the +12V is out of spec when the motherboard comes out of the sleep state, the PSU's protection may prevent the PSU from running and will cause the power supply to “latch off”. This will require the user to cycle the power on their power supply using the power switch on the back of the unit.
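The failure condition described in the quote boils down to a voltage window check on the +12V rail. Below is a minimal Python sketch, assuming the usual ATX tolerance of ±5 % on +12V (11.40 V to 12.60 V; the quote itself only gives the 12.6 V upper bound). The readings are hypothetical, not measurements.

```python
# Minimal sketch: check +12V readings against an assumed ATX tolerance window.
# Assumption: +12V nominal with +/-5 % tolerance (11.40 V to 12.60 V); the quoted
# reply only states the upper bound (out of spec above +12.6 V).
V12_MIN = 11.40
V12_MAX = 12.60


def rail_12v_in_spec(volts: float) -> bool:
    """True if a +12V reading is inside the assumed tolerance window."""
    return V12_MIN <= volts <= V12_MAX


def c7_power_watts(amps: float = 0.05, volts: float = 12.0) -> float:
    """Power drawn on +12V by a CPU sleeping in C7 (0.05 A per the quote)."""
    return amps * volts


print(f"C7 load on +12V: about {c7_power_watts():.1f} W")
# Hypothetical readings taken as the board wakes from C7
for reading in (12.05, 12.55, 12.70):
    status = "ok" if rail_12v_in_spec(reading) else "out of spec: PSU may latch off"
    print(f"{reading:.2f} V -> {status}")
```

Roughly 0.6 W on +12V is a tiny load, which is why a PSU that regulates poorly at near-zero +12V load (while +3.3V/+5V are still loaded) can drift out of the window.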

ESXi Network adapters.

At the time of writing, the internal gigabit LAN port (an Intel I217V) isn't recognized by ESXi 5.1 U1, and I did not find a driver which I could “slipstream” into the ISO or install as a VIB. This isn't really a problem, since I slotted in two spare PCI Intel 82541PI Gigabit NICs, so I can currently run this host with 2 NICs, which is OK. I'm using VLANs on my SG300-10 switch, so the first NIC carries the management network, vMotion network, FT and VM network, each on a separate VLAN. The second NIC is configured for iSCSI traffic.

This might change with the next major VMware release, since the internal gigabit LAN port is an Intel one and, from my experience, most Intel LAN adapters just work out of the box.
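The two-NIC layout described above can be written down as a small plan and sanity-checked before configuring it on the host and on the SG300-10. This is a hypothetical Python sketch: the VLAN IDs and vmnic names are made up, and the iSCSI VLAN is an assumption (the post only says the second NIC carries iSCSI).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    name: str
    vlan_id: int   # hypothetical VLAN ID
    uplink: str    # physical NIC (vmnic) carrying the traffic


# Hypothetical plan mirroring the post: NIC 1 carries four VLANs, NIC 2 carries iSCSI.
LAYOUT = [
    PortGroup("Management", 10, "vmnic0"),
    PortGroup("vMotion", 20, "vmnic0"),
    PortGroup("FT", 30, "vmnic0"),
    PortGroup("VM Network", 40, "vmnic0"),
    PortGroup("iSCSI", 50, "vmnic1"),
]


def check_layout(groups: list[PortGroup]) -> list[str]:
    """Report VLAN IDs outside 1-4094 and VLAN IDs used by more than one port group."""
    problems = []
    first_user: dict[int, str] = {}
    for pg in groups:
        if not 1 <= pg.vlan_id <= 4094:
            problems.append(f"{pg.name}: VLAN {pg.vlan_id} is outside 1-4094")
        if pg.vlan_id in first_user:
            problems.append(f"{pg.name}: VLAN {pg.vlan_id} already used by {first_user[pg.vlan_id]}")
        else:
            first_user[pg.vlan_id] = pg.name
    return problems


def traffic_per_uplink(groups: list[PortGroup]) -> dict[str, list[str]]:
    """Group port group names by the vmnic that carries them."""
    per_nic: dict[str, list[str]] = {}
    for pg in groups:
        per_nic.setdefault(pg.uplink, []).append(pg.name)
    return per_nic


print(check_layout(LAYOUT) or "no VLAN conflicts")
for nic, names in traffic_per_uplink(LAYOUT).items():
    print(nic, "->", ", ".join(names))
```

Separate VLANs keep the traffic types apart logically, but everything on vmnic0 still shares the bandwidth of that one physical uplink.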

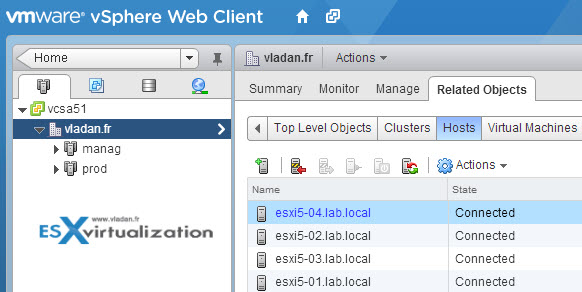

ESXi configuration.

I'm running ESXi on this build from a USB stick. It's a convenient way to boot an ESXi host without the need for locally attached disks. In production environments you would probably put two local disks in RAID 1 for some redundancy, but for home labbing I'm comfortable with it. No noise next to my desk… -:)

If you're new to virtualization, or don't know how to install ESXi on a USB stick when you don't have a CD drive, you can read my post How to create bootable ESXi 5 USB memory stick with VMware Player. I already have shared storage in my lab, so I did not need to put any local disks inside. I might add a hard drive just to install W7 with VMware Workstation for some testing.

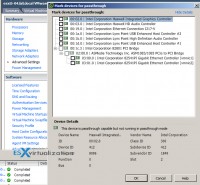

ESXi can leverage the passthrough capability of the CPU and the motherboard. Here is a screenshot showing the devices available for passthrough.

Another thing I haven't mentioned yet: the CPU fan bundled with Intel CPUs, which is usually crappy and noisy, isn't anymore. That's rather good news. In addition, I've selected “silent mode” in the BIOS to lower the RPM, so there's no need to replace it with another fan as I used to do in my previous builds… -:)

The local SATA controller, called Lynx Point, is also recognized by ESXi 5.1; here is the screenshot. I tested with an SSD I had on hand today, and it was recognized without trouble. There are six SATA III ports on the board. But I'm not using any local disks in this whitebox, as most of my VMs run from shared storage (Nexenta and Drobo).

Power Consumption:

The whole Haswell box draws only 50–70 W, which is excellent compared to the older i7 Nehalem boxes, which draw 120–130 W each. The whole lab consumes around 440–470 W, which is quite good considering there are 4 hosts, 2 switches and 1 NAS box… It's starting to get big, but is a lab totaling 96 GB of RAM big enough to run the whole VMware vCloud Suite, Horizon, View, Zimbra… plus some Microsoft and Veeam to back it all up? -:)
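To put these wattage ranges into yearly terms, here is a rough Python calculation. It assumes the boxes run 24/7 and uses a hypothetical electricity price of €0.15/kWh; substitute your own tariff. The RAM tally assumes the fourth host is the 16 GB Nexenta box, which is what makes the total come to 96 GB.

```python
# Rough yearly energy estimate using the wattage ranges from the post.
# Assumptions: 24/7 operation and a hypothetical price of 0.15 EUR per kWh.
HOURS_PER_YEAR = 24 * 365  # 8760 h


def kwh_per_year(watts: float) -> float:
    """Energy used in a year by a constant load of `watts`."""
    return watts * HOURS_PER_YEAR / 1000


def yearly_cost(watts: float, eur_per_kwh: float = 0.15) -> float:
    """Yearly cost in EUR at the given (hypothetical) tariff."""
    return kwh_per_year(watts) * eur_per_kwh


# RAM per host; treating the Nexenta box as the fourth host is an assumption
ram_gb = {"haswell": 32, "nehalem-1": 24, "nehalem-2": 24, "nexenta": 16}
print("Lab RAM:", sum(ram_gb.values()), "GB")

ranges_w = {"Haswell box": (50, 70), "Nehalem box": (120, 130), "Whole lab": (440, 470)}
for label, (low, high) in ranges_w.items():
    print(f"{label}: {kwh_per_year(low):.0f}-{kwh_per_year(high):.0f} kWh/year, "
          f"about EUR {yearly_cost(low):.0f}-{yearly_cost(high):.0f}")
```

Each watt of constant load is 8.76 kWh per year, so the 60-odd watts saved per Nehalem box add up quickly in a lab that never sleeps.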

Used Parts:

- Intel i7-4770S

- G.Skill F3-10666CL9Q-32GBXL (4x8GB)

- ASRock H87M PRO4

- Antec NEO ECO 620C 620W

- Zalman ZM-T1U3

The whole ESXi box costs around €650 and will be used in my lab for learning purposes. As you know, the Haswell architecture doesn't bring much of a computing power increase (around 10% at most), but it brings very good energy efficiency and quite good built-in graphics.

The i7-4770S has built-in Intel HD Graphics 4600, so if you want to use such a box for something other than an ESXi host, you can. Just slot in an SSD to obtain a killer gaming station, with energy efficiency as a bonus… Or use it as a VMware Workstation box for “vLab-in-a-box” configurations. You might want to get my e-book, which guides you through it: How-to build a nested vSphere Lab on PC or laptop with limited resources?

I hope that you found this post useful. Feel free to share, comment and subscribe to our RSS feed.

You'll certainly like this! ESXi 5.5 Free Version – no more hard limit 32GB of RAM

Some older Homelab articles:

- How to build a low cost NAS for VMware Lab – introduction

- How to build low cost shared storage for vSphere lab – assembling the parts

- VMware Home Lab: building NAS at home to keep the costs down – installing FreeNAS

- Performance tests with FreeNAS 7.2 in my homelab

- Installation Openfiler 2.99 and configuring NFS share

- Installing FreeNAS 8 and taking it for a spin

- My homelab – The Network design with Cisco SG 300 – a Layer 3 switch for €199.

- Video of my VMware vSphere HomeLAB

- How to configure FreeNAS 8 for iSCSI and connect to ESX(i)

Hey Vladan, very nice article, thanks again! To add some extra info to your Haswell PSU issue: there’s a very simple solution to the “Haswell/PSU” issue, just a simple and silent case fan. This keeps power flowing even in idle mode (advanced power mode), so the PSU will not shut itself down. Cooler Master is recommending it for older PSUs: http://coolermaster-usa.com/press_release/haswell/haswell.html … maybe a buck-saver for those upgrading their whiteboxes? Keep up the good work!

Hey Vladan, such a nice build for a home lab. Mine is running an i7-3930K from a pre-Haswell generation (Sandy Bridge-E) with 64 GB of RAM. My major concern is storage: I used the 4x SATA 3 and 2x SATA 2 internal ports until I ran out of ports and added a PCI SATA 3 card. I added a backplane (2x 3.1/2 optical drive bays) to add 8 more 2.5″ HDDs/SSDs (not sure if I will put SSDs or only HDDs into it). Currently I run nested VMs inside Workstation on top of Server 2012 as the OS, but I may put ESXi directly on the host (a supported NIC will be the issue: I've got an Intel onboard network controller, but I think it won’t be detected by ESXi) and then run nested ESXi on top of the host.

regards,

v

Hey Valentin,

A nested lab through VMware Workstation is definitely good for learning. But when you want to push some performance, bare metal is the way to go. You can still run nested ESXi hosts there…

Update: The Intel I217V onboard NIC works with the VIB provided by davec.

If the mobo’s NICs aren’t recognized, it’s a shame, but it should not stop you. Intel desktop LAN adapters are pretty cheap, in PCI or PCIe versions depending on which slots the mobo has.

Even a “hybrid” approach like the one I run with my Nexenta – http://www.vladan.fr/homelab-nexenta-on-esxi/ – is good: at the same time I have fast shared storage, and I keep that host as the base of my “management cluster”. I can easily “break” the other two hosts without breaking the whole lab… But I won’t go Mini-ITX again. Besides the limit of 16 GB of RAM, I’ve got “fat fingers”… -:)

Best,

Vladan

Hi Vladan,

Another fan of your articles. This one actually inspired me to finally start building a home virtualization lab. I had been considering it for a long time, but scanning all the options and possibilities, going from one extreme to another, it kept getting postponed.

So basically, I am (compared to virtualization experts) a beginner in this area (OK, I am doing “light” virtualization on my notebook as far as its resources allow, but I hit the wall a long time ago). After reading your article I decided to go ahead with a basic investment in such a lab.

As it will be my first lab (and my first build), I prefer to take some time to look at everything before continuing. The purpose of the home lab would be to extend my knowledge of virtualization, with the accent on VMware’s ESXi hypervisor. I could run VMware Workstation, but a type 1 hypervisor simulates “real” life better, I guess. As for VMs, I expect to run 5-10 of them (not always simultaneously), with a DC and additional servers to simulate the BI environment I work in. As the whole IT area is like a candy shop for me, at this moment I don’t know where this road will take me. It might end up with some VCP certifications as well.

So, from your experience, will a Haswell ESXi lab built from the components you described be sufficient for such a purpose? Energy saving matters here, so the i7-4770S looks like a good choice. Concerning storage, I already have a 512GB SSD. If necessary I will extend it (which is why I would opt for a different case that supports more 2.5” bays). I am aware that the CPU and mobo limit in this build is 32GB, but that should be sufficient for my needs. I am not looking for extreme/enterprise-like performance here.

From the budget point of view, this build is acceptable, but say you could spend €100-200 more, where would you invest it (storage excluded)?

Sorry for the long post, and keep up the good work.

P.S. I have no WAF criteria 🙂

Cheers,

Damir

Damir,

Thanks for your comment. If you already have an SSD, that’s very good, since SSDs are still very expensive. You can easily run 10 VMs on the box; you’ll be overallocating memory, but ESXi can handle memory overcommitment. Of course you won’t be able to run any “monster VMs”…
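To make the overcommit point concrete, here is a small Python sketch that adds up the memory configured for ten VMs on the 32 GB box. The VM names and sizes are hypothetical (loosely modelled on the DC plus BI servers mentioned above). A ratio above 1 means ESXi has to fall back on its memory reclamation techniques (transparent page sharing, ballooning, compression and, as a last resort, swapping) whenever the VMs actually touch the memory they were given.

```python
# Sketch: configured VM memory versus physical RAM on the 32 GB host.
# All VM names and sizes below are hypothetical examples.
HOST_RAM_GB = 32

VMS_GB = {
    "dc01": 2, "sql01": 8, "ssas01": 6, "ssrs01": 4, "app01": 4,
    "web01": 2, "vcenter": 8, "client01": 2, "client02": 2, "test01": 4,
}


def overcommit_ratio(vm_sizes_gb: dict[str, int], host_gb: int) -> float:
    """Configured VM memory divided by the host's physical memory."""
    return sum(vm_sizes_gb.values()) / host_gb


configured = sum(VMS_GB.values())
print(f"{len(VMS_GB)} VMs configured with {configured} GB on a {HOST_RAM_GB} GB host")
print(f"Overcommit ratio: {overcommit_ratio(VMS_GB, HOST_RAM_GB):.2f}:1")
```

A modest ratio like this is usually fine in a lab where most VMs sit idle; the hypervisor itself also needs some of the 32 GB.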

You can also learn on a single box to pass your VCP, running some nested ESXi hosts. As the box’s memory can’t be extended, I’d consider adding another SSD, which would be useful for running a VSA to get shared storage (Nexenta, FreeNAS, or even a Windows box with the free StarWind…).

If you start investing in other physical components like I did, then €300 is probably a good start for an L2/L3 switch… but it really depends on your needs and the direction you want to take with your learning.

Best,

Vladan

Hi Vladan,

I am a long-time follower of your articles.

Thanks for sharing your Haswell-based ESXi whitebox configuration. I have built a similar configuration, but with a Gigabyte Z87M-D3H and a Haswell i7-4770. The onboard NIC is a Realtek rather than an Intel, and it is still not detected by ESXi 5.1, which is a bit disappointing. I am updating my blog with the lessons learnt using this motherboard so others can benefit. One strange thing: the NIC can be passed through with VT-d and works fine in a Windows VM after installing the Realtek driver within Windows.

Thanks for sharing. Perhaps trying the ESXi-Customizer tool and making a custom ESXi ISO image would help – http://www.vladan.fr/esxi-customizer-tool-to-customize-esxi-install-isos/ (if a driver exists).

But it’s already a good thing that the internal SATA controllers work for you, as you can easily add PCI or PCIe NICs, and you’d need more than one NIC anyway. ESXi 5.x supports the Realtek 81xx chipsets, so for <$20 on eBay you're ready to go...

Hi,

I have posted about my Haswell build experience on my blog and referenced your posts wherever applicable. I found that the AHCI controllers cannot be passed through with VT-d; this may be supported in future ESXi releases.

Hi Vladan,

Thank you very much for writing about your experience here – it helped me a lot! I built nearly the same environment as you:

Intel i7-4770S, ASRock H87M PRO4, 2x 8GB RAM and some SATA III SSD+HDD in a Lian Li PC-V355B case.

With a picoPSU and an external power supply it consumes ~28 W with all VMs nearly idle – great!

Hi Alex,

Could you please tell me which power supply you use for this configuration?

Thank you

Hi All

I am new to ESXi.

I want to set up a home lab server. The Haswell i7-4770 CPU fits my budget.

1. Does the Gigabyte B85M-HD3 motherboard support ESXi?

2. Can I just use the onboard LAN?

3. Does ESXi need at least two network cards?

4. Does ESXi need a SCSI hard disk controller? Can I just use one SATA3 hard disk/SSD?

Sorry for the stupid questions, I am really new to ESXi.

Hope someone here can help. Thanks!

Hi Galileo,

The Gigabyte GA-B85M-HD3 has an Intel B85 Express chipset and a Realtek onboard NIC.

I am not sure whether ESXi supports the B85 chipset, which matters for using the SATA III controller; maybe someone else can confirm.

Some Realtek NICs with the r8168/r8169 chipset may be supported by ESXi out of the box or with 3rd-party drivers, but I would not recommend them, as I often read about problems with them.

Better to buy an additional NIC, e.g. an Intel 82574L PCIe (EXPI9301CT) for about €20, which is supported out of the box.

Why not consider an ASRock H87M PRO4 mainboard instead?

It’s also micro-ATX, and its H87 chipset along with the 6x SATA III ports is confirmed working with ESXi. 32 GB of RAM is possible, too.

PCI passthrough (VT-d) is also confirmed working.

The onboard Intel I217V NIC is currently not supported, but I am quite confident that it will be in a future ESXi release. Meanwhile, buy an extra EXPI9301CT NIC.

One NIC is fine to start with – you don’t need more at the beginning.

regards,

Alex

ohh…Thx for your reply…

I will do some research on the B85 chipset. And thanks for the other advice, it helps me a lot.

Best Regards,

Galileo

Hi Galileo,

Please keep us posted on your findings about the B85 chipset. IMO, it is a cost-effective motherboard for an ESXi whitebox when ESXi is connected to a dedicated NAS running on a different machine. As mentioned by Alex, Vladan and on my blog, onboard LAN controllers are not supported as of today. You may have to use add-on network controllers.

Alex, on your “Onboard NIC Intel I217V is currently not supported” comment.

You can get the I217V running by adding this VIB to a customized install ISO.

http://shell.peach.ne.jp/~aoyama/wordpress/download/net-e1000e-2.3.2.x86_64.vib

http://www.vladan.fr/esxi-customizer-tool-to-customize-esxi-install-isos/

http://shell.peach.ne.jp/aoyama/archives/2907

Or by installing it on an already set-up ESXi instance, as described here:

http://virtual-drive.in/2012/11/16/enabling-intel-nic-82579lm-on-intel-s1200bt-under-esxi-5-1/
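For the second route (installing the VIB on an already running host), the steps usually come down to a few esxcli commands. The Python sketch below only assembles and prints them; the datastore path is hypothetical, and it assumes the VIB is packaged at the CommunitySupported acceptance level, which is why the host's acceptance level is lowered first. Check the linked posts before running anything on your host.

```python
# Sketch: assemble the esxcli steps commonly used to add a community VIB to a
# running ESXi 5.x host. Nothing is executed; paste the printed lines into the
# ESXi shell (SSH). The datastore path below is hypothetical.
VIB_FILE = "net-e1000e-2.3.2.x86_64.vib"


def vib_install_steps(vib_path: str) -> list[list[str]]:
    """Return the commands in order; vib_path must be an absolute path on the host."""
    return [
        ["esxcli", "system", "maintenanceMode", "set", "--enable", "true"],
        # Assumption: the VIB is CommunitySupported, so the host must accept that level
        ["esxcli", "software", "acceptance", "set", "--level=CommunitySupported"],
        ["esxcli", "software", "vib", "install", "-v", vib_path],
        ["reboot"],  # the new driver is loaded after the reboot
    ]


for step in vib_install_steps(f"/vmfs/volumes/datastore1/{VIB_FILE}"):
    print(" ".join(step))
```

After the reboot, take the host out of maintenance mode again and check that the I217V shows up as a vmnic.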

Nice find. Thanks for sharing… -:)

Many thanks for sharing the I217 VIB here!

I am currently a bit cautious about installing the VIB, because it will also replace the driver of my onboard Intel 82574L NIC. Can you confirm it works with both the 82574L and the I217?

What do you think about this build?

CPU i5 4570

MOBO GigaByte GA-Z87M-D3H

Case NOX NX-1 Evo

PSU LC Power 550W 6550GP V2.2 120mm

RAM 2 x Corsair Vengeance 8GB DDR3 1600 PC3-12800

HDD 2 x Seagate 1TB Barracuda 7200rpm 64MB SATA 6.0Gb/s

Cooler ARCTIC COOLING FREEZER 7 PRO REV.2

Thanks

Alex,

The Intel I217V works after installing the VIB. I’m using it with a pair of Intel 82541PI Gigabit NICs, which are PCI-based 1 Gb NICs.

Hi Vladan

Do you know if ESXi 5.5 Free will have native support for the Intel I217V NIC?

The e1000e driver has supported the I217V for a year now, since release 2.0. One would hope that ESXi 5.5 contains an up-to-date version of the e1000e driver.

I guess we need to wait and see.

No, I don’t know at the moment, but the VIB provided by davec in one of the comment threads works just fine.

Same here – the I217V VIB works fine! I finally installed it after the original e1000e driver got stuck once and the vnic was deconfigured by ESXi.

I have just installed ESXi 5.5 and I’m sorry to report that ESXi 5.5 does not have native support for the I217V NIC.

The version of the e1000e driver installed as standard in ESXi 5.5 is ‘VMware net-e1000e 1.1.2-4vmw.550.0.0.1331820’, which is pretty old.

The community will have to install a custom VIB to get support for the I217V NIC in ESXi 5.5.

Well, it’s not a big deal: the VIB is available for download, and including a VIB in an ISO is also a piece of cake with the right tools -:). Check out Ivo’s post here http://www.ivobeerens.nl/2013/09/20/enable-the-intel-i217-v-network-card-in-vmware-esxi/ which shows the steps to make a custom ISO.

Hope it helps.

I am planning to replace my HP ProLiant ML350 G5 with a whitebox similar to this one, but I want to have my NAS/Nexenta internally.

I have two questions:

RAM:

32GB is a bit too little; I would like the option to install 64-96 or even 128GB of RAM. What motherboard and CPU would you recommend? Low power consumption is desired.

Storage:

Storage DRS is something I have not tested yet. Say I have a VM volume on regular SATA disks and one on an SSD, all in Nexenta. Will Storage DRS always try to put the most active VMs on my SSD? Or is a more enterprise solution needed for SDRS?

Nice, I built something similar myself. http://www.keymoo.info/trading/archives/2631

Nice build with a good learning curve. With that much CPU power and RAM, you can nest a couple of ESXi hosts too… Enjoy.

Hello Vladan, very interesting choices you have going on here. Like many of your visitors, I am looking at building my own lab for studying. My requirements would be to have approximately 15 servers running simultaneously (mainly Windows Server 2008R2/2012R2). Besides that, I would want to run a virtualized Cisco backbone in GNS3. Would this setup in particular be powerful enough to run this kind of lab?

I also looked at a dual Opteron setup, but that is a whole different ballgame moneywise…

Looking forward to your opinion.

If you have the budget for a dual-socket system, why not… There are soooooo many possibilities… It also depends on whether you want to go with consumer parts or server parts, and whether you’ll build the system yourself or prefer to leave that to someone else and just install the hypervisor…

It also depends on where you live. If you’re US-based, you probably want to go the Supermicro way and build a system on a Supermicro motherboard, with IPMI (the iLO-style remote management) for playing with remote access and so on…

But if you’re on a budget, you might want to go for a Haswell-based box like I did, and add an SSD to it instead of spinning disks. 15 VMs should still be fine with 32GB of RAM…-:)

Hope this gives you at least some answers…

I have spent a lot of time now trying to find a vSphere whitebox with these specs:

– small, small, small

– cheap

– low power

– 64 GB ram (would have liked ECC)

– quiet

Here is what I probably will get if I do not find a deal breaker.

Gigabyte GA-X79-UP4

Intel Xeon E5-2603v2 1,8GHz Socket 2011 Box

Cooler Master Seidon 120M

OCZ Vector 128GB

Sunbeam Tech UFO Acrylic Cube Case ACUF-T (Transparent)

Corsair CX500M 500W

Team Group Xtreem Vulcan DDR3 PC17000/2133MHz CL11 4x8GB

Sapphire Radeon HD5450 Passive HDMI 2GB

This costs 8 592 SEK or 1 318 US dollars (with 25% VAT).

I will add five 3.5″ 3TB SATA drives for storage; the SSD will be part of this storage in Nexenta. I might buy a fanless PSU, which will add 707 SEK / 108 USD.

I felt I needed 64GB so Nexenta could get a decent amount of memory.

Yeah, LGA 2011 was going to be my choice for 64GB of RAM too. But then I went the LGA 1150 way… Just make sure that your board supports that CPU. In a quiet whitebox there is no place for spinning disks. For my future VSAN build I’m seriously thinking of moving that cluster out of the room where I’ll be working… -:)

Hi,

We all know that ASRock supports VT-d, but what about the newest models, in particular the ‘Z87 Extreme6’ or ‘Z87 Extreme9’? I want to build a new home lab based on a Haswell i7. Has anyone tested it?

Just my experience:

ASRock Z87 Extreme6

Intel I7 4770

Adaptec 2405 for RAID1

2 x WDC 2 TB Western Digital Red

32 GB with 2 x Kingston KHX1600C10D3B1K2/16G

ESXi on a 128 GB OCZ Vertex SSD

I was able to inject the vib file posted by davec into esxi 5.5 ISO image using ESXi Customizer 2.7.1.

It works perfectly; the only drawback is that only one NIC is recognized by the driver, while the ASRock mobo is equipped with dual GbE NICs.

Thanks for sharing.

The “dual Onboard NICs” aren’t the same model:

1 x Giga PHY Intel® I217V, 1 x GigaLAN Intel® I211AT

That’s why one of them (the second one) isn’t recognized. Perhaps the community has compiled a driver; try looking around. Good luck!

Thanks Kranz.

Do you know if there is an HDD size limit? I’m planning to buy a 4TB WD Black hard drive.

In vSphere 5.5 there is a limit of 62TB for a VMDK. There is no limit on how large a physical disk can be.

What do you mean? The VMDKs on the datastore can now be as large as 62 TB. Concerning individual drive size, it depends on the RAID controller sitting underneath (supported or not?). Not all RAID controllers support 4TB drives. If you’re using hardware from the HCL, then there are “no surprises”…
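On the “not all RAID controllers support 4TB drives” point: one common cause (an assumption here, since the thread doesn't say which controllers are affected) is 32-bit LBA addressing, which with 512-byte sectors tops out at 2 TiB. A small Python sketch of that arithmetic, plus the 62 TB VMDK limit mentioned above:

```python
# Sketch: addressable capacity for a given LBA width and sector size.
# Which controllers are limited to 32-bit LBA is not stated in the thread;
# check the controller documentation or the VMware HCL.
TIB = 2 ** 40
TB = 10 ** 12
VMDK_MAX_TB = 62  # vSphere 5.5 VMDK limit, as stated above


def max_addressable_bytes(lba_bits: int, sector_bytes: int = 512) -> int:
    """Largest capacity reachable with 2**lba_bits sectors of sector_bytes each."""
    return (2 ** lba_bits) * sector_bytes


def drive_fits(drive_tb: float, lba_bits: int, sector_bytes: int = 512) -> bool:
    """True if a drive of drive_tb (decimal TB, as marketed) is fully addressable."""
    return drive_tb * TB <= max_addressable_bytes(lba_bits, sector_bytes)


def vmdk_size_ok(size_tb: float) -> bool:
    return size_tb <= VMDK_MAX_TB


limit = max_addressable_bytes(32)
print(f"32-bit LBA, 512-byte sectors: {limit / TIB:.0f} TiB ({limit / TB:.2f} TB)")
for size_tb in (2, 3, 4):
    print(f"{size_tb} TB drive fully usable with 32-bit LBA: {drive_fits(size_tb, 32)}")
```

With 48-bit LBA the limit moves far beyond any current drive, so a 4TB disk on a controller that supports it is no problem.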

Vladan,

I plan on building a Haswell ESXi whitebox using the specs you listed (thank you for doing so), and wanted to get your thoughts on running VMware Workstation on it to create the multiple ESXi hosts and the other VMs.

Thx,

Jeff

Yes, it’s a nice, quiet and powerful box -:). Plus, with the Haswell architecture the graphics are built in, so it consumes less energy and stays cooler.

Hey Vladan,

great blog you’ve got here…!

I’m about to build a whitebox ESXi host myself to replace a Supermicro H8QME-2 based 4-socket Opteron system (4* Shanghai, 128 GByte RAM) which consumes way too much power…

I’m not so much concerned about computing power…but I definitely need 64 GByte RAM…what board/RAM combination would you advise me to buy?

Thanks a lot & best regards,

Jonathan

Hi,

Quite a pity to see that you deleted my question – may I ask why? I would very much like to base mine on your build, but I NEED 64 GB of RAM. Is that still only possible using Socket 2011?

J.

Here are the parts I am using. I’m still waiting for the CPU, so I will not be done until next weekend.

http://www.prisjakt.nu/list.php?l=2348770&view=l

Missing here is a controller card, which I will buy on eBay.

And yes, socket 2011 and a CPU that supports more than 32GB are needed. The Xeon E5 series does this.

PS: Can anyone recommend a decent GPU? Nothing special is needed. I will use it for 1080p/Ultra HD video and to test XenDesktop/View on an iPad.

I will post my results when I am done.

Hi Jonathan 🙂

I’m using a 64GB RAM build based on an Intel i7-3930K (6 cores, 12 threads). The mobo is an ASUS P9X79 (socket 2011). I don’t think there are any socket 1150 mobos that accept 64GB of RAM for now.

Regards,

Vpourchet

I think your comment simply hadn’t been approved yet; that’s why it did not appear. I haven’t deleted it.

Okay, sorry.

So what about my original question – is there any possibility to get a Haswell CPU up and running with 64 GBytes?

Thanks Fredrik – may I ask why you decided to buy a Xeon CPU (Xeon 2609v2, 2.4 GHz) instead of a Core i7, e.g. the i7-4820K (3.7 GHz)?

Lower wattage was the main issue when I decided which CPU to get, since I plan to run it 24/7.

Hardware is not my strongest area.

I mainly work with Citrix XenApp on a daily basis.

Hi Vladan,

Very good article, like the rest of them. I found your blog last week and subscribed immediately. Keep up the good work.

I’m at a crossroads now and I’d really appreciate your thoughts, as you have already been through what I’m going to ask. My goal is to have a media/storage server for pics, movies and stuff, and also a powerful ESXi box where I’m planning to run a lot of VMs, though maybe only a couple of them active at a time. I’ll be running mainly Oracle Database along with a few more Oracle products for testing purposes; CPU won’t be an issue, but they will require some memory. Of course the main concerns here are noise (it will run in the same room where I work) and price, WAF as well 🙂

So I’m wondering about a few things:

1. Should I build one big server (to support more than 32GB RAM) or start with a smaller one and add more servers if I need to? There are pros and cons: for a big server I’ll need to spend more money initially, but then I’ll have just one server in my office and it might be cheaper in the long term. Otherwise I have to buy a switch as well and connect everything together, although I don’t mind experimenting with InfiniBand. 🙂

2. Should I use my VM server to host the storage server as well, or build a separate machine and leave more power for the VMs? I’ve seen environments where Nexenta runs from the USB drive along with ESXi and then shares the drives over iSCSI back to ESXi. The downside is that when you reboot ESXi you need to bring up Nexenta first and then the rest, but I can cope with that.

On the other hand, wouldn’t it be better to build a dedicated storage box with all the drives I may need, SSDs and networking, and leave all the power of the ESXi host for the VMs?

Thanks in advance.

Best regards.

It depends on your budget. If you add up every piece of a physical lab with a couple of whiteboxes, a switch, cabling and separate physical shared storage, you will probably want to start with a single box…-:). There is no single answer, but 32GB of RAM allows quite a lot.

Nice post Vladan.

Dear Sir,

Thanks for sharing great information.

With the above configuration, will I be able to evaluate all features, such as:

FT

VMOTION

Virtual SAN

vCenter Site Recovery Manager

vSphere Data Protection Advanced

Virsto

vSphere Storage Appliance

Thanks,

Pravin

Hi Vladan,

That was a fast reply; sorry for not getting back to you sooner. I was really busy and unfortunately building the box wasn’t a priority 🙁

I guess then I’ll start small and grow big 🙂

Regards.

Hi Vladan,

Many thanks for your blog. I have settled on this setup:

Intel Core i7 4770S Boxed

ASRock Z87 Pro4

G.Skill F3-2400C11Q-32GZM

Samsung 840 EVO 250GB

Works great.

Hi Vladan,

Is there a reason for selecting an i7 processor?

I’ve been comparing the Intel i7-4770 and the Intel i5-4570.

What is the difference and why select the i7?

Thanks

The i5 4570 does not have Hyper-Threading. It has 4 cores, yes, but without Hyper-Threading it presents only 4 logical CPUs to ESXi instead of the i7's 8…

I tried the board mentioned in this post, i.e. the ASRock H87M Pro4. Everything works as explained, except disk throughput. I struggled to get decent throughput and nothing helped: all I could achieve was 8-20MB/sec writes on a SATA III HDD. The issue seems to be that you need to add a RAID controller with a battery backup, which I did not want to do, as I was aiming for a low-cost, low-power home lab build. I finally ended up with the first board listed below, but I can confirm that all 3 work well (VT-d/IOMMU works and disk throughput is good). Do not buy ASUS boards, as they do not seem to boot from USB when ESX is installed.

1. Gigabyte GA-B85M-D3H (Low cost)

2. Gigabyte Z87 MX-D3H (Enable BIOS setting “Dynamic-Storage-Accelerator” to get high throughput)

3. Gigabyte F2A88XM-D3H (FM2 socket, for an AMD APU if you want to use one; IOMMU supported)
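To put a number like the 8-20MB/sec mentioned above on your own board, a rough sequential-write test can be run from inside a guest. This is a minimal Python sketch, not a replacement for a proper benchmark tool: the helper name and the default sizes are illustrative assumptions, and the result still includes guest and host caching effects that fsync only partly removes.

```python
import os
import time


def sequential_write_mbps(path: str, total_mb: int = 256, block_kb: int = 1024) -> float:
    """Rough sequential write throughput in MB/s (hypothetical helper).

    Writes about total_mb of random data to `path` in block_kb chunks,
    forces it to disk with fsync, then deletes the file.
    """
    # Random rather than zero-filled data, so compressing or deduplicating
    # storage cannot shortcut the writes.
    block = os.urandom(block_kb * 1024)
    blocks = max(1, (total_mb * 1024) // block_kb)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # block until the OS reports the data as written
    elapsed = time.perf_counter() - start
    os.remove(path)
    written_mb = blocks * len(block) / (1024 * 1024)
    return written_mb / elapsed
```

Point `path` at a file on the virtual disk backed by the datastore you want to measure, e.g. `sequential_write_mbps("/tmp/throughput_test.bin")`, and run it a few times, since a single run can be skewed by caching.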

Asus Z87M-Plus boots ESXi free v5.1 and v5.5 from USB (Bios 100x).

The problem is that the Asus BIOS is awful and won't boot from USB unless there is no other boot option, e.g. if you have an OS on any of the drives, it'll boot from that before the USB.

Still playing with this as it doesn’t seem to make much sense.

I'm trying to get USB passthrough working, though, and am having trouble determining which controller (Lynx Point USB enhanced controller #1 or #2) to pass through to a VM.

Does anyone have any ideas?

Although passthrough devices do show up as available options, passthrough does not seem to work for a Windows VM: the guest simply won't boot and stops with an error message. I don't know about others' experience. I tried passing through a PCIe graphics card; that worked, but the VM (Windows 7) ended up with a BSOD.

It is also interesting to note that non-VT-d CPUs (e.g. i4570K, i4770K) do not seem to work at all: they get stuck at initializing IOD. I'm not sure whether this is specific to my motherboard (Gigabyte GA-B85M-D3H) or common to any motherboard with ESXi. If someone can confirm, it would help everyone.

Never buy a "K" processor if you want to use virtualization with device passthrough: VT-d is not available on these CPUs.
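Before committing a CPU/board/BIOS combination to ESXi, one way to see whether VT-d is actually exposed is to boot a Linux live USB and look for the ACPI DMAR table, which is how the firmware describes Intel VT-d to the operating system. A minimal Python sketch, assuming a Linux live environment with sysfs at its usual path (you may need to run it as root); the helper name is hypothetical, and a present table only shows that the firmware advertises VT-d, not that ESXi passthrough will work.

```python
from pathlib import Path


def vtd_exposed(acpi_tables_dir: str = "/sys/firmware/acpi/tables") -> bool:
    """Return True if the firmware publishes an ACPI DMAR table (Intel VT-d).

    Hypothetical helper for a Linux live environment. A CPU without VT-d,
    or a BIOS with VT-d disabled, is expected to leave no DMAR table here,
    though this depends on the BIOS.
    """
    return (Path(acpi_tables_dir) / "DMAR").exists()
```

Usage: `print(vtd_exposed())` on the live system.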

sh0ck-wave: I had to try port after port until I got it right. Remember that keyboard and mouse are not forwarded to a guest OS if you use the USB hub option; you must use VMDirectPath to give a VM an entire USB controller.

Boru: re GPU, what GPU are you using? Have you seen anyone else report a successful attempt with that model?

I use a Radeon HD 7750. This worked fine until Friday, when I installed vCenter Server. Now if I start my Win 7 VM, the host dies. I will try to fix it this evening.

Update (if anyone's interested 🙂):

To work out which USB controller to pass through, use PuTTY to SSH to your ESXi server.

Then run: lsusb -v | grep -e Bus -e iSerial

The controller with the USB memory stick is the one you DON'T want to pass through. There is a great write-up here:

http://www.progob.nl/robmaaseu/index.php/vmware-esxi-determine-which-usb-bus-to-pass-through/
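If the grep output is long, the matching can be scripted. The Python sketch below assumes you have copied the output of lsusb -v | grep -e Bus -e iSerial from the ESXi shell into a text file on a machine with Python; the helper name and the keyword-matching approach are illustrative assumptions. It only tells you which bus the boot stick sits on; mapping that bus to controller #1 or #2 in the passthrough list is what the write-up linked above covers.

```python
import re

# Device header lines look like:
#   "Bus 002 Device 003: ID 0781:5567 SanDisk Corp. Cruzer Blade"
HEADER = re.compile(r"^Bus (\d+) Device (\d+): ID (\S+)\s*(.*)$")


def buses_matching(lsusb_text: str, keyword: str) -> set[int]:
    """Return bus numbers whose device header or iSerial line contains keyword.

    Hypothetical helper; matching is case-insensitive.
    """
    needle = keyword.lower()
    current_bus = None
    hits: set[int] = set()
    for raw in lsusb_text.splitlines():
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            # Remember which bus the following iSerial lines belong to.
            current_bus = int(header.group(1))
            if needle in header.group(4).lower():
                hits.add(current_bus)
        elif line.startswith("iSerial") and current_bus is not None:
            if needle in line.lower():
                hits.add(current_bus)
        # Anything else, e.g. "(Bus Powered)" from the verbose descriptors, is ignored.
    return hits
```

For example, `buses_matching(open("lsusb.txt").read(), "SanDisk")` returns the bus holding a SanDisk boot stick, i.e. the one to leave alone; search by the stick's brand or serial number.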

I got USB passthrough working to a Windows 7 VM. You need to make sure you create the VM with the firmware set to 'EFI' rather than 'BIOS' (right-click | Edit Settings, and set this before installing the OS).

If you have installed it with 'BIOS', I think you're screwed and will have to re-create the VM. (With BIOS I only got the '!' beside the device in Device Manager and could not get it to work.)

I installed an ATI HD 7750 (Gigabyte OC 2GB card) and passthrough worked straight away for the Windows 7 VM set to EFI. I reserved the VM's memory but did not need any other commands: once Windows had come up, I just put in the disk and loaded the drivers that came with the card. After a restart, the output went straight onto my TV.
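The two settings mentioned here, EFI firmware and a memory reservation, end up in the VM's .vmx file, so they can be checked before attempting passthrough. A minimal Python sketch, assuming the usual key = "value" .vmx layout and that the relevant keys are firmware, memSize and sched.mem.min (the reservation, in MB); treat these key names as assumptions to verify against your own .vmx, and the helper names as hypothetical.

```python
def parse_vmx(text: str) -> dict[str, str]:
    """Parse key = "value" lines of a .vmx file; keys are lower-cased."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip().lower()] = value.strip().strip('"')
    return settings


def passthrough_problems(vmx_text: str) -> list[str]:
    """List reasons a VM may not be ready for passthrough (hypothetical check)."""
    vmx = parse_vmx(vmx_text)
    problems = []
    # Assumption: with no firmware key the VM uses legacy BIOS.
    if vmx.get("firmware", "bios").lower() != "efi":
        problems.append("firmware is not EFI")
    # Assumption: memSize and sched.mem.min (the reservation) are both in MB.
    mem_size = int(vmx.get("memsize", "0"))
    reserved = int(vmx.get("sched.mem.min", "0"))
    if mem_size == 0 or reserved < mem_size:
        problems.append("memory is not fully reserved")
    return problems
```

An empty list means both conditions are met; anything else names the setting to change in Edit Settings before adding the passthrough device.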

I ran 4 other systems (2003 R2, 2008 R2, Ubuntu 12.04.4) at the same time, and they all ran just fine using the onboard GFX.

I'm still playing with this, as I have not tried sound or USB at the same time yet, and I want to push the output 'only' to the TV: currently it extends over a console screen as well, which messes up the mouse.

I installed a 16GB MLC SATA SSD (Apacer / HP model) in the server and will look at installing VMware onto that rather than using USB, as I think I'll need all the USB controllers available in order to pass everything through.

This also fixes the boot issue.

The boot order on this Asus board seems to be whacked, but to get around this, just connect the devices to the motherboard SATA ports (as numbered in the manual) in the order you want them to boot:

SATA connection 1 : CDROM

SATA connection 2 : ESXi boot device

SATA connection 3 : Storage 1

SATA connection 4 : Storage 2

.. etc ..

Hope this helps someone 🙂

Hi All, this is an awesome blog 🙂

I have this build:

GA-Z87X-D3H

i7 4771 3.5GHz

32GB (4x8GB) Patriot Viper 3 Series, Sapphire Blue

2x250GB Samsung EVO SSD

I was able to add the driver for the I217V LAN to the ESXi 5.5 U1 ISO with ESXi-Customizer, and it works fine.

I have configured RAID 1 for the SSDs in the BIOS, but when I start the ESXi installation and get to the storage device option, I can only choose each SSD as separate local storage, so the RAID configuration isn't recognized by the ESXi installer. Does this mean that I must add a hardware RAID controller, or is there a workaround? Please advise.

Thanks,

Yes, you need a hardware RAID card that is on the HCL (if you want redundancy), as VMware does not recognize the built-in RAID controller on the Gigabyte board.

Dear Sir,

Will there be any uptime issues with i7 4770s?

The i7 4770S is designed for low power; can these systems be kept running 24x7?

Will there be any performance impact with the i7 4770S compared to the i7 4770?

Thanks,

Pravin

For a lab environment, those consumer Intel CPUs are just fine. They have HT and support the Intel VT extensions. In addition, they are rated at a 65W TDP, which is very valuable for a box designed to run 24/7. Check the details on the Intel ARK site here: http://ark.intel.com/products/75124/Intel-Core-i7-4770S-Processor-8M-Cache-up-to-3_90-GHz
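To see what running 24/7 means over a year, the arithmetic is simply watts × hours ÷ 1000. A small Python sketch with hypothetical helper names; note that 65W is the CPU's TDP, a thermal design figure rather than a measured wall-socket draw, so for a real estimate plug in a reading for the whole system taken at the wall, plus your own electricity price (none is given here).

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def annual_kwh(watts: float, duty_cycle: float = 1.0) -> float:
    """Energy used in a year by a load drawing `watts` for `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return watts * HOURS_PER_YEAR * duty_cycle / 1000.0


def annual_cost(watts: float, price_per_kwh: float, duty_cycle: float = 1.0) -> float:
    """Yearly cost in the same currency as price_per_kwh."""
    return annual_kwh(watts, duty_cycle) * price_per_kwh
```

For instance, `annual_kwh(65)` gives 569.4 kWh, which is what the CPU alone would use if it sat at its 65W TDP all year; a mostly idle lab box will draw less from the CPU, but the rest of the system adds to the total.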

Dear Sir,

Thanks for your reply.

I'm torn between the E3 and the i7:

i7-4770s / H87 / 16GB

E3-1225V3 / C226 / 16GB (UnBuf. ECC)

With your current lab, can we test all features, or do some features need server-grade processors?

Thanks,

Pravin

Pravin, I can test every feature, including FT. But honestly, I don't really need FT in a lab. -:)

Dear Sir,

Thank you for the guidance.

Regards,

Pravin

Dear Sir,

I am a newbie in the world of virtualization. In our company, all the systems run on virtualized Fujitsu blade servers with VMware.

To improve my skills, I would like to create a home lab consisting of two nodes and a storage box.

I was thinking of buying this hardware:

Nodes:

2x ASRock H87M Pro4

2x i7 4770S or E3 1245 v3

4x 8GB DDR3 1600

2x Seasonic Flex ATX 300W

2x Mellanox InfiniHost III Ex MHGA28-XTC

Storage:

1x ASRock H87M Pro4

1x i5 4670S or E3 1225 v3

1x Mellanox InfiniHost III Ex MHGA28-XTC

2x 4GB or 2x 8GB

2x 256GB SSD Samsung 840 Pro

4x 1TB SATA 3

1x Flex ATX 300W

Can you give me some advice on the proposed configuration? Xeon or i7? What do you think of the m-ITX form factor?

Thanks

Polo

I'm struggling to understand the reason for setting up a separate VMware box for storage.

Get the first box with enough storage and configure that one as required.

In a corporate/enterprise environment you wouldn't run a VMware host for storage anyway; you'd run a SAN (or a NAS, depending on your budget).

Rather than a 2nd box, I'd use the money to buy VMware vSphere Essentials so you can at least use the storage APIs and back up your systems (e.g. with a Veeam trial).

Also, I'd go for the 4771 over the 4770S. The 4770S may only be 65W, but it runs hotter. The 4771 has all the bits you need and still runs very cool (the stock fan on mine hardly ever 'goes loud', even when I'm running a game on the passed-through GFX card with multiple cores).

Also, there's no point setting up those boxes unless you're using multiple LAN ports, so get a dual-port Intel Pro 1000-T and a decent gigabit switch. The more LAN ports the better: once the virtual router and firewall plug-ins become available, everyone will be wanting to play with those, and you'll need more ports.

Lastly, make sure you really want to go mATX on the boards, as having extra x4 PCIe slots is very handy: most 'decent' LAN and RAID cards are x4, and most mATX boards only come with one x16, one x8 and two x1 PCIe slots, which pretty much limits you to two 'server-grade' cards. The Asus Gryphon, for example, is one of the few mATX boards that takes three x4-or-wider PCIe cards.

At the time of writing (22 June 2013), the only low-power Haswell option was the 4770S.

The reason for a separate storage box (NAS) is simple: vMotion, DRS, HA… all those cluster features are only available with shared storage.

The lab is being rebuilt for VMware VSAN: adding InfiniBand for the back-end storage network (2x 10Gb) and PERC H310 6Gbps PCI SAS/SATA RAID IO cards, plus one SSD per node.

The lab will have 4 nodes: the first cluster (management), with a single node, will be used only for management (vCenter, DC, DNS, backup servers, …), and the second cluster will be a VSAN cluster with 3 hosts, the minimum VSAN requires.

Vladan,

I am building a similar build.

What model of USB thumb drive did you buy to install ESXi on?

Any model, 1GB minimum size.

I successfully set up a new ESXi 5.5 home lab using a Gigabyte GA-H97-D3H and ESXi customized as described here: http://www.v-front.de/2013/11/how-to-make-your-unsupported-sata-ahci.html

i5 4690

32GB RAM

Samsung 840 SSD

5x old 500GB SATA disks

Intel 82574L NIC

Vladan,

Here is my build :

Asrock H87M Pro4

i7 4770

3x 2GB (6GB total)

256GB SSD Samsung 850 Pro

Patriot Supersonic Boost XT 8GB

600W Silverstone ST60F-PS

Here is the link to the article on my blog:

http://www.michons.us/2014/11/24/esxi-whitebox/

I did some pretty thorough testing of USB passthrough and PCI passthrough for the video card. I will post the results very soon.

Hello Sir,

Does the PCI Intel 82541PI Gigabit network adapter work OK with VMware 5.5?

Will it support NSX functions? I am planning to build a new lab with:

i7 4790s

AsRock H97M Pro4

Thanks for sharing knowledge.

With Best Regards,

Pravin1

Thank you for the article and information, very good stuff! I wish I had found it before I built my ESXi 5.5 box, as I did not realize the shortcomings of the i7-4770K as an ESXi host.

Of the two differences you mentioned, transactional memory extensions and VT-d, could you please explain, if possible, what the impact of not having transactional memory extensions on an ESXi 5.5 box is?

Thanks for your insight,

Stuart

Thanks for all your time and effort; this has been one of the guides I've followed to build my new rig:

Core i7 4790

ASRock Z97 Extreme4

32GB DDR3-1600 RAM, Kingston HyperX Savage

Crucial MX 256GB SSD

NIC Intel Gigabit CT Desktop Adapter PCI-e (EXPI9301CT 1000)

PSU Corsair CS650M (Gold)

I've also got an old ASUS GeForce GTS 250 and an older ATI Radeon X1500. I will use one of them for the host if I choose the Xen or KVM option.

I'm also looking for a cheap SATA HBA card for storage passthrough and, of course, a new GPU. I will choose the GPU (an NVIDIA one; I don't like AMD GPUs) once I decide which hypervisor is best for my needs.

I'm still trying to decide between VMware vSphere, Xen or KVM.

My idea is to use a hypervisor for something like a Multi-headed VMWare Gaming Setup:

http://www.pugetsystems.com/labs/articles/Multi-headed-VMWare-Gaming-Setup-564/

or something similar with Xen or KVM, plus two or three more VMs:

- Linux "main" VM (office, video, web surfing, development…)

- Windows 8.1 gaming VM

- NAS4Free file server (a BSD distro used for file sharing): I already use it as an iSCSI target for VMware (or another hypervisor) on a dedicated old box, and I'd like to run it as a VM in the same rig as well

- Windows 7 VM (for web surfing, software testing…).

I've read that VGA passthrough performance on all three is quite good nowadays, but I still don't know which of them (VMware vSphere, Xen or KVM) will fit my needs or is best for my hardware/network.

I've got a GbE network at home (controlled by an ASUS DSL-N16U modem-router), but many VMs will be used over Wi-Fi N300 or even N150. The most demanding VMs (the main Linux VM and the Windows 8.1 gaming VM) will be used over the wired gigabit network.

Among the benefits of VMware vSphere, people report very good VGA passthrough performance, and it's the hypervisor I've used the most.

On the other hand, it has no support for GeForce GPUs in passthrough, only AMD or NVIDIA Quadro cards (or modified GeForces). And every operating system runs as a VM, with no "usable" local host like KVM or Xen (dom0), so that is one more VM that needs to be used over the home network from another computer.

Any suggestion about which hypervisor should I use?

Any suggestion for a good, cheap NVIDIA GPU for passthrough?

Or, if I choose VMware vSphere or Xen, I won't spend my money on a MultiOS Quadro card, so perhaps I will choose an AMD GPU. Any suggestions? It should be cheap, passthrough-capable and not power-hungry (my PSU is "only" 650W, and if I choose an AMD card for the Windows 8.1 gaming VM, that card will have to share the motherboard with my power-hungry old ASUS NVIDIA GeForce GTS 250 used by the host).

Thanks

PS: sorry for my poor English

Please recommend a good, economical board that supports the i7-4790 (LGA 1150) and bare-metal ESXi 5.5.

Hmm, it's kind of a lottery. Most of the time the built-in NIC isn't recognized, which is not a problem, as you can plug in a cheap add-on PCIe Intel NIC… The RAM limit is important on consumer builds. I would not build anything with less than 64GB of RAM…

Hi vLadan,

I set up a Gigabyte B85M-D3H mobo with a Haswell G3220 CPU, but I cannot get vMotion to work (I know the other prerequisites, and they are fine). I also cannot enable EVC on this hardware. Did you try EVC? Any suggestions for me, like anything to enable in the BIOS?

Thanks.

Unfortunately I cannot tell, as I don't have access to this hardware. The Haswell build I did last year did work. Perhaps check whether you can enable VT (Virtualization Technology) in the BIOS.

Note: Intel Virtualization Technology (Intel VT), Intel Trusted Execution Technology (Intel TXT), and Intel 64 architecture require a computer system with a processor, chipset, BIOS, enabling software and/or operating system, device drivers, and applications designed for these features. Performance varies depending on your configuration. Contact your vendor for more information.

Note: Intel EM64T requires a computer system with a processor, chipset, BIOS, operating system, device drivers, and applications enabled for Intel EM64T. The processor does not operate (including 32-bit operation) without an Intel EM64T-enabled BIOS. Performance varies depending on your hardware and software configurations. Intel EM64T-enabled operating systems, BIOS, device drivers, and applications may not be available. For more information, including details on which processors support Intel EM64T, see the Intel 64 Architecture page or consult your system vendor for more information.

Ensure that your processor supports EM64T and VT.

Check the VMware CPU identification utility tool: https://www.vmware.com/support/shared_utilities
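On a machine that can boot Linux (a live USB is enough), the same two capabilities can also be checked from /proc/cpuinfo: the lm flag indicates 64-bit long mode (EM64T / Intel 64) and vmx indicates Intel VT-x. A minimal Python sketch with hypothetical helper names; depending on the kernel version, vmx may still be listed when VT-x is disabled in the BIOS, so this shows what the CPU supports and does not replace checking the BIOS setting or VMware's own utility.

```python
def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flag set from the first "flags" line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Exact match, so lines such as "vmx flags" on newer kernels are skipped.
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def check_cpu(cpuinfo_text: str) -> dict[str, bool]:
    """Report 64-bit (lm) and Intel VT-x (vmx) support (hypothetical helper)."""
    flags = cpu_flags(cpuinfo_text)
    return {
        "64-bit (EM64T / Intel 64, 'lm')": "lm" in flags,
        "Intel VT-x ('vmx')": "vmx" in flags,
    }
```

Usage on the live system: `with open("/proc/cpuinfo") as f: print(check_cpu(f.read()))`.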

Hi Vladan. I built my current home ESXi lab using this guide. I boot my boxes from USB flash and it's been working great for years. I just tried installing ESXi 6.5, but it fails on the pink screen with very little clue as to why. Have you by chance been able to run 6.5 on this ASRock H87M Pro4 motherboard?

Hi Frank,

No, I haven't tried that, as this box got "converted" to a physical Hyper-V host where I run Veeam Backup.

Thanks for following up, Vladan. Just in case anyone else is in the same predicament: I swapped out my original Intel Pro 1000 MT NIC for an Intel Pro 100 VT and the installation completed fine. The servers are running great on 6.5.0-4944578. The onboard Realtek NIC is working fine too.

BTW, I tried swapping out .vib drivers for the MT NICs but never got any to work.