The main problem with an ESXi home lab running 24/7 is usually one factor: power consumption, the primary ongoing cost, especially when you run a lab with several hosts. Additional factors like cooling or noise can usually be solved by moving the server(s) to a separate room with natural airflow, but power consumption is something you have to plan ahead for and will be dealing with during the lifetime of the lab. So in this post we'll look at some possibilities for building an Energy Efficient ESXi Home Lab Host. The post is part of an article series starting here.

With this in mind I started to look around for parts that would serve as the foundation bricks for the homelab. I checked the latest power-efficient CPUs, looking for ones able to deliver enough performance for an ESXi lab host, and at the same time I wanted to break the 32/64 GB RAM barrier. IPMI was also one of the features I wanted in my lab, even if Supermicro's IPMI is a bit tricky (Java…). Supermicro's IPMI-based boards provide integrated IPMI 2.0 and KVM with a dedicated LAN port.

I wanted to get something which is:

- Easy to install ESXi (no hacking of drivers or VIBs)

- Able to break the 64 GB RAM barrier, so it's possible to run some nested ESXi and stay on a single host.

- Well designed, robust case

- Power efficient PSU

- Power efficient platform (DDR4, Xeon E5-2630L)

So that's why I opted for socket 2011 R3 and the low-power Intel Xeon E5-2630L v3 CPU, which has only a 55W TDP. You should read my previous post, which details the choice of the motherboard – Supermicro Single CPU Board for ESXi Homelab – X10SRH-CLN4F.

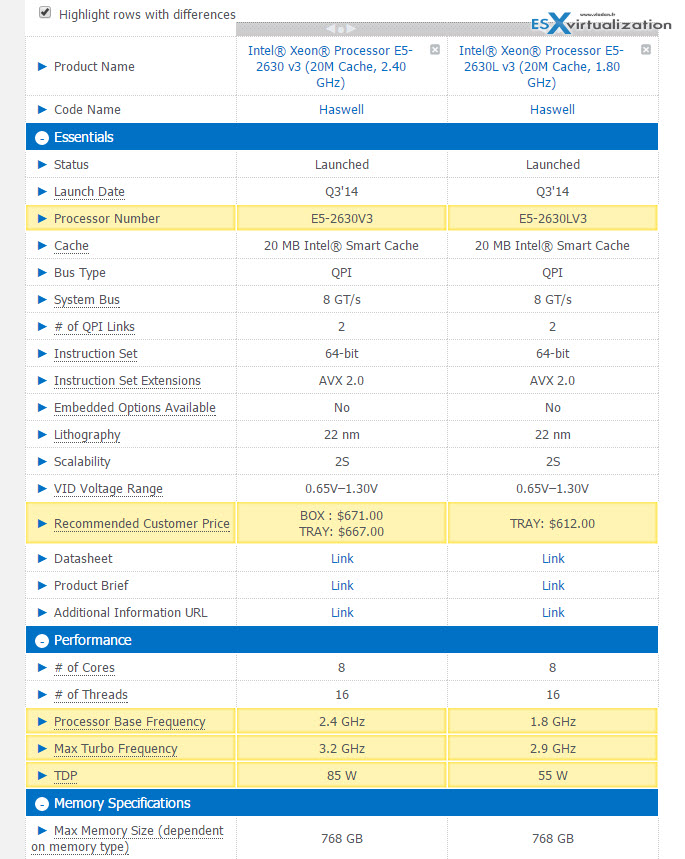

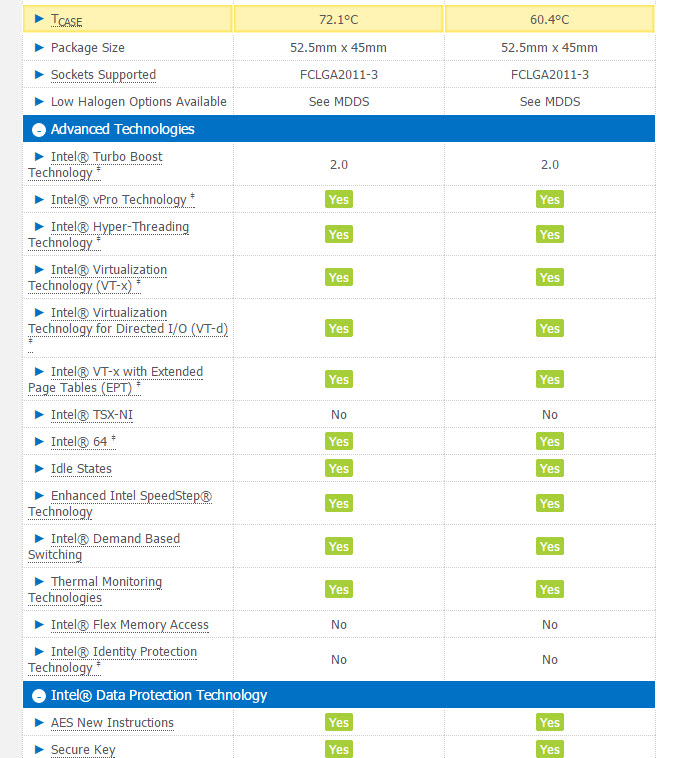

The CPU's details are on Intel's ARK website. The main difference between the E5-2630L v3 and the E5-2630 v3 is that the latter runs at a higher frequency (and has an 85W TDP). You can see the differences when you compare both CPUs; the yellow highlighting marks the characteristics that differ between the two.

Intel Xeon E5-2630 V3 and E5-2630L V3 Differences

And here you can see that the E5-2630L v3 runs 12 degrees cooler, plus other characteristics like Intel VT-x, VT-d…

Intel Xeon E5-2630 V3 and E5-2630L V3 Differences
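To put the TDP gap between the two CPUs into perspective, here is a small sketch of the worst-case energy difference over a year. It assumes both CPUs sit at their TDP around the clock, which a lab host will rarely do, so treat the result as an upper bound and not a measurement. The function name `annual_kwh` is just an illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, assuming the host never powers down


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Convert a constant power draw in watts into kWh over the given hours."""
    return watts * hours / 1000.0


tdp_2630l_v3 = 55  # W, TDP quoted in the post
tdp_2630_v3 = 85   # W, TDP quoted in the post

# Upper bound only: assumes both CPUs run at TDP all year (hypothetical load).
delta_kwh = annual_kwh(tdp_2630_v3 - tdp_2630l_v3)
print(f"Worst-case difference: {delta_kwh:.1f} kWh per year")
```

Real consumption depends on load, and TDP is a thermal design figure, so the practical gap between the two chips is likely smaller.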

Future-proofing costs money…

We can talk about home labs for hours, days… and with online offers from Ravello, where you can run a couple of ESXi hosts on Amazon AWS for a fraction of the cost, I think we should rather talk about home datacenters… -:) But in the long run, and for the look and feel (I still like to touch my hardware, to do my “geek” thing…), it is best to own the hardware. Yes, the cost is a factor, but we spend money on cars, apartments, houses, so why not home datacenters?

DDR4 is still about 30% more expensive than the equal capacity of DDR3, but planning ahead and buying only the strict minimum of RAM now (adding more later) should be a good budget strategy, both for now and in the long run. With price drops expected this year, additional RAM can be purchased later. DDR4 pricing should fall in the second half of 2015 and become cheaper than DDR3 in 2016, when DDR3 will start fading out. However, PC and server hardware is never future-proof…

RAM is really the first resource to get exhausted, and if you don't have enough slots or have populated them with lower-capacity memory sticks, you're stuck. Currently 8, 16, 32 or 64 GB DDR4 RAM sticks are available, and lately some manufacturers have started producing sticks with 128 GB capacity.

Supermicro has a range of motherboards based on this new 2011-R3 socket architecture; the one I picked (X10SRH-CLN4F-O) has 8 RAM slots and supports up to 512 GB of LRDIMM modules.

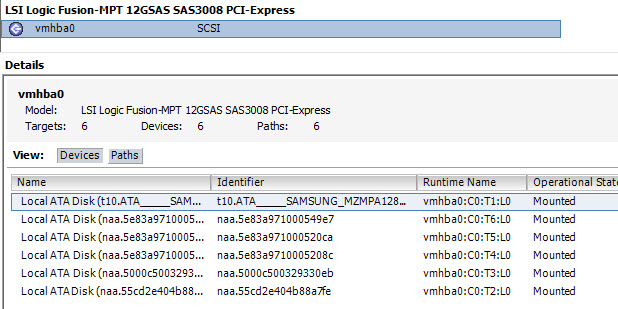

The board also has four 1Gb Intel NICs and an integrated LSI 3008 SAS disk controller (up to 8 SAS drives in RAID 0, 1, 5, 10). I reference other boards from Supermicro which are based on the same socket but are cheaper, because they have fewer onboard NICs or because the integrated controller isn't the LSI one but the Intel C612, which can still drive up to 10 SATA devices in RAID 0, 1, 5, 10. However, for performance reasons some might just prefer LSI, which is understandable.

The C612 is also present on the X10SRH-CLN4F-O, alongside the built-in LSI 3008 for SAS (or SATA) attachments, so basically you can also attach up to 10 SATA HDDs to the C612. Those boards can also use Xeon E5-1600 v3 family CPUs, which carry less cache and fewer CPU cores (4 cores). The 4-core CPUs are obviously cheaper, allowing you to build a system which is perhaps 2-3 times cheaper than a “fully loaded” system.

The internal LSI 3008 is recognized by ESXi 5.5 or ESXi 6.0 out of the box, and recently the card has been added to the VMware VSAN HCL – check the VSAN ready nodes PDF. It's a 12 Gbps SAS3 HBA with 2 internal mini ports. You can flash it with either IT or IR firmware. I updated it with the IT firmware, to be able to pass the disks directly to ESXi.

It's a 12 Gbps SAS storage controller, so to take full advantage of such a card you'll have to have some SAS drives (SSDs???)… Not my case for the moment. I'll report on this later.

Update:

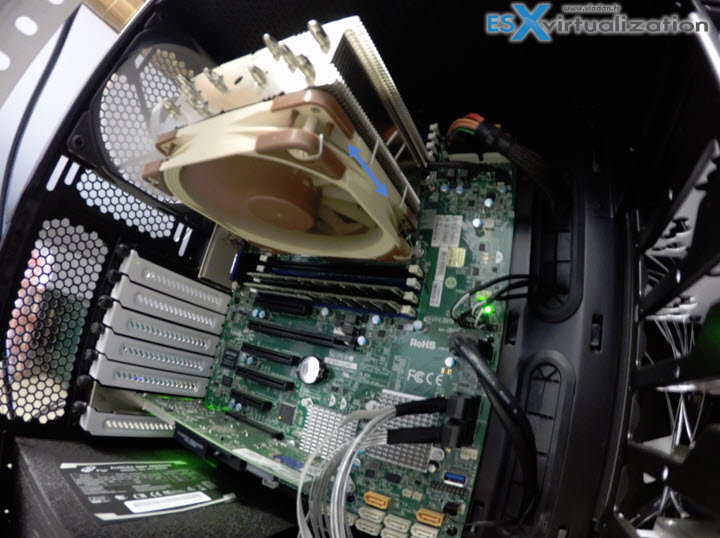

Colin had a question about fitting RAM in the slots close to the heatsink. Check my answer; I also shot a photo showing how I mounted the heatsink, and that the fan can move up and down to make room for the RAM sticks that need to fit into the slots close to the CPU…

Buyer's Guide:

Here is a reference of what's possible to assemble with the latest Supermicro 2011-R3 based boards. You can build a very powerful system loaded with lots of RAM (8 available RAM slots). The choice of RAM is just a question of money for the moment: whether you take 16 GB or 32 GB sticks. You can also start with 8 GB RAM sticks, but this would give you “only” 64 GB of RAM.
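The slot arithmetic is easy to sketch. The snippet below simply multiplies the 8 DIMM slots by each stick size mentioned in this post; the names `RAM_SLOTS` and `total_ram_gb` are only illustrative, and it assumes all slots are populated with identical sticks.

```python
# DIMM slot count taken from the post (the X10SRH-CLN4F-O has 8 slots).
RAM_SLOTS = 8
# Stick sizes mentioned in the post, in GB.
STICK_SIZES_GB = [8, 16, 32, 64]


def total_ram_gb(stick_gb: int, slots: int = RAM_SLOTS) -> int:
    """Total RAM when every slot holds a stick of the same size."""
    return stick_gb * slots


capacities = {size: total_ram_gb(size) for size in STICK_SIZES_GB}

for size, total in capacities.items():
    print(f"{RAM_SLOTS} x {size} GB = {total} GB")
```

With 64 GB sticks this lands on the 512 GB LRDIMM ceiling quoted for the board.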

The CPU list is the lower-cost selection with 6 or 8 cores. I can't imagine buying a CPU for $1500… just to build a lab system. That's why I did not reference the higher-end models. The RAM selection goes up to 32 GB modules. Obviously you'll perhaps also need some storage and a USB stick to install ESXi.

| Item | Power Efficient ESXi Lab Host 2015 based on Haswell-EP architecture | Notes | Shop |
| --- | --- | --- | --- |
| Motherboard | Supermicro ATX DDR4 LGA 2011 X10SRH-CLN4F-O (with LSI 3008) | SAS, SATA, 4 NICs | Amazon US |
| Motherboard | Supermicro ATX DDR4 LGA 2011 X10SRH-CF-O (with LSI 3008) | SAS, SATA, 2 NICs | Amazon US |
| Motherboard | Supermicro ATX DDR4 LGA 2011 X10SRI-F-O (no LSI 3008 card on this board…) | SATA, 2 NICs | Amazon US |
| CPU | Intel Xeon E5-2630L v3 – 8 cores, 1.8 GHz (55W TDP) | 20 MB cache | Amazon US |
| CPU | Intel Xeon E5-2630 v3 – 8 cores, 2.4 GHz (85W TDP) | 20 MB cache | Amazon US |
| CPU | Xeon E5-2620 v3 – 6 cores, 2.40 GHz (85W TDP) | 15 MB cache | Amazon US |
| CPU | Xeon E5-2609 v3 – 6 cores, 1.90 GHz (85W TDP) | 15 MB cache | Amazon US |
| CPU | Intel Xeon E5-2603 v3 – 6 cores, 1.6 GHz (85W TDP) | 15 MB cache | Amazon US |
| CPU | Xeon E5-1650 v3 – 6 cores, 3.50 GHz (140W TDP) | 15 MB cache | Amazon US |
| RAM | Supermicro Certified MEM-DR432L-SL01-LR21 Samsung 32GB DDR4-2133 4Rx4 LP ECC LRDIMM Memory | 32 GB | Amazon US |
| RAM | Samsung DDR4-2133 16GB/2Gx72 ECC/REG CL15 Server Memory (M393A2G40DB0-CPB0) | 16 GB | Amazon US |
| RAM | Supermicro Certified MEM-DR416L-CL01-ER21 Micron Memory – 16GB DDR4-2133 2Rx4 ECC REG RoHS | 16 GB | Amazon US |
| RAM | Hynix DDR4-2133 16GB/2Gx72 ECC/REG CL13 Hynix Chip Server Memory | 16 GB | Amazon US |
| RAM | Samsung DDR4-2133 8GB/1Gx72 ECC/REG CL15 Server Memory (M393A1G40DB0-CPB) | 8 GB | Amazon US |
| RAM | Supermicro Certified MEM-DR480L-CL01-ER21 Micron Memory – 8GB DDR4-2133 1Rx4 ECC REG RoHS | 8 GB | Amazon US |
| RAM | Supermicro Certified MEM-DR480L-HL01-ER21 Hynix Memory – 8GB DDR4-2133 1Rx4 ECC REG RoHS | 8 GB | Amazon US |
| PSU | FSP Group 450W Modular Cable Power Supply 80 PLUS Platinum AURUM 92+ Series (PT-450M) | 450W | Amazon US |
| PSU | FSP Group 550W Modular Cable Power Supply 80 PLUS Platinum AURUM 92+ Series (PT-550M) | 550W | Amazon US |
| PSU | FSP Group 650W Modular Cable Power Supply 80 PLUS Platinum AURUM 92+ Series (PT-650M) | 650W | Amazon US |
| CPU cooler | Noctua i4 CPU Cooler for Intel Xeon CPU_ LGA2011, 1356 and 1366 Platforms NH-U12DXi4 (supports the Narrow ILM socket) | | Amazon US |
| CPU cooler | Noctua i4 CPU Cooler for Intel Xeon CPU_ LGA2011, 1356 and 1366 Platforms NH-U9DXi4 (supports Narrow ILM and Square ILM) | | Amazon US |
| Case | Fractal Design Define R4, Black Pearl | | Amazon US |
| Case | Fractal Design Define R5, Black Pearl, ATX Mid Tower, Case (Black) | | Amazon US |

Energy Efficient ESXi Home Lab

- Building Power Efficient ESXi Server – Start with an Efficient Power Supply

- ESXi Home Lab – Get a quality and comfortable case

- Supermicro Single CPU Board for ESXi Home lab – X10SRH-CLN4F

- Supermicro Single CPU Board for ESXi Homelab – X10SRH-CLN4F – part 2

- Supermicro Single CPU Board for ESXi Home lab – Upgrading LSI 3008 HBA on the X10SRH-CLN4F

- Building Energy Efficient ESXi Home Lab – parts list [this post]

- Homelab – Airflow Solar System

Vladan,

I love your blog man. I was considering running everything nested on a beefed up server, but then my requirements grew significantly. I was looking into getting SuperServer 5028D-TN4T, but then i looked at your build and was waiting patiently for you to lay out all the specs so that I can start on my lab build.

Thanks a million man. Can you also please highlight what SSD drives you are using for your new cluster.

Keep up the good work.

Cheers!

Mo

Saber 1000, with a 5-year warranty. But that was a deal from a partner…

Vladan, great work on your home lab rebuild and sharing. I’m going thru the same thing. Have 4 servers 32GB each. Like to consolidate to save power and home cooling. I need 128GB RAM to replace my physical boxes. Project on hold as RAM price is killing the “purchase button”.

Tom

A home lab is all about “what you want to do with it”. If you just want to learn in order to pass an exam, then online vLab offers are surely a cheaper way to go… but if you want to run real workloads, then you need to go physical.

Yes, DDR4 is a stopper for most folks right now. That’s why I picked up the bare minimum for now: 64 GB (32 GB RAM sticks).

What kind of 10 gig switch do you recommend for a home lab? I’m looking for something that I can leverage for NSX network scenarios as well as VSAN. I also have to test vMotion of MSSQL clusters.

I went for Cisco SG500XG-8F8T 16-Port Managed Layer 3 Stackable 10-Gigabit Switch w/ 8x SFP+ Ports (10GbE) + 8 10GbE ports. Eric Bussink has the same. Except for the noise (and the price), I’m happy with it. I preferred Cisco over Netgear.

Yes, I have been following Eric Bussink’s blog. That switch is slightly on the upper end but it does its job well. How much did you end up paying for it?

Hi Vladan,

I’m a big fan of your blog and finally ordered two new nodes running the same gear as you highlighted above.

For the switch i’m looking at the following so that i can pretty much test all the POC’s

Huawei CE6850-48S4Q-EI 48*10GE 4*40GE 1.28T L2/L3 Switch/Router

This is going to be the main swtich for everything 10 Gig in my lab. Plus i can use it for various other scenarios as well.

However, what is your opinion on the Cisco SG500XG-8F8T, and why did you choose it over other vendors that offer pretty good L3 10 Gig switches? I would like to seek your expert opinion.

Again awesome as I use your blog as one of the primary source for everything ESXi and related to virtualization.

Best Regards,

Mo’

Great build and really great blog with lots of useful stuff you got there. I’ve done a similar build with the X10SRH-CLN4F-O and 4x SSDs on the LSI 3008 SAS interface in RAID10, and I’m testing the free version of ESXi 6.0, but I can’t find any way to monitor RAID health. I’m trying to set up LSI MegaRAID Storage Manager but no luck with it. Do you have any better experience?

Cheers and keep up the good work!

Hi Matija,

unfortunately I’m not using the Internal RAID of the LSI 3008 as I’m running VSAN.

Great job! Nice whiteboxes! I’m fan!

Hi Vladan,

Thank you for the writeup. I am looking at pulling the trigger on this as well. While I want to use SSD for higher end testing I was wondering if it might be possible to mix in Sata and SSD in the same chassis using the motherboards you specd? I could envision having one array of larger capacity SATA drives, and another with lesser capacity but better performing.

Thoughts?

Thank you

Not quite getting your question. SATA and SSD.. They’re both SATA based? Did you mean SAS and SATA mixing? (not quite sure about that).

I have 3 identical nodes populated with SATA-based SSDs, so the performance bottleneck is the 6 Gbps SATA III interface. The LSI 3008 is 12 Gbps capable for SAS drives (SSD or not), so to me the best performance would be with SAS SSDs. When used for VMware VSAN, one could imagine having a high-endurance PCIe SSD for caching in front, and then SAS drives for capacity. But IMHO the capacity tier won’t bring as much of a performance improvement as a PCIe SSD does. I currently have only SATA-based SSDs in front of the capacity tier. Hope it makes sense.
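To give a rough idea of the gap between the two interfaces, here is a small sketch that converts a link's line rate into usable payload bandwidth. It assumes 8b/10b line encoding (10 bits on the wire for every 8 data bits) for both SATA III and 12 Gbps SAS; that encoding detail is my assumption and not something stated above, and real drives will deliver less than these ceilings.

```python
def usable_mb_per_s(line_rate_gbps: float,
                    payload_bits: int = 8,
                    encoded_bits: int = 10) -> float:
    """Usable payload bandwidth in MB/s for a link with the given line rate.

    Assumes 8b/10b encoding by default: every 8 data bits travel as 10 bits.
    """
    payload_bits_per_s = line_rate_gbps * 1e9 * payload_bits / encoded_bits
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


sata3 = usable_mb_per_s(6)   # SATA III, 6 Gbps per port
sas3 = usable_mb_per_s(12)   # SAS on the LSI 3008, 12 Gbps per lane

print(f"SATA III: ~{sata3:.0f} MB/s per port")
print(f"SAS 12G:  ~{sas3:.0f} MB/s per lane")
```

These are per-link ceilings before protocol overhead, so they only show where the interface itself stops being the limit.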

I’m having a dilemma between getting the 2630 or 2630L for my ESXi build. I’ll have to choose between higher clock speeds or better energy savings. How much does clock speed matter in this case? How many more VMs or how much more performance does the 2.4Ghz in 2630 enables?

Been reading the series of posts. New to the VM world. Am I missing something, or is there no GPU in the hardware list? I understand the rest. Do I not need a GPU? I am thinking that you could use the built-in IPMI management via the dedicated LAN port, but I’m not sure if you need a dedicated GPU. I am thinking of building a similarly specced whitebox for an ESXi homelab. The GPU issue is killing my mind. Can someone please educate me?

Hello,

i would like to build a whitebox for support vsphere 6 essentials kit. What do you think about this:

– Cooler Master Cosmos 2 atx case

– Corsair Psu AX1200i modular 80 plus platinum certified

– Cooler Master Nepton 240m for cpu liquid cooler

– Intel Xeon 2011 E5-2620v3 2,4Ghz

– AsRock motherboard X99 WS-E for socket 2011-v3

– LSI MegarRaid Sas 9240-4i SGL 4-PORT 6GB/s sata+sas

– Corsair Dominator Platinum Kit memory ddr4 2666mhz 32gb (4×8)

– Evga Video Card GeForce Gtx970 4gb ram

– 4 Samsung SSD 850 EVO 500gb mz-75e500b/eu

– Intel PRO/1000 PT dual port server adapter 10/100/1000base-tx

for storage i’ve alread a NAS Qnap TS-451 (with 2 gb port interfaces) about 4 tb based on raid5 (here i have 4 hd wd caviar for a total of 8tb)

with this configuration (hoping that it’s good), do you suggest me to install directly the vcenter or before windows 2012 (for example) and after vcenter etc…?

in any case i would like to have a your general suggestion to use at the best this hardware at least in a lab test. Thank you. Luca

Hi Vladan,

I have recently bought a “X10SRH-CLN4F” Supermicro motherboard with a “E5-2630L v3” CPU, a “Noctua i4 NH-U12DXi4” heatsink and a “Fractal Design Define R5 Case” as you recommend. Considering an air flow from front to back I mounted the “Noctua i4 NH-U12DXi4” heatsink with the NM-XFB5 90° Mounting Bar. With the shape of the heatsink it prevents me from being able to use DIMM B2 and DIMM D2 (closest to the CPU). Is it possible to ONLY use DIMM channels A & C (DIMM A1+2, DIMM C1+2) and NOT channels B & D (DIMM B1+2, DIMM D1+2). Is it mandatory to follow the order of channel A,B,C to D? Otherwise I still could turn the heatsink 90° (“Noctua i4 NH-U12DXi4” comes with many mounting options, but I have already fixed it to the CPU).

Are you using the same heatsink or the “Noctua i4 NH-U9DXi4”? Is your CPU air flow from front to back or from bottom up? Did you run into the same difficulties?

Thank you & merry X-MAS

Hi Colin. thanks for your comment.

I have the Noctua NH-U12DX i4 heatsink/fan mounted so that the air flows from the bottom to the top of the case (not from front to back). Is that perhaps the reason why… While the heatsink itself isn’t obstructing the RAM slots at all, the fan is, but it’s possible to move the fan a little bit higher than the heatsink in order to accommodate the RAM, and then lower the fan back as much as possible. I updated the post with a photo.

I have another Noctua fan mounted at the very back of the case, extracting the air out of the case. Considering the low-power CPUs, RAM and SSDs (I’m now running only with SSDs), there is no heat accumulating in the case… at all.

I’m not currently populating all RAM slots, just the first two (2x32 GB)… But I just opened the case and checked that. It should fit.

Hi Vladan,

Sorry to bother you again, but I am trying to use the NH-U12DX i4 and I think it is defective – there is no thread in the four holes in the heatsink, it is simply reamed. This means that the brackets/mounting bar things do not screw into the heatsink at all, they just screw through the 4 holes in the bars themselves which are threaded and then are loose in the heatsink. If i turn the heatsink upside down at this point they brackets fall out.

The screws aren’t long enough for me to be missing bolts or anything – do you remember putting this together and can say whether I must have a faulty product? Noctua have not replied 24 hours since my query.

Many thanks,

George

Just what I was looking for to start off my new-years home lab server rebuild, great post.

Thanks for your comment. I was just thinking of adding this for folks living in hot countries (Australia?), as I live in a country with quite a lot of heat: the PSUs and the boxes generate almost no heat. However, the 10G switch and my old Drobo do generate some -:).

Great article. Have you managed to make any progress on SSD selection for vSAN as yet? I’ve had no end of problems using consumer grade Samsung 850 Pros and am now considering moving across to Enterprise class disks.

No, I’m still running consumer grade SSDs. Let them “die” first -:)

If you do not want to buy server grade CPU and motherboard, then socket 1151 – Skylake is another option.

4x 16GB non-ECC DDR4 RAM cost around 450€.

CPU i7 6700 is quite powerful, even my old i7 2600 was, but the memory is a big issue, never enough 🙂

Would there be any issue (Custom/Sec) if i order these parts for Australia?

Australian readers, anyone? Thanks.

Am i missing anything from the list below? looking forward to order from Amazon..

-Supermicro ATX DDR4 LGA 2011 X10SRH-CLN4F-O (with LSI 3008)

-Intel Xeon E5-2630L v3 – 8 cores, 1.8 GHZ (55W TDP)

-Samsung DDR4-2133 16GB/2Gx72 ECC/REG CL15 Server Memory (M393A2G40DB0-CPB0)

-FSP Group 450W Modular Cable Power Supply 80 PLUS Platinum AURUM 92+ Series (PT-450M)

-Noctua i4 CPU Cooler for Intel Xeon CPU_ LGA2011, 1356 and 1366 Platforms NH-U12DXi4 (support the Narrow ILM socket)

-Fractal Design Define R4, Black Pearl

Thanks,

Hi Atta,

Looking good -:). Perhaps some storage, unless you going disk-less with some NAS device.

Thanks Vlad, any recommendation on storage?

hmm, it really depends what you do. In my case I’m running VSAN on 3 hosts. http://www.vladan.fr/lab

I just ordered new cache tier for my VSAN. I’m going NVMe….. with 256 Gb cache per host – Samsung 950 PRO -Series 256GB PCIe NVMe – M.2 Internal SSD 2-Inch MZ-V5P256BW – http://amzn.to/24Nrv13

For that Mobo I also ordered an adapter Lycom DT-120 M.2 PCIe to PCIe 3.0 x4 Adapter (Support M.2 PCIe 2280, 2260, 2242) – http://amzn.to/24Nrzhe

Sadly, Amazon can’t ship few items (Motherboard, Case, Power supply) from the list to Aus.

Motherboard not available locally, so have to look into alternate option.

I have the same problem here at Reunion Island (Fr). Amazon does not ship here. But I’m using shipito.com repackaging service. The only problem I had last time with the mobos was the fact that I had to tell them (to shipito) to take out the small lithium batteries from the mobos otherwise there were problems with the shipping. I bought those batteries then after I received my mobos, at the local gas station, for few bucks -:).

I’ve tested http://www.planetexpress.com as Shipito alternative and it seems that they are the way to go. 🙂

HP 765530-B21 DL80 Gen9 Intel Xeon E5-2630Lv3 (1.8GHz/8-core/20MB/55W) Processor Kit

What do you say, is this same as Intel Xeon E5-2630L v3?

Couldn’t find later one in Australia but i think the above one is same.

It’s 8core, 20 Megs of cache, 55W tdp…. there is no other model in the XeonE5 v3 product line…. http://ark.intel.com/products/family/78583/Intel-Xeon-Processor-E5-v3-Family#@Server

So it’s the same one, branded together with an HP server…

I’m currently looking into the hardware requirement of esxi myself and found your post helped quite abit, thank you.

I would really be interested in some wattage details. Were you tracking this as the build mature?

Whats the SAS Breakout Cable you used? As there are SAS with power, SATA, and a mixed setup

If you are looking for a really quiet, power-efficient home lab with two hosts and one SAN box, have a look at what I built at home with Avoton CPUs. All the gear (two hosts, SAN, switch) consumes less than 130W and is quiet enough to keep in the living room.

http://vmnomad.blogspot.com/2015/08/another-budget-vsphere-home-lab-post_31.html

So whats the actual power consumption of this build, haven’t been able to find that number in the series;)

The actual power consumption is about 70W, as I can see on my wattmeter… (with 8 SSDs as storage). So, depending on your situation (number of disks), you may find other values. I also have one PCIe card with 2 x 10GbE plugged in.
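For a feel of what 70W around the clock means on the bill, here is a quick sketch. The electricity price is a made-up placeholder (`ASSUMED_PRICE_PER_KWH`), so plug in your own tariff; the wattage is the wattmeter reading quoted above, and the calculation assumes the draw stays constant.

```python
def annual_energy_and_cost(watts: float,
                           price_per_kwh: float,
                           hours_per_day: float = 24.0,
                           days: int = 365) -> tuple[float, float]:
    """Return (kWh per year, cost per year) for a constant power draw."""
    kwh = watts * hours_per_day * days / 1000.0
    return kwh, kwh * price_per_kwh


# Hypothetical tariff, NOT from the post: replace with your local price.
ASSUMED_PRICE_PER_KWH = 0.20

host_kwh, host_cost = annual_energy_and_cost(70, ASSUMED_PRICE_PER_KWH)
print(f"One host at 70 W: {host_kwh:.1f} kWh/year, "
      f"about {host_cost:.2f} per year at the assumed tariff")
```

The same function works for any other wattmeter reading, for example a whole rack including switches.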

Hello,

First of all, i would like to thank u about this article and the explanation on the way you go.

Can u send us some details about the power consumption. The 70w is in normal situation ? what is the power consumption of the system in stress test and idle ?

Do you think that your configuration can fight against a similar config but with a xeon-d 1540 about power consumption?

Both of the CPUs are 8-core. It seems that the D-1540 has a 10W lower TDP, but maxes out at 128 GB of RAM. If you plan for more RAM than that, you’ll perhaps look at the E5-2630L v4, which maxes out at roughly 1.5 TB of RAM. http://ark.intel.com/products/92978/Intel-Xeon-Processor-E5-2630L-v4-25M-Cache-1_80-GHz

As for the power consumption, I haven’t really stress tested it. At the end of the day, the CPU has a 55W TDP, so its sustained draw should stay around that figure… The host as a whole will obviously draw more, as the rest of the consumption comes from the disk drives, and perhaps the RAM too.

A friend of mine told me not to build my own ESXi server; instead he recommends the Dell PowerEdge R710. I asked why, and he said it’s just his preference to have a real production server. Power consumption wise, there isn’t that big a difference compared to the Intel Xeon E5s. The other thing about the Dell PE R710 is that they are very loud.

What do you guys think?

Hi Vlad,

I really hope you can answer to this comment.

I am planning on building an ESXi server to help me in my CCIE certification (it’s a Cisco certification).

I would need to run up to *20* CSR1000v virtual routers at the same time + some windows / linux machine.

A single CSR router needs the following:

VMware ESXi 5.0, 5.1, or 5.5

Single hard disk

8 GB virtual disk

The following virtual CPU configurations are supported:

– 1 virtual CPU, requiring 2.5 GB minimum of RAM <– this is what I will use

– 2 virtual CPUs, requiring 2.5 GB minimum of RAM

– 4 virtual CPUs, requiring 4 GB minimum of RAM

– 8 virtual CPUs, requiring 4 GB minimum of RAM

Assuming I don't care about storage nor how many networking interfaces I have, but only about CPU + 64G(+) RAM + Power consumption, what's the the best way to spend my money and have zero compatibility issues?

I would really appreciate if you could lend a hand.

Thanks!
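The sizing in the question above can be sketched in a few lines. The per-router figures (1 vCPU, 2.5 GB RAM, 8 GB virtual disk) and the count of 20 come from the comment; the 64 GB host size is the commenter's stated minimum, and the Windows/Linux VMs are not included because no figures were given for them. `VmSpec` is just an illustrative helper.

```python
from dataclasses import dataclass


@dataclass
class VmSpec:
    """Resources requested by a single virtual machine."""
    vcpus: int
    ram_gb: float
    disk_gb: float


# Smallest CSR1000v configuration listed in the comment.
csr = VmSpec(vcpus=1, ram_gb=2.5, disk_gb=8)
ROUTER_COUNT = 20
HOST_RAM_GB = 64  # the "64G(+)" target mentioned in the question

total_vcpus = ROUTER_COUNT * csr.vcpus
total_ram_gb = ROUTER_COUNT * csr.ram_gb
total_disk_gb = ROUTER_COUNT * csr.disk_gb
# RAM left for ESXi itself and the extra Windows/Linux machines.
headroom_gb = HOST_RAM_GB - total_ram_gb

print(f"{ROUTER_COUNT} routers: {total_vcpus} vCPUs, "
      f"{total_ram_gb:.0f} GB RAM, {total_disk_gb:.0f} GB disk")
print(f"Headroom on a {HOST_RAM_GB} GB host: {headroom_gb:.0f} GB")
```

The headroom figure ignores hypervisor overhead, so treat it as optimistic.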

It’s difficult to give a single piece of advice. Will you need this box after passing your exam? If not, consider Ravello Systems. Otherwise there are basically two ways to go:

1. VMware Workstation (or Player), running your VMs on top of Windows (Linux).

2. An ESXi box. There you need at least a single NIC which is compatible with ESXi (usually Intel NICs play fine). My lab boxes: the mobo isn’t on the VMware HCL, but works out of the box – http://www.vladan.fr/energy-efficient-esxi-home-lab/

The advantage is that the mobo can hold up to 256 GB of RAM… You can also take this (cheaper) board, which has only 2 NICs and no SAS controller: http://amzn.to/1ANhDsg

Best,

Vladan

Hi Vladan,

I am assembling a new PC for my home lab to run ESX 6.2. My mobo doesn’t have graphics onboard.So Do i need to check compatibility for a graphics card with ESX before buying , If yes please direct to an option which is not a very expensive one.

Best rgds,

Ragesh

Hi Vladan,

very interesting blog.

I’ve almost the exact same build as you but I get always issues with the onboard nic.

Did you had issues with your onboard nic on esxi 6 u2?

Kind regards,

Phil

Hi Vladan,

Thank you for your interesting blog. I would love to try your setting but have one question:

What hardware setting of your (motherboard + cpu) have you actually used? Because when I look at the X10SRH-CLN4F-O (single socket r3 lga 2011) and some of your proposed CPUs (all FCLGA2011-3) they don’t seem to fit optically. I substituted the E5-2630v3 for the E5-2620v4 (because they use the same socket) and the socket has on the left and right side little nobs where the cpu pins make a straight line. I asked Supermicro about it and the said that these products are not compatible. Looking for a r3 socket I have trouble finding the right intel cpu. Do you have any insight on this? Thank you very much.

best regards,

Mike

Hi Mike,

I used the X10SRH-CLN4F with an E5-2630L v3 last year, when the post was written and the lab was built. But apparently, since then Supermicro has made it available for v4 Intel CPUs…

http://www.supermicro.com/products/motherboard/xeon/c600/x10srh-cln4f.cfm

“Single socket R3 (LGA 2011) supports

Intel® Xeon® processor E5-2600

v4†/ v3 and E5-1600 v4†/ v3 family”

But other than that, I don’t have a real insight on the v4 CPUs…

Hello Vladan,

Thanks for your response. That is strange because Supermicro told me that their socket doesn’t support the FCLGA2011-3 (which is the socket your CPU and my v4 are). If you take a look at the pins on this page: http://www.bostonlabs.co.uk/intel-xeon-e5-2600-v3-codename-haswell-ep-launch/ My socket on the Board looks like it needs a v2 and my v4 looks like the v3. Could it be the board? I ordered a X10SRH-CLN4F but they sen’t a X10SRH-CLN4F-O. I am really confused… and I am curious what your socket and CPU would look like. I know it’s up and running and you can’t dismantle it 😉 Perhaps someone else has some insight in this matter?

Regards,

Mike

Well, perhaps their board has evolved since last year. I don’t know. I technically cannot un-mount my socket right now, but I’m sure you can find an image on Google or elsewhere.

Hi Vladan,

One last question: Do you know or see the revision of your board perhaps? It could be that they gave me an old old version…

Thanks for everything.

regards,

Mike

I’d love to, but I’m NOT physically located close to my lab, right now. I only come back by the end of October. Through the remote session I can’t see the information. I only see the BIOS version (1.0a) and firmware revision 1.67. If by then you’re still interested, just hit me an e-mail.

Best luck.

Hello,

What 12 Gbps SAS internal mini port cables are you using for this motherboard?

Great reading your article. I’m curious though.

Is 1.8 GHz sufficient for the core strength? I’ve read articles where it shows in virtual environments that core strength is least important from other factors (RAM, disk I/O & storage, networking) but have run a windows vm with a E5-2450Lv2 (10 cores, 1.7~2.1Ghz @60Watts) and found it painstakingly slow.

I’m currently thinking about upgrading all my hosts to lower core count with higher frequency to see if it will improve performance. However, at the current speed there is no way I can set up an infrastructure.

Build:

3 Hosts (single socket/dual socket low powered Xeons <1.8Ghz)

1 SAN (Running NexusOS with 14 Intel DC S3710 in RAID10 and 8Gb FC – QLE2564)

Thanks

Hi Vladan,

Thank you for your interesting and amazing blog. I would like to try your setting asap but before I’ll order everything I have some questions to you:

2 * Cremax Icy Dock ExpressCage MB326SP-B

8 * Western Digital WD Red 4TB, 3.5″, SATA 6Gb/s (WD40EFRX)

2 * DeLOCK mini SAS (SFF-8087) auf 4x SATA, 1m (83074)

1 * Intel Xeon E5-2630L v4, 10x 1.80GHz, tray (CM8066002033202)

2 * Samsung DIMM 32GB, DDR4-2133, CL15-15-15, reg ECC (M393A4K40BB0-CPB)

1 * Supermicro X10SRH-CLN4F retail (MBD-X10SRH-CLN4F-O)

1 * Noctua NH-U9DX i4

1 * Fractal Design Define R5 Blackout Edition (FD-CA-DEF-R5-BKO-W)

2 * SanDisk Ultra Fit V2 16GB, USB-A 3.0 (SDCZ43-016G-GAM46)

or

2 * SanDisk Ultra Fit 32GB, USB-A 3.0 (SDCZ43-032G-G46)

2 * TP-Link Archer T9E, PCIe x1 Wireless: WLAN 802.11a/b/g/n/ac

1 * FSP Fortron/Source Aurum 92+ 650W ATX 2.3 (PT-650M)

x * Intel SSD 530 180GB // Samsung SSD 850 EVO 120GB, SATA (MZ-75E120B)

1.) Are the USB Sticks big and fast enough for ESXi 6.5 system ?

2.) The HDD I would like to be attached to the LSI3008 controler and path this to a NAPPIT system and build up a ZFS-Z2 raid as file/data storage. Is it possible to add more than the eight HDD’s to this controler ?

3.) I do not know if you have tried the nappit system and knows some more about ZFS ? I read somehwere and have in mind, that it would be good to add a separate SSD to the HDD raid array to speed up write and read access, but i cannot find the article anymore, does this might be correct ? Do you have any ideas how this should be made ?

4.) The SSD’s should be all used and attached to the C612 Intel chipset. These should be than used as storage location for all the OS of the VM’s. Is that the right way ?

5.) Which VBA card are you using, just a simple one ?

6.) I’m still not sure if I should use 2630 or 2630L CPU, so is it better to have higher clock speeds or better energy savings.

a) How much does clock speed matter in this case ?

b) How many more VM’s or how much more performance does the 2630 could bring ?

I hope that it sufficent for that what I would like to do. I would like to have multiple VM’s ‘Napp-IT system, Web system, Mail system, Firewall VPN Proxy system, Active Directory system, Cloud / NAS / File / Data system, some Workstations test clients like Windows 7 – Windows 10, …”. The server should be used for a small amount of user up to 20.

Hope that I’ve not placed to much into the case so that I running into any heating troubles !

Do you have an overview of your system “Vm’s” you have configured on this system ?

Are there any CPU / Memory / Power usage graphs over a longer period which you can share ?

Thank you very much in advanced for all your answers and work.

best regards,

Oliver
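For the RAID-Z2 pool of eight 4 TB drives mentioned in question 2, a back-of-the-envelope capacity estimate looks like this. It only subtracts the parity drives; ZFS metadata, padding and the TB/TiB difference are ignored, so the real usable space will be lower. The function name is hypothetical.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int) -> float:
    """Rough usable capacity of a single RAID-Z vdev: data disks x disk size.

    parity is 1 for RAID-Z1, 2 for RAID-Z2, 3 for RAID-Z3.
    """
    if disks <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity drives")
    return (disks - parity) * disk_tb


# 8 x WD Red 4 TB in RAID-Z2, as listed in the comment above.
raidz2_tb = raidz_usable_tb(disks=8, disk_tb=4, parity=2)
print(f"8 x 4 TB in RAID-Z2: about {raidz2_tb:.0f} TB before ZFS overhead")
```

Two of the eight drives' worth of space goes to parity, which is the price for surviving two simultaneous disk failures.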

That’s a ton of questions, Oliver…

The USB stick is just there to boot ESXi; you won’t see any speed difference whichever USB stick you pick. They’ll all boot at the same speed.

2) It should work. Passing the disks through via the LSI 3008 works well for vSAN, so why not for Napp-IT?

3) Yes, ZFS needs an SSD cache to get the best possible speed. During volume creation you can select the caching tier, then the capacity tier.

4) No, the storage should be attached to the LSI 3008 storage controller, not the C612 chipset, which can do software RAID but doesn’t offer much performance.

5) VBA card?

6) It depends on what you want to do: a more powerful lab (with a little more spent on electricity), or a cooler one with a bit less power… Both are 8-core CPUs…

It all depends on the memory your VMs will consume. A system like this is upgradeable on RAM, which is an advantage over smaller boxes such as the Intel NUC or Supermicro E200.

Well, the lab changes quite a bit over time. I just run a minimal number of VMs on the standalone ESXi (DC, VCSA, filers) and the 2-node vSAN 6.5 clusters. I test quite a few clients’ systems and clients’ software, so after the article is published I usually don’t keep the VM in the environment. But yes, there are about 10-40 VMs running easily.

The 3-host cluster with one (Haswell) Hyper-V host, the PSUs and the switches all together consume about 400 W…
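To put a figure like 400 W into perspective, here is a minimal Python sketch that converts a constant draw into yearly energy use. It assumes the lab runs 24/7 at a steady load, which is a simplification, and the price of 0.20 per kWh is only a placeholder, so plug in your own tariff.

```python
HOURS_PER_YEAR = 24 * 365  # non-leap year, lab powered on 24/7


def annual_energy_kwh(watts: float) -> float:
    """Energy used in one year by a constant load of `watts`."""
    return watts * HOURS_PER_YEAR / 1000.0


def annual_cost(watts: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for a constant load at a flat tariff."""
    return annual_energy_kwh(watts) * price_per_kwh


LAB_WATTS = 400.0     # figure quoted in the comment above
PRICE_PER_KWH = 0.20  # assumption: placeholder tariff, not a real quote

print(f"{annual_energy_kwh(LAB_WATTS):.0f} kWh per year")
print(f"{annual_cost(LAB_WATTS, PRICE_PER_KWH):.2f} per year at the assumed tariff")
```

The same two functions can be used to compare a single host against the whole lab, as long as you have a measured wattage for each.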

Many thanks for the quick reply.

Great, so 32 GB should be enough for the ESXi system, or is 16 GB enough as well, which would save some bucks 😉?

So this means that you have nothing attached to the C612 chipset?

I thought I could use it to host the SSDs for the OS of the VMs.

But how can I attach more than eight disks to the LSI 3008 controller? For example, the eight HDDs and the 10 SSDs.

I did not want to use the C612 chipset in RAID mode; I just want to use SSD1 for VM1, SSD2 for VM2, and so on.

I mean VGA card 😀 sorry for the typo.

Thanks again.

Oliver

Hey, No problem.

Well, I’d go with at least 64 GB, because running 20 VMs (including some memory-hungry VMware VMs) might eat all the RAM quite fast.
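A quick way to sanity-check a RAM size is to add up what each VM would be given. The sketch below does that in Python. All the per-VM figures and the hypervisor reserve are hypothetical numbers made up for illustration, not measurements from this lab, and it assumes no memory overcommit.

```python
# Hypothetical allocations in GB for 20 VMs (illustrative only).
vm_allocations_gb = [8, 4, 4, 2, 4, 8] + [2] * 14

HYPERVISOR_RESERVE_GB = 4            # assumption: headroom left for the host
RAM_OPTIONS_GB = [16, 32, 64, 128]   # candidate host memory sizes


def required_ram_gb(allocations, reserve_gb):
    """Total memory needed if every VM gets its full allocation."""
    return sum(allocations) + reserve_gb


def smallest_fitting(options_gb, needed_gb):
    """Smallest candidate size that covers `needed_gb`, or None."""
    fitting = [size for size in sorted(options_gb) if size >= needed_gb]
    return fitting[0] if fitting else None


needed = required_ram_gb(vm_allocations_gb, HYPERVISOR_RESERVE_GB)
choice = smallest_fitting(RAM_OPTIONS_GB, needed)
print(f"{len(vm_allocations_gb)} VMs need about {needed} GB, smallest fit: {choice} GB")
```

Replace the list with your own planned VMs to see how quickly 16 or 32 GB runs out.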

Well, you can always attach the storage to the C612 chipset, but without being able to configure any kind of RAID protection. (Hopefully ESXi sees the disks; I haven’t tested that.)

You’ll have to purchase another storage controller to get more storage on the board, as the built-in one can manage only 8 disks.

Best of luck with your setup.
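For the 8 HDDs plus 10 SSDs mentioned above, the controller count can be worked out with a few lines of Python. This is only a sketch under stated assumptions: every disk is directly attached to its own port, no SAS expander is used, and any add-on controller also has 8 ports (that port count is an assumption, pick whatever card you actually buy).

```python
import math

ONBOARD_PORTS = 8  # built-in controller handles 8 disks, per the reply above


def extra_controllers_needed(total_disks: int, ports_per_addon: int = 8) -> int:
    """Add-on controllers needed once the onboard ports are full."""
    if total_disks < 0 or ports_per_addon <= 0:
        raise ValueError("disk count must be >= 0 and ports per controller > 0")
    remaining = max(0, total_disks - ONBOARD_PORTS)
    return math.ceil(remaining / ports_per_addon)


hdds, ssds = 8, 10  # disk counts from the question
print(extra_controllers_needed(hdds + ssds))
```

With 18 disks, 10 are left over after the onboard ports, so two 8-port add-on cards would be needed under these assumptions, or one card with more ports.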

++

Vladan

Oliver, did you get the right SAS cable? I have read that it needs SFF-8643 type but you have ordered a different set…

Vladan, can you confirm the right cable to break out the SAS ports to SATA connectors?

Hi Vladan, I’ve been coming to this blog post on and off for over a year now and I’m finally making the purchases after saving money for all this time!

Anyway, the SM X10SRH now comes with Broadcom 3008. How does this compare with LSI 3008? I can’t find information online about this but maybe your general server experience means you have an answer. Will it support the usual magic IT firmware out there, to allow me to pass through disks with HBA to a FreeNAS guest VM?

Many thanks,

George

Hi George,

I cannot guarantee that. I chose my hardware with the LSI 3008 because it was on the VMware HCL for vSAN. However, Broadcom bought the LSI business, didn’t they? … :-)

You answered really quick even on such an old post, I appreciate that!

Well it’s reassuring to know that Broadcom simply bought LSI, so maybe it’s just a simple name change here and the chip is identical. The mobo reference manual that came in the box still calls it LSI, so it’s all so recent, which makes me feel even more confident that it’s just the same chip in reality. Annoyingly there’s a heatsink mounted to the chip so I can’t read the markings. I will instead wait until my build is finished and then see what info I can glean about it from either PCI bus enumeration or something else, and report back.

Thanks!

Great post. Just weeding through all the data. Please verify for me once more.

Are you running ESXi 6.5 now and vSAN 6.5? On this whitebox hardware?

Thank you,

Jay, thanks for the comment. Yes, the system runs the latest vSphere 6.5 and ESXi 6.5.

Greetings, I just came across this website and have many hours ahead of me digging into all the information you offer. For the sake of time, I wanted to ask whether buying the exact parts listed above is still feasible in terms of cost and availability. Meaning, if I were to budget and buy everything listed piece by piece, would that be advisable in terms of finding it all and the cost? My intention is to deep-dive into setting up an ESXi host, and also to lab for things like VMware and MCSA. Please advise and assist. Thank you.

The list is about 2 years old now. Perhaps newer CPUs and motherboards might be more appropriate? Always look for a low-power CPU if you plan to run the lab 24/7.

Hi Vladan

I would love to see you do a “simulated” upgrade with a new parts list for a 2018 build 🙂

CPUs now come with many cores and prices are lower, so 8 cores at low power is a possibility.

It is time for me to upgrade my home lab environment: vCenter, vSphere, storage, etc.

Is the hardware called out in this article still considered viable for 6.7 and future releases?

I have been looking at different offerings for compact systems: Intel NUC, Lenovo ThinkCentre, etc.

My ultimate objective is to have an environment capable of migration, vMotion, with common attached storage, without going to enterprise server hardware.

This is only for home labs, experimentation, training, etc.

Thanks!

Considering that this was about 2 years ago, I’d go for a more recent CPU/mobo build. But this one still works on the latest 6.7 build. It depends on whether you want to go the cheaper way or not… ++

Hello Vladan,

Thanks for this great writeup.

It has been over 2 years since I had a custom build set up to run an ESXi homelab, and I am looking into building a new one. Are there any upgrades that will fit with the rest of your setup, e.g. a newer CPU or another Supermicro board that supports the same features as the one in your list?

Do you have any plans to update the current configuration and article, or is this still a good enough build?

Danny, this build is now a couple of years old. I think you can find a better deal now on the motherboard and CPU. I sold 2 of the hosts and am using the one that is left as a Windows workstation with 128 GB RAM (with VMware Workstation installed) and a nested environment. That’s because we’ve moved to a small apartment.

Best,

Vladan

Could you recommend any Xeon CPU? There are so many, and it would take a lot of reading to catch up on the different models available. Board-wise, I will look for a Supermicro that matches the CPU and is compatible with ESXi.

BTW, if you’re on a budget, you can find the CPUs mentioned in the post for this lab build used on eBay… Good deal IMHO. As for new Xeons, I haven’t done any research on that yet.