I recently bought some parts to replace my old NAS build and get more power and more IOPS. This NAS box will be used for lab only. I also recovered some parts from the old box and added some SSDs. The Mini-NAS will also serve as an ESXi box. Because Mini-ITX boards are limited in expansion slots, I favor speed over redundancy and run RAID 0 (no redundancy). This is certainly not something to do in production, where you usually don't have the space limits I have and would rather use RAID 10, which keeps good performance while adding redundancy.

There are many software distributions that can run on bare metal or as a VSA (FreeNAS, OpenFiler, Nexenta…), but there are already many blog posts about those. One of the newer ones, and a very interesting one, is SoftNAS.

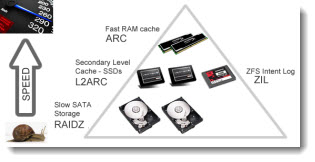

Based on a hardened CentOS Linux distribution and the ZFS filesystem, the product can use the available RAM as a cache and SSDs as read and write cache, backed by cheap SATA storage. The result is a fast, multi-tiered storage solution that is easy to set up. The SoftNAS VM itself consumes about 1 GB of RAM, so if you configure the VM with 16 GB of RAM, for example, about 15 GB is used for caching. In my case I'll configure the VM with 8 GB of RAM and use the remaining 8 GB of the host's RAM for some other VMs of that management cluster. For homelabs, the free 1 TB offer is sufficient to run my small VMware cloud lab, which now has a third, very compact ESXi host… -:)
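To make the RAM arithmetic concrete, here is a tiny sketch. It only restates the rule of thumb above (about 1 GB for the appliance itself, the rest for cache); the function and constant names are mine, and the real overhead will vary.

```python
# Rough estimate only: ~1 GB appliance overhead is the figure quoted in this post.
APPLIANCE_OVERHEAD_GB = 1


def estimated_cache_gb(vm_ram_gb, overhead_gb=APPLIANCE_OVERHEAD_GB):
    """Return the RAM (in GB) left for caching after the appliance's own footprint."""
    return max(vm_ram_gb - overhead_gb, 0)


for vm_ram in (8, 16):
    print(f"{vm_ram} GB VM: about {estimated_cache_gb(vm_ram)} GB left for cache")
```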

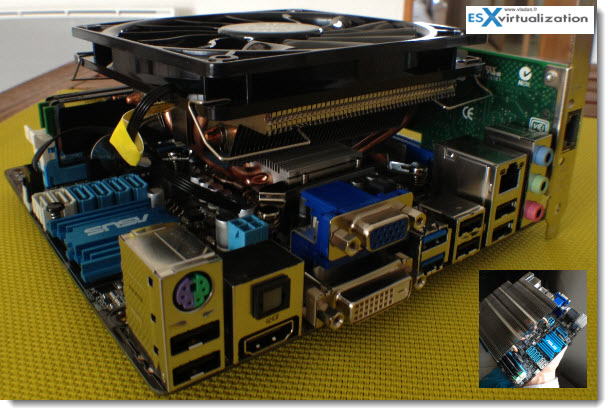

It's very popular in the community to use a single box with VMware ESXi installed on a USB stick, with some VMs running from local storage alongside a VSA (virtual storage appliance) VM configured with passthrough (VMDirectPath) to a mix of SSDs and SATA drives. While this is certainly the way to get the most speed, it's quite difficult to pick a consumer board in Mini-ITX format with this capability. Not only the motherboard but also the CPU needs to support VT-d. You configure VMDirectPath I/O pass-through devices in the VMware vSphere client: click Advanced Settings and select the devices to dedicate to VMDirectPath I/O.

If your motherboard does not support VMDirectPath I/O, it's still possible to use other methods to present local storage as shared storage to the existing infrastructure.
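The decision boils down to a simple check, sketched below. The function name is hypothetical and the fallback string is just an example; the only rule it encodes is the one stated above, that passthrough needs VT-d on both the motherboard and the CPU.

```python
def pick_disk_access(board_has_vtd: bool, cpu_has_vtd: bool) -> str:
    """Passthrough is only an option when BOTH the board and the CPU support VT-d."""
    if board_has_vtd and cpu_has_vtd:
        return "VMDirectPath I/O passthrough"
    # Assumption: RDM is used here as one example of "other methods".
    return "another method, for example RDM"


print(pick_disk_access(board_has_vtd=True, cpu_has_vtd=True))
print(pick_disk_access(board_has_vtd=True, cpu_has_vtd=False))
```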

If I manage to get passthrough working with the Mini-ITX board, I'll be able to pass the local SSDs and SATA drives directly to my SoftNAS VM and, at the same time, run the management VMs for the whole lab on the same box (vCenter, DC…). That way I can build a fully functional hybrid storage box that is also an ESXi host installed on bare metal.

The goal of the setup:

- Get shared storage for my lab with acceptable performance for 40-50 VMs, and learn some of the magic ZFS can do with data tiering between RAM, the local SSDs and the SATA drives.

- Build a box that runs VMware ESXi and a SoftNAS VM with VMDirectPath I/O pass-through configured for the local disks and SSDs. The SSDs will be used for L2ARC and ZIL caching. The rest will be ordinary 7200 rpm SATA II drives configured in RAID 0 for best performance (no redundancy).
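For readers who know plain ZFS, the target layout corresponds roughly to the `zpool create` call built below. This is a sketch under assumptions: SoftNAS drives this through its own UI, and the pool name `tank` and the device names are placeholders of mine, not my actual disks. The helper only builds the argument list and does not run anything.

```python
def zpool_create_command(pool, data_disks, log_dev=None, cache_dev=None):
    """Build (not run) a `zpool create` argument list.

    Listing several disks without a `mirror` or `raidz` keyword stripes
    them, which is the RAID 0-like layout described above.
    """
    if not data_disks:
        raise ValueError("at least one data disk is required")
    cmd = ["zpool", "create", pool, *data_disks]
    if log_dev:
        cmd += ["log", log_dev]      # dedicated device for the ZIL
    if cache_dev:
        cmd += ["cache", cache_dev]  # L2ARC device
    return cmd


# Placeholder device names, for illustration only.
print(" ".join(zpool_create_command("tank", ["sdb", "sdc"],
                                    log_dev="sdd", cache_dev="sde")))
```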

My Limitations:

There is a single PCI-Express expansion slot on that board, so I reserved it for an additional NIC dedicated to storage traffic. The NIC present on the board – Realtek® 8111E – works with VMware vSphere 5.

If you don't have the limitations I did, I recommend a full ATX board, which has more expansion slots. Get a socket 1155 board, which can hold up to 32 GB of RAM, or, if you have the budget, a newer socket 2011 board, which can hold 64 or 128 GB of RAM.

Parts I bought:

- SSD OCZ Vertex 4 Series 2,5″ – 256 GB 163,87 €

- ASUS P8H77-I – Socket 1155 – Chipset H77 – Mini-ITX 1 77,68 €

- KINGSTON Memory PC HyperX blu Black Series – 2 x 8 GB DDR3-1600 PC3-12800 74,41 €

- INTEL Core i5 3470S – 2,9 GHz – 6 MB L3 cache – Socket LGA 1155 (VT-d is supported)

- COOLER MASTER CPU cooler GeminII M4 (RR-GMM4-16PK-R1) 22,57 €

Total Cost – around €586

Parts that I recovered from my previous NAS build:

- Lian-Li PC-Q8B (Mini-ITX case)

- Power supply Antec HCG 400

- SSDs with 64 and 256 GB of capacity respectively

Before we continue with the write-up, I'd like to introduce SoftNAS to my readers: which technology it uses and how it works. SoftNAS is built on a CentOS-based Linux distribution around the ZFS file system, and it's a truly business-class network attached storage (NAS) product.

It takes advantage of solid state drives (SSDs) for multi-tiering of data. Basically, hot data is kept in RAM first, then, when RAM is full, on SSDs, and only then on the slower spinning SATA drives.

SoftNAS uses the ZFS filesystem, a very robust, scalable file system that lets you use RAM, SSDs and spinning hard drives in a single pool with very good performance. It is very popular in VMware communities, because VMs are IOPS hungry…
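To illustrate the tiering idea, here is a toy model of a read path that tries RAM first, then SSD, then disk. It is not how ZFS is implemented: the class and its names are made up, and it uses plain least-recently-used eviction, whereas the real ARC is more sophisticated.

```python
from collections import OrderedDict


class TieredReadCache:
    """Toy model: RAM tier, then SSD tier, then slow disk."""

    def __init__(self, ram_slots, ssd_slots, disk):
        self.ram = OrderedDict()   # stands in for the RAM cache
        self.ssd = OrderedDict()   # stands in for the SSD cache
        self.ram_slots = ram_slots
        self.ssd_slots = ssd_slots
        self.disk = disk           # dict acting as the slow SATA pool

    def read(self, key):
        """Return (value, name of the tier that served the read)."""
        if key in self.ram:
            self.ram.move_to_end(key)            # mark as most recently used
            return self.ram[key], "ram"
        if key in self.ssd:
            value, tier = self.ssd.pop(key), "ssd"
        else:
            value, tier = self.disk[key], "disk"
        self._put_in_ram(key, value)             # hot data moves up to RAM
        return value, tier

    def _put_in_ram(self, key, value):
        self.ram[key] = value
        if len(self.ram) > self.ram_slots:
            old_key, old_value = self.ram.popitem(last=False)  # evict LRU entry
            self.ssd[old_key] = old_value                      # demote it to SSD
            if len(self.ssd) > self.ssd_slots:
                self.ssd.popitem(last=False)                   # drop oldest SSD entry


cache = TieredReadCache(ram_slots=1, ssd_slots=1, disk={"a": 1, "b": 2, "c": 3})
for key in ("a", "a", "b", "a"):
    print(key, cache.read(key))
```

With one slot per tier, the four reads are served from disk, RAM, disk and SSD in that order: the second read of `a` hits RAM, and after `b` pushes it out, `a` comes back from the SSD tier instead of the disk.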

How the ZFS filesystem works:

L2ARC – Level-Two ARC (L2ARC) – An extension of the ARC (the read cache held in RAM), since you never have enough RAM. The L2ARC uses SSDs, which are slower than RAM but, for random I/O, far faster than spinning disks.

ZIL – ZFS Intent Log (ZIL) – used for write operations. With a dedicated SSD log device, ZFS places the ZIL on the SSD to have a persistent location with fast access. Recent changes are held in RAM and written out to the spinning disks periodically; once they are safely on the spinning disks (which are persistent and non-volatile), the matching ZIL entries are no longer needed and their space can be reused for new writes.

Cheap (and slow) SATA storage – used for cold data, which is data that does not change much over time.
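The role of the ZIL is easier to see in a toy model of the write path. This is only a sketch of the idea (log first, flush to disk later, replay the log after a crash); the class and method names are invented and it ignores everything else ZFS does.

```python
class ToyPool:
    """Toy write path: sync writes are logged, then flushed to disk in batches."""

    def __init__(self):
        self.ram = {}         # pending changes held in memory (lost on a crash)
        self.intent_log = []  # stands in for the ZIL on the SSD (survives a crash)
        self.disk = {}        # stands in for the spinning disks

    def sync_write(self, key, value):
        self.intent_log.append((key, value))  # persisted before acknowledging
        self.ram[key] = value
        return "ack"

    def flush(self):
        """Write pending changes to disk; the log entries are then obsolete."""
        self.disk.update(self.ram)
        self.ram.clear()
        self.intent_log.clear()

    def recover_after_crash(self):
        """RAM content is gone; replay the log so acknowledged writes survive."""
        self.ram.clear()
        for key, value in self.intent_log:
            self.disk[key] = value
        self.intent_log.clear()


pool = ToyPool()
pool.sync_write("vm1.vmdk", "block A")
pool.recover_after_crash()   # crash before any flush happened
print(pool.disk)
```

In normal operation the log is only written, never read; it matters when the box goes down between a write being acknowledged and the next flush.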

While this setup is for my homelab, you can easily imagine building a cheap, well-performing storage system with commodity server hardware. SoftNAS pricing is a subscription-based model (per TB of used storage), which makes the initial costs even lower.

The subscription is paid annually or “pay as you go” on a monthly basis. Subscriptions include software maintenance and business day support, and automatically renew for continued, uninterrupted service (cancel anytime). You can choose a monthly or yearly subscription where you get 7/7 support.

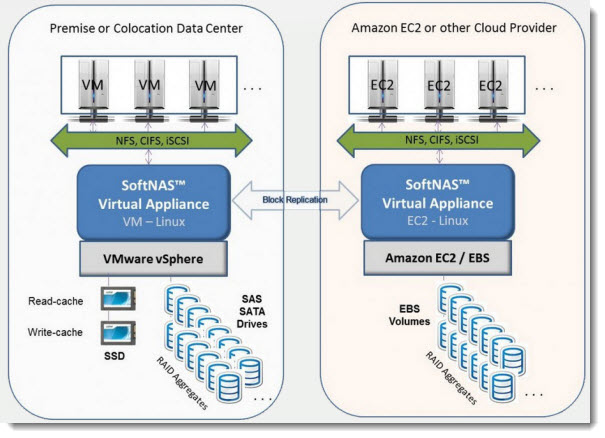

SoftNAS may be installed on any supported hardware platform, including an Amazon EC2 instance for a “Cloud Only” solution. The licensing for SoftNAS is the same no matter how you deploy the solution.

SoftNAS lets you replicate between two nodes, and the setup is very simple. As a DR solution, we could imagine one node running on premises at your company's primary site and a second instance at Amazon, with your VMs replicated between the two. They just published a video showing how to set up replication between two SoftNAS nodes.

SoftNAS runs as a VM on VMware vSphere, but also on Hyper-V or directly at Amazon as an EC2 instance. Here is a screenshot from their website, where you can see the solution running locally and at Amazon. The replication between the two is block level and runs every minute.
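To give an idea of what "block level" means in practice, here is a toy sketch that ships only the blocks that differ between a source and its replica. How SoftNAS actually detects and transfers changes is not described here, so treat the helper names and the tiny block size as pure illustration.

```python
BLOCK_SIZE = 4  # tiny on purpose, for illustration only


def split_blocks(data: bytes, size: int = BLOCK_SIZE):
    """Cut a byte string into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def changed_blocks(source: bytes, replica: bytes, size: int = BLOCK_SIZE):
    """Return {block index: new bytes} for every block that differs on the replica."""
    src, dst = split_blocks(source, size), split_blocks(replica, size)
    return {i: block for i, block in enumerate(src)
            if i >= len(dst) or dst[i] != block}


def apply_delta(replica: bytes, delta: dict, source_len: int,
                size: int = BLOCK_SIZE) -> bytes:
    """Patch the replica with the changed blocks and trim it to the source length."""
    blocks = split_blocks(replica, size)
    for i, block in sorted(delta.items()):
        if i < len(blocks):
            blocks[i] = block
        else:
            blocks.append(block)
    return b"".join(blocks)[:source_len]


source = b"aaaabbbbcccc"
replica = b"aaaaXXXXcccc"
delta = changed_blocks(source, replica)
print(delta)                                        # only the middle block is sent
print(apply_delta(replica, delta, len(source)) == source)
```

The point is that one changed block means one block on the wire, not the whole virtual disk, which is what makes a one-minute replication interval realistic over a WAN link.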

You can also have SoftNAS installed at your primary site and replicate to a remote branch office site. Get 1 TB of storage for free or download the free 60-day trial.

Update: I ran into trouble configuring the SoftNAS VM with passthrough (VMDirectPath) on my whitebox, so I fell back to RDMs (which I tried, and it worked). Nevertheless, I wasn't able (in the Free version) to specify which disk I wanted to use for which function. For example, I wanted to use my Vertex 4 for L2ARC and the other 60 GB SSD for ZIL, but I wasn't able to do that…. So the follow-up will be with another OS… probably Nexenta.

- Building NAS Box With SoftNAS (This post)

- Homelab Nexenta on ESXi – as a VM

- Fix 3 Warning Messages when deploying ESXi hosts in a lab

- Performance Tests of my Mini-ITX Hybrid ESXi Whitebox and Storage with Nexenta VSA

Feel free to subscribe via RSS as this article will have a follow-up on the setup.

I have been using FreeNAS under ESXi (RDM) for two years on ~3 TB.

$0 cost, and full command line support that is compatible with the GUI.

You can assign your disks as you want.

I’ve been using FreeNAS for the past 3 years. It's good and it evolves over time, which is not really the case for Openfiler, for example. Nexenta is looking good for my homelabbing… -:)

Hi, I have an ITX NAS with an ASUS P8H77-I and an i3 3220T, and I want to install ESXi on it as a home server. I wonder if the board supports VT-d; if so, I will upgrade the processor to an i7 3770T to enable the VMDirectPath function.

Thanks a lot!

Hi

Would you still recommend building your own NAS today? Or do you think it's better to buy a finished one? It seems like a lot of work for very little benefit?

It depends on whether you want flexibility or not. Sure, these days there are tons of NAS boxes, including ones that accept SSDs as a cache tier… Also, a NAS whitebox can easily be “converted” to a PC or used for something else….