The VCP6-DCV (Data Center Virtualization) VMware certification exam was recently released, and registration will be available soon. You can love or hate the term software-defined, but software-defined storage is here, and this post covers VCP6-DCV Objective 3.2 – Configure Software-defined Storage. Hopefully it will help you learn this topic for the exam…

For coverage of the whole exam, I created a dedicated VCP6-DCV page. Or, if you're not preparing for the VCP6-DCV, you might just want to look at some how-tos, news, and videos about vSphere 6 – check out my vSphere 6 page.

If you find out that I missed something, don't hesitate to comment.

vSphere Knowledge Covered in this post:

- Configure/Manage VMware Virtual SAN

- Create/Modify VMware Virtual Volumes (VVOLs)

- Configure Storage Policies

- Enable/Disable Virtual SAN Fault Domains

Configure/Manage VMware Virtual SAN

- VMware VSAN (traditional) needs some spinning media (SAS or SATA) and 1 SSD per host (SATA, SAS or PCIe).

- VMware VSAN (All-Flash) needs SATA/SAS SSDs for the capacity tier and 1 high-performance, high-endurance SSD for caching.

- HBA which is on the VMware HCL (queue depth > 600)

- All hardware must be part of HCL (or if you want easy way -> via VSAN ready nodes!)

- HBA with RAID0 or direct pass-through so ESXi can see the individual disks, not a RAID volume.

- SSD sizing – 10% of consumed capacity

- 1Gb Network (10GbE recommended)

- 1 VMkernel interface configured (dedicated) for VSAN traffic

- Multicast activated on the switch

- IGMP Snooping and an IGMP Querier can be used to limit multicast traffic to a specific port group. Useful if other non-Virtual SAN network devices exist on the same layer 2 network segment (VLAN).

- IPv4 only on the switch

- Minimum 3 hosts in the cluster (4 recommended) – maximum 64 hosts (vSphere 6)
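The numeric requirements in the list above can be turned into a quick sanity check. This is only a minimal sketch: the function names and the returned messages are my own (hypothetical), and the thresholds are simply the ones quoted above (3 hosts minimum, 4 recommended, 64 maximum, cache SSD sized at 10% of consumed capacity).

```python
# Thresholds taken from the requirements list above (vSphere 6 / VSAN 6.0).
MIN_HOSTS = 3
RECOMMENDED_HOSTS = 4
MAX_HOSTS = 64
CACHE_PERCENT = 10  # SSD sizing: 10% of consumed capacity


def check_host_count(hosts: int) -> str:
    """Classify a cluster size against the limits quoted in the post."""
    if hosts < MIN_HOSTS:
        return "too few hosts"
    if hosts > MAX_HOSTS:
        return "too many hosts"
    if hosts < RECOMMENDED_HOSTS:
        return "supported, below recommendation"
    return "ok"


def cache_size_gb(consumed_capacity_gb: float) -> float:
    """Return the cache SSD size suggested by the 10% rule of thumb."""
    return consumed_capacity_gb * CACHE_PERCENT / 100


if __name__ == "__main__":
    print(check_host_count(3))
    print(cache_size_gb(2000))
```

For example, a 3-host cluster passes the minimum but falls below the recommendation, and 2000 GB of consumed capacity suggests roughly 200 GB of cache.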

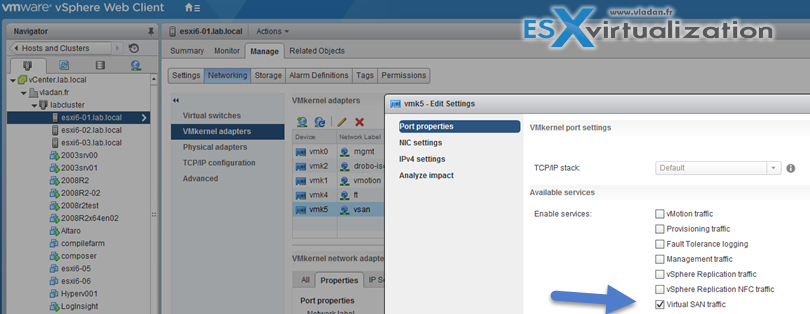

Create a VMkernel interface with VSAN traffic enabled

Host > Manage > Networking > VMkernel Adapters > Add

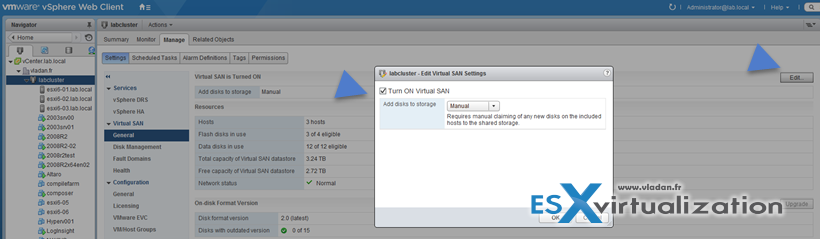

Enable VSAN at the cluster level

Hosts and Clusters > Cluster > Manage > Settings > Virtual SAN > General

Add disk to storage:

- Manual – Requires manual claiming of any new disks.

- Automatic – All empty disks on cluster hosts will be automatically claimed by VSAN

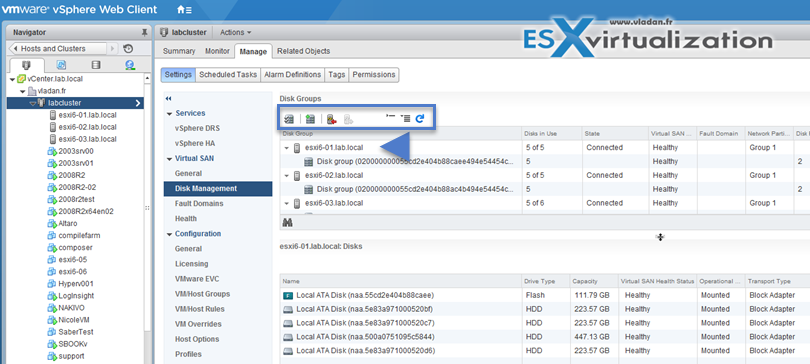

Create disk groups

Hosts and Clusters > Cluster > Manage > Settings > Virtual SAN > Disk Management

Claim disks for VSAN

You can do several tasks when managing disks in a VSAN cluster.

- Claim Disks for VSAN

- Create a new disk group (when adding more capacity).

- Remove the disk group

- Add a disk to the selected disk group

- Place a host in maintenance mode

So how do you mark a local disk as an SSD disk?

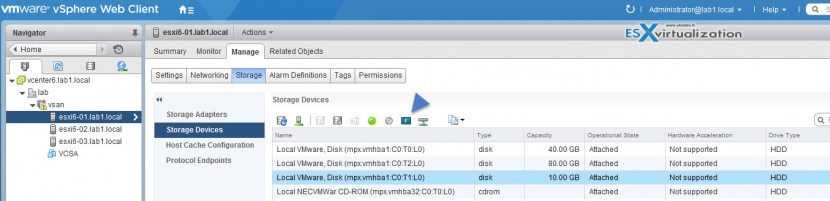

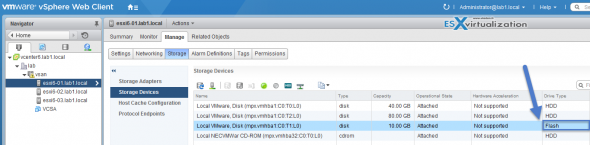

Connect to your vCenter > Go to Hosts and Clusters > Select a host > Select the disk which you want to tag as SSD.

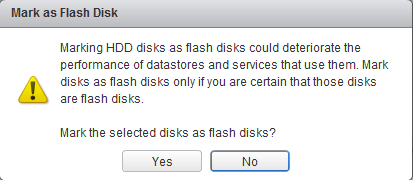

This brings up a small warning window saying that you might deteriorate the performance of datastores and services that use them, but if you’re sure of what you’re doing, go ahead and confirm with the Yes button.

As a result, after a few seconds (without even refreshing the client’s page) the disk turns into an SSD disk… It’s magic, no?

It also works the other way around: SSD to HDD. Note that this works only in VSAN 6.0!

Tag Disks for capacity or caching

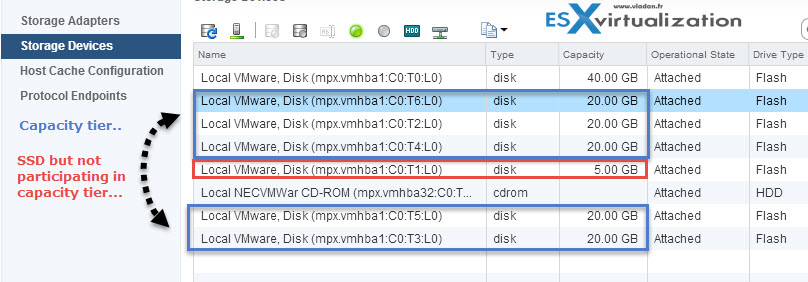

So let’s demonstrate it in my lab. I use VMware Workstation for the job, where I quickly created a few ESXi VMs. I configured the ESXi 6 host with 7 hard drives, where each virtual disk is destined to fill a different function. Here are the details:

- The 40GB drive is the local disk where ESXi is installed

- The 20GB drives are the ones which I need to tag as capacity

- The 5GB drive is the caching tier

The view of our disks…

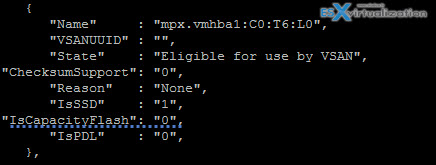

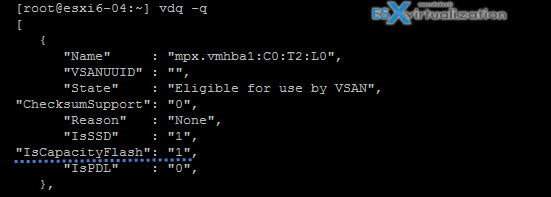

To check the status of your disks as ESXi sees them you can use the vdq -q command

So in our case:

vdq -q

gives us this:

We can see that mpx.vmhba1:C0:T6:L0 is the disk which we need to tag to be able to use it in our disk group (otherwise the disk won’t be available for use in the VSAN capacity tier).

We need to connect via SSH to our host. If you haven’t enabled SSH yet, please enable it by selecting your host > Manage > Security Profile > Services > Edit

After you have identified the disk which you need to tag, just enter this command:

esxcli vsan storage tag add -d naa.XYZ -t capacityFlash

where naa.XYZ is your hard drive. In my example

esxcli vsan storage tag add -d mpx.vmhba1:C0:T5:L0 -t capacityFlash


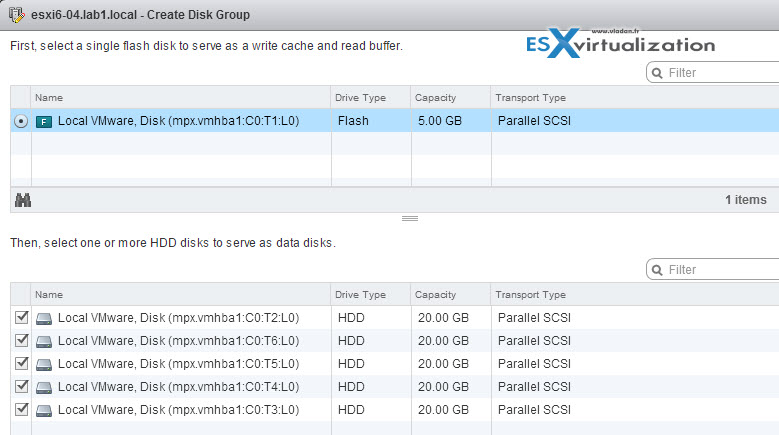

After tagging all of the 20GB disks we can create a disk group where those disks will appear as data disks below… (You can see that our mpx.vmhba1:C0:T6:L0 device can now be selected to be used as a data disk)…

Note: You can not only tag but also untag!

Check this:

esxcli vsan storage tag remove -d naa.XYZ -t capacityFlash

The above command will simply remove the “capacityFlash” tag from the storage device.
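If you have several disks to tag (or untag), typing the command for each one gets tedious. Here is a minimal sketch that only builds the command strings shown above, so you can review them before pasting them into your SSH session. The helper name `tag_commands` and the device names in the usage example are hypothetical; only the `esxcli vsan storage tag add/remove -d … -t capacityFlash` syntax comes from this post.

```python
from typing import Iterable, List


def tag_commands(devices: Iterable[str], action: str = "add",
                 tag: str = "capacityFlash") -> List[str]:
    """Build (but do not run) one esxcli tag command per device."""
    if action not in ("add", "remove"):
        raise ValueError("action must be 'add' or 'remove'")
    return [
        f"esxcli vsan storage tag {action} -d {device} -t {tag}"
        for device in devices
    ]


if __name__ == "__main__":
    # Hypothetical device names; take the real ones from 'vdq -q' on your host.
    lab_disks = ["mpx.vmhba1:C0:T5:L0", "mpx.vmhba1:C0:T6:L0"]
    for line in tag_commands(lab_disks):
        print(line)
```

Passing `action="remove"` produces the matching untag commands for the same devices.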

How to check if SSD is participating as capacity tier or not?

So if you just want to check which tag your storage device has, you can use this command:

vdq -q

See the output here…

You should get the VSAN Troubleshooting Reference Manual, which is a great resource.

VSAN and Maintenance Mode

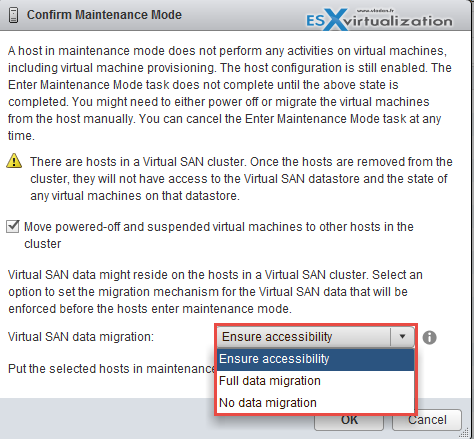

Maintenance mode for each ESXi host participating in a VSAN cluster has new options, depending on what you want to do with the data located on that particular host (the objects are located on the local storage of each host). When you put a Virtual SAN host into maintenance mode, you have 3 options:

- Ensure accessibility – Virtual SAN ensures that all virtual machines on this host will remain accessible if the host is shut down or removed from the cluster.

- Full data migration – Virtual SAN migrates all data that resides on this host.

- No data migration – Virtual SAN will not migrate any data from this host. Some virtual machines might become inaccessible if the host is shut down or removed from the cluster.
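The three options above can also be chosen from the command line. The sketch below is an assumption on my side rather than something taken from the screenshots: as far as I recall, `esxcli system maintenanceMode set` accepts a `-m` (`--vsanmode`) parameter with the values `ensureObjectAccessibility`, `evacuateAllData` and `noAction`, so please verify against `esxcli system maintenanceMode set --help` on your own host before relying on it. The enum and function names are hypothetical, and the code only builds the command string.

```python
from enum import Enum


class VsanMaintenanceMode(Enum):
    # Assumed CLI/API value names for the three UI options; verify on your host.
    ENSURE_ACCESSIBILITY = "ensureObjectAccessibility"
    FULL_DATA_MIGRATION = "evacuateAllData"
    NO_DATA_MIGRATION = "noAction"


def enter_maintenance_command(mode: VsanMaintenanceMode) -> str:
    """Build (but do not run) the esxcli command for the chosen option."""
    return f"esxcli system maintenanceMode set -e true -m {mode.value}"


if __name__ == "__main__":
    print(enter_maintenance_command(VsanMaintenanceMode.ENSURE_ACCESSIBILITY))
```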

Create/Modify VMware Virtual Volumes (VVOLs)

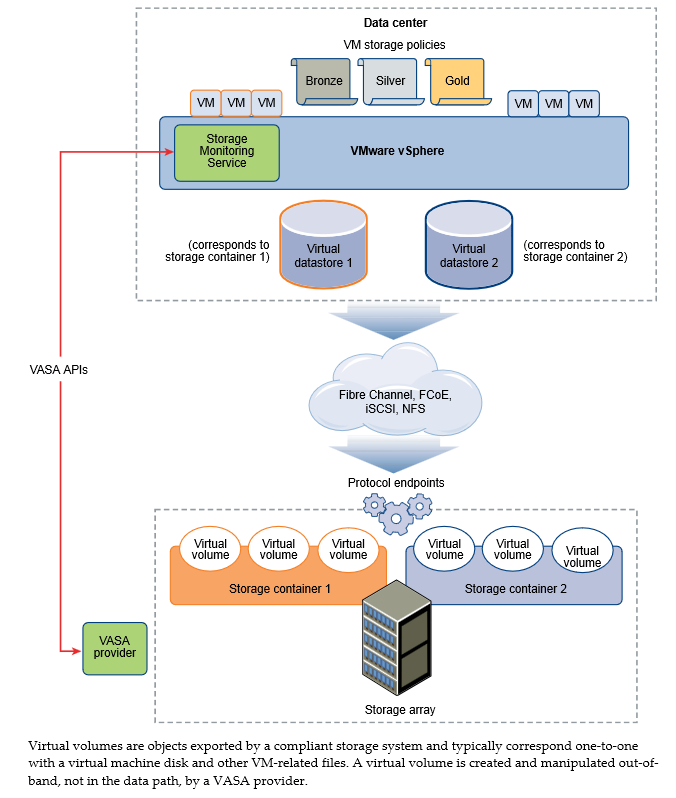

VVOLs are new in vSphere 6. By using a special set of APIs called vSphere APIs for Storage Awareness (VASA), the storage system becomes aware of the virtual volumes and their associations with the relevant virtual machines. Through VASA, vSphere and the underlying storage system establish a two-way out-of-band communication to perform data services and offload certain virtual machine operations to the storage system. For example, operations such as snapshots, Storage DRS and clones can be offloaded.

- VVOLs are supported on SANs compatible with VAAI (vSphere APIs for Array Integration).

- VVOLs support vMotion, sVMotion, Snapshots, Linked-clones, vFRC, DRS

- VVOLs support backup products which use VADP (vSphere APIs for Data Protection)

- VVOLs support FC, FCoE, iSCSI and NFS

Image courtesy VMware

VVOLs Limitations

- VVOLs do not work with standalone ESXi hosts (vCenter is needed)

- VVOLs do not support RDMs

- VVOLs with virtual datastores are tied to vCenter, so if they are used with host profiles, then only within that particular vCenter, because an extracted host profile can be attached only to hosts within the same vCenter as the reference host.

- No IPv6 support

- NFS v3 only (v4.1 isn't supported)

- Multipathing only on SCSI-based protocol endpoints, not on NFS-based protocol endpoints.

VVOLs vSphere Storage Guide p211.

Virtual volumes are encapsulations of virtual machine files, virtual disks, and their derivatives. Virtual volumes are not preprovisioned, but created automatically when you perform virtual machine management operations. These operations include a VM creation, cloning, and snapshotting. ESXi and vCenter Server associate one or more virtual volumes to a virtual machine.

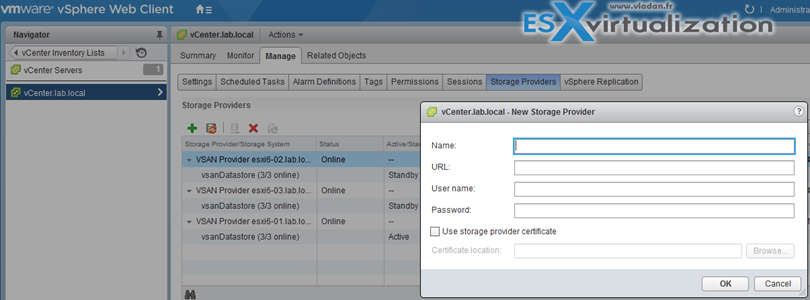

- Storage Provider – A Virtual Volumes storage provider, also called a VASA provider, is a software component that acts as a storage awareness service for vSphere.

- Storage Container – A storage container is a part of the logical storage fabric and is a logical unit of the underlying hardware. The storage container logically groups virtual volumes based on management and administrative needs.

- Protocol Endpoints – ESXi hosts use a logical I/O proxy, called the protocol endpoint, to communicate with virtual volumes and virtual disk files that virtual volumes encapsulate. ESXi uses protocol endpoints to establish a data path on demand from virtual machines to their respective virtual volumes.

- Virtual Datastores – A virtual datastore represents a storage container in vCenter Server and the vSphere Web Client.

Steps to Enable VVOLs (p.218):

- Step 1: Register Storage Providers for VVOLs

vCenter Inventory Lists > vCenter Servers > vCenter Server > Manage > Storage Providers

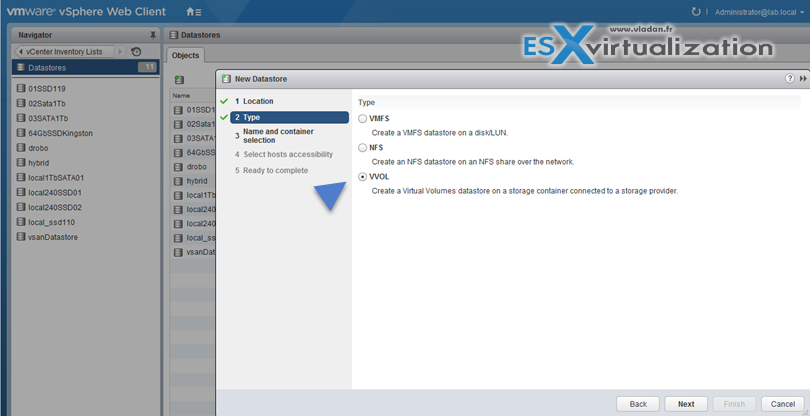

- Step 2: Create a Virtual Datastore

vCenter Inventory Lists > Datastores

- Step 3: Review and manage protocol endpoints

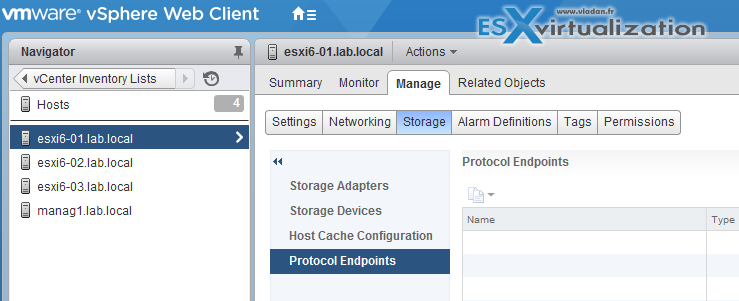

vCenter Inventory Lists > Hosts > Host > Manage > Storage > Protocol Endpoints

- (Optional) Change the path selection policy (PSP) for the protocol endpoint.

Manage > Storage > Protocol Endpoints > select the protocol endpoint you want to change and click Properties > Under multipathing Policies click Edit Multipathing

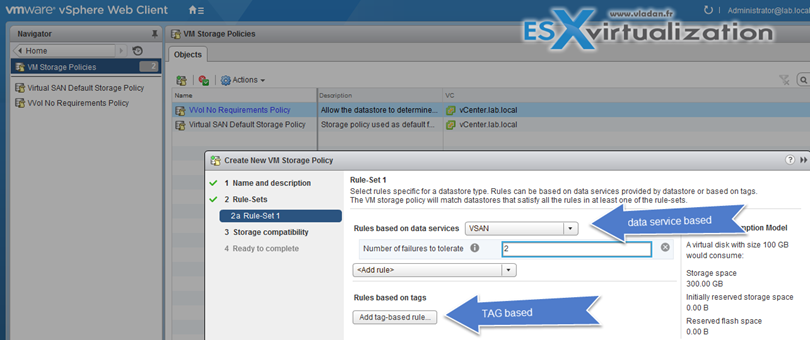

Configure Storage Policies (VM storage policies)

Virtual machine storage policies are covered in the vSphere Storage Guide on p. 225. Virtual machine storage policies are essential to virtual machine provisioning. These policies help you define storage requirements for the virtual machine and control which type of storage is provided for the virtual machine, how the virtual machine is placed within the storage, and which data services are offered for the virtual machine. A storage policy contains a storage rule or a collection of storage rules.

- Rules based on storage-specific data services – VSAN and VVOLs use VASA to surface the storage capabilities to the VM Storage Policies interface

- Rules based on tags – by tagging a specific datastore. More than one tag can be applied per datastore
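To make the tag-based rule a bit more concrete, here is a deliberately simplified model and not how vCenter implements it: a datastore counts as compatible when it carries the tag the rule asks for. The datastore names, the tag names and the function `compatible_datastores` are all hypothetical.

```python
from typing import Dict, List, Set


def compatible_datastores(datastore_tags: Dict[str, Set[str]],
                          required_tag: str) -> List[str]:
    """Return the datastores (sorted by name) that carry the required tag."""
    return sorted(
        name for name, tags in datastore_tags.items()
        if required_tag in tags
    )


if __name__ == "__main__":
    # Hypothetical inventory: more than one tag can be applied per datastore.
    inventory = {
        "ds-gold-01": {"gold", "replicated"},
        "ds-silver-01": {"silver"},
        "ds-gold-02": {"gold"},
    }
    print(compatible_datastores(inventory, "gold"))
```

With this inventory, a rule asking for the `gold` tag matches both gold datastores and leaves the silver one out.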

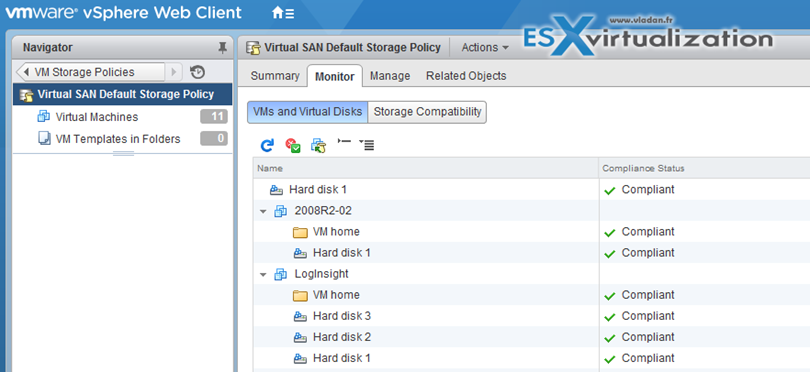

View VMs and disks if they comply with VM storage policies

VM Storage Policies > Click a particular Storage Policy > Monitor

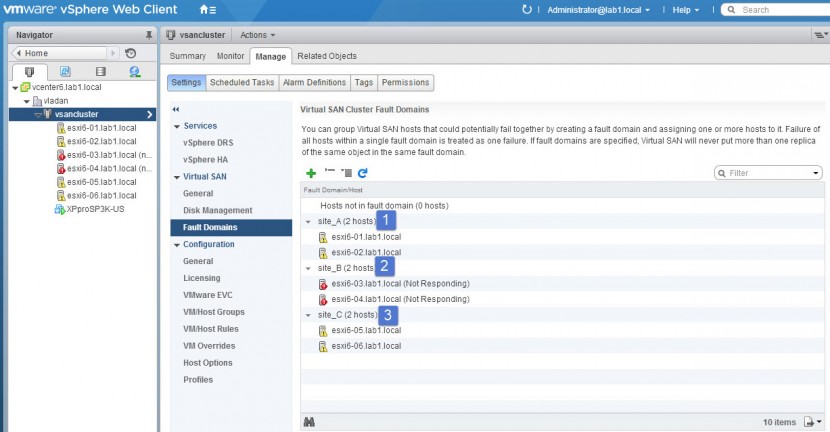

Enable/Disable Virtual SAN Fault Domains

VSAN fault domains let you protect against the failure of a whole group of hosts, for example 2 hosts which are in the same rack. Failure of all hosts within a single fault domain is treated as one failure. VSAN will not store more than one replica in this group (domain).

VSAN Storage Guide p.22

Requirements: 2*n+1 fault domains in a cluster, where n is the number of failures to tolerate. To leverage fault domains you need at least 6 hosts (3 fault domains). Using only three domains does not allow the use of certain evacuation modes, nor is Virtual SAN able to reprotect data after a failure.

VMware recommends 4 fault domains (the same as for VSAN clusters – 4 hosts in a VSAN cluster).

In the pic below you can see that my hosts are down, but VSAN still works and provides storage for my VM… (nested environment).

Hosts and Clusters > Cluster > Manage > Settings > Virtual SAN > Fault Domains

If a host is not a member of a fault domain, Virtual SAN interprets it as a separate domain.
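The fault domain arithmetic can be sketched in a few lines. This is just an illustration under my own assumptions: n in the 2*n+1 formula is the number of failures to tolerate, a host that is not assigned to any fault domain counts as its own domain (as stated above), and the host and rack names are hypothetical.

```python
from typing import Dict, Optional


def required_fault_domains(failures_to_tolerate: int) -> int:
    """Apply the 2*n+1 rule, with n = number of failures to tolerate."""
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must not be negative")
    return 2 * failures_to_tolerate + 1


def effective_fault_domains(host_to_domain: Dict[str, Optional[str]]) -> int:
    """Count domains; a host mapped to None is treated as a separate domain."""
    named = {domain for domain in host_to_domain.values() if domain is not None}
    standalone = sum(1 for domain in host_to_domain.values() if domain is None)
    return len(named) + standalone


if __name__ == "__main__":
    # Hypothetical cluster: two racks plus one host outside any fault domain.
    cluster = {
        "esxi1": "rack-a", "esxi2": "rack-a",
        "esxi3": "rack-b", "esxi4": "rack-b",
        "esxi5": None,
    }
    needed = required_fault_domains(1)
    print(effective_fault_domains(cluster) >= needed)
```

Here the cluster has two named domains plus one standalone host, so it just meets the three domains needed to tolerate one failure.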

Tools

- Administering VMware Virtual SAN

- vSphere Storage Guide

- What's New: VMware Virtual SAN 6.0

- What’s New in the VMware vSphere® 6.0 Platform

- Virtual SAN 6.0 Performance: Scalability and Best Practices

- vSphere Client / vSphere Web Client


Since VVOLS rely on vCenter, what happens if vCenter goes down? Do you just lose the management of VVOLS, or do they start having any interruption?

Is there a way to setup VVOLS in a nested lab, like you did for VSAN?

Good questions, Josh. I haven’t tested VVOLs in a lab just yet, but I’d say that you lose the management only. As for the lab setup, I’d expect some of the VSAs out there to be VVOLs compatible pretty soon – Q3 for the vVNX…. check comments in this post http://www.vladan.fr/free-virtual-vnx-software-to-download-a-full-featured-version-of-vvnx/

Important to know for your vVOL planning.

In case the VASA provider is down (it is a VM in the case of some storage vendors), it is not possible to change the VM’s state (power up/down) for VMs on vVOL.

With this in mind, it should be clear not to use vVOL for the VASA or vCenter VM.

It would be good to have this in Spanish.