During VMworld 2012 in Barcelona I had the chance to see the Nutanix hardware and technology. At first, it looks like there is nothing really spectacular: a 2U rack server with a Fusion-IO card, a 2-CPU system and lots of memory, all in one block. It only gets really interesting when you take a closer look at the distributed architecture, which is inspired by Google, Amazon, Twitter or Facebook. All those big guys use distributed file systems. Nutanix uses a distributed file system designed for virtual infrastructure, and it distributes not only the file system but also computing power and network.

Nutanix was founded in 2009 by a team that worked at Google on the design of the Google File System. The Nutanix Distributed File System was inspired by Google's technology but has been adapted for virtual environments, as Google doesn't use virtualization.

A “No SAN” solution means that the system has all the features a SAN device offers already built in (VAAI, array-side snapshots…), so VMware vSphere features like HA, DRS and vMotion can be leveraged. An advantage over classic SAN-based systems is that it's easily extensible and scales in a linear fashion.

Nutanix – The Distributed File System

Nutanix also has a full set of SAN features, including striping, replication, auto-tiering, error detection, failover and automatic recovery (auto-healing). Also, two kinds of compression have been added recently. But I'll blog on those features in another post, and today I'll try to focus on the Nutanix Distributed File System (NDFS). The more I read about Nutanix, the more I get hooked by the design, by the technology and by the simplicity for the end user, the administrator.

Nutanix's definition of NDFS:

NDFS acts like an advanced NAS that uses local SSDs and disks from all nodes to store virtual machine data. Virtual machines running on the cluster write data to NDFS as if they were writing to a NAS. NDFS is VM aware and provides advanced data management features. It brings data closer to virtual machines by storing the data locally on the system, resulting in higher performance at a lower cost.

Local storage and tiering – how does it work?

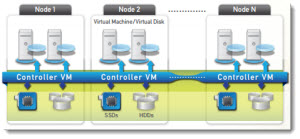

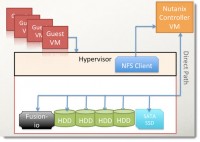

The Controller VM (a virtual machine) is basically a VSA that sits on each node (one block has four nodes) and uses VMDirectPath to access the local physical storage (see image below). This storage subsystem can be accessed by the hypervisor through the industry-standard iSCSI/NFS protocols.

Nutanix uses Heat-Optimized Tiering (HOT) that automatically moves the most frequently accessed data to the highest performing storage tier – the Fusion-IO flash PCI-e card, then the Intel SSD, or the slowest SATA tier for cold data.

The thresholds are user-defined. You can decide how many hours or days pass before the data becomes “cold” and is moved to the SATA tier.
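The tiering decision can be sketched roughly as follows. This is only an illustration, not Nutanix's actual algorithm: the tier names, the `pick_tier` function and the threshold values are all hypothetical, chosen to mirror the user-defined “cold” thresholds described above.

```python
from datetime import datetime, timedelta

# Hypothetical tiers, fastest first, each with the maximum idle time
# (time since last access) that data may have and still live there.
# The values are made-up examples of "user-defined" thresholds.
TIER_THRESHOLDS = [
    ("fusion-io", timedelta(hours=1)),
    ("ssd", timedelta(days=1)),
]
COLD_TIER = "sata"


def pick_tier(last_access: datetime, now: datetime) -> str:
    """Return the tier for a piece of data based on how long it has been idle."""
    idle = now - last_access
    for tier, max_idle in TIER_THRESHOLDS:
        if idle <= max_idle:
            return tier
    return COLD_TIER  # idle longer than every threshold: the data is "cold"


now = datetime(2012, 10, 15, 12, 0)
print(pick_tier(now - timedelta(minutes=5), now))  # recently used data
print(pick_tier(now - timedelta(hours=6), now))    # warm data
print(pick_tier(now - timedelta(days=3), now))     # cold data
```

Raising or lowering the values in `TIER_THRESHOLDS` is the equivalent of deciding how long data may sit untouched before it drops to a slower tier.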

In addition, to optimize access speed, most of a VM's data is always local. If a VM accesses data through a controller VM other than the local one, the data is moved closer to the VM.
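A toy model of this data locality behaviour might look like the sketch below. The `Cluster` class, the node names and the block identifier are hypothetical; the real controller VMs are of course far more involved, and the sketch only shows the "first read is remote, later reads are local" idea.

```python
class Cluster:
    """Toy model: tracks which node currently holds each block of data."""

    def __init__(self):
        self.location = {}  # block id -> node name

    def write(self, node: str, block: str) -> None:
        # Writes land on the node where the VM is running.
        self.location[block] = node

    def read(self, node: str, block: str) -> str:
        """Read a block from `node`; return "local" or "remote"."""
        holder = self.location[block]
        if holder == node:
            return "local"
        # Remote read: served over the network once, then the block is
        # moved to the reader's node so later reads are local.
        self.location[block] = node
        return "remote"


cluster = Cluster()
cluster.write("node-1", "vm-disk-block-7")
# The VM now runs on node-2 (for example after a vMotion) and reads its data.
first = cluster.read("node-2", "vm-disk-block-7")
second = cluster.read("node-2", "vm-disk-block-7")
print(first, second)
```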

Nutanix storage – Designed for resiliency.

The controller VM writes the data locally to the storage and then also copies parts of it to different nodes, so it can be recovered if a hardware failure occurs. The way the data is written is quite unique, and it is check-summed. Suppose, for example, that you have 3 items to write. Those 3 items (A, B and C) are written to one node, but the system is designed for DR, so they will not all be replicated in the same way to a single other node; instead, the copies go to three different nodes. For the best possible speed, local storage is leveraged and the VM always uses local storage. If the VM needs part of the data that lies on another node and has to go over the network to access it, the needed blocks get copied closer to the VM so the VM can access them locally.
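The A, B, C example can be sketched as below. The placement policy shown is plain round-robin, which is my assumption for illustration; the source does not say how NDFS actually picks replica targets. The function name `place_replicas` and the node names are hypothetical.

```python
from itertools import cycle


def place_replicas(items, local_node, all_nodes):
    """Map each item to (primary node, replica node).

    The primary copy stays on the local node; replicas are handed out
    round-robin over the remaining nodes so they do not pile up on one peer.
    """
    peers = [n for n in all_nodes if n != local_node]
    if not peers:
        raise ValueError("need at least one other node to hold replicas")
    next_peer = cycle(peers)
    return {item: (local_node, next(next_peer)) for item in items}


nodes = ["node-1", "node-2", "node-3", "node-4"]  # one block has four nodes
placement = place_replicas(["A", "B", "C"], "node-1", nodes)
for item, (primary, replica) in placement.items():
    print(item, primary, replica)
```

Because the replicas of A, B and C sit on three different peers, losing any one of those peers affects only one of the three copies, and a rebuild can read from several nodes at once.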

NDFS uses a replication factor (RF) that keeps redundant copies of the data. Writes to the platform are logged in the PCIe SSD tier, which can be configured to replicate to another controller before the write is committed. If a failure occurs, NDFS automatically rebuilds data copies to maintain the highest level of availability.

A video presenting the resiliency: “anything can fail, but there is nothing that, if it fails, can bring down the system”

- Check-sums are written locally

- If reconstruction is needed, no single “hot” node is used for it; multiple nodes take part

- Incremental check and healing
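The checksum-and-heal idea from the points above can be illustrated with a minimal sketch. Everything here is an assumption made for the example: SHA-256 as the checksum (the source does not name one), the dictionary-based stores, and the `read_with_healing` helper.

```python
import hashlib


def checksum(data: bytes) -> str:
    # SHA-256 is only an example; the source does not say which
    # checksum NDFS uses.
    return hashlib.sha256(data).hexdigest()


def read_with_healing(local: dict, replicas: list, key: str) -> bytes:
    """Return the data for `key`, repairing the local copy if it is corrupt.

    Each store maps key -> (data, checksum recorded at write time).
    """
    data, expected = local[key]
    if checksum(data) == expected:
        return data
    # Local copy fails verification: look for a healthy copy elsewhere.
    for store in replicas:
        candidate, recorded = store.get(key, (None, None))
        if candidate is not None and checksum(candidate) == recorded == expected:
            local[key] = (candidate, expected)  # heal the local copy
            return candidate
    raise RuntimeError(f"no healthy copy of {key!r} found")


good = b"virtual machine data"
local_store = {"A": (b"virtual machine dat\x00", checksum(good))}  # corrupted
replica_store = {"A": (good, checksum(good))}
healed = read_with_healing(local_store, [replica_store], "A")
print(healed == good, local_store["A"][0] == good)
```

Passing several replica stores to `read_with_healing` mirrors the point that more than one node can serve as the source for a repair.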

Nutanix – The Hardware Architecture:

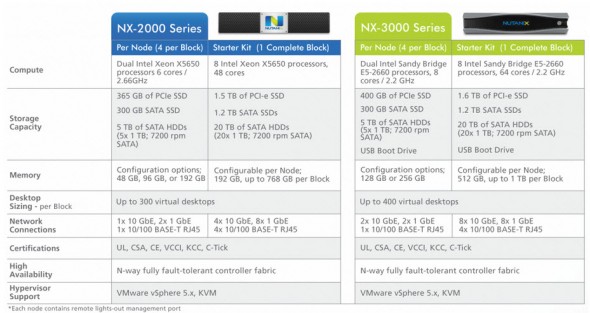

A single Nutanix block (2U) has four nodes. Each node has local storage (Fusion-IO, SSD and SATA disks), with each tier offering different performance. The latest hardware model is the NX 3000.

- It uses dual Intel Sandy Bridge E5-2660 CPUs, 8 cores / 2.2 GHz

- 400GB Fusion-IO card

- 300GB SSD and 5TB of SATA drives.

- Each block can hold 128 or 256GB of RAM. Two 10GbE, 1x 1GbE.

And now, I'm sure that you'll love this video: the technical deep dive.


Hi,

Does Nutanix support clustering concepts or NFSv4? We are planning to cluster with IBM products and to use NFSv4. Does Nutanix support this functionality?

Regards,

Sathish