Before discussing overprovisioning VMs, we must first agree on what the term means, because two people can use the same word and still talk about different things. Usually, “overprovisioning” means that you configure (provision) more than you have.

Overprovisioning does not always lead to contention, but it can. If you overprovision storage, you should monitor usage and adjust before your workloads are in contention or stopped because the datastore is full. This can happen when a VM's disk grows rapidly, for example because logs fill up one or more partitions. If that happens to only a couple of VMs, your VM-level alarms might alert you in time to take action. But if the datastore fills up quickly, workloads can be stopped or become unresponsive.
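To make the "monitor usage and adjust" advice concrete, here is a minimal sketch that estimates how many days remain before a datastore fills up and flags it when usage or remaining time crosses a threshold. The function names, the 80% usage threshold, the 14-day window, and the assumption of linear growth are all illustrative choices, not taken from any VMware tool.

```python
def days_until_full(capacity_gb: float, used_gb: float, growth_gb_per_day: float) -> float:
    """Estimate days until the datastore is full, assuming linear growth."""
    if growth_gb_per_day <= 0:
        return float("inf")  # not growing, so it never fills at this rate
    return max(0.0, capacity_gb - used_gb) / growth_gb_per_day


def needs_attention(capacity_gb: float, used_gb: float, growth_gb_per_day: float,
                    usage_threshold: float = 0.80, min_days: float = 14) -> bool:
    """Flag a datastore that is either too full or filling too fast.

    Both thresholds are hypothetical defaults; tune them to your environment.
    """
    usage = used_gb / capacity_gb
    remaining = days_until_full(capacity_gb, used_gb, growth_gb_per_day)
    return usage >= usage_threshold or remaining < min_days


# Hypothetical datastore: 1000 GB, 700 GB used, growing 30 GB per day.
print(days_until_full(1000, 700, 30))   # 10.0
print(needs_attention(1000, 700, 30))   # True, because 10 days is under the 14-day window
```

Real growth is rarely linear (a runaway log can fill a partition in hours), so a check like this complements alarms rather than replacing them.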

What is contention?

Contention is what happens when resources are overutilized: workloads end up fighting each other for the same resources.

So that I'm not the only one judging what overprovisioning means, there is a thread on Reddit, and I'll quote a few interesting opinions from it:

Quote:

“Overprovisioned/overprovisioning” has two meanings. The first and simplest is when a VM is given more resources than it should have. Too many vCPUs or too much RAM, generally speaking. On a host with few VMs and lots of capacity, overprovisioning a VM this way might not have a big impact, but on a host with many VMs, all in contention for its shared resources, overprovisioning can lead to serious performance problems across VMs. In either case, overprovisioning a VM is considered wasteful.

The second meaning for overprovisioning is the concept of giving the VMs on a host collectively more resources than the host has. This is very common with vCPU, somewhat less common with datastore space, and less common still with RAM.

As you can see, from the cost perspective, overprovisioning means the same thing whether VMs run on-prem or in the public cloud: you pay more for nothing. You waste resources.

It's very common to overprovision or over-allocate vCPUs because a VM will generally not keep a CPU busy all the time. In many situations, you can over-allocate vCPUs to 4, 6 or 8 times what you actually have. As an example, you can have 16 VMs using two cores each on a host that only has 8 cores. In this case, you've allocated 32 virtual cores on a host that has 8, so you are overprovisioning CPU by 4 times.
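The arithmetic in that example can be written as a small helper. This is only a sketch; the function name is made up for illustration, and the numbers are the ones from the quote above.

```python
def cpu_overcommit_ratio(vm_count: int, vcpus_per_vm: int, physical_cores: int) -> float:
    """Return allocated vCPUs divided by physical cores."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return (vm_count * vcpus_per_vm) / physical_cores


# 16 VMs x 2 vCPUs = 32 vCPUs on an 8-core host.
ratio = cpu_overcommit_ratio(vm_count=16, vcpus_per_vm=2, physical_cores=8)
print(f"vCPU to physical core ratio = {ratio:.0f}:1")  # vCPU to physical core ratio = 4:1
```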

And here is another interesting quote that talks about cloud computing and how it can help mitigate over-provisioning.

Cloud computing is one of the important ways to mitigate over-provisioning. In the context of migrations and cloud migrations, there are tools available that can help to right-size your infrastructure. They can offer guidance on which workloads to migrate or not, the optimal cloud configurations and vendors, an estimated cloud cost, and whether your apps will perform as expected in the cloud.

As for storage, I think this is pretty clear to everyone, but I'd still like to include this one as well. Basically, you can give VMs more storage than you actually have.

In the context of storage, overprovisioning of storage drives can be mitigated through the ability to use thin provisioning for virtual disks. Thin provisioning means that when you add a virtual disk to a VM, you can designate it as “Allocate on demand.” If you set up a 100 GB disk for a VM, it might only use 10 GB initially for applications and other files, but as data grows the disk can grow dynamically to a maximum of 100 GB. The downside is that if you've set up several VMs with thin-provisioned disks and they all grow over time, and they will, then at some point you're going to run out of space.

As you can see, storage overprovisioning, when monitored carefully, can save you a lot of money on storage, especially when dealing with expensive SSD or NVMe storage arrays.
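Here is a rough sketch of the bookkeeping behind thin provisioning on a single datastore. The 100 GB provisioned and 10 GB used figures come from the quote above; the eight VMs, the 500 GB datastore, and all class and function names are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class ThinDisk:
    name: str
    provisioned_gb: float  # maximum size the disk may grow to
    used_gb: float         # space actually written so far


def datastore_summary(capacity_gb: float, disks: list[ThinDisk]) -> dict[str, float]:
    """Compare what was promised to the VMs with what the datastore really has."""
    provisioned = sum(d.provisioned_gb for d in disks)
    used = sum(d.used_gb for d in disks)
    return {
        "provisioned_gb": provisioned,
        "used_gb": used,
        "free_gb": capacity_gb - used,
        "overcommit_ratio": provisioned / capacity_gb,
        # Space you would be missing if every thin disk grew to its maximum.
        "shortfall_if_all_full_gb": max(0.0, provisioned - capacity_gb),
    }


# Hypothetical: eight VMs, each with a 100 GB thin disk using 10 GB, on a 500 GB datastore.
disks = [ThinDisk(f"vm{i:02d}", provisioned_gb=100, used_gb=10) for i in range(8)]
for key, value in datastore_summary(capacity_gb=500, disks=disks).items():
    print(f"{key}: {value}")
```

In this made-up case the datastore is overcommitted 1.6 times with plenty of free space today, but it would be 300 GB short if every disk filled up, which is exactly the risk the quote describes.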

Stop storage waste:

The point of overprovisioning (to me) is that each individual VM has more space – “on paper” – than it actually uses. However, if something happens that starts filling logs or whatever, the VM can transparently put many gigabytes on disk until someone corrects the issue (for example).

Overprovisioning allows you to use as much of your storage space as you safely can, leaving a buffer for events like the above. It's very unlikely all your VMs suddenly see a huge increase in space use, but one or two might.

Thick provisioning to me is kind of a waste for everything except specialized cases, like say databases where you may want to thick provision and “waste” space for increased performance. But then, I don't claim to be an authority, just my personal thoughts.

Could have many contexts:

It could be a host or computing node that has allocated computing resources such as CPU, memory, I/O, disk, or network that are unused at peak times.

In the context of cloud computing, Infrastructure-as-a-Service providers bill every month, but your costs can vary wildly. If you can scale back the number of processor cores, RAM, storage capacity or performance, how long the cloud server is actually turned on, or the availability of features (such as load balancing and auto-scaling), you can save a lot of money.

In the context of storage, expensive SAN is often wasted on “thick” provisioned disks, while thin-provisioned disks that all grow over time will eventually run you out of space. It is a tradeoff and you have to measure the risk in your environment and maintain a level of vigilance through disk space and performance monitoring.

Many meanings…

Overprovisioned has many meanings depending on the situation or resource you are talking about. The wasted-space definition is based on resources like memory and CPU.

In the world of data, overprovisioning is common with SANs. With compression and deduplication, a lot of space is saved, and an OS/VM may show more space used than the SAN has allocated to it in the data space (NFS/volume/datastore, etc.).

Example: 10 Windows machines show 40GB used each. They should show up on the SAN as 400GB, right? But with deduplication and compression it could show up somewhere around 150GB. So your machines all show 40GB of used space in the OS/VM, but the SAN only shows 150GB of used space. Thus you can have several datastores set up that far exceed the amount of space on the SAN in your environment, but still not use all your storage space. That is data overprovisioning. It is common and not a waste of space.
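The numbers in that example work out as follows. This is just a sketch of the arithmetic, with a made-up function name; the 150GB figure is the approximate value from the quote, not a measured one.

```python
def data_reduction(vm_count: int, used_gb_per_vm: float, physical_used_gb: float):
    """Return (logical GB, reduction ratio, GB saved) for deduplicated, compressed storage."""
    logical_gb = vm_count * used_gb_per_vm
    ratio = logical_gb / physical_used_gb
    saved_gb = logical_gb - physical_used_gb
    return logical_gb, ratio, saved_gb


logical, ratio, saved = data_reduction(vm_count=10, used_gb_per_vm=40, physical_used_gb=150)
print(f"Logical: {logical} GB, on the SAN: 150 GB, "
      f"reduction {ratio:.2f}:1, saved {saved} GB")
# Logical: 400 GB, on the SAN: 150 GB, reduction 2.67:1, saved 250 GB
```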

So, to answer the questions directly: that situation you asked about is simply called “provisioned.” You have 6TB provisioned. If you have a 20TB SAN and 10 datastores with 6TB each, giving you a total of 60TB provisioned, you are considered overprovisioned. But that does not mean you are using all of that space, just that you have allocated that much space to volumes/datastores.

You should not need to take action unless the OS/VMs need more space and you fully use the 6TB datastore.

The volume/datastore will not grow unless you tell it to. The same goes for the partitioned OS/VM disk space. The only time growth will happen without you doing anything is if you have an OS/VM with thin-provisioned or thick-provisioned lazy-zeroed disks and you use up more space on the local partition of the OS/VM. Then you will see the space used up on the SAN, but no growth of the volume/datastore.
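As a quick check of those figures, here is a short sketch (the function name is invented for the example) that totals the datastore sizes and compares them with the SAN's capacity.

```python
def provisioning_ratio(datastore_sizes_tb: list[float], san_capacity_tb: float) -> float:
    """Total space allocated to datastores divided by the SAN's physical capacity."""
    return sum(datastore_sizes_tb) / san_capacity_tb


datastores_tb = [6] * 10  # ten datastores of 6TB each, as in the quote
ratio = provisioning_ratio(datastores_tb, san_capacity_tb=20)
print(f"{sum(datastores_tb)}TB provisioned on a 20TB SAN, ratio {ratio:.1f}:1")
# 60TB provisioned on a 20TB SAN, ratio 3.0:1
print("overprovisioned" if ratio > 1 else "not overprovisioned")  # overprovisioned
```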

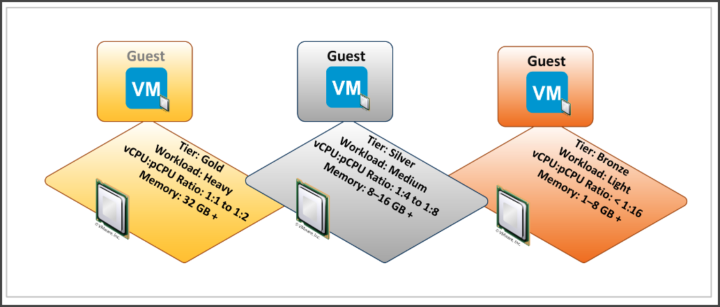

This screenshot is taken from an old VMware document. It shows one possible design scenario.

Wrap Up

Overprovisioning VMs isn't a topic that should be taken lightly. It requires an understanding of your needs, your budget, your monitoring capabilities, and lastly the context. Honestly, we could discuss this for hours.

The main issue with overprovisioning, especially of CPU and storage, is that when applications need to perform (as opposed to idle times), the server can run out of resources, so VMs fight for resources more and more until performance becomes unacceptable. If not solved, this could eventually even crash the hypervisor.
