StarWind Virtual SAN (VSAN) is a hyper-converged infrastructure (HCI) software solution that uses the internal disks of your hosts to create highly available shared storage. StarWind can create shared storage for virtual environments running VMware vSphere, Microsoft Hyper-V, KVM or Citrix Xen. In this post we'll go through some networking setup tips for a StarWind VSAN 2-node direct-connect configuration.

The main reason why software-based, highly available storage is better than hardware-only shared storage is resiliency and redundancy. StarWind VSAN is an HA solution, so there is local redundancy: with RAID 6, for example, 2 disks in a pool can fail.

There is also inter-node redundancy: one node can go down when you have 2-way replication, or 2 nodes with 3-way replication. So you can have disk failures or node failures, and your VMs can still be restarted on the remaining node. That is not the case with shared storage provided by a single hardware device: if that device fails, the whole cluster goes dark.

You can install StarWind on your own hardware, but the best option is perhaps to use certified hardware that comes pre-configured directly from StarWind. There are several advantages, such as support, monitoring, and an all-flash hardware option. Yes, no more spinning rust with StarWind, as the vendor offers hardware appliances pre-populated with SSD storage.

However, this post is meant to give you some advice on configuring StarWind yourself and maximizing the performance and resiliency of the networking. Compared with other solutions on the market, you are not forced to follow a vendor's HCL, as StarWind VSAN is flexible and uses whatever hardware you have available.

StarWind VSAN Networking Setup Tips

Networking in StarWind VSAN is configured in separate channels. You'll need separate NICs connected via separate network cables. The advantage of a direct connection is that high-speed NICs are cheaper than a high-speed switch, so you can get a 10Gb link between the 2 nodes at a lower cost.

1Gb or 10Gb?

Have a look at the following screenshot from a StarWind blog post, which shows approximate IOPS against networking requirements. You'll need to know your own IOPS requirements to pick the right link speed.

For 2-node setups, which are the most common for ROBO, it's best to use direct connect to keep the cost down.

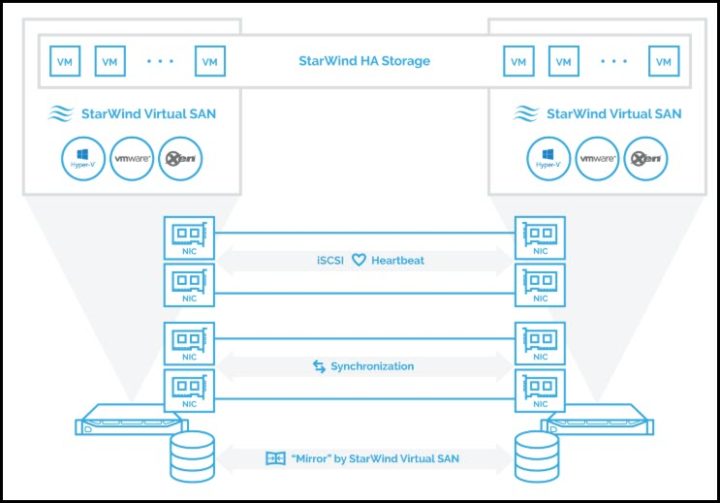

The image below shows the SAN connections. LAN connections, internal cluster communication, and any auxiliary connections have to be configured on separate network equipment or otherwise be separated from the Synchronization/iSCSI traffic. Networking inside the cluster should be configured according to the hypervisor vendor's recommendations and best practices.

- Do not run the iSCSI/Heartbeat and Synchronization channels over the same physical link.

- Set MTU to 9000 (jumbo frames) on the VMkernel ports for the iSCSI and Synchronization channels.

- Set MTU to 9000 at the vSwitch level as well.

- Shielded cabling (e.g., Cat 6a or higher) has to be used for all network links intended for StarWind Virtual SAN traffic. Cat. 5e cables are not recommended.

- StarWind Virtual SAN does not have specific requirements for 10/40/56/100 GbE cabling, but check with your networking vendor.

- In hyper-converged configurations, the recommended MPIO mode is Failover Only or Fixed Path.

- To minimize the number of network links in the Virtual SAN cluster, the heartbeat can be configured on the same network cards as the iSCSI traffic. Heartbeat is only activated if the synchronization channel has failed and, therefore, it cannot affect performance.
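The link-separation and MTU rules from the list above can be turned into a small sanity check that you run against your own plan before touching the hosts. This is only a sketch: the `Channel` class, the `check_plan` function and the `vmnic` names are made up for illustration and are not part of any StarWind or VMware tooling. The payload helper assumes plain IPv4 without header options.

```python
from dataclasses import dataclass

JUMBO_MTU = 9000  # value recommended in the list above


@dataclass(frozen=True)
class Channel:
    role: str          # "sync", "iscsi" or "heartbeat"
    nic: str           # physical uplink, e.g. "vmnic2" (hypothetical name)
    vmk_mtu: int       # MTU configured on the VMkernel port
    vswitch_mtu: int   # MTU configured on the vSwitch


def check_plan(channels):
    """Return a list of human-readable problems; an empty list means the plan passes."""
    problems = []
    sync_nics = {c.nic for c in channels if c.role == "sync"}
    for c in channels:
        # iSCSI/Heartbeat must not share a physical link with Synchronization.
        if c.role != "sync" and c.nic in sync_nics:
            problems.append(f"{c.role} shares physical link {c.nic} with sync")
        # Jumbo frames are expected on both the VMkernel port and the vSwitch.
        if c.role in ("sync", "iscsi"):
            if c.vmk_mtu != JUMBO_MTU:
                problems.append(f"{c.role} on {c.nic}: VMkernel MTU is {c.vmk_mtu}")
            if c.vswitch_mtu != JUMBO_MTU:
                problems.append(f"{c.role} on {c.nic}: vSwitch MTU is {c.vswitch_mtu}")
    return problems


def max_icmp_payload(mtu):
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - 20 - 8  # 20-byte IPv4 header (no options) + 8-byte ICMP header
```

A plan with Synchronization on one uplink and iSCSI plus Heartbeat on another, all at MTU 9000, returns an empty list. `max_icmp_payload(9000)` gives the payload size to use when you ping across the link with fragmentation disabled to confirm that jumbo frames really work end to end.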

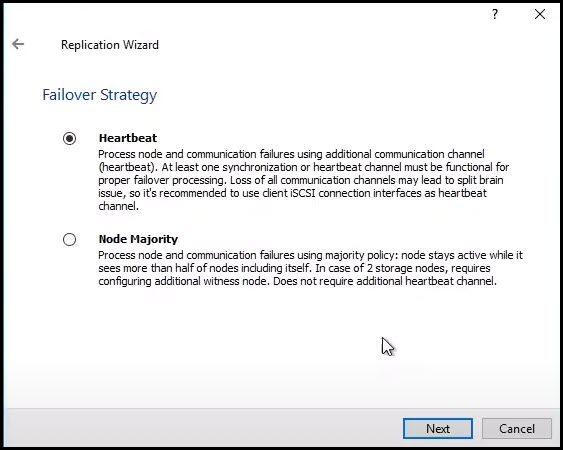

Heartbeat channel recommendations

By configuring heartbeat, you avoid the so-called “split-brain” situation, in which the HA cluster nodes are unable to synchronize but continue to accept write commands from the initiators. With StarWind Heartbeat technology, if the synchronization channel fails, StarWind attempts to ping the partner nodes using the provided heartbeat links. If the partner nodes do not respond, StarWind assumes that they are offline. In this case, StarWind marks the other nodes as not synchronized, and all HA devices on the node flush the write cache to disk to preserve data integrity in case the node goes out of service unexpectedly.

If the heartbeat ping is successful, StarWind blocks the nodes with the lower priority until the synchronization channels are re-established. This is done by designating node priorities. These priorities are used only in case of a synchronization channel failure and are configured automatically during the HA device creation.
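The behaviour described in the two paragraphs above boils down to a small decision table. The sketch below is a simplified model of that description for one node with one partner, not StarWind's actual code. The names `Action` and `on_sync_check` are hypothetical, and it assumes that a larger number means a higher priority and that the two nodes never share the same priority.

```python
from enum import Enum


class Action(Enum):
    CONTINUE_NORMAL = "synchronization channel is healthy"
    MARK_PARTNER_NOT_SYNCED = "partner offline: mark it not synchronized, flush write cache"
    KEEP_SERVING = "partner alive, this node has higher priority: keep serving"
    BLOCK_SELF = "partner alive, this node has lower priority: block until sync returns"


def on_sync_check(sync_ok, heartbeat_ping_ok, my_priority, partner_priority):
    """Decide what a node does, following the heartbeat description above."""
    if sync_ok:
        # Heartbeat only matters once the synchronization channel has failed.
        return Action.CONTINUE_NORMAL
    if not heartbeat_ping_ok:
        # No answer on the heartbeat links: the partner is assumed offline.
        return Action.MARK_PARTNER_NOT_SYNCED
    # Both nodes are alive but cannot synchronize: priority decides who keeps serving.
    if my_priority > partner_priority:
        return Action.KEEP_SERVING
    return Action.BLOCK_SELF
```

The point of the last branch is that only one side keeps accepting writes while the synchronization channel is down, which is exactly what prevents split-brain.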

A network configuration overview

One of the questions some might have is: how does StarWind process reads?

Quote From StarWind:

- Hypervisor reads from local storage only. High-speed synchronization network links are used to replicate writes to the partner hypervisor nodes.

- The cache is local, so the performance gain is much better compared to the cache sitting behind a slow network.

- Hypervisor nodes do not interfere when accessing the LUN, which increases performance and eliminates unnecessary iSCSI lock overhead.
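To make the read and write paths from the quote above more concrete, here is a toy in-memory model. It is purely illustrative and assumes nothing about StarWind internals: the `Node` class and its counters are invented for this example. Reads are served from the local copy only, and every write is copied to each partner, which stands in for the synchronization link.

```python
class Node:
    """Toy model of one hypervisor node with a local copy of the HA device."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}            # local copy: block address -> data
        self.partners = []          # partner nodes reached over the sync link
        self.local_reads = 0        # reads served from local storage
        self.replicated_writes = 0  # writes pushed to partners

    def write(self, lba, data):
        # Write locally, then replicate the same block to every partner.
        self.blocks[lba] = data
        for partner in self.partners:
            partner.blocks[lba] = data
            self.replicated_writes += 1

    def read(self, lba):
        # Reads never leave the node: they come from the local copy.
        self.local_reads += 1
        return self.blocks[lba]
```

For example, after creating two nodes that list each other as partners, a write on the first node can be read back on the second one without any traffic between them at read time.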

StarWind Linux-based VSAN features

- Management – Web-based or thick-client management (on a Windows management workstation). Also via PowerShell

- Caching – RAM and Flash cache

- Virtual devices – ImageFile, VTL

- Replication – 2-way or 3-way replication, VTL replication to the cloud

- Hybrid Cloud – Yes

- Stretched Clustering – Yes

- Licensing – StarWind only (no Windows license needed to install it).

Final Words

StarWind VSAN is a very flexible software solution that can be configured not only for HCI, where local storage is used to create a shared storage pool. You can also use StarWind purely as a highly available storage solution to which your hypervisors connect remotely. The solution also has a free version, which we covered a while back. You can find further details on StarWind's website.

Check out the StarWind website.

More posts about StarWind on ESX Virtualization:

- StarWind VSAN Graceful Shutdown and PowerChute Configuration

- Free StarWind iSCSI accelerator download

- VMware ESXi Free and StarWind – Two node setup for remote offices

- VMware vSphere and HyperConverged 2-Node Scenario from StarWind – Step By Step

- StarWind Storage Gateway for Wasabi Released

- How To Create NVMe-Of Target With StarWind VSAN

- StarWind and Highly Available NFS

- StarWind VVOLS Support and details of integration with VMware vSphere

- StarWind VSAN on 3 ESXi Nodes detailed setup

- VMware VSAN Ready Nodes in StarWind HyperConverged Appliance

