NVMe SSDs are the fastest storage you'll find in consumer laptops, for example, but they also exist as PCIe add-in cards for desktops and servers. In the data center, these SSDs are much faster than SATA and SAS SSDs and will probably, sooner or later, replace traditional SATA/SAS devices. The storage industry today is chasing lower latency and higher performance, and that's where NVMe and NVMe over Fabrics (NVMe-oF) start to get interesting. What is NVMe over Fabrics (NVMe-oF)? That's the topic of today's post.

We'll start with NVMe and then move on to NVMe-oF. I think that order makes the core of the technology easier to understand: NVMe is about local storage, while NVMe-oF is about reaching that storage over a network. (Note that I'm simplifying a bit here for non-storage folks.)

NVMe is an interface specification, similar in purpose to SATA, but much faster because data passes through fewer layers on its way to the SSD. It's optimized for NAND flash (remember that SATA was designed for spinning disks).

NVMe devices communicate with the CPU directly over high-speed PCIe connections, so you don't need a separate storage controller (HBA). NVMe drives come in several form factors: add-in cards, U.2 and M.2.

These storage devices can achieve 1 million IOPS with a latency of around 3 microseconds and, most importantly, very low CPU usage. So they're not only much faster than SATA SSDs, they're also more efficient. To give you a rough idea, between SATA and high-end NVMe devices there can be an order-of-magnitude difference in performance: ten times more IOPS and ten times lower latency.
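The IOPS and latency figures above are linked by Little's law: the average number of I/Os in flight equals IOPS multiplied by latency. Here's a minimal Python sketch of that arithmetic. It assumes the device could actually hold the quoted 3 µs latency at full load, which is an assumption: datasheet latencies are usually measured at queue depth 1, and latency rises as the queue fills.

```python
def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's law: average I/Os in flight = throughput (IOPS) x latency (seconds)."""
    return iops * latency_s


def iops_for(queue_depth: float, latency_s: float) -> float:
    """Little's law rearranged: IOPS sustainable at a given queue depth and latency."""
    return queue_depth / latency_s


if __name__ == "__main__":
    # Figures quoted in the text: about 1 million IOPS at about 3 microseconds.
    in_flight = outstanding_ios(1_000_000, 3e-6)
    print(f"Average I/Os in flight: {in_flight:.1f}")

    # Same queue depth, ten times the latency (30 us): IOPS drop tenfold.
    print(f"IOPS at 30 us: {iops_for(in_flight, 30e-6):,.0f}")
```

The exact numbers matter less than the shape: at a fixed queue depth, IOPS scale inversely with latency, which is why "ten times lower latency" and "ten times more IOPS" tend to show up together.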

What is NVMe Over Fabrics (NVMe-oF)?

NVMe over Fabrics is a network protocol. If you follow our blog, you already know iSCSI, the protocol used to connect your ESXi hosts to remote storage. In the same way, with NVMe-oF a host communicates with a storage system over a network (we call it a “fabric”). The transport discussed here relies on Remote Direct Memory Access (RDMA), and there are several RDMA technologies: NVMe over Fabrics can use any of them, such as RoCE, iWARP or InfiniBand. (Fibre Channel is also supported as a fabric, and a TCP transport, NVMe/TCP, was added to the specification later.)

Compared to iSCSI, NVMe-oF has much lower latency: it adds only a few microseconds to reach the storage device on the other side of the network. That's what is really great about NVMe-oF and what sets it apart from the older iSCSI technology.

Because it's a protocol, you can also use it to access older SAN devices with SATA disks, but to get the best performance it makes more sense to pair it with a SAN built on NVMe storage. It's an emerging technology that is just getting started, but it's very promising. Basically, NVMe-oF gives your ESXi hosts faster access to your storage.

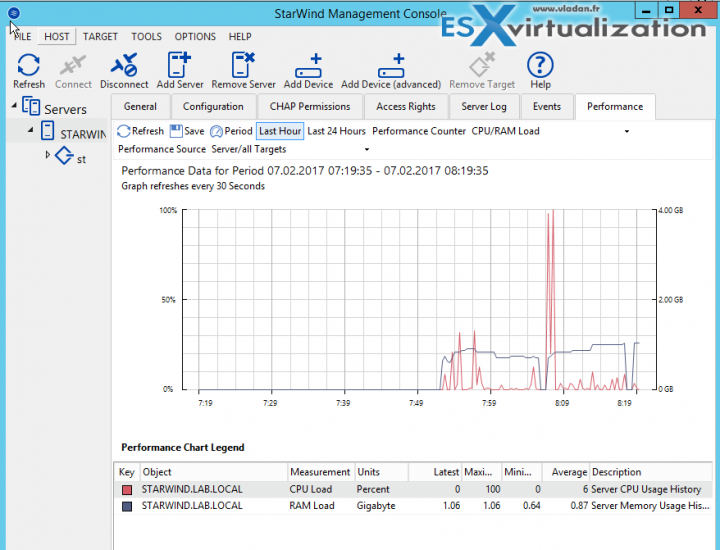

StarWind Software is working with this cool technology, one of the fastest storage technologies around. They're not the only storage vendor experimenting with NVMe-oF, but they want to bring this very fast software storage technology to the masses.

They also developed their own NVMe-oF initiator (for CentOS Linux). To test their software, they used a multicore server running Windows Server with a single Optane SSD as the target system.
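StarWind's initiator is their own software. For comparison, the stock Linux NVMe-oF initiator is the in-kernel `nvme-rdma` driver, driven from user space with the `nvme-cli` tool (`nvme discover` and `nvme connect`). The Python sketch below only builds and prints those command lines rather than running them; the target address and subsystem NQN are hypothetical placeholders, and 4420 is the IANA-assigned NVMe-oF port.

```python
import shlex
from typing import List

# Transports this sketch accepts; "tcp" needs a kernel and nvme-cli recent
# enough to include NVMe/TCP support.
SUPPORTED_TRANSPORTS = {"rdma", "tcp"}
NVMF_DEFAULT_PORT = "4420"  # IANA-assigned port for NVMe over Fabrics


def _check_transport(transport: str) -> None:
    if transport not in SUPPORTED_TRANSPORTS:
        raise ValueError(f"unsupported transport: {transport!r}")


def discover_cmd(transport: str, traddr: str,
                 trsvcid: str = NVMF_DEFAULT_PORT) -> List[str]:
    """argv asking a target's discovery controller which subsystems it exports."""
    _check_transport(transport)
    return ["nvme", "discover", f"--transport={transport}",
            f"--traddr={traddr}", f"--trsvcid={trsvcid}"]


def connect_cmd(transport: str, traddr: str, nqn: str,
                trsvcid: str = NVMF_DEFAULT_PORT) -> List[str]:
    """argv connecting to one subsystem, identified by its NVMe Qualified Name."""
    _check_transport(transport)
    if not nqn.startswith("nqn."):
        raise ValueError("an NVMe Qualified Name starts with 'nqn.'")
    return ["nvme", "connect", f"--transport={transport}",
            f"--traddr={traddr}", f"--trsvcid={trsvcid}", f"--nqn={nqn}"]


if __name__ == "__main__":
    # Hypothetical target address and subsystem NQN, for illustration only.
    target = "192.168.10.20"
    subsys = "nqn.2018-01.com.example:optane-target"
    print(shlex.join(discover_cmd("rdma", target)))
    print(shlex.join(connect_cmd("rdma", target, subsys)))
```

Once connected, the remote namespaces show up on the initiator as ordinary `/dev/nvmeXnY` block devices (you can list them with `nvme list`), just as a local NVMe SSD would.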

You can read their performance series here:

- Intel SPDK NVMe over Fabrics [NVMe-oF] Target Performance Tuning. Part 1: Jump into the fire©

- Intel SPDK NVMe-oF Target Performance Tuning. Part 2: Preparing a testing environment

First, let's talk about SPDK. This is new to me, and perhaps to ESX Virtualization readers as well. SPDK stands for Storage Performance Development Kit, and NVMe-oF for Non-Volatile Memory Express over Fabrics.

SPDK is an open-source set of tools and libraries for building high-performance, easily scalable storage applications. It achieves its performance by moving all the drivers into user space and running them in polled mode. Because the drivers no longer rely on interrupts, they avoid kernel context switches and eliminate interrupt-handling overhead, which makes them more efficient.
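To make "polled mode" concrete, here is a toy Python model (not SPDK's API) of how an NVMe host can reap completions without interrupts. In NVMe, the device writes completion entries into a ring buffer and inverts a phase tag bit each time it wraps around; the host just re-reads the next slot and consumes it once the phase bit matches the value it expects. The class and method names are hypothetical, and a real driver reads device-written memory rather than a Python list.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CqEntry:
    command_id: int
    phase: int  # phase tag bit written by the device along with the entry


class CompletionQueue:
    """Toy NVMe-style completion queue that the host polls instead of waiting for interrupts."""

    def __init__(self, depth: int) -> None:
        self.depth = depth
        # Queue memory starts zeroed, so every slot begins with phase 0.
        self.slots: List[CqEntry] = [CqEntry(-1, 0) for _ in range(depth)]
        self.device_tail = 0
        self.device_phase = 1    # the device writes phase 1 on its first pass
        self.head = 0
        self.expected_phase = 1  # the host expects phase 1 on its first pass
        self.unreaped = 0

    def device_complete(self, command_id: int) -> None:
        """Simulate the device posting a completion entry (no interrupt is raised)."""
        if self.unreaped == self.depth:
            raise RuntimeError("completion queue full")
        self.slots[self.device_tail] = CqEntry(command_id, self.device_phase)
        self.unreaped += 1
        self.device_tail += 1
        if self.device_tail == self.depth:  # wrap around and invert the phase tag
            self.device_tail = 0
            self.device_phase ^= 1

    def poll(self, max_completions: int = 32) -> List[int]:
        """Reap whatever completions are ready right now; never blocks or sleeps."""
        done: List[int] = []
        while len(done) < max_completions:
            entry = self.slots[self.head]
            if entry.phase != self.expected_phase:
                break  # slot not written yet in this pass: nothing more to reap
            done.append(entry.command_id)
            self.unreaped -= 1
            self.head += 1
            if self.head == self.depth:  # host wraps too and flips its expectation
                self.head = 0
                self.expected_phase ^= 1
        # A real driver would now write the new head to the CQ head doorbell.
        return done


if __name__ == "__main__":
    cq = CompletionQueue(depth=4)
    for cid in (1, 2, 3):
        cq.device_complete(cid)
    print(cq.poll())  # the three completions posted above
    print(cq.poll())  # nothing new since the last poll
```

In SPDK, applications call the NVMe driver's `spdk_nvme_qpair_process_completions()` repeatedly from their own threads in much the same spirit: a CPU core is spent busy-polling, and in return there are no interrupts or kernel context switches on the I/O path.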

They built a demo testbed.

StarWind's initiator ran on CentOS on a separate server. When they tested how far they could push their initiator, they were able to drive 4 Optane SSDs at up to ~1.9M IOPS of 4K random writes.
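To put ~1.9M IOPS in perspective, it helps to convert it into bandwidth. A quick Python sketch, assuming "4K" means 4,096-byte I/Os and ignoring protocol overhead on the wire; the per-drive figure additionally assumes the load was spread evenly across the 4 SSDs.

```python
def iops_to_bandwidth(iops: float, io_size_bytes: int) -> dict:
    """Convert an IOPS figure at a fixed I/O size into payload bandwidth."""
    bytes_per_s = iops * io_size_bytes
    return {
        "GB/s": bytes_per_s / 1e9,        # decimal gigabytes per second
        "GiB/s": bytes_per_s / 2**30,     # binary gibibytes per second
        "Gbit/s": bytes_per_s * 8 / 1e9,  # payload the network has to carry
    }


if __name__ == "__main__":
    total_iops = 1_900_000  # ~1.9M 4K random-write IOPS reported in the text
    drives = 4              # Optane SSDs in that test
    # Assumes an even split across drives, which the text does not state.
    print(f"Per drive: {total_iops / drives:,.0f} IOPS")
    for unit, value in iops_to_bandwidth(total_iops, 4096).items():
        print(f"{unit}: {value:.2f}")
```

That works out to roughly 62 Gbit/s of payload alone, which shows why, at these rates, the fabric has to be fast too, not just the SSDs.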

More about StarWind?

Did you know that StarWind also makes a storage appliance? You can check my article where I report on it. The appliance has a ‘shared-nothing’ architecture with no shared backplane, and it can tolerate 4 disk failures in the group without downtime.

It uses distributed RAID61, where other vendors use only RAID6 (which tolerates 2 disk failures). It can both scale up and scale out: you scale up by adding individual disks, flash modules or JBODs, and you scale out by adding ready-made controller nodes.
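For a rough idea of what the extra protection costs in capacity, here is a Python sketch of the textbook RAID 61 layout: one RAID6 set mirrored onto a second, identical RAID6 set. StarWind's distributed RAID61 may lay data out differently, so treat this as an approximation under that assumption, not their implementation; the disk count and size are hypothetical.

```python
def raid6_usable(disks: int, disk_tb: float) -> float:
    """RAID6 keeps two disks' worth of parity, so usable capacity = (n - 2) disks."""
    if disks < 4:
        raise ValueError("RAID6 needs at least 4 disks")
    return (disks - 2) * disk_tb


def raid61_usable(disks_per_side: int, disk_tb: float) -> float:
    """Textbook RAID61: the mirror holds a full second copy, so usable = one RAID6 side."""
    return raid6_usable(disks_per_side, disk_tb)


if __name__ == "__main__":
    n, size = 8, 4.0  # hypothetical: 8 disks of 4 TB on each side
    raw = 2 * n * size
    for name, usable in (("RAID6, 16 disks", raid6_usable(2 * n, size)),
                         ("RAID61, 2 x 8 disks", raid61_usable(n, size))):
        print(f"{name}: {usable:.0f} TB usable of {raw:.0f} TB raw ({usable / raw:.0%})")
```

The trade-off is visible in the output: RAID61 gives up a large share of raw capacity in exchange for surviving more disk failures than plain RAID6.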

If you're interested in VVols, note that StarWind VSAN supports them.

StarWind Free

- No Capacity Restrictions – you can use as much capacity for your mirrors as you like (previously restricted).

- No Scalability Restrictions – as many nodes as you like (previously limited to 2 nodes only).

- No Time Limit on License – The Free license is for life. After 30 days, the only management options you’ll have are PowerShell and the CLI.

- Production use – can be used in production, but if anything goes wrong, you will only find support through community forums.

- PowerShell Scripts – StarWind Virtual SAN Free ships with a set of ready-to-use PowerShell scripts that let users quickly deploy the Virtual SAN infrastructure.

- No StarWind Support – only community-based support.

- StarWind HA – The shared Logical Unit is basically “mirrored” between the hosts, maintaining data integrity and continuous operation even if one or more nodes fail. Every active host acts as a storage controller, and every Logical Unit has a duplicated or triplicated data back-end.

- No virtual tape library (VTL), unlike the paid version.

Download your free 30-day evaluation copy of StarWind VSAN. After 30 days, the evaluation turns into the Free version, with some limits.

More posts about StarWind on ESX Virtualization:

- StarWind Hybrid Cloud For Microsoft Azure

- What is iSER And Why You Should Care?

- StarWind Virtual SAN New Release with NUMA Support and Flash Cache Optimization

- VMware VSAN Ready Nodes in StarWind HyperConverged Appliance

- StarWind Virtual SAN Deployment Methods in VMware vSphere Environment

Stay tuned via RSS and our social media channels (Twitter, FB, YouTube).
