We continue to fill our VCP6.5-DCV Study guide page with one objective per day. Today's chapter is VCP6.5-DCV Objective 3.3 – Configure vSphere Storage Multipathing and Failover. Each chapter is a single blog post with a maximum of screenshots. Our previous study guide covering VCP6-DCV exam is still available.

I think the majority of new VCP candidates right now will go for the VCP6.5-DCV, even if the VCP6-DCV seems less demanding because it has fewer topics to know.

As you know, vSphere 6.5 has had its own certification exam since April. Not many guides are online so far, so we thought it would be a good idea to get one up.

Exam Price: $250 USD, there are 70 Questions (single and multiple answers), passing score 300, and you have 105 min to complete the test.

Check our VCP6.5-DCV Study Guide Page.

You can download your free copy via this link – Download Free VCP6.5-DCV Study Guide at Nakivo.

VCP6.5-DCV Objective 3.3 – Configure vSphere Storage Multipathing and Failover

- Explain common multi-pathing components

- Differentiate APD and PDL states

- Compare and contrast Active Optimized vs. Active non-Optimized port group states

- Explain features of Pluggable Storage Architecture (PSA)

- Understand the effects of a given claim rule on multipathing and failover

- Explain the function of claim rule elements:

- Vendor

- Model

- Device ID

- SATP

- PSP

- Change the Path Selection Policy using the UI

- Determine required claim rule elements to change the default PSP

- Determine the effect of changing PSP on multipathing and failover

- Determine the effects of changing SATP on relevant device behavior

- Configure/Manage Storage load balancing

- Differentiate available Storage load balancing options

- Differentiate available Storage multipathing policies

- Configure Storage Policies including vSphere Storage APIs for Storage Awareness

- Locate failover events in the UI

Explain common multi-pathing components

To keep its connection to storage, VMware ESXi supports multipathing, a technique that lets the host use more than one physical path to transfer data between the ESXi host and the storage device.

This is particularly handy when a component on your storage network fails (NIC, storage processor, switch, cable). VMware ESXi can switch to another physical path that does not use the failed component. Switching paths to avoid a failed component is called path failover.

In addition to path failover, multipathing provides load balancing. Load balancing is the process of distributing I/O loads across multiple physical paths. Load balancing reduces or removes a potential bottleneck.
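To make path failover concrete, here is a minimal Python sketch. It is only a model, not ESXi code: it assumes each path can be described by the hardware components it crosses, and the `Path` class, the component names, and `pick_path` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    name: str
    components: frozenset  # hardware the path depends on (adapter, switch, SP)


def pick_path(paths, failed):
    """Return the first path that touches no failed component, or None."""
    for path in paths:
        if not (path.components & failed):
            return path
    return None


# Two fully independent paths (hypothetical component names).
paths = [
    Path("path-A", frozenset({"hba0", "switch1", "spA"})),
    Path("path-B", frozenset({"hba1", "switch2", "spB"})),
]

print(pick_path(paths, failed=frozenset()).name)             # path-A
print(pick_path(paths, failed=frozenset({"switch1"})).name)  # path-B
```

If the two paths shared a component (for example the same switch), a failure of that component would leave `pick_path` with nothing to return, which is why redundant adapters, switches, and storage processors matter.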

For iSCSI, there are two cases, depending on whether you use software iSCSI or hardware iSCSI.

Failover with Software iSCSI – With software iSCSI, as shown on Host 2 of the Host-Based Path Failover illustration, you can use multiple NICs that provide failover and load balancing capabilities for iSCSI connections between your host and storage systems.

For this setup, because multipathing plug-ins do not have direct access to physical NICs on your host, you first need to connect each physical NIC to a separate VMkernel port. You then associate all VMkernel ports with the software iSCSI initiator using a port binding technique. As a result, each VMkernel port connected to a separate NIC becomes a different path that the iSCSI storage stack and its storage-aware multipathing plug-ins can use.

Failover with Hardware iSCSI – The ESXi host has two or more hardware iSCSI adapters connected. The storage system can be reached through one or more switches (preferably at least two). The storage array should also have two storage processors (SPs) so that each adapter can use a different path to reach the storage system.

Differentiate APD and PDL states

VMware High Availability (HA) was further enhanced with a function related to shared storage, called VM Component Protection (VMCP).

When VMCP is enabled, vSphere can detect datastore accessibility failures, APD (All Paths Down) or PDL (Permanent Device Loss), and then recover the affected virtual machines by restarting them on other hosts in the cluster that are not affected by the datastore failure. VMCP lets the admin choose how vSphere HA responds: it can simply raise an alarm, or it can restart the VMs on other hosts. The latter is probably what we’re looking for.

Limitations:

- VMCP does not support vSphere Fault Tolerance. If VMCP is enabled for a cluster using Fault Tolerance, the affected FT virtual machines will automatically receive overrides that disable VMCP.

- No VSAN support (if VMDKs are located on VSAN then they’re not protected by VMCP).

- No VVOLs support (same here)

- No RDM support (same here)
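Coming back to the difference between the two states: with PDL, the array tells the host (through SCSI sense codes) that the device is permanently gone, while with APD the host has simply lost every path to the device without being told whether the loss is permanent. The Python sketch below only illustrates that distinction; the `classify` function and its parameters are hypothetical and are not part of any VMware API.

```python
from enum import Enum


class DeviceState(Enum):
    OK = "ok"
    APD = "all paths down"
    PDL = "permanent device loss"


def classify(paths_up, array_reported_permanent_loss):
    """Classify a device from the host's point of view.

    paths_up: number of working paths to the device.
    array_reported_permanent_loss: True if the array signalled that the
    device is permanently unavailable (the PDL case).
    """
    if array_reported_permanent_loss:
        return DeviceState.PDL
    if paths_up == 0:
        return DeviceState.APD
    return DeviceState.OK


print(classify(2, False).name)  # OK
print(classify(0, False).name)  # APD
print(classify(0, True).name)   # PDL
```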

Compare and contrast Active Optimized vs. Active non-Optimized port group states

An active/unoptimized path is a path to the LUN through the storage processor that does not own the LUN. This situation occurs on arrays that are asymmetric active-active (ALUA).

- Optimized paths: the paths to the storage processor that owns the LUN.

- Unoptimized paths: the paths to the storage processor that does not own the LUN; that processor reaches the LUN via the interconnect between the processors.

The default PSP for devices claimed by VMW_SATP_ALUA is VMW_PSP_MRU, which selects an “active/optimized” path reported by VMW_SATP_ALUA, or an “active/unoptimized” path if there’s no “active/optimized” path. The system will revert to active/optimized when available.

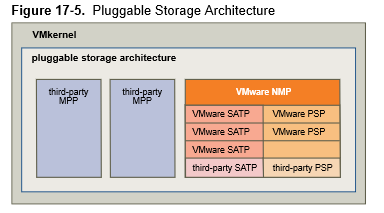
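The selection order described above (prefer an active/optimized path, fall back to active/unoptimized) can be sketched in a few lines of Python. This is only an illustration of the ordering, not the real VMW_PSP_MRU code; the function name and the path names are made up.

```python
def select_alua_path(paths):
    """Pick a path by ALUA state.

    paths: list of (name, state) tuples, where state is the ALUA state
    string reported for that path.
    """
    for wanted in ("active/optimized", "active/unoptimized"):
        for name, state in paths:
            if state == wanted:
                return name
    return None  # no usable path at all


paths = [
    ("vmhba1:C0:T0:L1", "active/unoptimized"),
    ("vmhba2:C0:T1:L1", "active/optimized"),
]
print(select_alua_path(paths))      # vmhba2:C0:T1:L1
print(select_alua_path(paths[:1]))  # vmhba1:C0:T0:L1
```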

Explain features of Pluggable Storage Architecture (PSA)

To manage storage multipathing, ESXi uses a collection of Storage APIs, also called the Pluggable Storage Architecture (PSA). The PSA is an open, modular framework that coordinates the simultaneous operation of multiple multipathing plug-ins (MPPs). The PSA allows 3rd party software developers to design their own load balancing techniques and failover mechanisms for a particular storage array, and insert their code directly into the ESXi storage I/O path.

The VMkernel multipathing plug-in that ESXi provides by default is the VMware Native Multipathing PlugIn (NMP). The NMP is an extensible module that manages sub plug-ins. There are two types of NMP sub plug-ins, Storage Array Type Plug-Ins (SATPs), and Path Selection Plug-Ins (PSPs). SATPs and PSPs can be built-in and provided by VMware, or can be provided by a third party.

- VMware NMP – default multipathing module (Native Multipathing Plugin). The NMP associates the set of physical paths with a particular storage device or LUN, but delegates the array-specific details to the SATP plugin. The choice of path for each incoming I/O, on the other hand, is handled by the PSP (Path Selection Plugin).

- VMware SATP – Storage Array Type Plugins run hand in hand with the NMP and are responsible for array-specific operations. ESXi offers a SATP for every type of array that VMware supports. It also provides default SATPs that support non-specific active-active and ALUA storage arrays, and the local SATP for direct-attached devices.

- VMware PSPs – Path Selection Plugins are sub-plugins of VMware NMP and they choose a physical path for IO requests.

The multipathing modules perform the following operations:

- Manage physical path claiming and unclaiming.

- Manage creation, registration, and deregistration of logical devices.

- Associate physical paths with logical devices.

- Support path failure detection and remediation.

- Process I/O requests to logical devices:

- Select an optimal physical path for the request.

- Depending on a storage device, perform specific actions necessary to handle path failures and I/O command retries.

- Support management tasks, such as reset of logical devices.

Understand the effects of a given claim rule on multipathing and failover

When the ESXi host starts or a storage adapter is rescanned (you can also trigger a rescan manually), the host discovers all physical paths to the storage devices available to it. Based on a set of claim rules, the host then determines which multipathing plug-in (MPP) should claim the paths to a particular storage device.

By default, the ESXi host re-evaluates its paths every 5 minutes so that any unclaimed paths are claimed by the appropriate MPP.

The claim rules are numbered. For each physical path, the host runs through the claim rules starting with the lowest number first. The attributes of the physical path are compared to the path specification in the claim rule. If there is a match, the host assigns the MPP specified in the claim rule to manage the physical path. This continues until all physical paths are claimed by corresponding MPPs, either third-party multipathing plug-ins or the native multipathing plug-in (NMP).
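The "lowest number first, first match wins" walk through the claim rules can be sketched as follows. This is a simplified Python model, not how ESXi stores or evaluates rules internally; the `ClaimRule` class, the rule numbers, and the vendor, model, and plug-in names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ClaimRule:
    number: int
    plugin: str  # MPP that claims matching paths
    match: dict = field(default_factory=dict)  # empty dict matches any path


def claim(path_attrs, rules):
    """Return the plug-in of the lowest-numbered rule that matches the path."""
    for rule in sorted(rules, key=lambda r: r.number):
        if all(path_attrs.get(k) == v for k, v in rule.match.items()):
            return rule.plugin
    return None


rules = [
    ClaimRule(65535, "NMP"),  # catch-all, evaluated last
    ClaimRule(200, "THIRD_PARTY_MPP", {"vendor": "ACME", "model": "FastArray"}),
]

print(claim({"vendor": "ACME", "model": "FastArray"}, rules))  # THIRD_PARTY_MPP
print(claim({"vendor": "OTHER", "model": "X"}, rules))         # NMP
```

Because evaluation stops at the first match, a more specific rule only takes effect if its number is lower than that of any broader rule that would also match the path.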

For the paths managed by the NMP module, the second set of claim rules is applied. These rules determine which Storage Array Type Plug-In (SATP) should be used to manage the paths for a specific array type, and which Path Selection Plug-In (PSP) is to be used for each storage device.

Use the vSphere Web Client to view which SATP and PSP the host is using for a specific storage device and the status of all available paths for this storage device. If needed, you can change the default VMware PSP using the client. To change the default SATP, you need to modify claim rules using the vSphere CLI.

This objective, VCP6.5-DCV Objective 3.3 – Configure vSphere Storage Multipathing and Failover, is quite important, so make sure you really understand how multipathing works under the hood. Knowing claim rules is a must.

Explain the function of claim rule elements

Claim rules indicate whether the NMP multipathing plug-in or a third-party MPP manages a given physical path.

List the multipathing claim rules by running the esxcli command:

esxcli --server=server_name storage core claimrule list --claimrule-class=MP

- Vendor – Indicate the vendor of the paths to use in this operation. (-V)

- Model – Indicate the model of the paths to use in this operation. (-M)

- Device ID – Indicate the device UID to use for this operation. (-d)

- SATP – The SATP for which a new rule will be added. (-s)

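- PSP – The default PSP to set for the SATP claim rule (-P with esxcli storage nmp satp rule add). With esxcli storage core claimrule add, -P instead names the PSA plug-in that claims the path, and there it is a required element.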

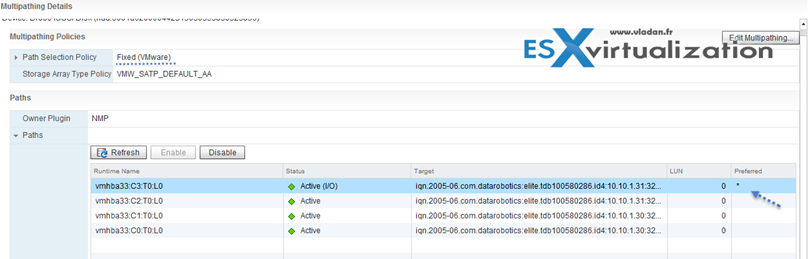
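To see how these elements fit together on a command line, the small Python helper below assembles the arguments for `esxcli storage nmp satp rule add` from the short flags listed above. It only builds the argument list and never runs anything. The helper name and the vendor and model values are hypothetical, and the check that a device rule cannot be combined with vendor/model is an assumption based on my reading of the esxcli help text.

```python
def satp_rule_add_args(satp, vendor=None, model=None, device=None, psp=None):
    """Build (but do not run) an 'esxcli storage nmp satp rule add' command."""
    # Assumption: device rules are mutually exclusive with vendor/model rules.
    if device is not None and (vendor is not None or model is not None):
        raise ValueError("use either device or vendor/model, not both")
    args = ["esxcli", "storage", "nmp", "satp", "rule", "add", "-s", satp]
    for flag, value in (("-V", vendor), ("-M", model), ("-d", device), ("-P", psp)):
        if value is not None:
            args += [flag, value]
    return args


cmd = satp_rule_add_args(
    "VMW_SATP_ALUA", vendor="ACME", model="FastArray", psp="VMW_PSP_RR"
)
print(" ".join(cmd))
# esxcli storage nmp satp rule add -s VMW_SATP_ALUA -V ACME -M FastArray -P VMW_PSP_RR
```

Check the result against the command's built-in help on your own host before running it, since a SATP rule affects every device that matches it.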

Change the Path Selection Policy using the UI

See “Differentiate available Storage multipathing policies” chapter, for visuals.

Determine required claim rule elements to change the default PSP

Use the esxcli command to list available multipathing claim rules.

Claim rules indicate which multipathing plug-in, the NMP or any third-party MPP, manages a given physical path. Each claim rule identifies a set of paths based on the parameters described earlier on this page.

Run this command:

esxcli storage core claimrule list --claimrule-class=MP

(there is a double dash in front of “claimrule”).


Determine the effect of changing PSP on multipathing and failover

The NMP SATP claim rules specify which SATP should manage a particular storage device. Usually, you do not need to modify the NMP SATP rules. If you need to do so, use the esxcli commands to add a rule to the list of claim rules for the specified SATP.

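The PSP decides which physical path carries each I/O request, so changing it changes both how the load is spread and what happens after a failure. For example, Fixed returns to the preferred path once it is available again, MRU stays on the path it failed over to, and Round Robin rotates across the active paths. The SATP still monitors the health of each physical path and handles the failover itself; if you change the SATP for an array, its default PSP may change as well, which can produce unexpected failover results.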

Determine the effects of changing SATP on relevant device behavior

VMware provides a SATP for every type of array on the HCL. The SATP monitors the health of each physical path and can respond to error messages from the storage array to handle path failover. If you change the SATP for an array, it may change the PSP which might create unexpected failover results.

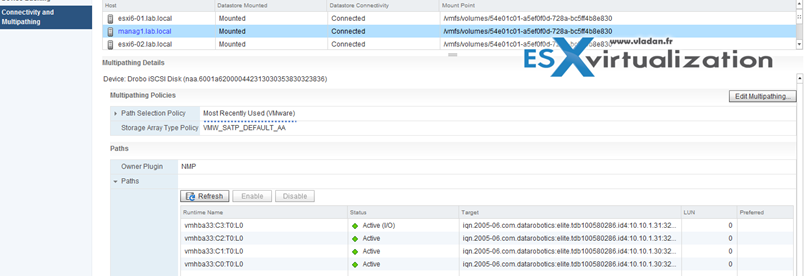

Configure/Manage Storage load balancing

The goal of a load balancing policy is to give an equal “chance” to each storage processor and host server path by distributing the I/O requests equally. Load balancing lets you optimize response time, IOPS, or MB/s for VM performance.

To get started, if you’re using block storage – check the Storage > Datastore > Configure > Connectivity and Multipathing > Edit Settings.

Load balancing is the process of spreading server I/O requests across all available SPs and their associated host server paths. The goal is to optimize performance in terms of throughput (I/O per second, megabytes per second, or response times).

Differentiate available Storage load balancing options

Make sure read/write caching is enabled.

SAN storage arrays require continual redesign and tuning to ensure that I/O is load balanced across all storage array paths. To meet this requirement, distribute the paths to the LUNs among all the SPs to provide optimal load balancing. Close monitoring indicates when it is necessary to rebalance the LUN distribution.

Tuning statically balanced storage arrays is a matter of monitoring the specific performance statistics (such as I/O operations per second, blocks per second, and response time) and distributing the LUN workload to spread it across all the SPs.

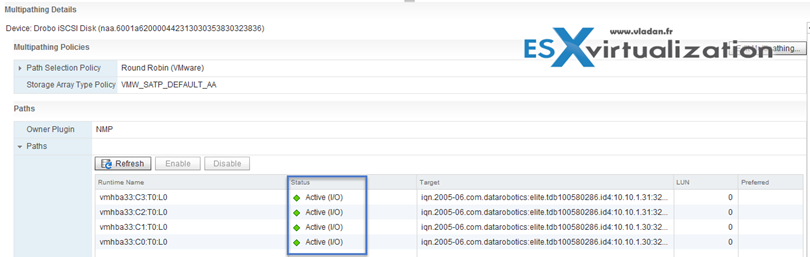
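As a rough illustration of what "monitoring and rebalancing the LUN distribution" means, the Python sketch below sums the I/O load per storage processor and reports how uneven it is. The LUN names, SP names, and IOPS figures are invented for the example; in practice the numbers would come from your array or from performance monitoring.

```python
from collections import defaultdict


def load_per_sp(luns):
    """Sum the IOPS of all LUNs owned by each storage processor."""
    totals = defaultdict(int)
    for lun in luns:
        totals[lun["sp"]] += lun["iops"]
    return dict(totals)


def imbalance(totals):
    """Ratio of the busiest SP to the least busy one (1.0 means even)."""
    lowest = min(totals.values())
    if lowest == 0:
        return float("inf")
    return max(totals.values()) / lowest


luns = [
    {"name": "lun0", "sp": "SPA", "iops": 4000},
    {"name": "lun1", "sp": "SPA", "iops": 3000},
    {"name": "lun2", "sp": "SPB", "iops": 1000},
]
print(load_per_sp(luns), imbalance(load_per_sp(luns)))
# {'SPA': 7000, 'SPB': 1000} 7.0

luns[1]["sp"] = "SPB"  # move ownership of lun1 to the other SP
print(load_per_sp(luns), imbalance(load_per_sp(luns)))
# {'SPA': 4000, 'SPB': 4000} 1.0
```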

Differentiate available Storage multipathing policies

You can select different path selection policy from the default ones, or if you have installed a third-party product which has added its own PSP:

- Fixed – (VMW_PSP_FIXED) The host uses the designated preferred path if one is configured. If not, it uses the first working path discovered. The preferred path needs to be configured manually.

- Most Recently Used – (VMW_PSP_MRU) The host selects the path that it used most recently. When the path becomes unavailable, the host selects an alternative path. The host does not revert back to the original path when that path becomes available again. There is no preferred path setting with the MRU policy. MRU is the default policy for most active-passive arrays.

- Round Robin (RR) – VMW_PSP_RR – The host uses an automatic path selection algorithm rotating through all active paths when connecting to active-passive arrays, or through all available paths when connecting to active-active arrays. RR is the default for a number of arrays and can be used with both active-active and active-passive arrays to implement load balancing across paths for different LUNs.
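The behavioural difference between the three policies is easiest to see in a small simulation. The Python classes below are a simplified model written for this guide, not VMware's implementation: the class names and the path names "A" and "B" are hypothetical, and the round robin model switches path on every request, which is a simplification.

```python
class Fixed:
    """Use the preferred path whenever it is alive, else the first live path."""

    def __init__(self, paths, preferred=None):
        self.paths = paths
        self.preferred = preferred

    def select(self, alive):
        if self.preferred in alive:
            return self.preferred
        return next((p for p in self.paths if p in alive), None)


class MostRecentlyUsed:
    """Stay on the current path until it fails; never fail back on its own."""

    def __init__(self, paths):
        self.paths = paths
        self.current = None

    def select(self, alive):
        if self.current not in alive:
            self.current = next((p for p in self.paths if p in alive), None)
        return self.current


class RoundRobin:
    """Rotate through the live paths, one request per path."""

    def __init__(self, paths):
        self.paths = paths
        self.index = 0

    def select(self, alive):
        for _ in range(len(self.paths)):
            path = self.paths[self.index % len(self.paths)]
            self.index += 1
            if path in alive:
                return path
        return None


paths = ["A", "B"]
fixed, mru, rr = Fixed(paths, preferred="A"), MostRecentlyUsed(paths), RoundRobin(paths)

# Path A is healthy, then fails, then comes back.
for alive in ({"A", "B"}, {"B"}, {"A", "B"}):
    print(sorted(alive), fixed.select(alive), mru.select(alive))
# ['A', 'B'] A A
# ['B'] B B
# ['A', 'B'] A B   (Fixed fails back to A, MRU stays on B)

print([rr.select({"A", "B"}) for _ in range(4)])  # ['A', 'B', 'A', 'B']
```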

Configure Storage Policies including vSphere Storage APIs for Storage Awareness

For entities represented by storage (VASA) providers, verify that an appropriate provider is registered. After the storage providers are registered, the VM Storage Policies interface becomes populated with information about datastores and data services that the providers represent.

Entities that use the storage provider include Virtual SAN, Virtual Volumes, and I/O filters. Depending on the type of the entity, some providers are self-registered. Other providers, for example, the Virtual Volumes storage provider, must be manually registered. After the storage providers are registered, they deliver the following data to the VM Storage Policies interface:

- Storage capabilities and characteristics for such datastores as Virtual Volumes and Virtual SAN

- I/O filter characteristics

Where?

vSphere Web Client > Select vCenter Server object > Configure TAB > Storage Providers

There you can check the storage providers which are registered with vCenter.

The list shows general information including the name of the storage provider, its URL and status, storage entities that the provider represents, and so on. To display more details, select a specific storage provider or its component from the list.

Locate failover events in the UI

You can check failover events on the Events tab of the Monitor view for the vCenter Server.

Check the Full VCP6.5-DCV Study Page for all documentation, tips, and tricks. Stay tuned for other VCP6.5-DCV topics -:).

You might also want to have a look at our vSphere 6.5 page where you'll find many tutorials, videos and lab articles.


Stay tuned through RSS, and social media channels (Twitter, FB, YouTube)