vSphere Storage Appliance (VSA) 5.1 uses the local disks of each host to create (simulate) shared storage spread across two or three nodes. This way, there is no need to buy an expensive SAN to take advantage of HA or vMotion.

UPDATE: The VSA is part of the vSphere Essentials Plus package (see the new vSphere licensing paper, PDF), which makes Essentials Plus much more attractive for SMBs.

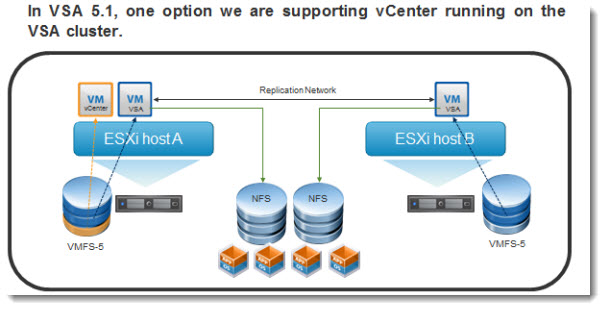

One vCenter can now manage multiple VSA clusters. Shared storage created by the VSA can now be resized via the UI to include any local storage that was left unused. vCenter can now be installed on local storage and then moved to the shared storage provided by VSA 5.1, so that it resides on the VSA cluster. This was one of the limitations of VSA 1.0, where the vCenter server had to be installed elsewhere (on a physical host, or in a VM on local storage only). But let me recap all the features one by one.

vSphere Storage Appliance 5.1 – So what's new?

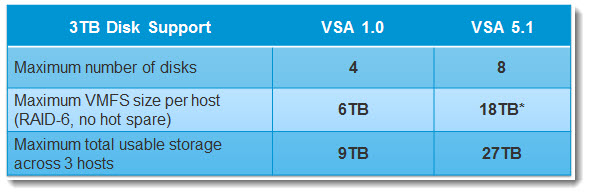

More Disk Drives per Node – the capacity handled by VSA 5.1 has increased to up to 27 TB across 3 hosts (previously only 9 TB). You can configure up to 12 internal disks per host (previously 8), plus up to 16 disks in an expansion chassis or JBOD, and RAID 5 and RAID 6 are now supported as well (previously only RAID 10). You can also increase capacity by adding or replacing drives.

Up to 12 disks can now be supported internally in an ESXi host, up from 8 in VSA 1.0. In addition, up to 16 disks can now be supported in an expansion chassis or JBOD (Just A Bunch Of Disks). This gives a maximum of 28 physical disks per host (12 + 16). However, there is a limit on the maximum size of the VMFS volume an ESXi host can support: 25 TB in VSA 5.1.

If we take a 3-node cluster with a 25 TB VMFS volume defined on each host, we get 75 TB of total storage. Because each appliance's datastore is mirrored to another node, that leaves 37.5 TB of usable storage across all hosts.
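The capacity arithmetic above can be written out as a small calculation. This is an illustrative sketch only: the disk counts, the 25 TB VMFS cap and the 2-way mirror come from the text, while the function names are hypothetical and do not correspond to any VSA tool.

```python
# Illustrative VSA 5.1 capacity arithmetic (hypothetical helper names).
INTERNAL_DISKS_MAX = 12    # disks inside the ESXi host
EXPANSION_DISKS_MAX = 16   # disks in an expansion chassis / JBOD
VMFS_MAX_TB = 25.0         # VMFS volume cap per host in VSA 5.1
MIRROR_COPIES = 2          # each datastore is mirrored to another node


def max_disks_per_host() -> int:
    """Internal disks plus expansion-chassis disks."""
    return INTERNAL_DISKS_MAX + EXPANSION_DISKS_MAX


def usable_capacity_tb(nodes: int, vmfs_per_host_tb: float) -> tuple[float, float]:
    """Return (raw_tb, usable_tb) for a 2- or 3-node VSA cluster.

    The per-host VMFS size is clamped to the 25 TB cap, and usable
    capacity is the raw total divided by the number of mirror copies.
    """
    if nodes not in (2, 3):
        raise ValueError("a VSA cluster has 2 or 3 nodes")
    per_host = min(vmfs_per_host_tb, VMFS_MAX_TB)
    raw = nodes * per_host
    return raw, raw / MIRROR_COPIES


if __name__ == "__main__":
    print("max disks per host:", max_disks_per_host())   # max disks per host: 28
    raw, usable = usable_capacity_tb(3, 25.0)
    print(f"raw: {raw} TB, usable: {usable} TB")          # raw: 75.0 TB, usable: 37.5 TB
```

In this simplified model, mirroring halves the raw capacity regardless of whether the cluster has two or three nodes.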

vCenter can now run directly on the VSA cluster – this was a major limitation of VSA 1.0, where the vCenter server had to “live” outside of the VSA: on local storage, on a SAN/NAS, or on a separate physical host. In VSA 5.1, vCenter can be installed on one part of the local storage > the VSA is then configured on the remaining local storage > Storage vMotion (if licensed) moves the vCenter VM to the VSA cluster. Afterwards, the local storage where vCenter lived before the move can be reclaimed with the new Resize function, which is available through the UI.

This is envisioned as a way to deploy the VSA when there is no external vCenter. First deploy the vCenter server, then deploy the VSA, then migrate the vCenter server to the shared storage, and finally reclaim the remaining local disk space for shared storage. This relies on the same mechanism used to add new storage to the VSA on the fly, covered in the resize section below. It is also a major part of the brownfield installation mechanism, that is, how we can deploy a VSA when there are already running VMs.

The only limitation concerns the tie-breaker: in a two-node VSA cluster, the tie-breaker code (the VSA Cluster Service) cannot run on the vCenter VM if that VM resides on the VSA cluster.

If the ESXi host presenting that shared storage fails for any reason, the vCenter VM also goes offline, so two of the cluster's three votes are lost at once and the whole cluster goes down.
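The vote arithmetic behind this limitation can be sketched as a simple majority check. This assumes a two-node cluster with three voters (two VSA nodes plus the tie-breaker) and a strict-majority rule; the voter names are hypothetical labels, not VSA identifiers, and this is not VMware's clustering code.

```python
# Majority-vote model of a two-node VSA cluster (illustrative sketch).
# Voters: the two VSA nodes plus the tie-breaker running alongside vCenter.
# The voter names are hypothetical labels.


def has_quorum(voters: set[str], failed: set[str]) -> bool:
    """The cluster stays up only while a strict majority of voters is alive."""
    alive = voters - failed
    return len(alive) * 2 > len(voters)


VOTERS = {"vsa-node-1", "vsa-node-2", "tie-breaker"}

# vCenter (and the tie-breaker) outside the VSA cluster:
# node 1 fails, 2 of 3 votes survive.
print(has_quorum(VOTERS, {"vsa-node-1"}))                 # True

# vCenter VM on the shared storage presented by node 1:
# node 1 fails and takes the tie-breaker with it, 1 of 3 votes survives.
print(has_quorum(VOTERS, {"vsa-node-1", "tie-breaker"}))  # False
```

A single host failure therefore costs one vote when vCenter lives elsewhere, but two votes when vCenter depends on that host, which is why the tie-breaker must stay off the VSA cluster.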

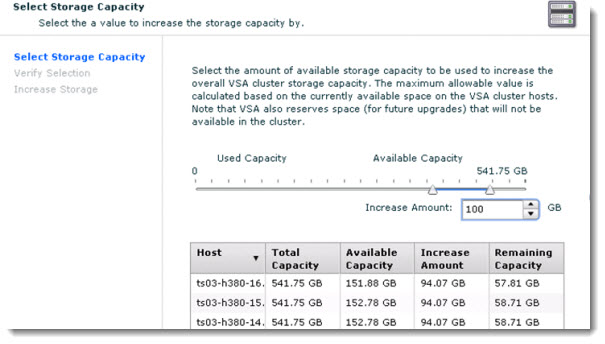

Resizing through the UI after deployment – VSA 5.1 supports increasing the storage capacity of the VSA cluster online. For existing (VSA 1.0) customers there are 3 options:

1. Convert RAID 10 to RAID 5/6.

2. Add more disks, destroy the current RAID and create a new one.

3. Add more disks and create a new RAID, keeping the data.

Once the new storage is ready and the VMFS-5 volume has been grown to match it, a new option in the UI can be used to grow the VSA 5.1 appliance and the shared NFS datastores.

Procedures 1 and 2 both destroy the on-disk data on the selected ESXi host. However, the data is preserved on the mirror replicas, so the work can be done one node at a time, syncing back with the built-in Replace Node procedure. The steps are: remove the node via maintenance mode, add the new storage, run Replace Node to put the node back in the cluster, and wait for the data to resynchronize; then rinse and repeat on all hosts. Finally, increase the VSA storage via the new feature in the UI.
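The rolling order described above can be expressed as a small planning sketch. The step strings are descriptive labels only (hypothetical, not VSA Manager API calls); the point is the ordering: each host is fully resynchronized before the next one is taken out, and the cluster-wide resize comes last.

```python
# Hypothetical planner for the rolling resize of procedures 1 and 2.
# Step names are descriptive labels, not VSA Manager API calls.


def rolling_resize_plan(hosts: list[str]) -> list[str]:
    """Return the ordered steps for a one-node-at-a-time resize.

    Only one node is ever out of the cluster, so each host's data stays
    available on its mirror replica while that host is rebuilt.
    """
    steps: list[str] = []
    for host in hosts:
        steps += [
            f"{host}: enter maintenance mode (remove node)",
            f"{host}: add disks / rebuild RAID",
            f"{host}: run Replace Node",
            f"{host}: wait for resynchronization to finish",
        ]
    steps.append("cluster: increase VSA storage via the UI")
    return steps


for step in rolling_resize_plan(["esxi-01", "esxi-02", "esxi-03"]):
    print(step)
```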

In procedure 3, customers have to extend VMFS-5 manually to use the new storage. Once the VMFS volume has been grown, increase the VSA storage via the new UI feature.

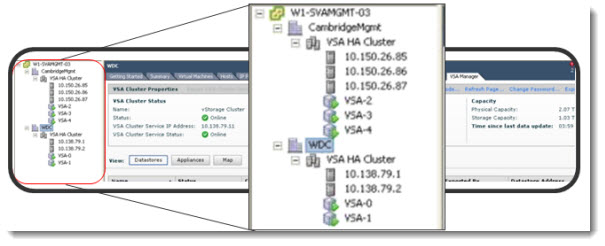

Multiple VSA Clusters can be managed by a single vCenter – this can be interesting for remote and branch offices (ROBO), which can all be managed from a single vCenter Server installation. vCenter Server can also reside on a different network subnet than the VSA cluster (a limitation in VSA 1.0).

Remote Install of VSA 5.1 – VSA 5.1 can be pre-deployed at the main office, shipped to the remote office, and then added back to vCenter via the “Reconfigure Network” workflow.

A remote office VSA can also be installed from the central office over the wire, without anyone having to travel to the remote site. An attended install at the remote office is of course still possible.

Vanilla Installation is no longer necessary – VSA 1.0 required a clean ESXi installation to deploy a 2- or 3-node VSA cluster. This is no longer the case. vCenter can be installed in a VM on a local datastore, along with other VMs, on one of the ESXi hosts. Once the VSA 5.1 installation has created the shared storage, those VMs can be migrated (Storage vMotion or cold migration) to the VSA's shared storage. After the VMs have been migrated, you can increase the VSA storage via the UI.

VSA 5.1 introduces an automated mechanism to allow an install to take place even when the hosts are already in production.

Performance improvements of VSA 5.1 – sync I/O and VM I/O are now better balanced, so the sync speed has increased. Contention that occurred when two mirrors sharing the same disks were syncing at the same time is eliminated by serializing those operations.
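The idea of serializing syncs that share disks can be illustrated with a generic scheduling sketch. VMware does not document how VSA 5.1 implements this, so the following is only an assumption-laden model: each resync job is a hypothetical (mirror, disk) pair, jobs on different disks may run in the same round, and jobs on the same disk are pushed into successive rounds.

```python
from collections import defaultdict


def schedule_syncs(jobs: list[tuple[str, str]]) -> list[list[tuple[str, str]]]:
    """Group mirror resync jobs into rounds (generic sketch, not VSA code).

    jobs: (mirror, disk) pairs. Jobs on different disks may share a round;
    jobs that share a disk are serialized into successive rounds.
    """
    queues: dict[str, list[str]] = defaultdict(list)
    for mirror, disk in jobs:
        queues[disk].append(mirror)

    rounds = []
    while any(queues.values()):
        # Take at most one pending job per disk for this round.
        rounds.append([(q.pop(0), disk) for disk, q in queues.items() if q])
    return rounds


print(schedule_syncs([("mirror-A", "disk-1"),
                      ("mirror-B", "disk-1"),
                      ("mirror-C", "disk-2")]))
# [[('mirror-A', 'disk-1'), ('mirror-C', 'disk-2')], [('mirror-B', 'disk-1')]]
```

Running mirror-A and mirror-B back to back, rather than in parallel, avoids the two syncs competing for the same spindles.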

Another improvement concerns interrupted resyncs: if a resync operation is interrupted, the process now resumes where it left off instead of restarting from the beginning (as was the case in VSA 1.0).
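VMware does not describe how VSA 5.1 tracks resync progress, so the following is only a generic sketch of checkpointed resync. It assumes progress is recorded per block in some persistent store, modeled here by a plain dict; the `Interrupted` exception and the `fail_at` parameter are hypothetical devices for simulating an interruption.

```python
from typing import Optional


class Interrupted(Exception):
    """Simulates a resync that is cut short (e.g. a node reboot)."""


def resync(source: list, target: list, checkpoint: dict,
           fail_at: Optional[int] = None) -> int:
    """Copy blocks from source to target, resuming from the checkpoint.

    Returns the number of blocks copied in this run.
    """
    start = checkpoint.get("next_block", 0)   # 0 on the very first run
    copied = 0
    for i in range(start, len(source)):
        if i == fail_at:
            raise Interrupted(f"interrupted at block {i}")
        target[i] = source[i]
        checkpoint["next_block"] = i + 1      # record progress after each block
        copied += 1
    return copied


src = [b"a", b"b", b"c", b"d"]
dst = [b""] * len(src)
ckpt: dict = {}
try:
    resync(src, dst, ckpt, fail_at=2)         # copies blocks 0-1, then fails
except Interrupted:
    pass
print(ckpt["next_block"])                     # 2
print(resync(src, dst, ckpt))                 # 2 (only blocks 2-3 are copied)
print(dst == src)                             # True
```

Without the checkpoint, the second run would start again at block 0, which is the VSA 1.0 behaviour described above.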

Other enhancements

– VSA 5.1 can run on vSphere 5.0 and 5.1

– Single Sign-On is supported

– Memory over-commitment is supported for VSA 5.1 running on ESXi 5.1

– IP addresses are checked for conflicts before entering maintenance mode

– The IP form is pre-populated with the current IP addresses

– There is no need to change all of the VSA's IP addresses; a subset can be changed on its own.

– VSA can run on IPv6 enabled vCenter Server
