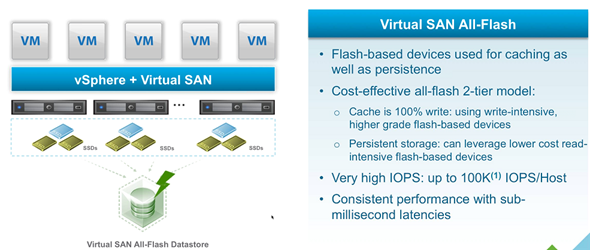

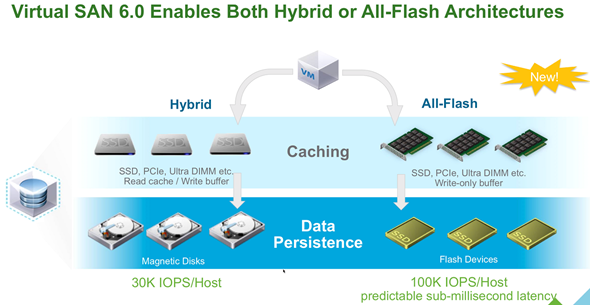

VMware vSphere 6 also brings VSAN 6.0, which delivers significant performance enhancements (twice as fast in a hybrid setup, or up to 5 times faster in an All-Flash setup!!) and new features. The one that really excites me is that VSAN can now be Flash-only (All-Flash), which means that spinning media is no longer necessary. It's still possible to use spinning media and configure a hybrid VSAN 6.0, but as flash media slowly replaces spinning media, the All-Flash version of VSAN makes sense.

In VSAN 5.5 only the hybrid approach was possible. That is still supported, but VSAN 6.0 additionally makes it possible to have two tiers of flash: for example, high-speed, low-latency PCIe SSDs used for caching (write buffer) on one tier, and standard, lower-performance SSDs used as persistent storage on the other.

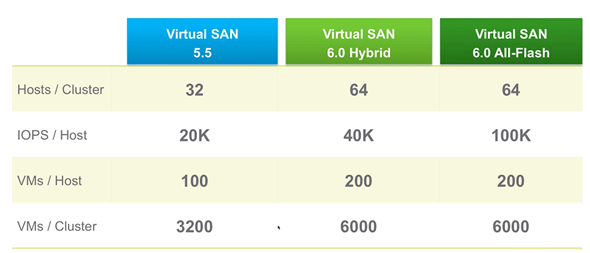

The All-Flash VSAN release delivers up to 5x better performance than the previous release and scales up to 64 nodes (2x more).

I'll get into the details in this post, but you might also want to check other posts about VMware vSphere 6:

- vSphere 6 Page

- vSphere 6 Features – New Config Maximums, Long Distance vMotion and FT for 4vCPUs

- vSphere 6 Features – vCenter Server 6 Details, (VCSA and Windows)

- vSphere 6 Features – vSphere Client (FAT and Web Client)

- vSphere 6 Features – VSAN 6.0 Technical Details – (this post)

- ESXi 6.0 Security and Password Complexity Changes

- How to install VMware VCSA

- vSphere 6 Features – Mark or Tag local disk as SSD disk

Note that all the screenshots come from a VMware presentation that I attended a few weeks before the launch. Take a seat and enjoy… -:)

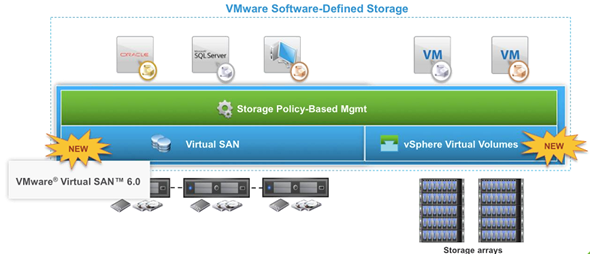

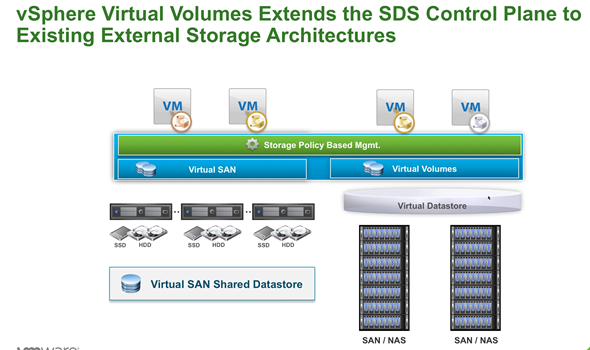

vSphere 6 Features – VSAN 6.0 and VVOLs

vSphere 6 brings VSAN and VVOLs as two major new features (or rather product functions).

VMware vSphere 6 Features – VSAN 6.0

- Faster: up to 5x faster than the previous release in All-Flash mode, and 2x faster in hybrid mode.

- Scales up to 64 nodes (hosts), which is also thanks to the vSphere 6 scalability improvements.

- Can run up to 200 VMs per host (previously 100 VMs).

- Virtual disk size up to 62 TB.

- High-performance VSAN snapshots and clones, with more snapshots and clones supported per VM (32 per VM).

- Rack awareness: tolerate rack failures with Fault Domains.

- JBOD support (direct-attached storage).

- Hardware-based checksum and encryption.

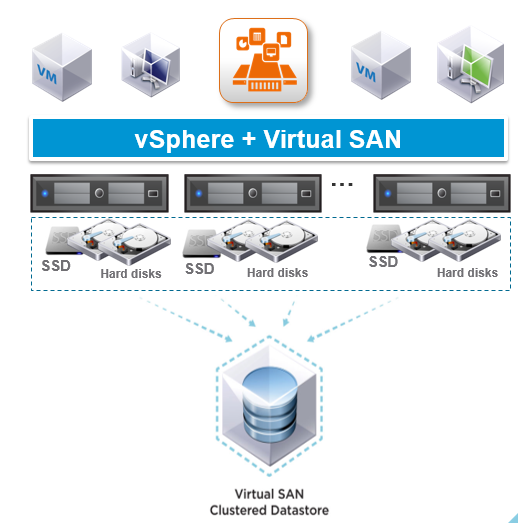

With these functions, VSAN is now ready for business-critical applications. VSAN runs on standard servers; it's software-defined storage built into vSphere. VSAN Hybrid delivers 2x more IOPS than before (up to 40,000 IOPS per host).

The All-flash VSAN 6.0 architecture…

VSAN Enterprise-Class Scale and Performance: a table showing the performance levels of version 5.5 compared to 6.0, with 6.0 split into All-Flash and Hybrid.

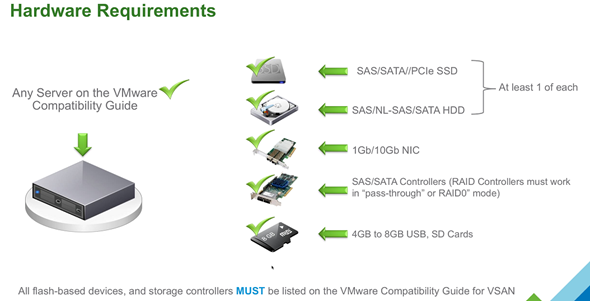

The VSAN HCL needs to be followed. This is nothing new, as VSAN 5.5 had the same requirements, especially for SAS/SATA controllers, which need a certain queue depth (at least 600).

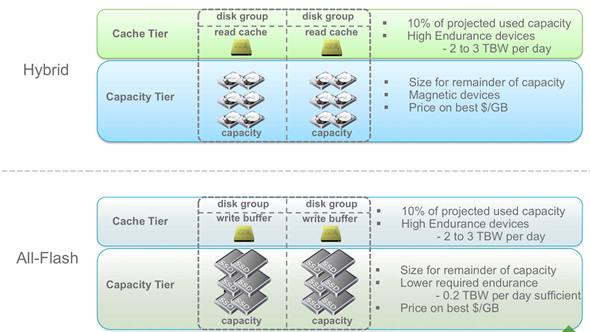

VSAN Hybrid Version: the flash disks are used in two ways:

- Non-volatile write buffer (30%): writes are acknowledged as soon as they enter the prepare stage on the flash-based devices, which reduces write latency.

- Read cache (70%): cache hits reduce latency, while a cache miss needs to retrieve the data from the magnetic disks.
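To put numbers on the 30/70 split, here is a minimal Python sketch. The function names, the 400 GB device and the latency figures are hypothetical and only for illustration; the effective-latency formula is the usual weighted average of hit and miss latencies, not a formula published by VMware.

```python
def split_hybrid_cache(cache_gb: float) -> dict:
    """Split a hybrid VSAN flash device: 30% write buffer, 70% read cache."""
    if cache_gb < 0:
        raise ValueError("cache_gb must be non-negative")
    return {
        "write_buffer_gb": cache_gb * 0.30,
        "read_cache_gb": cache_gb * 0.70,
    }


def effective_read_latency_ms(hit_ratio: float, flash_ms: float, disk_ms: float) -> float:
    """Weighted average: hits are served from flash, misses go to magnetic disk."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * flash_ms + (1.0 - hit_ratio) * disk_ms


if __name__ == "__main__":
    print(split_hybrid_cache(400))  # a hypothetical 400 GB flash device
    # Hypothetical latencies: 0.2 ms flash, 8 ms magnetic disk, 90% read-cache hits.
    print(round(effective_read_latency_ms(0.9, 0.2, 8.0), 2))
```

The point of the second function: every percent of read-cache misses costs a full magnetic-disk round trip, which is why the read cache gets the larger share.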

The better the hardware you have, the better the performance you get! The same goes for both versions of VSAN!

VSAN All-Flash Version

- Cache tier: high-performance, high-endurance flash devices listed on the HCL

- Capacity tier: low-endurance flash devices listed on the HCL

Networking

For networking, it's possible to use 1 Gb or 10 Gb NICs, but the All-Flash version requires a 10 Gb network.

Jumbo frames: using jumbo frames can reduce CPU overhead in large environments and can provide a nominal performance increase.

VSAN supports both VSS and VDS, but NetIOC requires VDS.
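The two networking rules above can be expressed as a small validation sketch in Python. The `VsanNetworkPlan` class and `check_network` function are hypothetical helpers, not part of any VMware tool; they only encode the rules stated here (All-Flash needs 10 Gb, NetIOC needs VDS).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VsanNetworkPlan:
    all_flash: bool
    nic_speed_gbps: int        # 1 or 10 in the VSAN 6.0 context
    switch_type: str           # "VSS" (standard) or "VDS" (distributed)
    use_netioc: bool = False   # Network I/O Control


def check_network(plan: VsanNetworkPlan) -> List[str]:
    """Return rule violations; an empty list means the plan passes these checks."""
    problems = []
    if plan.switch_type not in ("VSS", "VDS"):
        problems.append(f"unknown switch type {plan.switch_type!r}")
    if plan.all_flash and plan.nic_speed_gbps < 10:
        problems.append("All-Flash VSAN requires a 10 Gb network")
    if plan.use_netioc and plan.switch_type != "VDS":
        problems.append("NetIOC requires a vSphere Distributed Switch (VDS)")
    return problems


if __name__ == "__main__":
    # Hypothetical plan that breaks both rules.
    print(check_network(VsanNetworkPlan(all_flash=True, nic_speed_gbps=1,
                                        switch_type="VSS", use_netioc=True)))
```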

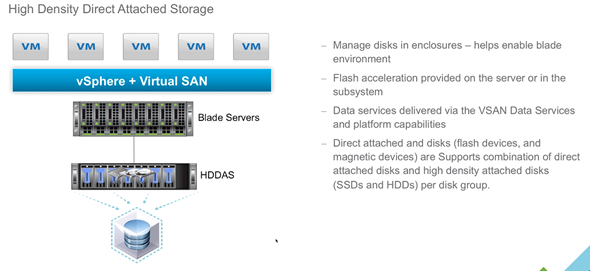

High density DAS support in vSphere 6

Some devices will be listed on the VMware HCL: JBODs are supported, with restrictions defined through the HCL.

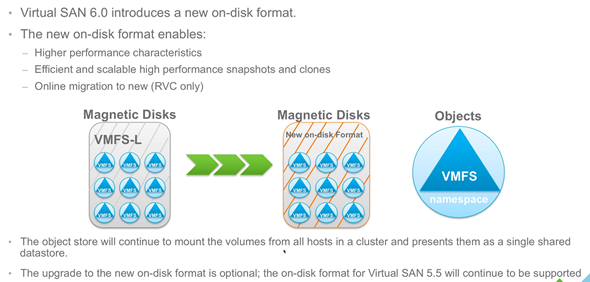

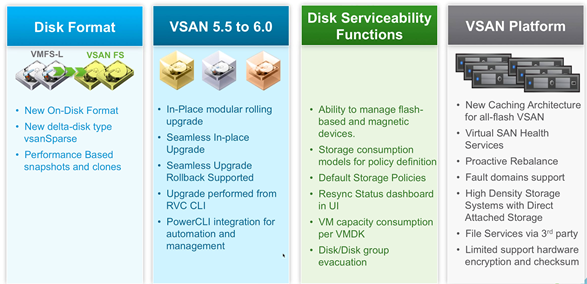

New On-Disk format


VSAN 6.0 introduces a new on-disk format.

There is also a new delta-disk type, vsanSparse. It's this disk format that delivers the performance gains for snapshots and clones, because the new vsanSparse delta disk is designed to deliver performance comparable to native SAN snapshots.

The vsanSparse disk format is more efficient thanks to the new on-disk format's write path and extended caching capabilities.

All disks in a vsanSparse disk chain must be vsanSparse (except the base disk).
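The disk-chain rule can be sketched as a simple check. This is a hypothetical Python helper, not VSAN code; it assumes a chain is represented as a list whose first entry is the base disk, with type labels like "flat" and "redoLog" chosen only for illustration.

```python
from typing import List


def is_valid_vsansparse_chain(chain: List[str]) -> bool:
    """chain[0] is the base disk; the remaining entries are delta-disk types.

    Rule from the text: in a vsanSparse chain, every disk except the base
    disk must be vsanSparse (no mixing with other delta types).
    """
    if not chain:
        return False
    deltas = chain[1:]
    if "vsanSparse" not in deltas:
        return True  # not a vsanSparse chain, so this rule does not apply
    return all(delta == "vsanSparse" for delta in deltas)


if __name__ == "__main__":
    print(is_valid_vsansparse_chain(["flat", "vsanSparse", "vsanSparse"]))
    print(is_valid_vsansparse_chain(["flat", "redoLog", "vsanSparse"]))
```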

Some drawbacks:

- It's not possible to create linked clones of a VM with vsanSparse snapshots on a non-VSAN datastore.

- If a VM has existing snapshots (based on redo logs), it will continue to get redo-log-based snapshots until the user consolidates and deletes all current snapshots.
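The two drawbacks can be summarized as decision rules. The sketch below is hypothetical Python that encodes only what is stated above; the parameter names and the "redoLog" label are assumptions, and it also assumes vsanSparse is only available once the disk groups use the new on-disk format.

```python
from typing import List


def next_snapshot_format(new_on_disk_format: bool, existing_formats: List[str]) -> str:
    """Delta type a new snapshot would get, per the rules in the text."""
    if not new_on_disk_format:
        return "redoLog"  # assumption: vsanSparse needs the new VSAN 6.0 on-disk format
    if "redoLog" in existing_formats:
        # Existing redo-log snapshots keep the VM on redo logs until they are
        # all consolidated and deleted.
        return "redoLog"
    return "vsanSparse"


def linked_clone_allowed(target_is_vsan: bool, source_formats: List[str]) -> bool:
    """Linked clones of a VM with vsanSparse snapshots can't go to a non-VSAN datastore."""
    return target_is_vsan or "vsanSparse" not in source_formats
```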

Upgrading from VSAN 5.5: an in-place, modular rolling upgrade

The upgrade is non-disruptive and in-place, and it also supports rollback. It is performed not in the GUI but through RVC (Ruby vSphere Console) and the CLI.

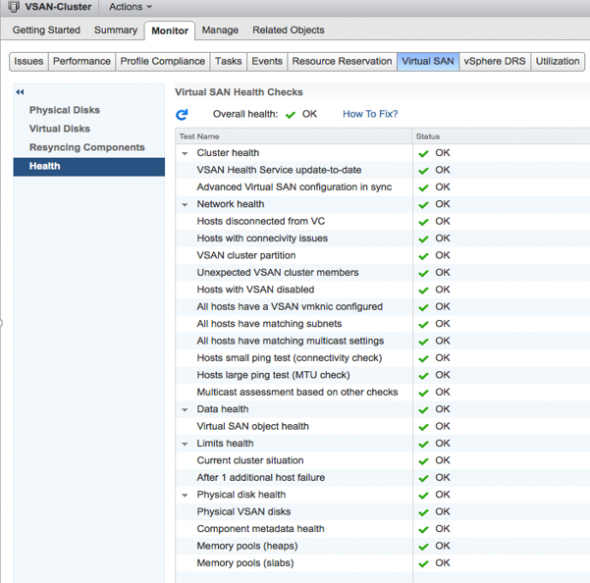

There are also health services for VSAN, which show green check marks (or not) for each of the VSAN services. An outline of all the new features in VSAN is here…

The whole VSAN architecture: All-Flash or Hybrid, where All-Flash requires a 10 Gb network…

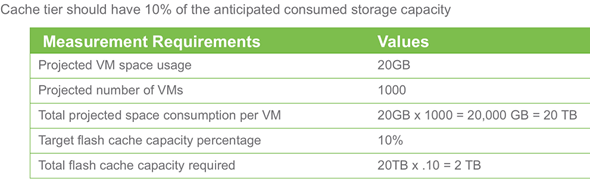

The Hybrid and All-Flash architectures. The All-Flash architecture can use cheaper SSDs (0.2 TBW/day) for the capacity tier and 2-3 TBW/day SSDs for the performance tier. The performance tier should be about 10% of the projected used capacity. For example, if you plan 6 TB per disk group, the performance tier should be about 600 GB.
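Here is the 10% rule of thumb as a small Python sketch, assuming sizes in GB and one cache device per disk group. The helper names are hypothetical, and the 0.15 ratio in the usage example only illustrates "configure more for write-heavy workloads"; it is not a VMware figure.

```python
from typing import List


def cache_tier_gb(projected_used_gb: float, ratio: float = 0.10) -> float:
    """Rule of thumb from the text: cache tier ~ 10% of projected used capacity."""
    if projected_used_gb < 0 or not 0 < ratio < 1:
        raise ValueError("invalid capacity or ratio")
    return projected_used_gb * ratio


def plan_cache_per_disk_group(used_gb_per_group: List[float], ratio: float = 0.10) -> List[float]:
    """Apply the same ratio to each disk group (each group has its own cache device)."""
    return [cache_tier_gb(used, ratio) for used in used_gb_per_group]


if __name__ == "__main__":
    # The example from the text: 6 TB (6000 GB) planned per disk group.
    print(cache_tier_gb(6000))
    # A hypothetical host with two disk groups and a write-heavier ratio.
    print(plan_cache_per_disk_group([6000, 4000], ratio=0.15))
```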

The calculations and predictions:

The cache SSDs should be high-write-endurance models: choose 2+ TBW/day, or 3650+ TBW over 5 years. The total cache capacity percentage should be based on use-case requirements; for write-intensive workloads, configure a higher amount. Increase the cache size if you expect heavy use of snapshots.
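The endurance arithmetic is simply TBW per day × 365 × years. A minimal Python sketch (hypothetical helper names) that reproduces the 2 TBW/day to 3650 TBW over 5 years figure and applies the 2+ TBW/day threshold from the text:

```python
def lifetime_tbw(tbw_per_day: float, years: int = 5) -> float:
    """Total terabytes written over the period (365-day years)."""
    return tbw_per_day * 365 * years


def suits_cache_tier(tbw_per_day: float, minimum_per_day: float = 2.0) -> bool:
    """The text recommends 2+ TBW/day for cache SSDs."""
    return tbw_per_day >= minimum_per_day


if __name__ == "__main__":
    # The 3.0 and 0.2 ratings echo the cache/capacity tiers described above.
    for name, rating in [("cache candidate", 3.0), ("capacity candidate", 0.2)]:
        print(name, lifetime_tbw(rating), suits_cache_tier(rating))
```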

VSAN Upgrade paths:

The new delta-disk type in VSAN 6 requires following a specific upgrade path. vCenter and ESXi must obviously be upgraded first, and then the disk format on VSAN is converted. VSAN 6.0 has a new on-disk format for disk groups and exposes the new delta-disk type.
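The required ordering can be sketched as a tiny state check. The step labels are hypothetical; in practice the disk format conversion is the part done with RVC (the vsan.v2_ondisk_upgrade command listed in the RVC section), and this Python sketch only tracks the order without calling any VMware API.

```python
from typing import List, Optional

# Order described in the text; the step names are hypothetical labels.
UPGRADE_ORDER = ["vcenter", "esxi_hosts", "disk_format_conversion"]


def next_upgrade_step(completed: List[str]) -> Optional[str]:
    """Return the next step, or None when done; reject out-of-order progress."""
    if len(completed) > len(UPGRADE_ORDER):
        raise ValueError("too many steps recorded")
    for expected, actual in zip(UPGRADE_ORDER, completed):
        if expected != actual:
            raise ValueError(f"expected {expected!r} before {actual!r}")
    if len(completed) == len(UPGRADE_ORDER):
        return None
    return UPGRADE_ORDER[len(completed)]


if __name__ == "__main__":
    print(next_upgrade_step(["vcenter"]))
```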

Manageability Improvements in VSAN 6.0

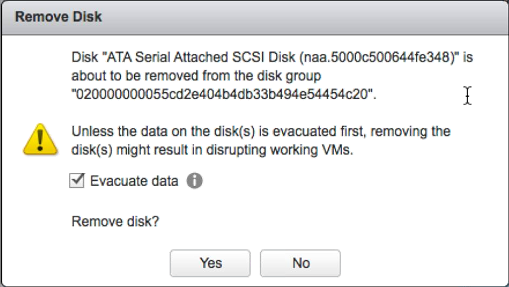

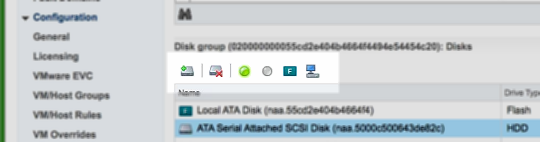

In VSAN 5.5 it wasn't possible to replace a disk without putting the whole host into maintenance mode. In VSAN 6.0 you can instead evacuate data from a single disk in VSAN. This is supported in the UI, esxcli and RVC.

You can also do this through esxcli:

esxcli vsan storage remove --evacuation-mode=evacuateAllData --disk=naa.5000c50065f88a50

In VSAN 5.5 you had to put the host into maintenance mode.

In VSAN 6.0 you can first identify the disk in the UI and also on the physical system. In fact, it's possible to turn the magnetic disk's LED on or off, so you can locate the disk you want to replace.

This is good stuff !!! -:)

You can also tag unrecognized flash disks as flash within the UI.

Also, disks that are not recognized as local to ESXi can now be tagged/untagged as local disks. In vSphere 5.x this had to be done through the CLI. (Note: it's also one of the exam topics, so if you're studying for a VMware certification exam, you'd better know how to do it manually.)

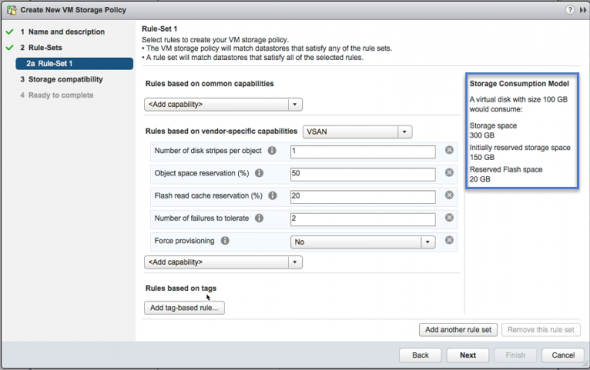

The UI also brings usability improvements for what-if scenarios when changing a VM Storage Policy. You'll be able to see the changes in space consumption when:

- A policy is edited

- A policy is created

- A policy is reapplied
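Roughly, the what-if view answers "how much raw VSAN space will this policy change cost?". A minimal Python sketch, assuming a mirrored layout where FTT=n keeps n+1 full replicas (as described in the Fault Domains section) and ignoring witness components and metadata overhead; the function names are hypothetical.

```python
def raw_footprint_gb(vmdk_gb: float, ftt: int) -> float:
    """Mirrored layout: FTT=n stores n+1 full replicas (witnesses ignored)."""
    if vmdk_gb < 0 or ftt < 0:
        raise ValueError("size and FTT must be non-negative")
    return vmdk_gb * (ftt + 1)


def what_if_delta_gb(vmdk_gb: float, old_ftt: int, new_ftt: int) -> float:
    """Change in raw datastore consumption when a policy's FTT is edited or reapplied."""
    return raw_footprint_gb(vmdk_gb, new_ftt) - raw_footprint_gb(vmdk_gb, old_ftt)


if __name__ == "__main__":
    # Hypothetical 100 GB VMDK moved from FTT=1 to FTT=2.
    print(what_if_delta_gb(100, 1, 2))
```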

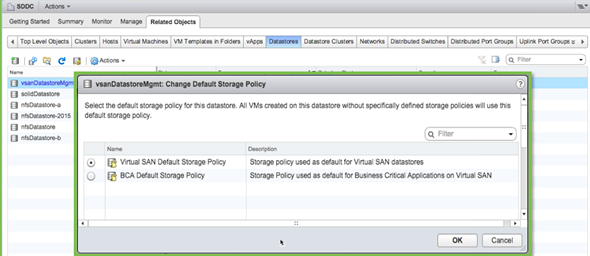

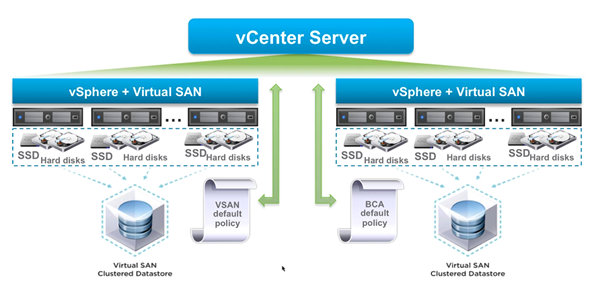

User defined default policy

Admins can designate a preferred VM storage policy as the default policy for the VSAN datastore.

And vCenter can manage different policies for each VSAN cluster…

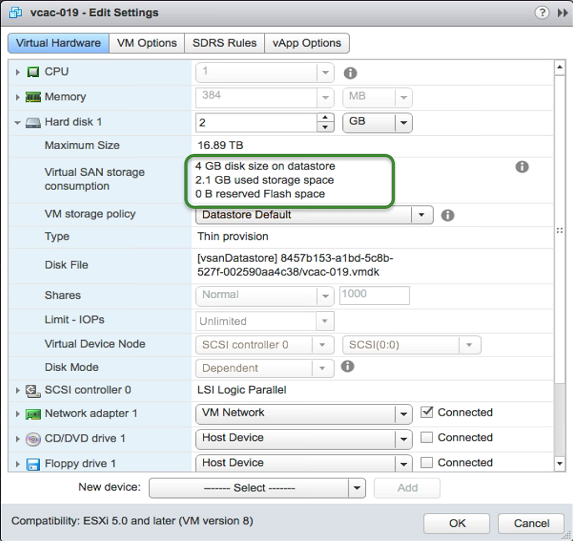

When configuring a VM's properties, you can see the VM's disk utilization on the VSAN datastore as well as the used storage space. It's possible to show the real usage on:

- Spinning disks

- Flash devices

This is displayed in the vSphere Web Client and in RVC.

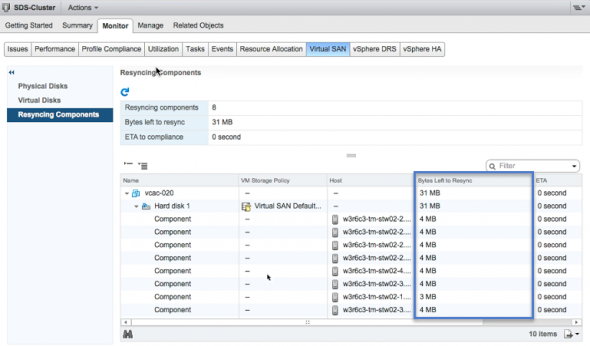

New resynchronization status dashboard in the UI

Proactive Rebalance

This is also new, and it is beneficial in two particular cases:

- When adding a new node to an existing VSAN cluster, or bringing a node out of the decommissioned state.

- To utilize new nodes even if the existing disks are less than 80% full.

In those cases, rebalancing is more effective if it starts earlier, before the disks are almost full. It can be done through RVC with the command vsan.proactive_rebalance --start
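The choice between waiting for the usual (reactive) rebalance and starting a proactive one can be sketched like this. It's hypothetical Python: the 80% figure comes from the text, while the 0.30 fullness-spread threshold is an assumed value for illustration, not a documented VSAN default.

```python
from typing import List

# From the text: without proactive rebalance, rebalancing kicks in near 80% full.
REACTIVE_THRESHOLD = 0.80


def rebalance_mode(disk_fullness: List[float], spread_threshold: float = 0.30) -> str:
    """Return 'reactive', 'proactive' or 'none' for disk fullness ratios (0..1).

    spread_threshold is a hypothetical value, not a documented VSAN default.
    """
    if not disk_fullness:
        return "none"
    fullest, emptiest = max(disk_fullness), min(disk_fullness)
    if fullest >= REACTIVE_THRESHOLD:
        return "reactive"
    if fullest - emptiest > spread_threshold:
        # e.g. a freshly added node with empty disks next to half-full ones
        return "proactive"
    return "none"


if __name__ == "__main__":
    print(rebalance_mode([0.55, 0.60, 0.0, 0.0]))  # hypothetical: new empty node
```

The sketch only illustrates when a proactive rebalance might be worth it; the actual trigger is the RVC command above.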

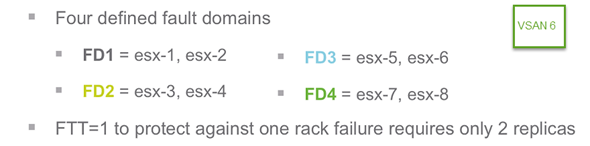

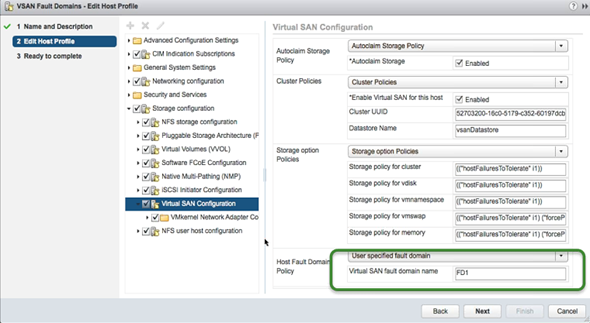

Fault Domains

It's possible to define fault domains by grouping multiple hosts within a cluster. This protects against:

- Rack failures

- Storage controller failures

- Network failures

- Power failures

You'll need at least 3 fault domains to use this feature.

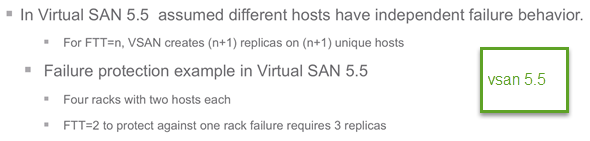

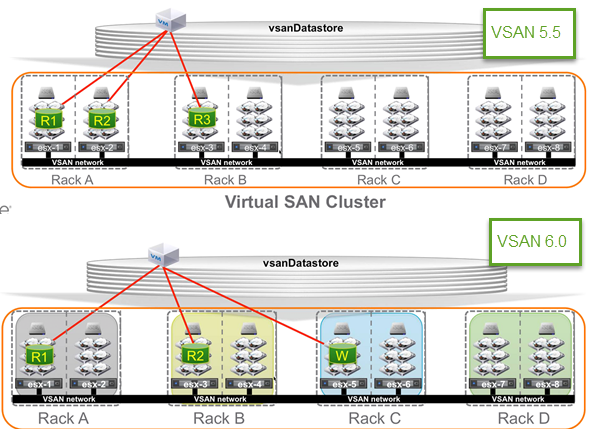

Compare VSAN 5.5 with VSAN 6.0, where fault domains can be leveraged. You have a guarantee that replicas aren't placed in the same rack. FTT=1 protects against one rack failure and requires only two replicas.

VSAN 6:

Compare this with VSAN 5.5, where you need FTT=2 (3 replicas).

The whole picture, comparing VSAN 6.0 with VSAN 5.5 with regard to fault domains. (Note the location of W in rack C in VSAN 6: “Witness”.)

Fault domains are configurable through:

- The Web Based UI (vSphere web client)

- Host Profiles (persistent across reboots)

- CLI

- RVC (Ruby vSphere Console)

FTT is applied based on fault domains, not on hosts (as in VSAN 5.5). For example, when FTT=n, 2n+1 fault domains are required.
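The 2n+1 rule can be illustrated with a naive placement sketch: n+1 replicas plus n witnesses, each in a different fault domain. This is hypothetical Python that ignores capacity, host choice and everything else the real VSAN placement logic considers; it just shows why FTT=1 needs three fault domains, with the witness landing in the third rack as in the VSAN 6 picture.

```python
from typing import Dict, List


def fault_domains_required(ftt: int) -> int:
    """FTT=n needs 2n+1 fault domains."""
    if ftt < 0:
        raise ValueError("FTT must be non-negative")
    return 2 * ftt + 1


def place_components(ftt: int, fault_domains: List[str]) -> Dict[str, str]:
    """Naively put n+1 replicas and n witnesses into distinct fault domains."""
    needed = fault_domains_required(ftt)
    if len(fault_domains) < needed:
        raise ValueError(f"FTT={ftt} needs {needed} fault domains, got {len(fault_domains)}")
    placement = {}
    for i in range(ftt + 1):
        placement[f"replica-{i + 1}"] = fault_domains[i]
    for j in range(ftt):
        placement[f"witness-{j + 1}"] = fault_domains[ftt + 1 + j]
    return placement


if __name__ == "__main__":
    print(place_components(1, ["rack-A", "rack-B", "rack-C"]))
```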

NexentaConnect on top of VSAN

NexentaConnect provides file services (NFS, SMB) on top of VSAN. NexentaConnect stores only files, while VSAN stores VMs. NexentaConnect offers an abstracted pool of file services, live monitoring capabilities and DR planning capabilities.

PowerCLI 6.0 – New!

Some of the existing cmdlets were modified to work with VSAN, and new cmdlets were introduced for VSAN:

- Export-SpbmStoragePolicy

- Get-SpbmCapability

- Get-SpbmCompatibleStorage

- Get-SpbmStoragePolicy

- Get-VsanDisk

- Get-VsanDiskGroup

- Import-SpbmStoragePolicy

- New-SpbmRule

- New-SpbmRuleSet

- New-SpbmStoragePolicy

- New-VsanDisk

- New-VsanDiskGroup

- Remove-SpbmStoragePolicy

- Remove-VsanDisk

- Remove-VsanDiskGroup

- Set-SpbmEntityConfiguration

- Set-SpbmStoragePolicy

Ruby vSphere Console (RVC)

There are new RVC commands for managing and configuring VSAN 6. RVC and VSAN Observer were used to monitor VSAN 5.5; see Getting Started with VSAN Observer (RVC).

New commands:

- vsan.v2_ondisk_upgrade

- vsan.proactive_rebalance

- vsan.purge_inaccessible_vswp_objects

- vsan.enable_capacity_flash

- vsan.host_claim_disks_differently

- vsan.host_wipe_non_vsan_disk

- vsan.host_evacuate_data

- vsan.host_exit_evacuation

- vsan.scrubber_info

- basic.screenlog

VSAN 6.0 Health

All health information on the same screen… -:)

VMware vSphere 6 Features – vSphere Virtual Volumes (VVOLs)

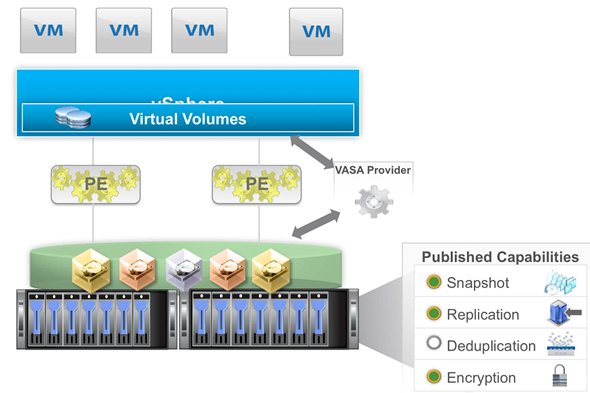

Virtual disks are natively represented on arrays, which enables VM-granular storage operations using array-based data services. VVOLs is a function that does not need to be activated in vSphere 6; it's just there. It extends vSphere storage policy-based management to the storage ecosystem. It supports existing storage I/O protocols (FC, iSCSI, NFS) and is supported by major storage vendors.

In order to leverage VVOLs, the array has to be VVOLs-capable and has to expose its capabilities via the VASA (vSphere APIs for Storage Awareness) APIs.

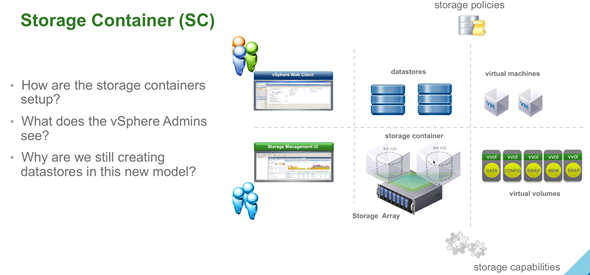

- The storage is logically partitioned into containers called storage containers.

- VM disks, called virtual volumes, are stored natively in those storage containers.

- I/O from ESXi to the array is addressed through an access point called a Protocol Endpoint (PE).

- Data services are offloaded to the array.

The Schema:

Virtual volumes are virtual machine objects which are stored on sthe SAN's storage containers (logically partitioned). There is no VMFS.

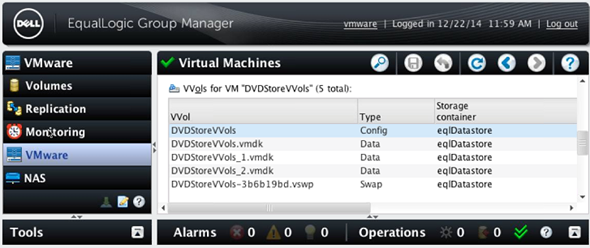

VVols are stored in those containers and mapped to VM's files (swap, VMDK, vmem…) so in fact there is 5 different types of recognized Virtual Volumes:

- Data (VMDK)

- Config (VM home, config files, logs…)

- Memory (snapshots)

- Swap (VM memory swap)

- Other (generic, solution-specific: for example CBRC in Horizon View).
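To make the five types concrete, here is a Python sketch that maps a VM file to the VVol type that would hold it. The enum mirrors the list above; the file-suffix mapping is an assumption for illustration only, not how ESXi actually classifies objects.

```python
from enum import Enum


class VVolType(Enum):
    DATA = "Data"      # VMDK
    CONFIG = "Config"  # VM home, config files, logs
    MEMORY = "Memory"  # snapshot memory
    SWAP = "Swap"      # VM memory swap
    OTHER = "Other"    # generic / solution-specific


# Hypothetical suffix mapping, for illustration only.
_SUFFIX_MAP = {
    ".vmdk": VVolType.DATA,
    ".vmx": VVolType.CONFIG,
    ".log": VVolType.CONFIG,
    ".nvram": VVolType.CONFIG,
    ".vmem": VVolType.MEMORY,
    ".vswp": VVolType.SWAP,
}


def classify(filename: str) -> VVolType:
    """Return the VVol type that would hold this file; unknown files map to OTHER."""
    lower = filename.lower()
    for suffix, vvol_type in _SUFFIX_MAP.items():
        if lower.endswith(suffix):
            return vvol_type
    return VVolType.OTHER


if __name__ == "__main__":
    for name in ["web01.vmdk", "web01.vmx", "web01-Snapshot1.vmem", "web01.vswp"]:
        print(name, classify(name).value)
```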

Vendor Provider (VP)

The VP is responsible for creating virtual volumes and provides storage awareness services. It's a software component developed by storage array vendors that implements the VASA APIs; ESXi and vCenter connect to this VASA provider.

Storage Container (SC)

For the VMware admin there is no change, as they continue to see datastores, VMs, VMDKs…

Example of the storage view in the Dell EqualLogic Group Manager.

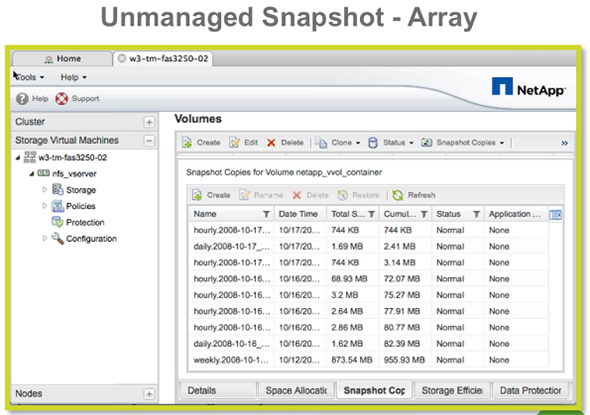

Snapshots

VVOLs introduces unmanaged snapshots. But first we need to know what managed snapshots are, right? Managed snapshots are the classic snapshots in vSphere (up to 32 snapshots per VM).

Unmanaged snapshots are snapshots managed by the array… but initiated through the vSphere Web Client.

While VVOLs is a great technology, I'm more and more interested in VMware VSAN, as I feel the future datacenter is heading toward linear scale and performance… VVOLs will, however, still play a significant role in simplifying storage management in vSphere.

There will always be use cases for VSAN and, on the other hand, use cases for VVOLs and traditional shared storage arrays.


Source: VMware


