VMware vSphere 5.1 brings quite a few enhancements to the vSphere Distributed Switch (vDS), whose new version is 5.1. Among them, network health check can verify your physical network setup, so you can see whether it will support the deployment of your VMs.

Scalability:

vSphere 5.1 supports more dvPortgroups and more dvports per vDS, and the number of hosts per vDS has increased. The number of vDS per vCenter grew as well.

– Static dvPortgroups go up from 5 K to 10 K

– Number of dvports goes up from 20 K to 60 K

– Number of Hosts per vDS goes up from 350 to 500

– Number of vDS supported on a vCenter goes up from 32 to 128

Network Health Check

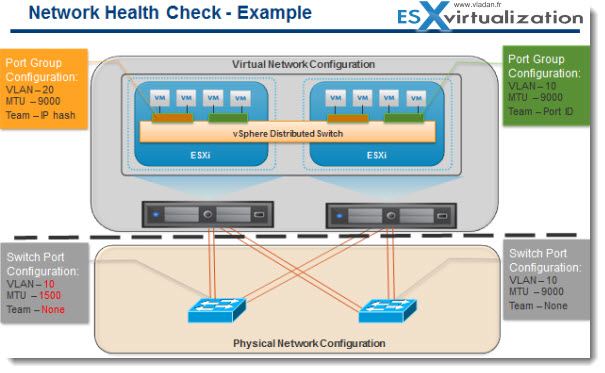

Network health check detects misconfigurations across virtual and physical networking. It monitors the health of the physical network setup, including VLAN, MTU and teaming. Until now, vSphere administrators had no tools to verify whether the physical setup could support virtual machine deployment correctly. Even simple physical setups can be frustrating to debug end to end. Network health check eases these issues by giving you a window into the operation of your physical and virtual network.

The network health check in vSphere 5.1 can detect mismatched VLAN trunks between the virtual switch and the physical switch, wrong MTU (Maximum Transmission Unit) settings between the vNIC, virtual switch, physical adapter and physical switch ports, and wrong teaming configurations.

In this example you can see that there is an error in this configuration between the physical switch port and virtual switch.

The green port group is configured with VLAN 10, an MTU of 9000, and port ID hash as the teaming option. If you look at the configuration of the physical switch ports, the VLAN, MTU and teaming settings match the port group configuration.

The orange port group is configured with VLAN 20, an MTU of 9000, and IP hash as the teaming option. For this port group configuration, the physical switch ports should have EtherChannel configured. If you look at the switch port configuration, however, the ports are not configured correctly for VLAN, MTU or teaming.

Network health check detects this, and vCenter Server shows warnings about these misconfigurations.
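The comparison in this example can be sketched in a few lines of Python. This is only an illustrative model of the idea, not how vSphere implements the check: the class names, the `check` function and the values given to the orange physical port are assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    name: str
    vlan: int
    mtu: int
    teaming: str  # "port_id" or "ip_hash"


@dataclass(frozen=True)
class SwitchPort:
    allowed_vlans: frozenset
    mtu: int
    etherchannel: bool


def check(pg, port):
    """Return a list of mismatches between a port group and a physical port."""
    problems = []
    if pg.vlan not in port.allowed_vlans:
        problems.append(f"{pg.name}: VLAN {pg.vlan} is not trunked on the physical port")
    if pg.mtu > port.mtu:
        problems.append(f"{pg.name}: MTU {pg.mtu} exceeds physical port MTU {port.mtu}")
    # IP hash teaming needs a channel on the physical side; port ID hash must not have one.
    if (pg.teaming == "ip_hash") != port.etherchannel:
        problems.append(f"{pg.name}: teaming '{pg.teaming}' does not match the physical port")
    return problems


green = PortGroup("green", vlan=10, mtu=9000, teaming="port_id")
green_port = SwitchPort(frozenset({10}), mtu=9000, etherchannel=False)

orange = PortGroup("orange", vlan=20, mtu=9000, teaming="ip_hash")
# Hypothetical physical settings that match none of the orange port group's needs.
orange_port = SwitchPort(frozenset({10}), mtu=1500, etherchannel=False)

for pg, port in ((green, green_port), (orange, orange_port)):
    for problem in check(pg, port):
        print(problem)
```

The green port group produces no output, while the orange one reports a VLAN, an MTU and a teaming mismatch.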

vSphere 5.1 Networking – Configuration Backup and Restore.

Configuration Backup and Restore – Provides a way to create backups of network settings at the vDS level or at the dvPortgroup level. This feature lets you recreate the network configuration, giving you a means of restoring full functionality after network settings failures or vCenter Server database corruption.

Backup and restore are managed through vCenter. Previously, after database corruption or database loss, it was not possible to recover network configurations. The virtual networking configuration had to be rebuilt from scratch.

With this feature, it is easy to replicate the virtual network configuration in another environment, or to go back to the last working configuration after accidental changes to virtual networking settings. You can also create a configuration backup before making significant changes, so that there is an easy way back.

Supported Operations:

– Back up VDS/dvPortgroup configuration to disk (Export)

– Restore VDS/dvPortgroup from a backup (Restore)

– Create a new entity from a backup (Import)

– Revert to the previous dvPortgroup configuration after changes are made

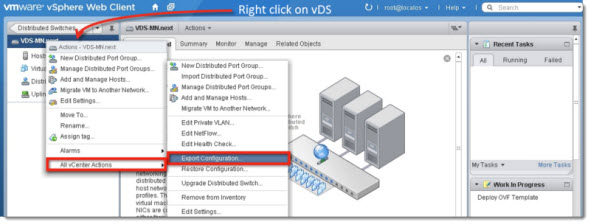

You can see the steps required to back up or restore the vDS configuration through the new web client by right-clicking the vDS.

The screenshot below shows the new VMware vSphere 5.1 Web Client connected to vCenter.

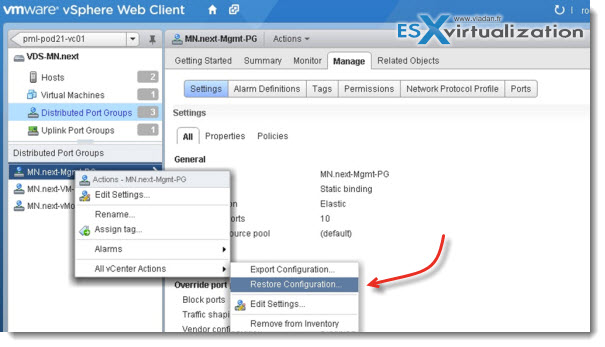

Restoring a port group configuration is also possible. The image below shows the restore option for a port group.

After changing a port group, select that port group and right-click > All vCenter Actions > Restore configuration.

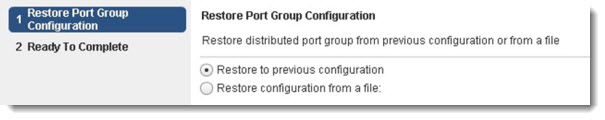

When you follow the wizard that starts from this menu, you'll see that you have two options to choose from:

1. Restore to previous configuration (snapshot)

2. Restore configuration from file

The difference is clear. If you use this feature every week and, for whatever reason, want to go back to the configuration from a month ago, you would probably choose the second option.
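The difference between the two options can be modeled with a small sketch. It assumes, for illustration only, that the "previous configuration" is a single snapshot taken before the last change, while an exported file can be kept as long as you like. The class and method names are hypothetical and do not correspond to any vSphere API.

```python
import copy
import json


class PortGroupConfig:
    """Toy model of a port group with a one-level snapshot and file export."""

    def __init__(self, settings):
        self.settings = dict(settings)
        self._previous = None  # only the state before the last change is kept

    def update(self, **changes):
        self._previous = copy.deepcopy(self.settings)
        self.settings.update(changes)

    def restore_previous(self):
        if self._previous is None:
            raise RuntimeError("no previous configuration recorded")
        self.settings, self._previous = self._previous, None

    def export(self):
        # Stands in for the exported backup file.
        return json.dumps(self.settings, sort_keys=True)

    def restore_from_file(self, exported):
        self._previous = copy.deepcopy(self.settings)
        self.settings = json.loads(exported)


pg = PortGroupConfig({"vlan": 10, "mtu": 9000})
month_old_backup = pg.export()

pg.update(vlan=20)
pg.update(vlan=30)

pg.restore_previous()                    # back to the state before the last change
print(pg.settings["vlan"])               # 20

pg.restore_from_file(month_old_backup)   # back to the exported state
print(pg.settings["vlan"])               # 10
```

In this model the snapshot only reaches back one change, which is why an older state has to come from a file.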

Automatic Rollback and Recovery for management network on a vDS.

Many clients used to keep the management network on a separate standard vSwitch rather than on a vDS, simply because of the possible misconfiguration “threat”. They were running those “hybrid” configurations.

Automatic Rollback and recovery monitors the state of management network connectivity from vCenter Server to vDS and rolls back to the previous state automatically if connectivity is lost during a configuration change. By default this feature is set to enabled.
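The rollback logic described above can be sketched roughly as follows. This is a simplified model under stated assumptions, not the vCenter implementation: `apply_with_rollback`, the dictionary used as a configuration and the `is_reachable` callback are all hypothetical.

```python
def apply_with_rollback(config, change, is_reachable, rollback_enabled=True):
    """Apply a change, then undo it if management connectivity is lost."""
    previous = dict(config)          # remember the last known good state
    config.update(change)
    if is_reachable(config):
        return "applied"
    if rollback_enabled:
        config.clear()
        config.update(previous)      # restore the previous state
        return "rolled back"
    return "disconnected"            # manual recovery is needed


# Hypothetical example: the host is only reachable on management VLAN 10.
reachable = lambda cfg: cfg["mgmt_vlan"] == 10

config = {"mgmt_vlan": 10}
print(apply_with_rollback(config, {"mgmt_vlan": 99}, reachable))  # rolled back
print(config)                                                     # {'mgmt_vlan': 10}
```

With `rollback_enabled=False` the bad change stays in place and the host remains unreachable, which is the situation the manual recovery options address.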

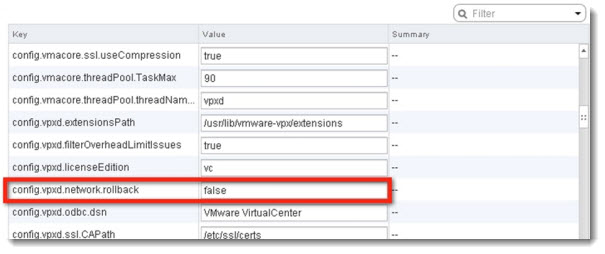

You can override this behavior by changing an advanced setting in vCenter Server (not recommended):

config.vpxd.network.rollback = "false"

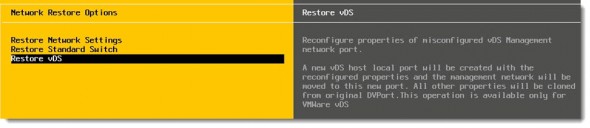

You then have two options to restore the connectivity of vCenter to your hosts if you set the config.vpxd.network.rollback value to false (not recommended):

1. Through the DCUI (Direct console user interface). You would have to connect individually to every host, one by one…

2. Through vCenter. Here is the workflow:

– The host network configuration is out of sync with vCenter Server

– Migrate the vmknic from the host local port to the dvport

vSphere 5.1 – LACP Support

LACP support on VDS – The VDS can now participate in a Link Aggregation Group (LAG) created by the physical access switches using the Link Aggregation Control Protocol, providing dynamic failover and enhanced link utilization.

Definition:

Link Aggregation Control Protocol (LACP) is a standards-based link aggregation method that controls the bundling of several physical network links into one logical channel for increased bandwidth and redundancy. LACP allows a network device to negotiate automatic bundling of links by sending LACP packets to the peer.

The advantages of LACP

– Plug and play

– Can detect link failures and cabling mistakes and reconfigure links automatically
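LACP itself only negotiates which links belong to the bundle. How traffic is spread over them depends on the hashing policy. As a rough illustration of the failover and link utilization mentioned above, the sketch below hashes flows onto the active links and re-hashes when a link drops out. The function name, the flow tuple, the uplink names and the use of CRC32 are assumptions made for the example, not how the vDS does it.

```python
import zlib


def pick_link(flow, active_links):
    """Map a flow to one of the currently active links of the bundle."""
    if not active_links:
        raise RuntimeError("no active links in the bundle")
    key = "|".join(str(part) for part in flow).encode()
    return active_links[zlib.crc32(key) % len(active_links)]


flows = [("10.0.0.1", "10.0.1.5"), ("10.0.0.2", "10.0.1.5"), ("10.0.0.3", "10.0.1.9")]

links = ["vmnic0", "vmnic1"]
for flow in flows:
    print(flow, "->", pick_link(flow, links))

# After vmnic0 fails, every flow lands on the remaining link.
links = ["vmnic1"]
for flow in flows:
    print(flow, "->", pick_link(flow, links))
```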

vSphere 5.1 – SR-IOV

– One PCIe card can be presented as multiple separate logical I/O devices. This can be used to offload I/O processing to those adapters and reduce network latency. (When SR-IOV is selected, vMotion, FT and HA are not available.)

Single Root I/O Virtualization (SR-IOV) is a standard that allows one PCI Express (PCIe) adapter to be presented as multiple separate logical devices to the VMs. The hypervisor manages the physical function (PF), while the virtual functions (VFs) are exposed to the VMs. SR-IOV capable network devices offer the benefits of direct I/O, which include reduced latency and reduced host CPU utilization. The VMDirectPath (passthrough) functionality of the VMware vSphere ESXi platform provides similar benefits, but requires a physical adapter per VM. With SR-IOV, the passthrough functionality can be provided from a single adapter to multiple VMs through VFs.

vSphere 5.1 – BPDU Filter (Bridge Protocol Data Unit)

What does the BPDU filter do? It filters the BPDU packets that are generated by virtual machines and thus prevents a Denial of Service (DoS) attack situation.

Both VMware vSphere Standard and Distributed switches are supported. The BPDU filter can be enabled by changing the advanced “Net” settings on the ESXi host.

– BPDUs are packets exchanged between switches as part of Spanning Tree Protocol (STP)

– Virtual Switches don’t support STP and thus don’t generate BPDU packets

In which situation can a DoS attack occur?

– When a compromised virtual machine sends BPDU frames.

– Virtual switch doesn’t block these frames.

– Physical switch port gets blocked.
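Conceptually, the filter drops guest frames that are addressed to the spanning tree bridge group before they reach the physical switch. A minimal sketch of that idea, assuming raw Ethernet frames as bytes and the standard IEEE STP destination MAC 01:80:C2:00:00:00 (the function names are illustrative, and vendor-specific STP variants use other addresses that are not covered here):

```python
# Destination MAC used by IEEE spanning tree BPDUs.
STP_BPDU_DST = bytes.fromhex("0180c2000000")


def is_bpdu(frame: bytes) -> bool:
    """True if the frame's destination MAC is the STP bridge group address."""
    return len(frame) >= 6 and frame[:6] == STP_BPDU_DST


def filter_guest_frames(frames):
    """Drop BPDUs sent by a VM, pass everything else through."""
    return [frame for frame in frames if not is_bpdu(frame)]


src = bytes.fromhex("005056aabbcc")
bpdu = STP_BPDU_DST + src + b"\x00\x26" + bytes(38)
broadcast = bytes.fromhex("ffffffffffff") + src + b"\x08\x06" + bytes(28)

forwarded = filter_guest_frames([bpdu, broadcast])
print(len(forwarded))  # 1
```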

vSphere 5.1 – Port Mirroring Enhancements

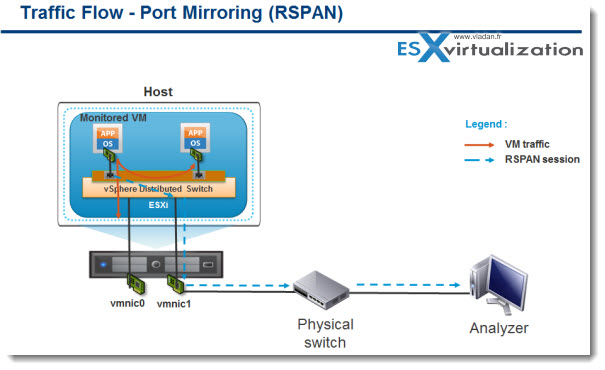

Port Mirroring enhancements – Introduces Cisco's ERSPAN and RSPAN to vDS. ERSPAN and RSPAN allow vDS to mirror traffic across the datacenter to perform remote traffic collection for central monitoring.

IPFIX, or NetFlow version 10, is an advanced and flexible protocol that lets you define the NetFlow records that are collected at the VDS and sent to a collector tool.

Customers can use templates to define the records. The VDS communicates the template descriptions to the collector engine. It can report IPv6, MPLS and VXLAN flows.
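To make the template idea more concrete, here is a minimal sketch of an IPFIX message that carries one template set, following the layout in RFC 7011 (a 16-byte header with version 10, and set ID 2 for templates). The selected fields, template ID 256 and the helper names are illustrative assumptions. This is not a capture of what the VDS actually exports.

```python
import struct
import time

IPFIX_VERSION = 10     # NetFlow v10
TEMPLATE_SET_ID = 2    # set ID reserved for template sets

# (information element ID, field length in bytes), IDs from the IANA IPFIX registry
FIELDS = [
    (8, 4),    # sourceIPv4Address
    (12, 4),   # destinationIPv4Address
    (4, 1),    # protocolIdentifier
    (1, 8),    # octetDeltaCount
]


def build_template_set(template_id, fields):
    """Encode one template record wrapped in a template set."""
    record = struct.pack("!HH", template_id, len(fields))
    for element_id, length in fields:
        record += struct.pack("!HH", element_id, length)
    return struct.pack("!HH", TEMPLATE_SET_ID, 4 + len(record)) + record


def build_message(sets, sequence=0, domain_id=1, export_time=None):
    """Prepend the 16-byte IPFIX message header to the encoded sets."""
    body = b"".join(sets)
    if export_time is None:
        export_time = int(time.time())
    header = struct.pack("!HHIII", IPFIX_VERSION, 16 + len(body),
                         export_time, sequence, domain_id)
    return header + body


message = build_message([build_template_set(256, FIELDS)])
version, length = struct.unpack("!HH", message[:4])
print(version, length)  # 10 40
```

The collector uses such a template to decode the data records that reference the same template ID.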

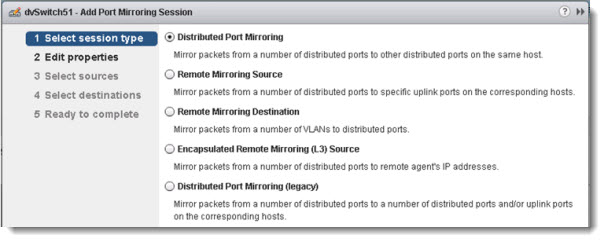

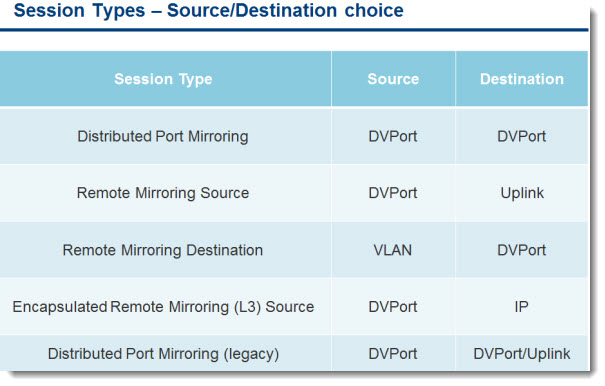

There are several options when you create a port mirroring session. Choose the session type based on where the monitoring device is placed in your infrastructure.

What's important is the choice of source (the traffic that needs monitoring) and destination (where the traffic will be mirrored to).

See the image below, which shows the source and destination options for the different port mirroring session types.

vSphere 5.1 – Other enhancements

Netdump – Useful for debugging. Diskless ESXi hosts (deployed via Auto Deploy or stateless) can now core dump over the network. To use netdump, you need to set up a server/collector and a VMkernel NIC (vmknic) on the ESXi host that can reach the server.

The feature is supported not only on vDS but also on VSS (standard switch).

– UDP protocol with server listening on port 6500

– Picks the first usable uplink from the team

– Supports VLAN tagging

In vSphere 5.0, enabling netdump on an ESXi host with the management network configured on a VDS was not allowed. In vSphere 5.1, this limitation has been removed. Users now can configure netdump on ESXi hosts using management network on VDS.

Better MAC address management – Locally administered MAC addresses are now supported, so users can specify a MAC prefix and/or MAC ranges, with control over all 48 bits of the MAC address.

This removes the limitation of using only VMware's Organizationally Unique Identifier (OUI) based allocation. The change is not permanent, though: you can reconfigure it to re-enable the VMware OUI based allocation.

The VMware vSphere 5.0 MAC address management had the following limitations:

– Each vCenter Server can allocate up to 64 K MAC addresses. This limit is too small for cloud environments.

– Duplicate MAC addresses are possible with multiple vCenter Server instances that are administered by different IT groups

These limitations were lifted with the introduction of the new MAC address management in VMware vSphere 5.1.
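A locally administered MAC address is one where the second least significant bit of the first octet is set, while VMware OUI based addresses start with 00:50:56. The sketch below shows how the two can be told apart and how addresses could be generated from a user-defined prefix. The helper names and the prefix 02:12:34 are made up for the example and are not part of any vSphere API.

```python
VMWARE_OUI = bytes.fromhex("005056")


def parse_mac(mac):
    parts = mac.split(":")
    if len(parts) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac}")
    return bytes(int(part, 16) for part in parts)


def is_locally_administered(mac):
    """The 0x02 bit of the first octet marks a locally administered address."""
    return bool(parse_mac(mac)[0] & 0x02)


def uses_vmware_oui(mac):
    return parse_mac(mac)[:3] == VMWARE_OUI


def mac_from_prefix(prefix, index):
    """Build the index-th address under a prefix such as '02:12:34'."""
    head = bytes(int(part, 16) for part in prefix.split(":"))
    tail_len = 6 - len(head)
    if tail_len < 0 or not 0 <= index < 256 ** tail_len:
        raise ValueError("index does not fit under this prefix")
    raw = head + index.to_bytes(tail_len, "big")
    return ":".join(f"{byte:02x}" for byte in raw)


mac = mac_from_prefix("02:12:34", 1)
print(mac)                                           # 02:12:34:00:00:01
print(is_locally_administered(mac))                  # True
print(is_locally_administered("00:50:56:aa:bb:cc"))  # False
```

A three-octet prefix like the one above leaves 24 bits, or roughly 16.7 million addresses, for allocation.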

Auto Port Expand – Enabled by default in vSphere 5.1 when static port binding is selected. You no longer have to manually expand or shrink the number of virtual ports in a distributed port group.

SNMP – v1, v2 and v3 support. In vSphere 5 only v1 and v2 were supported. Now v3 is supported as well.

Examples of VMware MIBs are “VMWARE-SYSTEM-MIB” and “VMWARE-ENV-MIB”. In vSphere 5.1, SNMP support is enhanced through the following key capabilities:

– Better security through the support for SNMPv3.

– Support for IEEE/IETF networking MIB modules that provide additional visibility into the virtual networking infrastructure.
